KVM4NFV Configuration Guide

Euphrates 1.0

Configuration Abstract

This document provides guidance for the configurations available in the Euphrates release of OPNFV.

The release includes four installer tools leveraging different technologies: Apex, Compass4nfv, Fuel and JOID, which deploy components of the platform.

This document also covers the selection of tools and components, including guidelines for how to deploy and configure the platform to an operational state.

Configuration Options

OPNFV provides a variety of virtual infrastructure deployments, called scenarios, designed to host virtualised network functions (VNFs). KVM4NFV scenarios provide specific capabilities and/or components aimed at solving specific problems in the deployment of VNFs. A KVM4NFV scenario includes components such as OpenStack and KVM, each of which may include different source components or configurations.

Note

- Each KVM4NFV scenario provides unique features and capabilities, so it is important to understand your target platform capabilities before installing and configuring. This configuration guide outlines how to configure components in order to enable the features required.

- More details on the installation and description of KVM4NFV scenarios can be found in the scenario guide of the KVM4NFV docs.

Low Latency Feature Configuration Description

Introduction

In the KVM4NFV project, we focus on enhancing the KVM hypervisor for NFV, initially in the following areas:

- Minimal interrupt latency variation for data plane VNFs:

  - Minimal timing variation for timing correctness of real-time VNFs

  - Minimal packet latency variation for data-plane VNFs

- Inter-VM communication

- Fast live migration

Configuration of Cyclictest

Cyclictest measures the latency of the response to a stimulus. Achieving low latency with the KVM4NFV project requires setting up a special test environment. This environment includes the BIOS settings, kernel configuration, kernel parameters and the run-time environment.

- For more information regarding the test environment, please visit https://wiki.opnfv.org/display/kvm/KVM4NFV+Test++Environment and https://wiki.opnfv.org/display/kvm/Nfv-kvm-tuning
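To make the measurement concrete, the following minimal sketch extracts the per-thread minimum, average and maximum latency from cyclictest's summary lines. The `parse_cyclictest` helper and the `SAMPLE` line are hypothetical: the sample values are invented for illustration and are not KVM4NFV measurements, and this is not the parser Yardstick uses.

```python
import re

# Matches one per-thread summary line of cyclictest output.
# Latency values are reported in microseconds.
LINE_RE = re.compile(
    r"T:\s*(?P<thread>\d+).*?"
    r"Min:\s*(?P<min>\d+)\s+"
    r"Act:\s*(?P<act>\d+)\s+"
    r"Avg:\s*(?P<avg>\d+)\s+"
    r"Max:\s*(?P<max>\d+)"
)


def parse_cyclictest(output):
    """Return one dict of integer latency fields per thread summary line."""
    results = []
    for line in output.splitlines():
        match = LINE_RE.search(line)
        if match:
            results.append(
                {key: int(value) for key, value in match.groupdict().items()}
            )
    return results


# Invented sample line, for illustration only.
SAMPLE = "T: 0 ( 3431) P:99 I:1000 C:  10000 Min:      2 Act:    3 Avg:    3 Max:      9"

if __name__ == "__main__":
    for row in parse_cyclictest(SAMPLE):
        print(row)
```

The maximum latency is usually the figure of interest for real-time workloads, since it bounds the worst observed response time.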

Pre-configuration activities

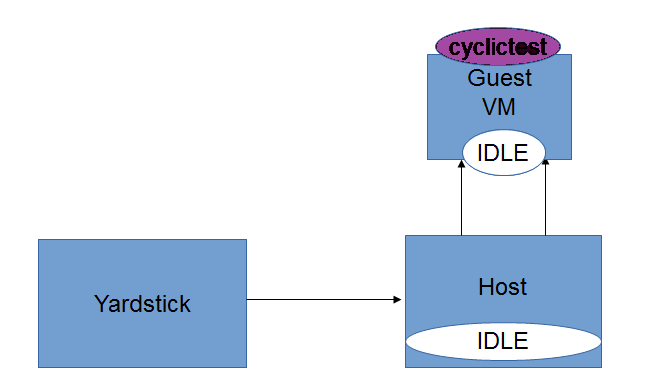

Intel POD10 is currently used as the OPNFV KVM4NFV test environment. The rpm packages from the latest build are downloaded onto the Intel POD10 jump server from the artifact repository. Yardstick, running in an Ubuntu Docker container on the Intel POD10 jump server, configures the host (Intel POD10 node1 or node2, depending on the job type) and the guest, and then triggers cyclictest on the guest using the sample YAML file below.

For the IDLE-IDLE test:

host_setup_seqs:

- "host-setup0.sh"

- "reboot"

- "host-setup1.sh"

- "host-run-qemu.sh"

guest_setup_seqs:

- "guest-setup0.sh"

- "reboot"

- "guest-setup1.sh"

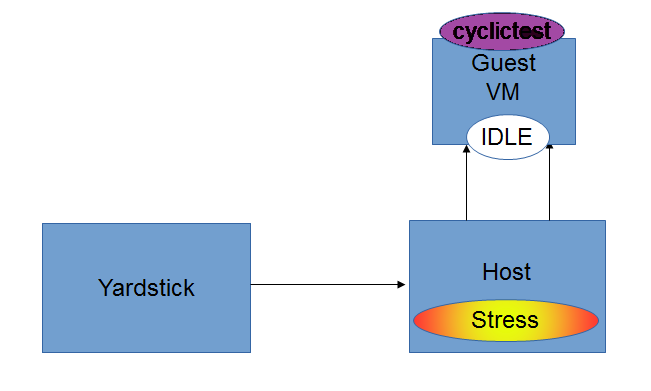

For the [CPU/Memory/IO]Stress-IDLE tests:

host_setup_seqs:

- "host-setup0.sh"

- "reboot"

- "host-setup1.sh"

- "stress_daily.sh" [cpustress/memory/io]

- "host-run-qemu.sh"

guest_setup_seqs:

- "guest-setup0.sh"

- "reboot"

- "guest-setup1.sh"
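The sequences above interleave script names with a "reboot" step. As a rough illustration of how such a sequence can be read, the sketch below splits it into groups of scripts that run between reboots. The `split_into_phases` helper is hypothetical and is not taken from Yardstick; only the script names come from the sample file.

```python
def split_into_phases(setup_seq):
    """Split a setup sequence into lists of scripts separated by "reboot" steps."""
    phases = [[]]
    for step in setup_seq:
        if step == "reboot":
            # A reboot closes the current phase and opens the next one.
            phases.append([])
        else:
            phases[-1].append(step)
    return phases


# Sequences copied from the IDLE-IDLE sample above.
HOST_SETUP_SEQS = ["host-setup0.sh", "reboot", "host-setup1.sh", "host-run-qemu.sh"]
GUEST_SETUP_SEQS = ["guest-setup0.sh", "reboot", "guest-setup1.sh"]

if __name__ == "__main__":
    for number, scripts in enumerate(split_into_phases(HOST_SETUP_SEQS)):
        print(f"host phase {number}: {scripts}")
```

Read this way, the first script of each sequence changes boot-time settings that only take effect after the reboot, and the remaining scripts adjust the running system.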

The following scripts are used for configuring host and guest to create a special test environment and achieve low latency.

Note: host-setup0.sh, host-setup1.sh and host-run-qemu.sh are run on the host, followed by guest-setup0.sh and guest-setup1.sh scripts on the guest VM.

host-setup0.sh: Running this script installs the latest kernel rpm on the host and makes the following changes to create the special test environment.

- Isolates CPUs from the general scheduler

- Stops timer ticks on isolated CPUs whenever possible

- Stops RCU callbacks on isolated CPUs

- Enables the Intel IOMMU driver and disables DMA translation for devices

- Sets HugeTLB pages to 1GB

- Disables machine check

- Disables clocksource verification at runtime
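Each of the changes listed above corresponds to a standard Linux kernel boot parameter. The sketch below assembles such a command line; whether host-setup0.sh uses exactly these parameters is an assumption, and the `host_kernel_cmdline` helper and the CPU range `3-7` are hypothetical.

```python
def host_kernel_cmdline(isolated_cpus):
    """Build a kernel command-line fragment for a low-latency host.

    isolated_cpus is a CPU list string such as "3-7" (hypothetical value).
    """
    params = [
        f"isolcpus={isolated_cpus}",   # isolate CPUs from the general scheduler
        f"nohz_full={isolated_cpus}",  # stop timer ticks on isolated CPUs when possible
        f"rcu_nocbs={isolated_cpus}",  # keep RCU callbacks off isolated CPUs
        "intel_iommu=on",              # enable the Intel IOMMU driver
        "iommu=pt",                    # pass-through: no DMA translation for host devices
        "default_hugepagesz=1G",       # 1GB HugeTLB pages by default
        "hugepagesz=1G",
        "mce=off",                     # disable machine check
        "tsc=reliable",                # skip clocksource verification at runtime
    ]
    return " ".join(params)


if __name__ == "__main__":
    print(host_kernel_cmdline("3-7"))
```

Boot parameters only take effect on the next boot, which is consistent with the "reboot" step that follows host-setup0.sh in the setup sequence.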

host-setup1.sh: Running this script makes the following changes to the test environment.

- Disables watchdogs to reduce overhead

- Disables RT throttling

- Reroutes interrupts bound to isolated CPUs to CPU 0

- Changes the iptables rules so that the guest can be reached remotely over ssh
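The first three changes map onto standard Linux runtime interfaces under /proc. The sketch below lists the usual settings for watchdogs and RT throttling and computes the hexadecimal mask written to /proc/irq/<n>/smp_affinity when rerouting an interrupt. It is an illustration under the assumption that the script uses these interfaces; the names `HOST_RUNTIME_SETTINGS` and `cpus_to_affinity_mask` are hypothetical.

```python
# Standard procfs knobs and the values that disable each feature.
HOST_RUNTIME_SETTINGS = {
    "/proc/sys/kernel/watchdog": "0",              # disable the soft/hard lockup watchdogs
    "/proc/sys/kernel/nmi_watchdog": "0",          # disable the NMI watchdog
    "/proc/sys/kernel/sched_rt_runtime_us": "-1",  # disable RT throttling
}


def cpus_to_affinity_mask(cpus):
    """Return the smp_affinity bitmask for the given CPU numbers.

    The mask is formatted as comma-separated 32-bit hexadecimal groups,
    most significant group first.
    """
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    groups = []
    while mask or not groups:
        groups.append(mask & 0xFFFFFFFF)
        mask >>= 32
    groups.reverse()
    # Only the leading group is printed without zero padding.
    return ",".join([f"{groups[0]:x}"] + [f"{group:08x}" for group in groups[1:]])


if __name__ == "__main__":
    print(cpus_to_affinity_mask([0]))  # mask that pins an interrupt to CPU 0
```

Writing the mask for CPU 0 to an interrupt's smp_affinity file keeps that interrupt away from the isolated CPUs.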

stress_daily.sh: This script is triggered only for stress-idle tests. Running it makes the following environment changes.

- Triggers stress_script.sh, which runs the stress command with the necessary options

- CPU, memory or IO stress can be applied, based on the test type

- Applying stress only on the host is handled in the D-Release

- For the Idle-Idle test the stress script is not triggered

- Stress is applied only on the free cores to prevent load on the QEMU process

- Note:

  - On NUMA node 1, cores 22 and 23 are allocated for the QEMU process

  - Cores 24-43 are used for applying stress
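The core allocation in the note can be sketched as follows: the stress cores are whatever remains of the NUMA node after the QEMU cores are reserved, and the stress workers are pinned to them. This assumes NUMA node 1 spans cores 22-43, and it pairs `taskset -c` with the `--cpu`, `--vm` and `--io` options of the stress tool as one plausible invocation. The actual options used by stress_script.sh are not given in this guide, and the helper names and worker count are hypothetical.

```python
# Assumption: NUMA node 1 spans cores 22-43.
NODE1_CORES = range(22, 44)
QEMU_CORES = [22, 23]

# stress options per test type (test type names as used in the sample YAML).
STRESS_FLAGS = {"cpustress": "--cpu", "memory": "--vm", "io": "--io"}


def stress_cores(node_cores, qemu_cores):
    """Return the cores left for stress after reserving the QEMU cores."""
    reserved = set(qemu_cores)
    return [core for core in node_cores if core not in reserved]


def stress_command(test_type, cores):
    """Build an argv list that pins one stress worker per core (assumed policy)."""
    cpu_list = ",".join(str(core) for core in cores)
    return ["taskset", "-c", cpu_list, "stress", STRESS_FLAGS[test_type], str(len(cores))]


if __name__ == "__main__":
    cores = stress_cores(NODE1_CORES, QEMU_CORES)
    print(" ".join(stress_command("cpustress", cores)))
```

Keeping the QEMU cores out of the pinned set is what prevents the stress load from competing with the guest for CPU time.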

host-run-qemu.sh: Running this script launches a guest VM on the host.

- Note: Download the guest disk image from artifactory.

guest-setup0.sh: Running this script on the guest VM installs the latest build kernel rpm and cyclictest, and makes the following configuration changes on the guest VM.

- Isolates CPUs from the general scheduler

- Stops timer ticks on isolated CPUs whenever possible

- Uses polling idle loop to improve performance

- Disables clocksource verification at runtime

guest-setup1.sh: Running this script on the guest VM makes the following configuration changes.

- Disables watchdogs to reduce overhead

- Routes device interrupts to a non-RT CPU

- Disables RT throttling

Hardware configuration

Currently Intel POD10 is used as the test environment for KVM4NFV to execute cyclictest. As part of this test environment, the Intel POD10 jump server is configured as a Jenkins slave and all the latest build artifacts are downloaded onto it.

- For more information regarding the hardware configuration, please visit:

  - https://wiki.opnfv.org/display/pharos/Intel+Pod10

  - https://build.opnfv.org/ci/computer/intel-pod10/

  - http://artifacts.opnfv.org/octopus/brahmaputra/docs/octopus_docs/opnfv-jenkins-slave-connection.html

Scenario Matrix

Scenarios are implemented as deployable compositions through integration with an installation tool. OPNFV supports multiple installation tools and for any given release not all tools will support all scenarios. While our target is to establish parity across the installation tools to ensure they can provide all scenarios, the practical challenge of achieving that goal for any given feature and release results in some disparity.

Euphrates scenario overview

The following table provides an overview of the installation tools and available scenarios in the Euphrates release of OPNFV.

Scenario status is indicated by a weather pattern icon. All scenarios listed with a weather pattern are possible to deploy and run in your environment or a Pharos lab; however, they may have known limitations or issues as indicated by the icon.

Weather pattern icon legend:

| Weather Icon | Scenario Status |
|---|---|
| | Stable, no known issues |
| | Stable, documented limitations |
| | Deployable, stability or feature limitations |
| | Not deployed with this installer |

Scenarios that are not yet in a state of “Stable, no known issues” will continue to be stabilised, and updates will be made on the stable/euphrates branch. While we intend that all Euphrates scenarios should be stable, it is worth checking regularly to see the current status. Due to our dependency on upstream communities and code, some issues may not be resolved prior to the E release.

Scenario Naming

In OPNFV, scenarios are identified by short scenario names. These names follow a scheme that identifies the key components and behaviours of the scenario. The rules for scenario naming are as follows:

os-[controller]-[feature]-[mode]-[option]

Details of the fields are:

[os]: mandatory

- Refers to the platform type used

- possible value: os (OpenStack)

[controller]: mandatory

- Refers to the SDN controller integrated in the platform

- example values: nosdn, ocl, odl, onos

[feature]: mandatory

- Refers to the feature projects supported by the scenario

- example values: nofeature, kvm, ovs, sfc

[mode]: mandatory

- Refers to the deployment type, which may include for instance high availability

- possible values: ha, noha

[option]: optional

- Used for scenarios that do not fit into the naming scheme.

- The optional field should not be included in the short scenario name if the scenario has no option.

Some examples of supported scenario names are:

os-nosdn-kvm-noha

- This is an OpenStack-based deployment using Neutron, including the OPNFV enhanced KVM hypervisor

os-onos-nofeature-ha

- This is an OpenStack deployment in high availability mode including ONOS as the SDN controller

os-odl_l2-sfc

- This is an OpenStack deployment using OpenDaylight and OVS enabled with SFC features

os-nosdn-kvm_ovs_dpdk-ha

- This is an OpenStack deployment with high availability using OVS and DPDK, including the OPNFV enhanced KVM hypervisor

- This deployment has 3 Controller and 2 Compute nodes

os-nosdn-kvm_ovs_dpdk-noha

- This is an OpenStack deployment without high availability using OVS and DPDK, including the OPNFV enhanced KVM hypervisor

- This deployment has 1 Controller and 3 Compute nodes

os-nosdn-kvm_ovs_dpdk_bar-ha

- This is an OpenStack deployment with high availability using OVS and DPDK, including the OPNFV enhanced KVM hypervisor and Barometer

- This deployment has 3 Controller and 2 Compute nodes

os-nosdn-kvm_ovs_dpdk_bar-noha

- This is an OpenStack deployment without high availability using OVS and DPDK, including the OPNFV enhanced KVM hypervisor and Barometer

- This deployment has 1 Controller and 3 Compute nodes
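The naming scheme can be checked mechanically. The sketch below splits a short scenario name into its fields and validates the platform and mode values given in the rules above. The `Scenario` type and `parse_scenario` function are hypothetical, the sketch is strict about the four mandatory fields, and reading an underscore inside the feature field as a separator between several features is an assumption drawn from the example names.

```python
from typing import NamedTuple, Optional


class Scenario(NamedTuple):
    platform: str
    controller: str
    feature: str
    mode: str
    option: Optional[str] = None


def parse_scenario(name):
    """Split a short scenario name into its fields, validating mandatory ones."""
    parts = name.split("-")
    if len(parts) not in (4, 5):
        raise ValueError(f"expected 4 or 5 fields, got {len(parts)}: {name!r}")
    scenario = Scenario(*parts)
    if scenario.platform != "os":
        raise ValueError(f"unknown platform type: {scenario.platform!r}")
    if scenario.mode not in ("ha", "noha"):
        raise ValueError(f"unknown mode: {scenario.mode!r}")
    return scenario


if __name__ == "__main__":
    scenario = parse_scenario("os-nosdn-kvm_ovs_dpdk-ha")
    print(scenario)
    # Assumption: "_" joins several features within the feature field.
    print(scenario.feature.split("_"))
```

A name that omits a mandatory field is rejected with a `ValueError` rather than guessed at.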

Installing your scenario

There are two main methods of deploying your target scenario. One method is to follow this guide, which will walk you through the process of deploying to your hardware using scripts or ISO images. The other method is to set up a Jenkins slave and connect your infrastructure to the OPNFV Jenkins master.

For the purposes of evaluation and development, a number of Euphrates scenarios can be deployed virtually to mitigate the requirements on physical infrastructure. Details and instructions on performing virtual deployments can be found in the installer-specific installation instructions.

To set up a Jenkins slave for automated deployment to your lab, refer to the Jenkins slave connect guide.