Yardstick User Guide¶

1. Introduction¶

Welcome to Yardstick’s documentation!

Yardstick is an OPNFV Project.

The project’s goal is to verify infrastructure compliance, from the perspective of a Virtual Network Function (VNF).

The Project’s scope is the development of a test framework, Yardstick, test cases and test stimuli to enable Network Function Virtualization Infrastructure (NFVI) verification.

Yardstick is used in OPNFV for verifying the OPNFV infrastructure and some of the OPNFV features. The Yardstick framework is deployed in several OPNFV community labs. It is installer, infrastructure and application independent.

See also

Pharos for information on OPNFV community labs and this Presentation for an overview of Yardstick

1.1. About This Document¶

This document consists of the following chapters:

- Chapter Introduction provides a brief introduction to the Yardstick project’s background and describes the structure of this document.

- Chapter Methodology describes the methodology implemented by the Yardstick Project for NFVI verification.

- Chapter Architecture provides information on the software architecture of Yardstick.

- Chapter Yardstick Installation provides instructions to install Yardstick.

- Chapter Installing a plug-in into Yardstick provides information on how to integrate other OPNFV testing projects into Yardstick.

- Chapter Store Other Project’s Test Results in InfluxDB provides information on how to run plug-in test cases and store test results in the community’s InfluxDB.

- Chapter Grafana dashboard provides information on the Yardstick Grafana dashboard and how to add a dashboard to it.

- Chapter Yardstick Restful API provides information on the Yardstick REST API and how to use it.

- Chapter Yardstick User Interface provides information on how to use the yardstick report CLI to view test results in table format and as values plotted on a graph.

- Chapter Virtual Traffic Classifier provides information on the VTC.

- Chapter 13-nsb-overview describes the methodology implemented by Yardstick - Network Service Benchmarking to test real-world use cases for a given VNF.

- Chapter 14-nsb_installation provides instructions to install Yardstick - Network Service Benchmarking testing.

- Chapter Yardstick Test Cases includes a list of available Yardstick test cases.

1.2. Contact Yardstick¶

Feedback? Contact us

2. Methodology¶

2.1. Abstract¶

This chapter describes the methodology implemented by the Yardstick project for verifying the NFVI from the perspective of a VNF.

2.2. ETSI-NFV¶

The document ETSI GS NFV-TST001, “Pre-deployment Testing; Report on Validation of NFV Environments and Services”, recommends methods for pre-deployment testing of the functional components of an NFV environment.

The Yardstick project implements the methodology described in chapter 6, “Pre-deployment validation of NFV infrastructure”.

The methodology consists of decomposing the typical VNF workload performance metrics into a number of characteristics/performance vectors, each of which can be represented by distinct test cases.

The methodology includes five steps:

- Step 1: Define infrastructure - the hardware, software and corresponding configuration targeted for validation; here, the OPNFV infrastructure in OPNFV community labs.

- Step 2: Identify VNF type - the application for which the infrastructure is to be validated, and its requirements on the underlying infrastructure.

- Step 3: Select test cases - depending on the workload that represents the application for which the infrastructure is to be validated, select the relevant test cases from the list of available Yardstick test cases.

- Step 4: Execute tests - define the duration and number of iterations for the selected test cases; test runs are automated via OPNFV Jenkins jobs.

- Step 5: Collect results - using the common API for result collection.

See also

Yardsticktst for material on the alignment between ETSI TST001 and Yardstick.

2.3. Metrics¶

The metrics, as defined by ETSI GS NFV-TST001, are shown in Table1, Table2 and Table3.

In the OPNFV Colorado release, generic test cases covering aspects of the listed metrics are available; further OPNFV releases will provide extended testing of these metrics. Table4 maps the available Yardstick test cases to the ETSI definitions in Table1, Table2 and Table3. Note that the Yardstick test cases are examples: the test duration and number of iterations are configurable, as are the System Under Test (SUT) and the attributes (or, in Yardstick nomenclature, the scenario options).

Table 1 - Performance/Speed Metrics

| Category | Performance/Speed |

| Compute |

|

| Network |

|

| Storage |

|

Table 2 - Capacity/Scale Metrics

| Category | Capacity/Scale |

| Compute |

|

| Network |

|

| Storage |

|

Table 3 - Availability/Reliability Metrics

| Category | Availability/Reliability |

| Compute |

|

| Network |

|

| Storage |

|

Table 4 - Yardstick Generic Test Cases

| Category | Performance/Speed | Capacity/Scale | Availability/Reliability |

| Compute | TC003 [1] TC004 TC010 TC012 TC014 TC069 | TC003 [1] TC004 TC024 TC055 | TC013 [1] TC015 [1] |

| Network | TC001 TC002 TC009 TC011 TC042 TC043 | TC044 TC073 TC075 | TC016 [1] TC018 [1] |

| Storage | TC005 | TC063 | TC017 [1] |

Note

The description in this OPNFV document is intended as a reference for users to understand the scope of the Yardstick Project and the deliverables of the Yardstick framework. For complete description of the methodology, please refer to the ETSI document.

Footnotes

| [1] | (1, 2, 3, 4, 5, 6, 7) To be included in future deliveries. |

3. Architecture¶

3.1. Abstract¶

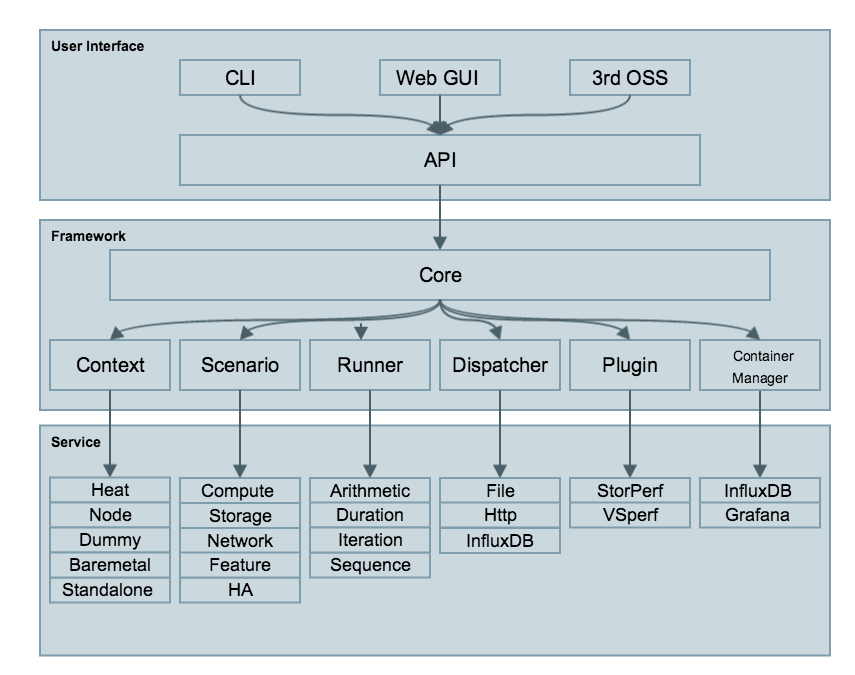

This chapter describes the Yardstick framework software architecture. It introduces the architecture from the Use-Case View, Logical View, Process View and Deployment View, and provides further technical details.

3.2. Overview¶

3.2.1. Architecture overview¶

Yardstick is mainly written in Python, and test configurations are made in YAML. Documentation is written in reStructuredText format, i.e. .rst files. Yardstick is inspired by Rally. Yardstick is intended to run on a computer with access and credentials to a cloud. The test case is described in a configuration file given as an argument.

How it works: the benchmark task configuration file is parsed and converted into an internal model. The context part of the model is converted into a Heat template and deployed into a stack. Each scenario is run using a runner, either serially or in parallel. Each runner runs in its own subprocess, executing commands in a VM using SSH. The output of each scenario is written as JSON records to a file, to InfluxDB or to an HTTP server. The project uses InfluxDB as the backend, and the test results are displayed with Grafana.

3.2.2. Concept¶

Benchmark - assess the relative performance of something

Benchmark configuration file - describes a single test case in yaml format

Context - The set of Cloud resources used by a scenario, such as user names, image names, affinity rules and network configurations. A context is converted into a simplified Heat template, which is used to deploy onto the Openstack environment.

Data - Output produced by running a benchmark, written to a file in json format

Runner - Logic that determines how a test scenario is run and reported, for example the number of test iterations, input value stepping and test duration. Predefined runner types exist for re-usage, see Runner types.

Scenario - Type/class of measurement for example Ping, Pktgen, (Iperf, LmBench, ...)

SLA - Relates to what result boundary a test case must meet to pass, for example a latency limit, an amount or ratio of lost packets and so on. The action taken on an SLA violation can be configured: either just log it (monitor) or stop further testing (assert). The SLA criteria are set in the benchmark configuration file and evaluated by the runner.
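
The difference between the two SLA actions can be sketched as follows. This is a minimal illustration, not Yardstick's implementation: the `max_rtt` and `action` keys follow the sample test case shown later in this guide, while the `rtt` result key, the `SlaViolation` exception and the default action are assumptions made for the example.

```python
class SlaViolation(Exception):
    """Hypothetical exception used to stop testing when action is 'assert'."""


def check_sla(result, sla):
    """Compare one measurement against the SLA and apply the configured action.

    Returns True when the SLA is met, False when a violation was only logged.
    """
    limit = sla["max_rtt"]
    action = sla.get("action", "assert")  # assumed default for this sketch
    if result["rtt"] <= limit:
        return True
    message = "rtt %.2f exceeds max_rtt %.2f" % (result["rtt"], limit)
    if action == "monitor":
        # monitor: record the violation and let the test continue
        print("SLA violation (monitor): " + message)
        return False
    # assert: stop further testing
    raise SlaViolation(message)
```

With `action: monitor` a slow measurement is reported and the run continues; with `action: assert` the same measurement ends the run.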

3.2.3. Runner types¶

Several predefined runner types are available to choose from when designing a test scenario:

Arithmetic: Every test run arithmetically steps the specified input value(s) in the test scenario, adding a value to the previous input value. It is also possible to combine several input values for the same test case in different combinations.

Snippet of an Arithmetic runner configuration:

    runner:
      type: Arithmetic
      iterators:
      -
        name: stride
        start: 64
        stop: 128
        step: 64
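
To make the stepping concrete, here is a small sketch of how the input values could be derived from an `iterators` list like the one above. It assumes that `stop` is inclusive, that `step` is positive, and that several iterators are combined as a Cartesian product; these are assumptions of the sketch, not confirmed behaviour of the Arithmetic runner. The second iterator name, `packetsize`, is only used for illustration.

```python
import itertools


def arithmetic_values(start, stop, step):
    """Return start, start + step, ... up to and including stop (assumed inclusive)."""
    if step <= 0:
        raise ValueError("this sketch only handles positive steps")
    values = []
    value = start
    while value <= stop:
        values.append(value)
        value += step
    return values


def input_combinations(iterators):
    """Combine several iterators into one dict of input values per test run."""
    names = [it["name"] for it in iterators]
    ranges = [arithmetic_values(it["start"], it["stop"], it["step"])
              for it in iterators]
    return [dict(zip(names, combo)) for combo in itertools.product(*ranges)]


iterators = [
    {"name": "stride", "start": 64, "stop": 128, "step": 64},
    {"name": "packetsize", "start": 100, "stop": 200, "step": 100},  # illustrative
]
for inputs in input_combinations(iterators):
    print(inputs)
```

Under these assumptions the single `stride` iterator from the snippet yields two runs, with stride 64 and stride 128.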

Duration: The test runs for a specific period of time before completing.

Snippet of a Duration runner configuration:

    runner:
      type: Duration
      duration: 30

Sequence: The test changes a specified input value to the scenario. The input values to the sequence are specified in a list in the benchmark configuration file.

Snippet of a Sequence runner configuration:

    runner:
      type: Sequence
      scenario_option_name: packetsize
      sequence:
      - 100
      - 200
      - 250

Iteration: Tests are run a specified number of times before completing.

Snippet of an Iteration runner configuration:

    runner:
      type: Iteration
      iterations: 2
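
The Duration and Iteration runners differ only in their stop condition, which the following sketch illustrates. The function names and the injectable `clock` and `sleep` parameters are hypothetical and exist only to keep the example self-contained; the `interval` option mirrors the one used in the sample test case later in this guide.

```python
import time


def run_for_duration(run_once, duration, interval=0.0,
                     clock=time.monotonic, sleep=time.sleep):
    """Call run_once repeatedly until `duration` seconds have elapsed."""
    results = []
    deadline = clock() + duration
    while clock() < deadline:
        results.append(run_once())
        sleep(interval)  # pause between two measurements
    return results


def run_iterations(run_once, iterations):
    """Call run_once exactly `iterations` times."""
    return [run_once() for _ in range(iterations)]
```

A Duration runner with `duration: 30` would correspond to `run_for_duration(measure, 30)`, and an Iteration runner with `iterations: 2` to `run_iterations(measure, 2)`, where `measure` stands for one execution of the scenario.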

3.3. Use-Case View¶

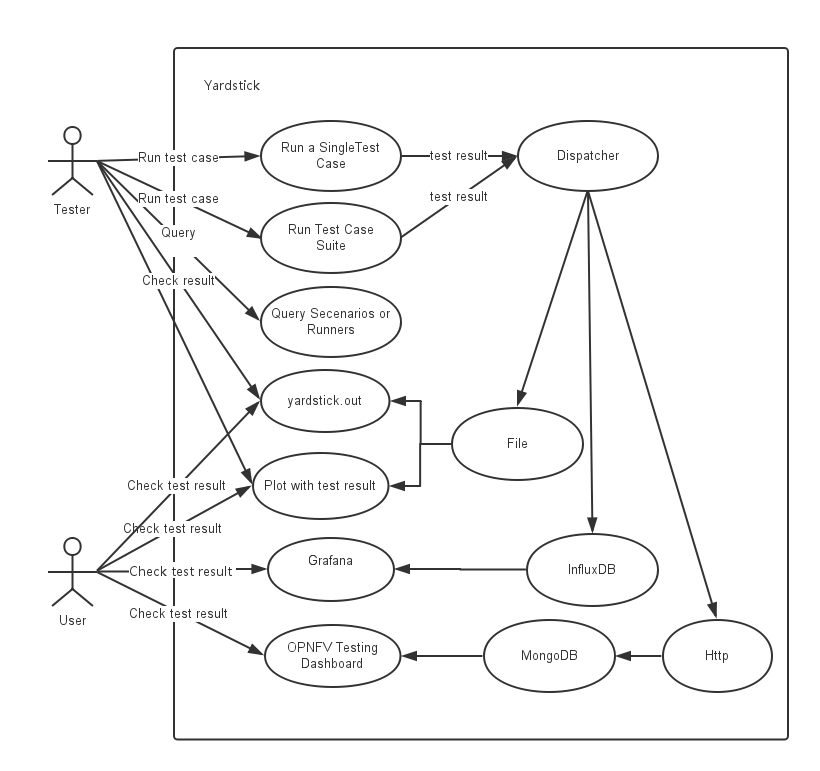

Yardstick Use-Case View shows two kinds of users. One is the Tester, who runs tests in the cloud; the other is the User, who is more concerned with test results and result analysis.

Testers run a single test case or a test suite to verify infrastructure compliance or benchmark their own infrastructure performance. Test results are stored by the dispatcher module, which can be configured with one of three storage methods (file, influxdb and http). Testers can query detailed information about scenarios and runners with the CLI.

Users can check test results in four ways.

If the dispatcher module is configured as file (the default), there are two ways to check test results. One is to read the results from yardstick.out (default path: /tmp/yardstick.out); the other is to view a plot of the test results, which is shown when users execute the command “yardstick-plot”.

If the dispatcher module is configured as influxdb, users check test results in Grafana, which is commonly used for visualizing time series data.

If the dispatcher module is configured as http, users check test results on the OPNFV testing dashboard, which uses MongoDB as its backend.
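
For the file dispatcher, the results can also be post-processed with a few lines of Python. The sketch below assumes the output file holds one JSON record per line; the exact layout of /tmp/yardstick.out may differ between releases, and the `sequence` and `rtt` fields in the sample are invented for illustration.

```python
import json


def read_records(lines):
    """Parse an iterable of text lines into a list of JSON records."""
    records = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        records.append(json.loads(line))
    return records


# Illustrative input; real records carry whatever fields the scenario reports.
sample = [
    '{"sequence": 1, "rtt": 0.42}',
    '',
    '{"sequence": 2, "rtt": 0.51}',
]
records = read_records(sample)
print(len(records), "records, max rtt:", max(r["rtt"] for r in records))
```

To read the real file, pass an open file object instead of the sample list, for example `read_records(open("/tmp/yardstick.out"))`.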

3.4. Logical View¶

Yardstick Logical View describes the most important classes, their organization, and the most important use-case realizations.

Main classes:

TaskCommands - “yardstick task” subcommand handler.

HeatContext - Converts the context section of the test yaml file into a HOT (Heat Orchestration Template), and deploys and undeploys the OpenStack Heat stack.

Runner - Logic that determines how a test scenario is run and reported.

TestScenario - Type/class of measurement for example Ping, Pktgen, (Iperf, LmBench, ...)

Dispatcher - Stores test results in the user-defined way.

TaskCommands is the main entry point of the “yardstick task” subcommand. It takes a yaml file (e.g. test.yaml) as input and uses HeatContext to convert the yaml file’s context section to HOT. After HeatContext deploys the OpenStack Heat stack with the converted HOT, TaskCommands uses Runner to run the specified TestScenario. During the first runner initialization, an output process is created. The output process uses Dispatcher to push test results. The Runner also creates a process to execute the TestScenario. A multiprocessing queue sits between each runner process and the output process, so the runner process can push real-time test results to the storage media. TestScenario commonly connects to VMs using ssh. It sets up the VMs and runs test measurement scripts through the ssh tunnel. After all TestScenarios have finished, TaskCommands undeploys the Heat stack, and the whole test is finished.
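
The queue between a runner and the output worker is a classic producer/consumer arrangement. The sketch below illustrates the idea with threads and `queue.Queue` as a stand-in for the separate processes and multiprocessing queue that Yardstick uses; all function names and the record layout are hypothetical.

```python
import queue
import threading

_DONE = object()  # sentinel telling the output worker to stop


def runner(scenario, iterations, out_queue):
    """Producer: run the scenario and push each result as soon as it exists."""
    for i in range(iterations):
        out_queue.put({"sequence": i + 1, "data": scenario()})
    out_queue.put(_DONE)


def output_worker(out_queue, dispatch):
    """Consumer: hand every record to the dispatcher until the sentinel arrives."""
    while True:
        record = out_queue.get()
        if record is _DONE:
            break
        dispatch(record)


def run_task(scenario, iterations):
    stored = []  # stands in for a file, InfluxDB or HTTP backend
    results = queue.Queue()
    consumer = threading.Thread(target=output_worker,
                                args=(results, stored.append))
    producer = threading.Thread(target=runner,
                                args=(scenario, iterations, results))
    consumer.start()
    producer.start()
    producer.join()
    consumer.join()
    return stored


print(run_task(lambda: {"rtt": 1.0}, 3))
```

Because the runner never waits for the storage backend, slow result storage does not distort the timing of the measurements themselves.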

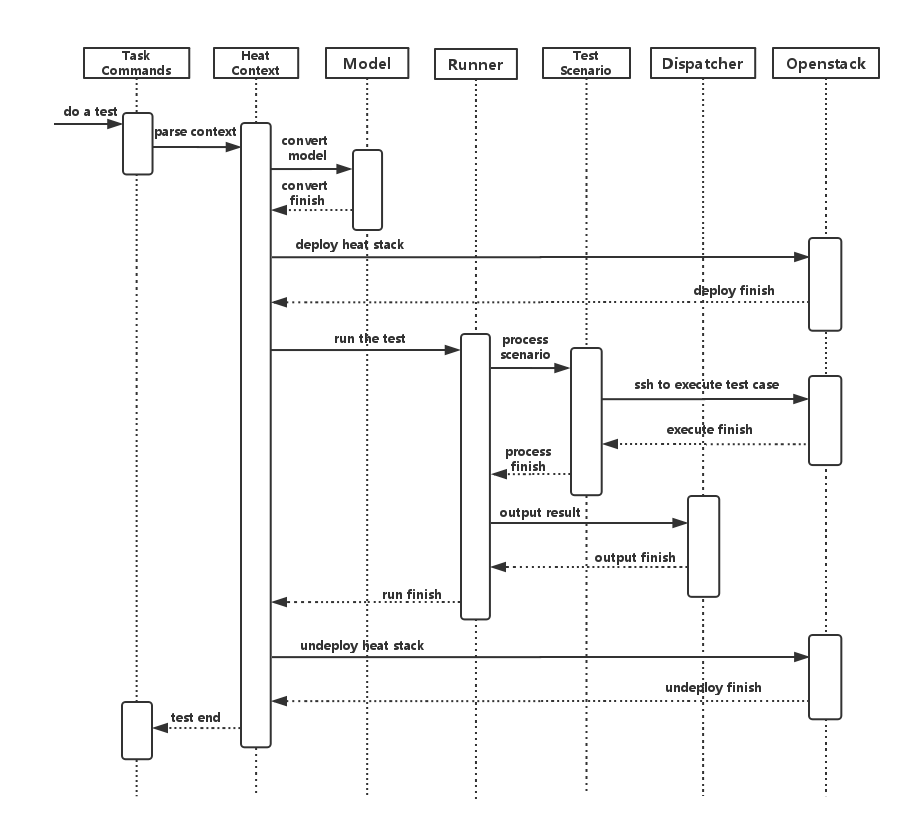

3.5. Process View (Test execution flow)¶

Yardstick process view shows how Yardstick runs a test case. Below is the sequence graph of the test execution flow using the Heat context; each object represents one module in Yardstick:

A user who wants to run a test with Yardstick uses the CLI to enter the command that starts a task. “TaskCommands” receives the command and asks “HeatContext” to parse the context. “HeatContext” then asks “Model” to convert the model. After the model is generated, “HeatContext” tells “Openstack” to deploy the Heat stack from the Heat template. After “Openstack” deploys the stack, “HeatContext” tells “Runner” to run the specific test case.

First, “Runner” asks “TestScenario” to process the specific scenario. “TestScenario” then logs on to OpenStack using the ssh protocol and executes the test case on the specified VMs. After the script execution finishes, “TestScenario” sends a message to inform “Runner”. When the testing job is done, “Runner” tells “Dispatcher” to output the test results via file, influxdb or http. After the results are output, “HeatContext” calls “Openstack” to undeploy the Heat stack. Once the stack is undeployed, the whole test ends.

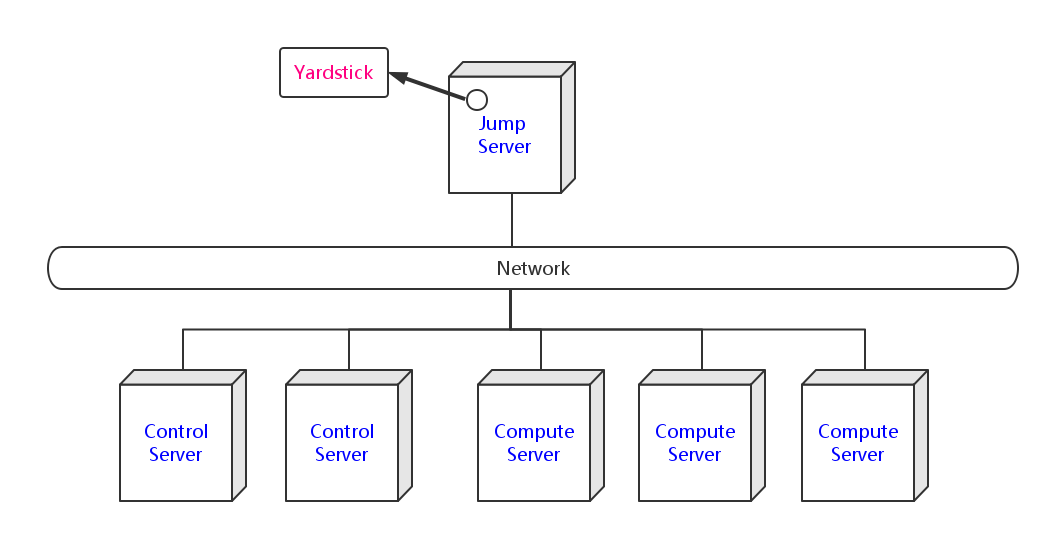

3.6. Deployment View¶

Yardstick deployment view shows how the Yardstick tool can be deployed onto the underlying platform. Generally, the Yardstick tool is installed on the JumpServer (see 07-installation for detailed installation steps), and the JumpServer is connected to the other control/compute servers over the network. Based on this deployment, Yardstick can run the test cases on these hosts and collect the test results for presentation.

3.7. Yardstick Directory structure¶

yardstick/ - Yardstick main directory.

- tests/ci/ - Used for continuous integration of Yardstick at different PODs and with support for different installers.

- docs/ - All documentation is stored here, such as configuration guides, user guides and Yardstick descriptions.

- etc/ - Used for test cases requiring specific POD configurations.

- samples/ - Test case samples are stored here; samples for most scenarios and features are in this directory.

- tests/ - Both Yardstick internal tests (functional/ and unit/) and the test cases run to verify the NFVI (opnfv/) are stored here. The configurations of what to run daily and weekly at the different PODs are also located here.

- tools/ - Currently contains tools to build the image for VMs that are deployed by Heat, including how to build the yardstick-trusty-server image with the different tools that are needed from within the image.

- plugin/ - Plug-in configuration files are stored here.

- vTC/ - Contains the files for running the virtual Traffic Classifier tests.

- yardstick/ - Contains the internals of Yardstick: Runners, Scenario, Contexts, CLI parsing, keys, plotting tools, dispatcher, plugin install/remove scripts and so on.

4. Yardstick Installation¶

4.1. Abstract¶

Yardstick supports installation by Docker or directly in Ubuntu. The installation procedure for Docker and direct installation are detailed in the sections below.

To use Yardstick you should have access to an OpenStack environment, with at least Nova, Neutron, Glance, Keystone and Heat installed.

The steps needed to run Yardstick are:

- Install Yardstick.

- Load OpenStack environment variables.

- Create Yardstick flavor.

- Build a guest image and load it into the OpenStack environment.

- Create the test configuration .yaml file and run the test case/suite.

4.2. Prerequisites¶

The OPNFV deployment is out of the scope of this document and can be found here. The OPNFV platform is considered as the System Under Test (SUT) in this document.

Several prerequisites are needed for Yardstick:

- A Jumphost to run Yardstick on

- A Docker daemon or a virtual environment installed on the Jumphost

- A public/external network created on the SUT

- Connectivity from the Jumphost to the SUT public/external network

NOTE: Jumphost refers to any server which meets the previous requirements. Normally it is the same server from where the OPNFV deployment has been triggered.

WARNING: Connectivity from the Jumphost is essential; make sure it is working before you install and run Yardstick. Also make sure you understand how your networking is designed to work.

NOTE: If your Jumphost is operating behind a company HTTP proxy and/or firewall, first consult the section Proxy Support (Todo), towards the end of this document. That section details some tips and tricks which may be of help in a proxied environment.

4.3. Install Yardstick using Docker (recommended)¶

Yardstick has a Docker image. It is recommended to use this Docker image to run Yardstick tests.

4.3.1. Prepare the Yardstick container¶

Install docker on your guest system with the following command, if not done yet:

wget -qO- https://get.docker.com/ | sh

Pull the Yardstick Docker image (opnfv/yardstick) from the public dockerhub registry under the OPNFV account, dockerhub, with the following docker command:

docker pull opnfv/yardstick:stable

After pulling the Docker image, check that it is available with the following docker command:

[yardsticker@jumphost ~]$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

opnfv/yardstick stable a4501714757a 1 day ago 915.4 MB

Run the Docker image to get a Yardstick container:

docker run -itd --privileged -v /var/run/docker.sock:/var/run/docker.sock -p 8888:5000 --name yardstick opnfv/yardstick:stable

Note:

| Parameters | Detail |
|---|---|
| -itd | -i: interactive, keep STDIN open even if not attached. -t: allocate a pseudo-TTY. -d: run container in detached mode, in the background. |
| --privileged | If you want to build yardstick-image in the Yardstick container, this parameter is needed. |
| -p 8888:5000 | If you want to call the Yardstick API from outside the Yardstick container, this parameter is needed. |
| -v /var/run/docker.sock:/var/run/docker.sock | If you want to use yardstick env grafana/influxdb to create a grafana/influxdb container outside the Yardstick container, this parameter is needed. |
| --name yardstick | The name for this container. It is optional and can be defined by the user. |

4.3.2. Configure the Yardstick container environment¶

There are three ways to configure environments for running Yardstick, which will be shown in the following sections. Before that, enter the Yardstick container:

docker exec -it yardstick /bin/bash

and then configure Yardstick environments in the Yardstick container.

4.3.2.1. The first way (recommended)¶

In the Yardstick container, the Yardstick repository is located in the /home/opnfv/repos directory. Yardstick provides a CLI to prepare OpenStack environment variables and create Yardstick flavor and guest images automatically:

yardstick env prepare

NOTE: Since the Euphrates release, the above command is not able to configure the /etc/yardstick/openstack.creds file automatically. So before running the above command, it is necessary to create the /etc/yardstick/openstack.creds file and save the OpenStack environment variables into it manually. If you have the OpenStack credential file saved outside the Yardstick Docker container, you can do this easily by mapping the credential file into the Yardstick container using ‘-v /path/to/credential_file:/etc/yardstick/openstack.creds’ when running the Yardstick container.

For details of the required OpenStack environment variables, please refer to section Export OpenStack environment variables.

The env prepare command may take 6-8 minutes to finish building yardstick-image and preparing the rest of the environment. If you wish to monitor the env prepare process in the meantime, you can enter the Yardstick container in a new terminal window and execute the following command:

tail -f /var/log/yardstick/uwsgi.log

4.3.2.2. The second way¶

4.3.2.2.1. Export OpenStack environment variables¶

Before running Yardstick it is necessary to export OpenStack environment variables:

source openrc

Environment variables in the openrc file have to include at least:

- OS_AUTH_URL
- OS_USERNAME
- OS_PASSWORD
- OS_TENANT_NAME
- EXTERNAL_NETWORK

A sample openrc file may look like this:

export OS_PASSWORD=console

export OS_TENANT_NAME=admin

export OS_AUTH_URL=http://172.16.1.222:35357/v2.0

export OS_USERNAME=admin

export OS_VOLUME_API_VERSION=2

export EXTERNAL_NETWORK=net04_ext
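
Before starting a test it can be useful to confirm that the required variables are actually present in the environment. The following helper is a hypothetical convenience and not part of Yardstick; it only checks the five variable names listed above.

```python
import os

REQUIRED_VARS = (
    "OS_AUTH_URL",
    "OS_USERNAME",
    "OS_PASSWORD",
    "OS_TENANT_NAME",
    "EXTERNAL_NETWORK",
)


def missing_openstack_vars(environ=None):
    """Return the required variable names that are unset or empty."""
    env = os.environ if environ is None else environ
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_openstack_vars()
    if missing:
        print("Missing OpenStack variables: " + ", ".join(missing))
    else:
        print("All required OpenStack variables are set.")
```

Run the script in the same shell in which the openrc file was sourced, since the exported variables are only visible to that shell and its child processes.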

4.3.2.2.2. Manually create Yardstick flavor and guest images¶

Before executing Yardstick test cases, make sure that Yardstick flavor and guest image are available in OpenStack. Detailed steps about creating the Yardstick flavor and building the Yardstick guest image can be found below.

Most of the sample test cases in Yardstick use an OpenStack flavor called yardstick-flavor, which deviates from the OpenStack standard m1.tiny flavor in disk size: it has 3GB instead of 1GB. Other parameters are the same as in m1.tiny.

Create yardstick-flavor:

nova flavor-create yardstick-flavor 100 512 3 1

Most of the sample test cases in Yardstick use a guest image called yardstick-image, which is derived from an Ubuntu Cloud Server image and contains all the required tools to run test cases supported by Yardstick. Yardstick has a tool for building this custom image. It is necessary to have sudo rights to use this tool.

You may also need to install several additional packages to use this tool, by following the commands below:

sudo apt-get update && sudo apt-get install -y qemu-utils kpartx

This image can be built using the following command in the directory where Yardstick is installed:

export YARD_IMG_ARCH='amd64'

echo "Defaults env_keep += \'YARD_IMG_ARCH\'" | sudo tee --append /etc/sudoers > /dev/null

sudo tools/yardstick-img-modify tools/ubuntu-server-cloudimg-modify.sh

Warning: Before building the guest image inside the Yardstick container, make sure the container was started in privileged mode. The script will create files by default in /tmp/workspace/yardstick and the files will be owned by root!

The created image can be added to OpenStack using the glance image-create command or via the OpenStack Dashboard. An example command is:

    glance --os-image-api-version 1 image-create \
        --name yardstick-image --is-public true \
        --disk-format qcow2 --container-format bare \
        --file /tmp/workspace/yardstick/yardstick-image.img

Some Yardstick test cases use a Cirros 0.3.5 image and/or an Ubuntu 16.04 image. Add the Cirros and Ubuntu images to OpenStack:

    openstack image create \
        --disk-format qcow2 \
        --container-format bare \
        --file $cirros_image_file \
        cirros-0.3.5

    openstack image create \
        --disk-format qcow2 \
        --container-format bare \
        --file $ubuntu_image_file \
        Ubuntu-16.04

4.3.2.3. The third way¶

Similar to the second way, the first step is also to Export OpenStack environment variables. Then the following steps should be done.

4.3.2.3.1. Automatically create Yardstick flavor and guest images¶

Yardstick has a script for automatically creating Yardstick flavor and building Yardstick guest images. This script is mainly used for CI and can be also used in the local environment:

source $YARDSTICK_REPO_DIR/tests/ci/load_images.sh

4.3.3. The Yardstick container GUI¶

In the Euphrates release, Yardstick implemented a GUI for the Yardstick Docker container. After booting up the Yardstick container, you can visit the GUI at <container_host_ip>:8888/gui/index.html

For usage of the Yardstick GUI, please watch our demo video at https://www.youtube.com/watch?v=M3qbJDp6QBk

Note: The Yardstick GUI is still in development; the GUI layout and features may change.

4.3.4. Delete the Yardstick container¶

If you want to uninstall Yardstick, just delete the Yardstick container:

docker stop yardstick && docker rm yardstick

4.4. Install Yardstick directly in Ubuntu¶

Alternatively you can install Yardstick framework directly in Ubuntu or in an Ubuntu Docker image. No matter which way you choose to install Yardstick, the following installation steps are identical.

If you choose to use the Ubuntu Docker image, you can pull the Ubuntu Docker image from Docker hub:

docker pull ubuntu:16.04

4.4.1. Install Yardstick¶

Prerequisite preparation:

apt-get update && apt-get install -y git python-setuptools python-pip

easy_install -U setuptools==30.0.0

pip install appdirs==1.4.0

pip install virtualenv

Create a virtual environment:

virtualenv ~/yardstick_venv

export YARDSTICK_VENV=~/yardstick_venv

source ~/yardstick_venv/bin/activate

Download the source code and install Yardstick from it:

git clone https://gerrit.opnfv.org/gerrit/yardstick

export YARDSTICK_REPO_DIR=~/yardstick

cd yardstick

./install.sh

4.4.2. Configure the Yardstick environment (Todo)¶

For installing Yardstick directly in Ubuntu, the yardstick env command is not available. You need to prepare OpenStack environment variables and create Yardstick flavor and guest images manually.

4.4.3. Uninstall Yardstick¶

To uninstall Yardstick, just delete the virtual environment:

rm -rf ~/yardstick_venv

4.5. Verify the installation¶

It is recommended to verify that Yardstick was installed successfully by executing some simple commands and test samples. Before executing Yardstick test cases, make sure yardstick-flavor and yardstick-image can be found in OpenStack and the openrc file is sourced. Below is an example invocation of the Yardstick help command and the ping.yaml test sample:

yardstick -h

yardstick task start samples/ping.yaml

NOTE: The above commands can be run both in the Yardstick container and directly in Ubuntu.

Each testing tool supported by Yardstick has a sample configuration file. These configuration files can be found in the samples directory.

Default location for the output is /tmp/yardstick.out.

4.6. Deploy InfluxDB and Grafana using Docker¶

Without InfluxDB, Yardstick stores results for running test cases in the file /tmp/yardstick.out. However, it is inconvenient to retrieve and display test results from that file. The following sections show how to use InfluxDB to store data and Grafana to display it.

4.6.1. Automatically deploy InfluxDB and Grafana containers (recommended)¶

Firstly, enter the Yardstick container:

docker exec -it yardstick /bin/bash

Secondly, create and configure the InfluxDB container with the following command:

yardstick env influxdb

Thirdly, create and configure Grafana container:

yardstick env grafana

Then you can run a test case and visit http://host_ip:3000 (admin/admin) to see the results.

NOTE: Executing the yardstick env command to deploy InfluxDB and Grafana requires the Jumphost’s docker API version to be >= 1.24. Run the following command to check the docker API version on the Jumphost:

docker version
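
When comparing the reported API version against the minimum, note that version strings must be compared numerically, component by component: as plain strings, "1.9" would sort after "1.24". The helper below is a hypothetical sketch of such a check; it expects the bare version number (for example the value shown next to "API version" in the command output) and does not call Docker itself.

```python
def api_version_ok(version, minimum="1.24"):
    """Return True if a dotted version string is at least `minimum`."""
    def parse(text):
        return tuple(int(part) for part in text.split("."))
    return parse(version) >= parse(minimum)


for candidate in ("1.9", "1.24", "1.30"):
    print(candidate, api_version_ok(candidate))
```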

4.6.2. Manually deploy InfluxDB and Grafana containers¶

You can also deploy InfluxDB and Grafana containers manually on the Jumphost. The following sections show how to do so.

4.6.2.1. Pull docker images¶

docker pull tutum/influxdb

docker pull grafana/grafana

4.6.2.2. Run and configure influxDB¶

Run influxDB:

    docker run -d --name influxdb \
        -p 8083:8083 -p 8086:8086 --expose 8090 --expose 8099 \
        tutum/influxdb
    docker exec -it influxdb bash

Configure influxDB:

influx

>CREATE USER root WITH PASSWORD 'root' WITH ALL PRIVILEGES

>CREATE DATABASE yardstick;

>use yardstick;

>show MEASUREMENTS;

4.6.2.3. Run and configure Grafana¶

Run Grafana:

docker run -d --name grafana -p 3000:3000 grafana/grafana

Log in at http://{YOUR_IP_HERE}:3000 using admin/admin and configure the database resource to be {YOUR_IP_HERE}:8086.

4.6.2.4. Configure yardstick.conf¶

docker exec -it yardstick /bin/bash

cp etc/yardstick/yardstick.conf.sample /etc/yardstick/yardstick.conf

vi /etc/yardstick/yardstick.conf

Modify yardstick.conf:

[DEFAULT]

debug = True

dispatcher = influxdb

[dispatcher_influxdb]

timeout = 5

target = http://{YOUR_IP_HERE}:8086

db_name = yardstick

username = root

password = root

Now you can run Yardstick test cases and store the results in influxDB.
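
As a quick sanity check of the edited file, the dispatcher settings can be read back with Python's standard `configparser` module. This is only a sketch: Yardstick may parse its configuration with a different library, the helper name is hypothetical, and the address 127.0.0.1 replaces the {YOUR_IP_HERE} placeholder purely for illustration.

```python
import configparser

SAMPLE_CONF = """
[DEFAULT]
debug = True
dispatcher = influxdb

[dispatcher_influxdb]
timeout = 5
target = http://127.0.0.1:8086
db_name = yardstick
username = root
password = root
"""


def load_dispatcher(text):
    """Return the selected dispatcher and its connection settings."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    name = parser.get("DEFAULT", "dispatcher")
    section = "dispatcher_" + name  # e.g. [dispatcher_influxdb]
    return {
        "dispatcher": name,
        "target": parser.get(section, "target"),
        "db_name": parser.get(section, "db_name"),
        "timeout": parser.getint(section, "timeout"),
    }


print(load_dispatcher(SAMPLE_CONF))
```

To check the real file, read /etc/yardstick/yardstick.conf into a string and pass it to `load_dispatcher`.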

4.7. Deploy InfluxDB and Grafana directly in Ubuntu (Todo)¶

4.8. Yardstick common CLI¶

yardstick testcase list

This command lists all test cases in Yardstick. The output looks like this:

+---------------------------------------------------------------------------------------

| Testcase Name | Description

+---------------------------------------------------------------------------------------

| opnfv_yardstick_tc001 | Measure network throughput using pktgen

| opnfv_yardstick_tc002 | measure network latency using ping

| opnfv_yardstick_tc005 | Measure Storage IOPS, throughput and latency using fio.

| opnfv_yardstick_tc006 | Measure volume storage IOPS, throughput and latency using fio.

| opnfv_yardstick_tc008 | Measure network throughput and packet loss using Pktgen

| opnfv_yardstick_tc009 | Measure network throughput and packet loss using pktgen

| opnfv_yardstick_tc010 | measure memory read latency using lmbench.

| opnfv_yardstick_tc011 | Measure packet delay variation (jitter) using iperf3.

| opnfv_yardstick_tc012 | Measure memory read and write bandwidth using lmbench.

| opnfv_yardstick_tc014 | Measure Processing speed using unixbench.

| opnfv_yardstick_tc019 | Sample test case for the HA of controller node service.

...

+---------------------------------------------------------------------------------------

Take opnfv_yardstick_tc002 as an example. This test case measures network latency. Type yardstick testcase show opnfv_yardstick_tc002, and the console shows the config yaml of this test case:

    ##############################################################################
    # Copyright (c) 2017 kristian.hunt@gmail.com and others.
    #
    # All rights reserved. This program and the accompanying materials
    # are made available under the terms of the Apache License, Version 2.0
    # which accompanies this distribution, and is available at
    # http://www.apache.org/licenses/LICENSE-2.0
    ##############################################################################
    ---

    schema: "yardstick:task:0.1"
    description: >
        Yardstick TC002 config file;
        measure network latency using ping;

    {% set image = image or "cirros-0.3.5" %}

    {% set provider = provider or none %}
    {% set physical_network = physical_network or 'physnet1' %}
    {% set segmentation_id = segmentation_id or none %}
    {% set packetsize = packetsize or 100 %}

    scenarios:
    {% for i in range(2) %}
    -
      type: Ping
      options:
        packetsize: {{packetsize}}
      host: athena.demo
      target: ares.demo

      runner:
        type: Duration
        duration: 60
        interval: 10

      sla:
        max_rtt: 10
        action: monitor
    {% endfor %}

    context:
      name: demo
      image: {{image}}
      flavor: yardstick-flavor
      user: cirros

      placement_groups:
        pgrp1:
          policy: "availability"

      servers:
        athena:
          floating_ip: true
          placement: "pgrp1"
        ares:
          placement: "pgrp1"

      networks:
        test:
          cidr: '10.0.1.0/24'
          {% if provider == "vlan" %}
          provider: {{provider}}
          physical_network: {{physical_network}}
            {% if segmentation_id %}
          segmentation_id: {{segmentation_id}}
            {% endif %}
          {% endif %}

To run a test case, use yardstick task start <test_case_path>. This command supports the following parameters:

| Parameters | Detail |
|---|---|
| -d | Show the debug log of the Yardstick run. |
| --task-args | If you want to customize test case parameters, use “--task-args” to pass the value. The format is a json string with parameter key-value pairs. |
| --task-args-file | If you want to use yardstick env prepare command(or related API) to load the |
| --parse-only | |
| --output-file OUTPUT_FILE_PATH | Specify where to output the log. If not passed, the default value is “/tmp/yardstick/yardstick.log”. |
| --suite TEST_SUITE_PATH | Run a test suite. TEST_SUITE_PATH specifies where the test suite is located. |
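
Because the task arguments are passed as a JSON string, quoting them correctly on the command line is easy to get wrong. The sketch below builds the command with Python's `json` and `shlex` modules; the helper names are hypothetical, the test case path is taken from the suite example in this guide, and `packetsize` is the parameter used in the TC002 sample. The placement of the option after the path is an assumption of this sketch.

```python
import json
import shlex


def build_task_command(test_case_path, task_args=None):
    """Return the argument list for running one test case."""
    command = ["yardstick", "task", "start", test_case_path]
    if task_args:
        # Serialize the key-value pairs as a single JSON string argument.
        command += ["--task-args", json.dumps(task_args, sort_keys=True)]
    return command


def as_shell(command):
    """Render the argument list as a safely quoted shell command line."""
    return " ".join(shlex.quote(part) for part in command)


cmd = build_task_command(
    "tests/opnfv/test_cases/opnfv_yardstick_tc002.yaml",
    {"packetsize": 200},
)
print(as_shell(cmd))
```

The argument list can also be passed directly to `subprocess.run(cmd)`, which avoids shell quoting altogether.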

4.9. Run Yardstick in a local environment¶

We also have a guide on how to run Yardstick in a local environment. This work was contributed by Tapio Tallgren. You can find the guide here.

4.10. Create a test suite for Yardstick¶

A test suite in Yardstick is a yaml file which includes one or more test cases. Yardstick supports running a test suite as a task, so you can customize your own test suite and run it in one task.

tests/opnfv/test_suites is the folder where Yardstick puts CI test suites. A typical test suite looks like this (the fuel_test_suite.yaml example):

---

# Fuel integration test task suite

schema: "yardstick:suite:0.1"

name: "fuel_test_suite"

test_cases_dir: "samples/"

test_cases:

-

file_name: ping.yaml

-

file_name: iperf3.yaml

As you can see, there are two test cases in fuel_test_suite.yaml. The schema and the name must be specified. The test cases are listed under the test_cases tag, and their relative path is given by the test_cases_dir tag.
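The rules above can be sketched as a small check. This is an illustrative sketch only, not Yardstick's own loader: the suite is represented as an already-parsed dictionary, and the function name `resolve_case_paths` is hypothetical.

```python
import posixpath

# Keys the text above says every suite must provide.
REQUIRED_KEYS = ("schema", "name", "test_cases")


def resolve_case_paths(suite):
    """Validate a parsed suite and return the test case paths.

    Each file_name is joined with test_cases_dir, mirroring the
    relative-path rule described above.
    """
    missing = [key for key in REQUIRED_KEYS if key not in suite]
    if missing:
        raise ValueError("suite is missing keys: " + ", ".join(missing))
    base = suite.get("test_cases_dir", "")
    return [posixpath.join(base, case["file_name"]) for case in suite["test_cases"]]


fuel_suite = {
    "schema": "yardstick:suite:0.1",
    "name": "fuel_test_suite",
    "test_cases_dir": "samples/",
    "test_cases": [{"file_name": "ping.yaml"}, {"file_name": "iperf3.yaml"}],
}

if __name__ == "__main__":
    print(resolve_case_paths(fuel_suite))
```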

A Yardstick test suite also supports constraints and task arguments for each test case. Here is another sample (the os-nosdn-nofeature-ha.yaml example), excerpted from a larger test suite:

---

schema: "yardstick:suite:0.1"

name: "os-nosdn-nofeature-ha"

test_cases_dir: "tests/opnfv/test_cases/"

test_cases:

-

file_name: opnfv_yardstick_tc002.yaml

-

file_name: opnfv_yardstick_tc005.yaml

-

file_name: opnfv_yardstick_tc043.yaml

constraint:

installer: compass

pod: huawei-pod1

task_args:

huawei-pod1: '{"pod_info": "etc/yardstick/.../pod.yaml",

"host": "node4.LF","target": "node5.LF"}'

As you can see, test case opnfv_yardstick_tc043.yaml has two tags, constraint and task_args. constraint specifies the installer or pod on which the test case can run in the CI environment. task_args specifies the task arguments for each pod.
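To make the role of the two tags concrete, here is a hedged sketch of how a runner could filter test cases by constraint and pick the task arguments for the current pod. The matching rule (exact string equality, unconstrained cases always run) and the function name `select_cases` are assumptions for illustration; Yardstick's actual matching logic may differ.

```python
import json


def select_cases(suite, installer, pod):
    """Return (file_name, task_args) pairs runnable on this installer/pod."""
    selected = []
    for case in suite["test_cases"]:
        constraint = case.get("constraint", {})
        # A missing constraint key is treated as "no restriction" (assumption).
        if constraint.get("installer", installer) != installer:
            continue
        if constraint.get("pod", pod) != pod:
            continue
        # task_args maps a pod name to a JSON string of arguments.
        raw_args = case.get("task_args", {}).get(pod)
        args = json.loads(raw_args) if raw_args else {}
        selected.append((case["file_name"], args))
    return selected


suite = {
    "test_cases": [
        {"file_name": "opnfv_yardstick_tc002.yaml"},
        {
            "file_name": "opnfv_yardstick_tc043.yaml",
            "constraint": {"installer": "compass", "pod": "huawei-pod1"},
            "task_args": {"huawei-pod1": '{"host": "node4.LF", "target": "node5.LF"}'},
        },
    ]
}

if __name__ == "__main__":
    for name, args in select_cases(suite, "compass", "huawei-pod1"):
        print(name, args)
```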

All in all, to create a test suite in Yardstick, you just need to create a YAML file and add test cases, plus constraints or task arguments if necessary.

4.11. Proxy Support (Todo)¶

5. Installing a plug-in into Yardstick¶

5.1. Abstract¶

Yardstick provides a plugin CLI command to support integration with other OPNFV testing projects. Below is an example invocation of the Yardstick plugin command, using the Storperf plug-in as a sample.

5.2. Installing Storperf into Yardstick¶

Storperf is delivered as a Docker container from https://hub.docker.com/r/opnfv/storperf/tags/.

There are two possible methods for installation in your environment:

- Run container on Jump Host

- Run container in a VM

In this introduction we will install Storperf on Jump Host.

5.2.1. Step 0: Environment preparation¶

Requirements for running Storperf on the Jump Host:

- Docker must be installed

- Jump Host must have access to the OpenStack Controller API

- Jump Host must have internet connectivity for downloading docker image

- Enough floating IPs must be available to match your agent count

Before installing Storperf into Yardstick, check your OpenStack environment and other dependencies:

- Make sure Docker is installed.

- Make sure Keystone, Nova, Neutron, Glance and Heat are installed correctly.

- Make sure the Jump Host has access to the OpenStack Controller API.

- Make sure the Jump Host has internet connectivity for downloading the Docker image.

- You need to know where to get basic OpenStack Keystone authorization info, such as OS_PASSWORD, OS_TENANT_NAME, OS_AUTH_URL, OS_USERNAME.

- To run a Storperf container, you need to have OpenStack Controller environment variables defined and passed to the Storperf container. The best way to do this is to put the environment variables in a “storperf_admin-rc” file. The storperf_admin-rc file should include at least the following credential environment variables:

- OS_AUTH_URL

- OS_USERNAME

- OS_PASSWORD

- OS_TENANT_ID

- OS_TENANT_NAME

- OS_PROJECT_NAME

- OS_PROJECT_ID

- OS_USER_DOMAIN_ID

Yardstick has a “prepare_storperf_admin-rc.sh” script which can be used to generate the “storperf_admin-rc” file. This script is located at test/ci/prepare_storperf_admin-rc.sh:

#!/bin/bash

# Prepare storperf_admin-rc for StorPerf.

AUTH_URL=${OS_AUTH_URL}

USERNAME=${OS_USERNAME:-admin}

PASSWORD=${OS_PASSWORD:-console}

TENANT_NAME=${OS_TENANT_NAME:-admin}

TENANT_ID=`openstack project show admin|grep '\bid\b' |awk -F '|' '{print $3}'|sed -e 's/^[[:space:]]*//'`

PROJECT_NAME=${OS_PROJECT_NAME:-$TENANT_NAME}

PROJECT_ID=`openstack project show admin|grep '\bid\b' |awk -F '|' '{print $3}'|sed -e 's/^[[:space:]]*//'`

USER_DOMAIN_ID=${OS_USER_DOMAIN_ID:-default}

rm -f ~/storperf_admin-rc

touch ~/storperf_admin-rc

echo "OS_AUTH_URL="$AUTH_URL >> ~/storperf_admin-rc

echo "OS_USERNAME="$USERNAME >> ~/storperf_admin-rc

echo "OS_PASSWORD="$PASSWORD >> ~/storperf_admin-rc

echo "OS_PROJECT_NAME="$PROJECT_NAME >> ~/storperf_admin-rc

echo "OS_PROJECT_ID="$PROJECT_ID >> ~/storperf_admin-rc

echo "OS_TENANT_NAME="$TENANT_NAME >> ~/storperf_admin-rc

echo "OS_TENANT_ID="$TENANT_ID >> ~/storperf_admin-rc

echo "OS_USER_DOMAIN_ID="$USER_DOMAIN_ID >> ~/storperf_admin-rc

The generated “storperf_admin-rc” file is written to the home directory of the user who runs the script (~/storperf_admin-rc). If you installed Yardstick using Docker, this file will be located in the container. You may need to copy it to the root directory of the Storperf deployed host.
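Before handing the file to the Storperf container, it can be useful to confirm that every required variable is present and non-empty. The sketch below is not part of Yardstick; it assumes the simple KEY=VALUE layout that the script above writes, and the function names are hypothetical.

```python
# Variables listed above as the minimum content of storperf_admin-rc.
REQUIRED_VARS = (
    "OS_AUTH_URL", "OS_USERNAME", "OS_PASSWORD", "OS_TENANT_ID",
    "OS_TENANT_NAME", "OS_PROJECT_NAME", "OS_PROJECT_ID", "OS_USER_DOMAIN_ID",
)


def parse_rc(text):
    """Parse KEY=VALUE lines, ignoring blanks and comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip()
    return values


def missing_vars(text):
    """Return the required variables that are absent or empty."""
    values = parse_rc(text)
    return [name for name in REQUIRED_VARS if not values.get(name)]


if __name__ == "__main__":
    sample = "OS_AUTH_URL=http://192.168.23.51:5000/v3\nOS_USERNAME=admin\nOS_PASSWORD=\n"
    print(missing_vars(sample))
```

An empty value is reported as missing because the script writes a line even when the underlying lookup (for example the project ID query) returned nothing.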

5.2.2. Step 1: Plug-in configuration file preparation¶

To install a plug-in, first prepare a plug-in configuration file in YAML format and store it in the “plugin” directory. The plug-in configuration file serves as the input of the yardstick “plugin” command. Below is a sample Storperf plug-in configuration file:

---

# StorPerf plugin configuration file

# Used for integration StorPerf into Yardstick as a plugin

schema: "yardstick:plugin:0.1"

plugins:

name: storperf

deployment:

ip: 192.168.23.2

user: root

password: root

In the plug-in configuration file, you need to specify the plug-in name and the plug-in deployment info, including the node IP and the node login username and password. Here Storperf will be installed on IP 192.168.23.2, which is the Jump Host in my local environment.

5.2.3. Step 2: Plug-in install/remove scripts preparation¶

In the “yardstick/resource/scripts” directory, there are two folders: an “install” folder and a “remove” folder. You need to store the plug-in install and remove scripts in these two folders respectively.

The detailed installation and removal operations should be defined in these two scripts. The names of both the install and remove scripts should match the plug-in name that you specified in the plug-in configuration file.

For example, the install and remove scripts for Storperf are both named “storperf.bash”.
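The naming convention above can be expressed as a tiny helper. This is a sketch under the assumption that scripts always use the “.bash” extension shown in the Storperf example; the function name `plugin_script_path` is hypothetical and not part of Yardstick.

```python
import posixpath

SCRIPTS_DIR = "yardstick/resource/scripts"


def plugin_script_path(plugin_name, action):
    """Return the expected script path for a plug-in and an action.

    `action` must be "install" or "remove", matching the two folders.
    """
    if action not in ("install", "remove"):
        raise ValueError("action must be 'install' or 'remove'")
    return posixpath.join(SCRIPTS_DIR, action, plugin_name + ".bash")


if __name__ == "__main__":
    print(plugin_script_path("storperf", "install"))
    print(plugin_script_path("storperf", "remove"))
```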

5.2.4. Step 3: Install and remove Storperf¶

To install Storperf, simply execute the following command:

# Install Storperf

yardstick plugin install plugin/storperf.yaml

5.2.4.1. Removing Storperf from Yardstick¶

To remove Storperf, simply execute the following command:

# Remove Storperf

yardstick plugin remove plugin/storperf.yaml

The yardstick plugin command uses the username and password to log into the deployment target and then executes the corresponding install or remove script.

6. Store Other Project’s Test Results in InfluxDB¶

6.1. Abstract¶

This chapter illustrates how to run plug-in test cases and store test results into community’s InfluxDB. The framework is shown in Framework.

6.2. Store Storperf Test Results into Community’s InfluxDB¶

As shown in Framework, there are two ways to store Storperf test results into community’s InfluxDB:

- Yardstick executes Storperf test case (TC074), posting test job to Storperf container via ReST API. After the test job is completed, Yardstick reads test results via ReST API from Storperf and posts test data to the influxDB.

- Additionally, Storperf can run tests by itself and post the test result directly to the InfluxDB. The method for posting data directly to influxDB will be supported in the future.

Our plan is to support rest-api in D release so that other testing projects can call the rest-api to use yardstick dispatcher service to push data to yardstick’s influxdb database.

For now, InfluxDB only supports the line protocol; the JSON protocol is deprecated.

Taking the ping test case as an example, the raw_result is in JSON format like this:

{

"benchmark": {

"timestamp": 1470315409.868095,

"errors": "",

"data": {

"rtt": {

"ares": 1.125

}

},

"sequence": 1

},

"runner_id": 2625

}

With the help of “influxdb_line_protocol”, the JSON is transformed into a line string like the one below:

'ping,deploy_scenario=unknown,host=athena.demo,installer=unknown,pod_name=unknown,

runner_id=2625,scenarios=Ping,target=ares.demo,task_id=77755f38-1f6a-4667-a7f3-

301c99963656,version=unknown rtt.ares=1.125 1470315409868094976'

So, for data output in JSON format, you just need to transform the JSON into line format and call the InfluxDB API to post the data into the database. All of this functionality has been implemented in Influxdb. If you need support on this, please contact Mingjiang.

curl -i -XPOST 'http://104.197.68.199:8086/write?db=yardstick' --data-binary 'ping,deploy_scenario=unknown,host=athena.demo,installer=unknown, ...'
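The JSON-to-line transformation can be sketched in a few lines. This is a simplified illustration, not the code Yardstick uses: the function names are hypothetical, only numeric field values are handled, and the escaping of spaces and commas that the line protocol requires is omitted.

```python
def flatten(data, prefix=""):
    """Flatten nested dicts into dotted field names, e.g. rtt.ares."""
    fields = {}
    for key, value in data.items():
        name = prefix + key
        if isinstance(value, dict):
            fields.update(flatten(value, name + "."))
        else:
            fields[name] = value
    return fields


def to_line(measurement, tags, record):
    """Build one line: measurement,tags fields timestamp_in_ns."""
    benchmark = record["benchmark"]
    all_tags = dict(tags, runner_id=record["runner_id"])
    # Tags are written in sorted key order.
    tag_part = ",".join("{}={}".format(k, all_tags[k]) for k in sorted(all_tags))
    fields = flatten(benchmark["data"])
    field_part = ",".join("{}={}".format(k, fields[k]) for k in sorted(fields))
    # Seconds (float) to integer nanoseconds.
    timestamp_ns = int(benchmark["timestamp"] * 1e9)
    return "{},{} {} {}".format(measurement, tag_part, field_part, timestamp_ns)


record = {
    "benchmark": {
        "timestamp": 1470315409.868095,
        "errors": "",
        "data": {"rtt": {"ares": 1.125}},
        "sequence": 1,
    },
    "runner_id": 2625,
}

if __name__ == "__main__":
    print(to_line("ping", {"host": "athena.demo", "target": "ares.demo"}, record))
```

The resulting string is what would be sent as the request body of the write call shown above.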

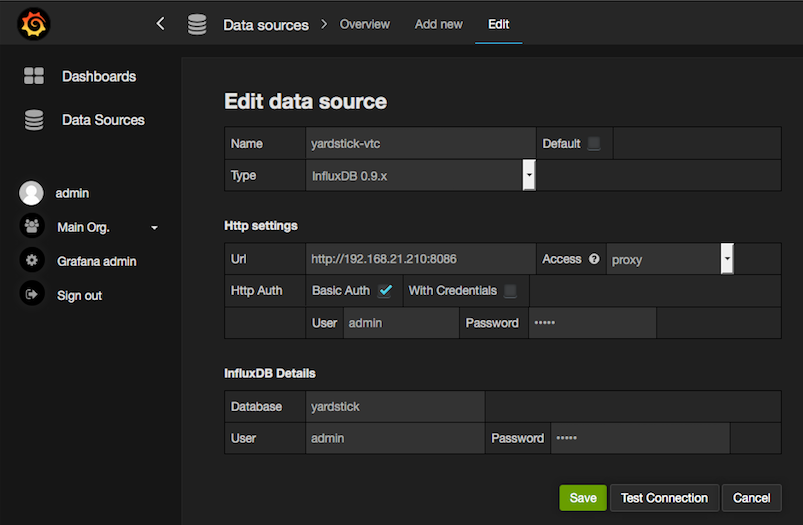

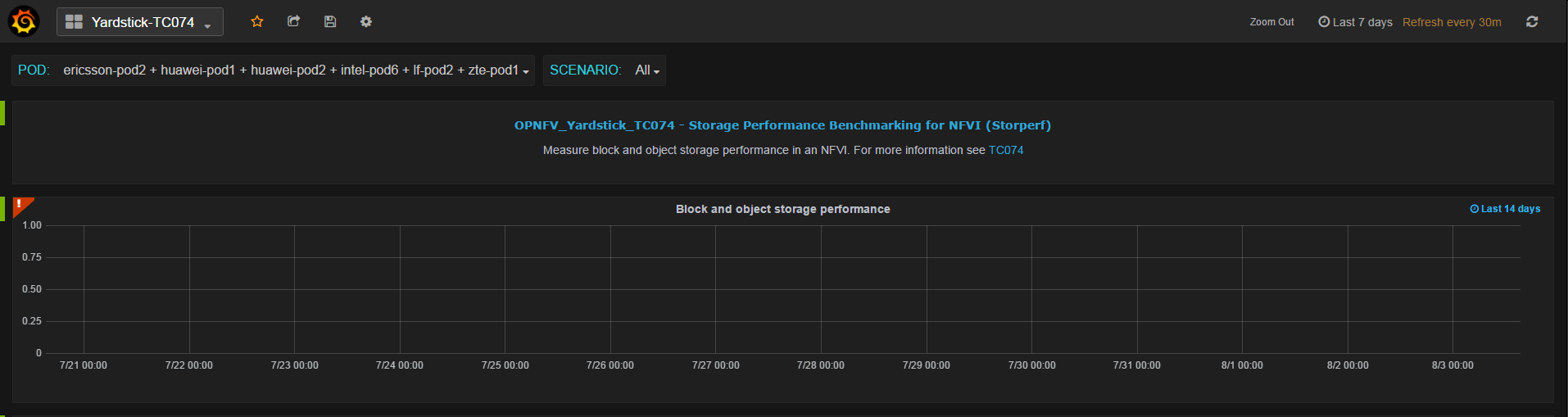

Grafana will be used for visualizing the collected test data, which is shown in Visual. Grafana can be accessed by Login.

7. Grafana dashboard¶

7.1. Abstract¶

This chapter describes the Yardstick grafana dashboard. The Yardstick grafana dashboard can be found here: http://testresults.opnfv.org/grafana/

7.2. Public access¶

Yardstick provides a public account for accessing the dashboard. The username and password are both set to ‘opnfv’.

7.3. Testcase dashboard¶

For each test case, there is a dedicated dashboard. Shown here is the dashboard of TC002.

On the top left of each test case dashboard there is a dashboard selection. You can switch to a different test case using this pull-down menu.

Underneath, we have a pod and scenario selection. All the pods and scenarios that have ever published test data to the InfluxDB will be shown here.

You can check multiple pods or scenarios.

For each test case, we have a short description and a link to detailed test case information in Yardstick user guide.

Underneath is the result presentation section. You can use the time period selection in the top right corner to zoom in or out of the chart.

7.4. Administration access¶

For a user with administration rights it is easy to update and save any dashboard configuration. Saved updates immediately take effect and become live. This may cause issues like:

- Changes and updates made to the live configuration in Grafana can compromise existing Grafana content in an unwanted, unpredicted or incompatible way. Grafana as such is not version controlled, there exists one single Grafana configuration per dashboard.

- There is a risk several people can disturb each other when doing updates to the same Grafana dashboard at the same time.

Administrators should therefore make any change with care.

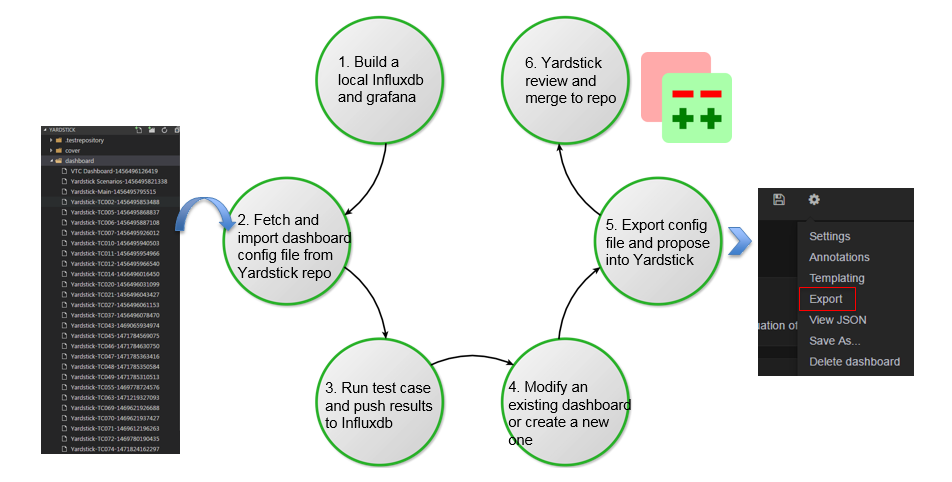

7.5. Add a dashboard into yardstick grafana¶

For security reasons, users of the public opnfv account cannot edit the Yardstick Grafana directly. It takes a few more steps for a non-Yardstick user to add a custom dashboard to the Yardstick Grafana.

There are 6 steps:

- Build a local InfluxDB and Grafana so you can do the work locally. Refer to the How to deploy InfluxDB and Grafana locally wiki page for instructions.

- Once step one is done, fetch the existing Grafana dashboard configuration file from the Yardstick repository and import it into your local Grafana. After the import is done, your Grafana dashboard will be ready to use just like the community’s dashboard.

- The third step is to run some test cases to generate test results and publish them to your local InfluxDB.

- Now you have some data to visualize in your dashboard. In the fourth step, it is time to create your own dashboard. You can either modify an existing dashboard or create a new one from scratch. If you choose to modify an existing dashboard, then in the curtain menu of the existing dashboard do a “Save As...” into a new dashboard copy instance, and then continue doing all updates and saves within the dashboard copy.

- When finished with all Grafana configuration changes in this temporary dashboard, choose “export” to save the updated dashboard copy as a JSON file and put it up for review in Gerrit, in file /yardstick/dashboard/Yardstick-TCxxx-yyyyyyyyyyyyy. For instance, a typical default name of the file would be “Yardstick-TC001 Copy-1234567891234”.

- Once you finish your dashboard, the next step is to export the configuration file and propose a patch to Yardstick. The Yardstick team will review and merge it into the Yardstick repository. After the review is approved, the Yardstick team will do an “import” of the JSON file and also a “save dashboard” as soon as possible to replace the old live dashboard configuration.

8. Yardstick Restful API¶

8.1. Abstract¶

Yardstick has supported a RESTful API since Danube.

8.2. Available API¶

8.2.1. /yardstick/env/action¶

Description: This API is used to prepare Yardstick test environment. For Euphrates, it supports:

- Prepare yardstick test environment, including set external network environment variable, load Yardstick VM images and create flavors;

- Start an InfluxDB Docker container and config Yardstick output to InfluxDB;

- Start a Grafana Docker container and config it with the InfluxDB.

Which API to call will depend on the parameters.

Method: POST

Prepare Yardstick test environment Example:

{

'action': 'prepareYardstickEnv'

}

This is an asynchronous API. You need to call /yardstick/asynctask API to get the task result.

Start and config an InfluxDB docker container Example:

{

'action': 'createInfluxDBContainer'

}

This is an asynchronous API. You need to call /yardstick/asynctask API to get the task result.

Start and config a Grafana docker container Example:

{

'action': 'createGrafanaContainer'

}

This is an asynchronous API. You need to call /yardstick/asynctask API to get the task result.
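As an illustration of how one of these actions could be sent from a script, the sketch below builds the POST request with Python's standard library. The base URL `http://localhost:8888` is taken from the other examples in this chapter; sending the body as JSON with an `application/json` content type is an assumption, and the helper name is hypothetical.

```python
import json
from urllib.request import Request


def env_action_request(action, base_url="http://localhost:8888"):
    """Build (but do not send) a POST request for /yardstick/env/action."""
    body = json.dumps({"action": action}).encode("utf-8")
    return Request(
        base_url.rstrip("/") + "/yardstick/env/action",
        data=body,
        headers={"Content-Type": "application/json"},  # assumed content type
        method="POST",
    )


if __name__ == "__main__":
    req = env_action_request("prepareYardstickEnv")
    print(req.get_method(), req.full_url, req.data.decode("utf-8"))
    # To actually send it: urllib.request.urlopen(req)
```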

8.2.2. /yardstick/asynctask¶

Description: This API is used to get the status of asynchronous tasks

Method: GET

Get the status of asynchronous tasks Example:

http://localhost:8888/yardstick/asynctask?task_id=3f3f5e03-972a-4847-a5f8-154f1b31db8c

The returned status will be 0(running), 1(finished) and 2(failed).
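A client typically polls this endpoint until the status leaves the running state. The following is a minimal sketch of such a loop. Because the exact shape of the response body is not described here, the status lookup is injected as a callable (`fetch_status`, hypothetical) that returns the integer status code; all function names are illustrative.

```python
import time
from urllib.parse import urlencode

# Status codes as documented above.
STATUS_NAMES = {0: "running", 1: "finished", 2: "failed"}


def asynctask_url(base_url, task_id):
    """Build the GET URL for a given task ID."""
    query = urlencode({"task_id": task_id})
    return "{}/yardstick/asynctask?{}".format(base_url.rstrip("/"), query)


def wait_for_task(fetch_status, interval=1.0, max_polls=60, sleep=time.sleep):
    """Poll until the task is no longer running or max_polls is reached."""
    for _ in range(max_polls):
        status = fetch_status()
        if status != 0:
            return STATUS_NAMES.get(status, "unknown")
        sleep(interval)
    return "timeout"


if __name__ == "__main__":
    print(asynctask_url("http://localhost:8888", "3f3f5e03-972a-4847-a5f8-154f1b31db8c"))
    fake = iter([0, 0, 1])  # stand-in for real HTTP calls
    print(wait_for_task(lambda: next(fake), interval=0))
```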

8.2.3. /yardstick/testcases¶

Description: This API is used to list all released Yardstick test cases.

Method: GET

Get a list of released test cases Example:

http://localhost:8888/yardstick/testcases

8.2.4. /yardstick/testcases/release/action¶

Description: This API is used to run a Yardstick released test case.

Method: POST

Run a released test case Example:

{

'action': 'runTestCase',

'args': {

'opts': {},

'testcase': 'tc002'

}

}

This is an asynchronous API. You need to call /yardstick/results to get the result.

8.2.5. /yardstick/testcases/samples/action¶

Description: This API is used to run a Yardstick sample test case.

Method: POST

Run a sample test case Example:

{

'action': 'runTestCase',

'args': {

'opts': {},

'testcase': 'ping'

}

}

This is an asynchronous API. You need to call /yardstick/results to get the result.

8.2.6. /yardstick/testcases/<testcase_name>/docs¶

Description: This API is used to get the documentation of a certain released test case.

Method: GET

Get the documentation of a certain test case Example:

http://localhost:8888/yardstick/testcases/opnfv_yardstick_tc002/docs

8.2.7. /yardstick/testsuites/action¶

Description: This API is used to run a Yardstick test suite.

Method: POST

Run a test suite Example:

{

'action': 'runTestSuite',

'args': {

'opts': {},

'testcase': 'smoke'

}

}

This is an asynchronous API. You need to call /yardstick/results to get the result.

/yardstick/tasks/<task_id>/log

Description: This API is used to get the real time log of test case execution.

Method: GET

Get real time log of test case execution Example:

http://localhost:8888/yardstick/tasks/14795be8-f144-4f54-81ce-43f4e3eab33f/log?index=0

8.2.8. /yardstick/results¶

Description: This API is used to get the test results of tasks. If you call /yardstick/testcases/samples/action API, it will return a task id. You can use the returned task id to get the results by using this API.

Method: GET

Get test results of one task Example:

http://localhost:8888/yardstick/results?task_id=3f3f5e03-972a-4847-a5f8-154f1b31db8c

This API will return a list of test case results.

/api/v2/yardstick/openrcs/action

Description: This API provides functionality of handling OpenStack credential file (openrc). For Euphrates, it supports:

- Upload an openrc file for an OpenStack environment;

- Update an openrc file;

- Get openrc file information;

- Delete an openrc file.

Which API to call will depend on the parameters.

METHOD: POST

Upload an openrc file for an OpenStack environment Example:

{

'action': 'upload_openrc',

'args': {

'file': file,

'environment_id': environment_id

}

}

METHOD: POST

Update an openrc file Example:

{

'action': 'update_openrc',

'args': {

'openrc': {

"EXTERNAL_NETWORK": "ext-net",

"OS_AUTH_URL": "http://192.168.23.51:5000/v3",

"OS_IDENTITY_API_VERSION": "3",

"OS_IMAGE_API_VERSION": "2",

"OS_PASSWORD": "console",

"OS_PROJECT_DOMAIN_NAME": "default",

"OS_PROJECT_NAME": "admin",

"OS_TENANT_NAME": "admin",

"OS_USERNAME": "admin",

"OS_USER_DOMAIN_NAME": "default"

},

'environment_id': environment_id

}

}

METHOD: GET

Get openrc file information Example:

http://localhost:8888/api/v2/yardstick/openrcs/5g6g3e02-155a-4847-a5f8-154f1b31db8c

METHOD: DELETE

Delete openrc file Example:

http://localhost:8888/api/v2/yardstick/openrcs/5g6g3e02-155a-4847-a5f8-154f1b31db8c

/api/v2/yardstick/pods/action

Description: This API provides functionality of handling Yardstick pod file (pod.yaml). For Euphrates, it supports:

- Upload a pod file;

- Get pod file information;

- Delete a pod file.

Which API to call will depend on the parameters.

METHOD: POST

Upload a pod.yaml file Example:

{

'action': 'upload_pod_file',

'args': {

'file': file,

'environment_id': environment_id

}

}

METHOD: GET

Get pod file information Example:

http://localhost:8888/api/v2/yardstick/pods/5g6g3e02-155a-4847-a5f8-154f1b31db8c

METHOD: DELETE

Delete pod file Example:

http://localhost:8888/api/v2/yardstick/pods/5g6g3e02-155a-4847-a5f8-154f1b31db8c

/api/v2/yardstick/images/action

Description: This API is used to do some work related to Yardstick VM images. For Euphrates, it supports:

- Load Yardstick VM images;

- Get image’s information;

- Delete images.

Which API to call will depend on the parameters.

METHOD: POST

Load VM images Example:

{

'action': 'load_images'

}

METHOD: GET

Get image information Example:

http://localhost:8888/api/v2/yardstick/images/5g6g3e02-155a-4847-a5f8-154f1b31db8c

METHOD: DELETE

Delete images Example:

http://localhost:8888/api/v2/yardstick/images/5g6g3e02-155a-4847-a5f8-154f1b31db8c

/api/v2/yardstick/tasks/action

Description: This API is used to do some work related to yardstick tasks. For Euphrates, it supports:

- Create a Yardstick task;

- Run a Yardstick task;

- Add a test case to a task;

- Add a test suite to a task;

- Get a task’s information;

- Delete a task.

Which API to call will depend on the parameters.

METHOD: POST

Create a Yardstick task Example:

{

'action': 'create_task',

'args': {

'name': 'task1',

'project_id': project_id

}

}

METHOD: PUT

Run a task Example:

{

'action': 'run'

}

METHOD: PUT

Add a test case to a task Example:

{

'action': 'add_case',

'args': {

'case_name': 'opnfv_yardstick_tc002',

'case_content': case_content

}

}

METHOD: PUT

Add a test suite to a task Example:

{

'action': 'add_suite',

'args': {

'suite_name': 'opnfv_smoke',

'suite_content': suite_content

}

}

METHOD: GET

Get a task’s information Example:

http://localhost:8888/api/v2/yardstick/tasks/5g6g3e02-155a-4847-a5f8-154f1b31db8c

METHOD: DELETE

Delete a task Example:

http://localhost:8888/api/v2/yardstick/tasks/5g6g3e02-155a-4847-a5f8-154f1b31db8c
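The task operations above form a natural sequence: create, add a case, run, inspect, delete. The sketch below lists that sequence as (method, URL, body) tuples without sending anything. It assumes that the PUT requests target the same per-task URL as the GET and DELETE examples, which is not stated explicitly here, and that the task ID would in practice come from the response to the create call. The function name is hypothetical.

```python
def task_workflow(base_url, task_id, project_id, case_name, case_content):
    """Return the request sequence for a typical task lifecycle."""
    collection = base_url.rstrip("/") + "/api/v2/yardstick/tasks"
    item = collection + "/" + task_id  # assumed target for PUT requests
    return [
        ("POST", collection + "/action",
         {"action": "create_task", "args": {"name": "task1", "project_id": project_id}}),
        ("PUT", item,
         {"action": "add_case", "args": {"case_name": case_name, "case_content": case_content}}),
        ("PUT", item, {"action": "run"}),
        ("GET", item, None),
        ("DELETE", item, None),
    ]


if __name__ == "__main__":
    steps = task_workflow(
        "http://localhost:8888",
        "5g6g3e02-155a-4847-a5f8-154f1b31db8c",
        "example-project-id",
        "opnfv_yardstick_tc002",
        "<contents of the test case file>",
    )
    for method, url, body in steps:
        print(method, url, body)
```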

/api/v2/yardstick/testcases/action

Description: This API is used to do some work related to yardstick testcases. For Euphrates, it supports:

- Upload a test case;

- Get all released test cases’ information;

- Get a certain released test case’s information;

- Delete a test case.

Which API to call will depend on the parameters.

METHOD: POST

Upload a test case Example:

{

'action': 'upload_case',

'args': {

'file': file

}

}

METHOD: GET

Get all released test cases’ information Example:

http://localhost:8888/api/v2/yardstick/testcases

METHOD: GET

Get a certain released test case’s information Example:

http://localhost:8888/api/v2/yardstick/testcases/opnfv_yardstick_tc002

METHOD: DELETE

Delete a certain test case Example:

http://localhost:8888/api/v2/yardstick/testcases/opnfv_yardstick_tc002

/api/v2/yardstick/testsuites/action

Description: This API is used to do some work related to yardstick test suites. For Euphrates, it supports:

- Create a test suite;

- Get a certain test suite’s information;

- Get all test suites;

- Delete a test suite.

Which API to call will depend on the parameters.

METHOD: POST

Create a test suite Example:

{

'action': 'create_suite',

'args': {

'name': <suite_name>,

'testcases': [

'opnfv_yardstick_tc002'

]

}

}

METHOD: GET

Get a certain test suite’s information Example:

http://localhost:8888/api/v2/yardstick/testsuites/<suite_name>

METHOD: GET

Get all test suites Example:

http://localhost:8888/api/v2/yardstick/testsuites

METHOD: DELETE

Delete a certain test suite Example:

http://localhost:8888/api/v2/yardstick/testsuites/<suite_name>

/api/v2/yardstick/projects/action

Description: This API is used to do some work related to yardstick test projects. For Euphrates, it supports:

- Create a Yardstick project;

- Get a certain project’s information;

- Get all projects;

- Delete a project.

Which API to call will depend on the parameters.

METHOD: POST

Create a Yardstick project Example:

{

'action': 'create_project',

'args': {

'name': 'project1'

}

}

METHOD: GET

Get a certain project’s information Example:

http://localhost:8888/api/v2/yardstick/projects/<project_id>

METHOD: GET

Get all projects’ information Example:

http://localhost:8888/api/v2/yardstick/projects

METHOD: DELETE

Delete a certain project Example:

http://localhost:8888/api/v2/yardstick/projects/<project_id>

/api/v2/yardstick/containers/action

Description: This API is used to do some work related to Docker containers. For Euphrates, it supports:

- Create a Grafana Docker container;

- Create an InfluxDB Docker container;

- Get a certain container’s information;

- Delete a container.

Which API to call will depend on the parameters.

METHOD: POST

Create a Grafana Docker container Example:

{

'action': 'create_grafana',

'args': {

'environment_id': <environment_id>

}

}

METHOD: POST

Create an InfluxDB Docker container Example:

{

'action': 'create_influxdb',

'args': {

'environment_id': <environment_id>

}

}

METHOD: GET

Get a certain container’s information Example:

http://localhost:8888/api/v2/yardstick/containers/<container_id>

METHOD: DELETE

Delete a certain container Example:

http://localhost:8888/api/v2/yardstick/containers/<container_id>

9. Yardstick User Interface¶

This interface lets a user view the test results in table format and also as values plotted on a graph.

9.1. Command¶

yardstick report generate <task-ID> <testcase-filename>

9.2. Description¶

1. When the command is triggered, the task ID and the test case name are used to retrieve the respective values from the database (InfluxDB in this particular case).

2. The values are then formatted and passed to the HTML template, which is framed with a complete HTML body using the Django framework.

3. The whole template is then written into an HTML file.

The graph plots the timestamp on the x-axis and the output values (which differ from test case to test case) on the y-axis with the help of “Highcharts”.

10. Virtual Traffic Classifier¶

10.1. Abstract¶

This chapter provides an overview of the virtual Traffic Classifier, a contribution to OPNFV Yardstick from the EU Project TNOVA. Additional documentation is available in TNOVAresults.

10.2. Overview¶

The virtual Traffic Classifier (VTC) VNF comprises a Virtual Network Function Component (VNFC). The VNFC contains both the Traffic Inspection module and the Traffic Forwarding module needed to run the VNF. The use of Deep Packet Inspection (DPI) methods for traffic classification is built around two basic assumptions:

- third parties unaffiliated with either source or recipient are able to inspect each IP packet’s payload

- the classifier knows the relevant syntax of each application’s packet payloads (protocol signatures, data patterns, etc.).

The proposed DPI-based approach uses only a small, indicative number of the initial packets from each flow to identify the content, rather than inspecting every packet.

In this respect it follows the Packet Based per Flow State (PBFS) method. This method uses a table to track each session based on the 5-tuple (src address, dest address, src port, dest port, transport protocol) that is maintained for each flow.
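The per-flow tracking described above can be illustrated with a small flow table keyed by the 5-tuple. This is a conceptual sketch only and is not the VTC implementation (which builds on nDPI and libpcap): the class, the `classify` callback and the inspection limit of five packets are all assumptions chosen for illustration.

```python
from collections import namedtuple

# The 5-tuple that identifies a flow.
FlowKey = namedtuple("FlowKey", "src_addr dst_addr src_port dst_port protocol")


class FlowTable:
    """Track flows and inspect only the first few packets of each."""

    def __init__(self, classify, max_inspected=5):
        self.classify = classify          # payload -> label or None
        self.max_inspected = max_inspected
        self.flows = {}

    def process(self, key, payload):
        """Return the flow's label, inspecting the payload only if needed."""
        state = self.flows.setdefault(key, {"seen": 0, "label": None})
        if state["label"] is None and state["seen"] < self.max_inspected:
            state["seen"] += 1
            state["label"] = self.classify(payload)
        return state["label"]


def toy_classifier(payload):
    """Hypothetical signature match: label HTTP GET requests."""
    return "http" if payload.startswith(b"GET ") else None


if __name__ == "__main__":
    table = FlowTable(toy_classifier)
    key = FlowKey("10.0.1.2", "10.0.1.3", 40000, 80, "tcp")
    print(table.process(key, b"\x00\x01"))
    print(table.process(key, b"GET / HTTP/1.1"))
    print(table.process(key, b"more data"))
```

Once a flow is labelled, later packets are answered from the table without touching the payload, which is what keeps the inspection cost bounded per flow.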

10.3. Concepts¶

- Traffic Inspection: The process of packet analysis and application identification of network traffic that passes through the VTC.

- Traffic Forwarding: The process of packet forwarding from an incoming network interface to a pre-defined outgoing network interface.

- Traffic Rule Application: The process of packet tagging, based on a predefined set of rules. Packet tagging may include e.g. Type of Service (ToS) field modification.

10.4. Architecture¶

The Traffic Inspection module is the most computationally intensive component of the VNF. It implements filtering and packet matching algorithms in order to support the enhanced traffic forwarding capability of the VNF. The component supports a flow table (exploiting hashing algorithms for fast indexing of flows) and an inspection engine for traffic classification.

The implementation used for these experiments exploits the nDPI library. The packet capturing mechanism is implemented using libpcap. When the DPI engine identifies a new flow, the flow register is updated with the appropriate information and transmitted across the Traffic Forwarding module, which then applies any required policy updates.

The Traffic Forwarding module is responsible for routing and packet forwarding. It accepts incoming network traffic, consults the flow table for classification information for each incoming flow and then applies pre-defined policies, e.g. marking the ToS/Differentiated Services Code Point (DSCP) of multimedia traffic for Quality of Service (QoS) enablement on the forwarded traffic. It is assumed that the traffic is forwarded using the default policy until it is identified and new policies are enforced.

The expected response delay is considered to be negligible, as only a small number of packets are required to identify each flow.
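The policy step can be pictured as a lookup from classification label to DSCP value, with a default applied until the flow is identified. The sketch below is illustrative and not taken from the VTC code. The label names and the choice of classes are assumptions; the numeric values are the standard EF (46) and AF41 (34) code points, and the DSCP occupies the upper six bits of the ToS byte.

```python
DEFAULT_DSCP = 0  # best effort, used until a flow is identified

# Hypothetical policy table: classification label -> DSCP code point.
POLICY_DSCP = {
    "voice": 46,  # EF
    "video": 34,  # AF41
}


def tos_byte(label):
    """Return the ToS byte to write for a flow with the given label.

    Unknown or not-yet-classified flows (label None) get the default.
    """
    dscp = POLICY_DSCP.get(label, DEFAULT_DSCP)
    return dscp << 2  # DSCP sits in the top 6 bits of the ToS byte


if __name__ == "__main__":
    for label in (None, "voice", "video"):
        print(label, hex(tos_byte(label)))
```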

10.5. Graphical Overview¶

+----------------------------+
|                            |
| Virtual Traffic Classifier |
|                            |
|     Analysing/Forwarding   |
|        ------------>       |
|     ethA          ethB     |
|                            |
+----------------------------+
     |              ^
     |              |
     v              |
+----------------------------+
|                            |
|     Virtual Switch         |
|                            |
+----------------------------+

10.6. Install¶

Run vTC/build.sh with root privileges.

10.7. Run¶

sudo ./pfbridge -a eth1 -b eth2

Note

Virtual Traffic Classifier is not supported in the OPNFV Danube release.

10.8. Development Environment¶

Ubuntu 14.04, Ubuntu 16.04

11. Network Services Benchmarking (NSB)¶

11.1. Abstract¶

This chapter provides an overview of the NSB, a contribution to OPNFV Yardstick from Intel.

11.2. Overview¶

The goal of NSB is to extend Yardstick to perform characterization and benchmarking of real-world VNFs and NFVi with repeatable and deterministic methods.

The Network Service Benchmarking (NSB) extends the Yardstick framework to do VNF characterization and benchmarking in three different execution environments: bare metal (i.e. a native Linux environment), a standalone virtual environment and a managed virtualized environment (e.g. OpenStack). It also brings in the capability to interact with external traffic generators, both hardware and software based, for triggering and validating the traffic according to user-defined profiles.

NSB extension includes:

- Generic data models of Network Services, based on ETSI spec ETSI GS NFV-TST 001

- New Standalone context for VNF testing like SRIOV, OVS, OVS-DPDK etc

- Generic VNF configuration models and metrics implemented with Python classes

- Traffic generator features and traffic profiles

  - L1-L3 stateless traffic profiles

  - L4-L7 stateful traffic profiles

  - Tunneling protocol / network overlay support

- Test case samples

  - Ping

  - Trex

  - vPE, vCGNAT, vFirewall etc - ipv4 throughput, latency etc

- Traffic generators like Trex, ab/nginx, ixia, iperf etc

- KPIs for a given use case:

  - System agent support for collecting NFVi KPI. This includes:

    - CPU statistic

    - Memory BW

    - OVS-DPDK Stats

  - Network KPIs, e.g., inpackets, outpackets, throughput, latency etc

  - VNF KPIs, e.g., packet_in, packet_drop, packet_fwd etc

11.3. Architecture¶

The Network Service (NS) defines a set of Virtual Network Functions (VNF) connected together using NFV infrastructure.

The Yardstick NSB extension can support multiple VNFs created by different vendors, including traffic generators. Every VNF being tested has its own data model. The Network Service defines VNF modelling on the basis of the network functionality performed. Part of the data model is a set of configuration parameters, the number of connection points used, and the flavor, including core and memory amount.

ETSI defines a Network Service as a set of configurable VNFs working in some NFV Infrastructure and connected to each other using Virtual Links available through Connection Points. The ETSI MANO specification defines a set of management entities called Network Service Descriptors (NSD) and VNF Descriptors (VNFD) that define a real Network Service. The picture below shows an example of how a real Network Operator use case can map onto the ETSI Network Service definition.

Network Service framework performs the necessary test steps. It may involve

- Interacting with traffic generator and providing the inputs on traffic type / packet structure to generate the required traffic as per the test case. Traffic profiles will be used for this.

- Executing the commands required for the test procedure and analysing the command output to confirm whether the command was executed correctly, e.g. as per the test case, run the traffic for the given time period or wait for the necessary time delay

- Verify the test result.

- Validate the traffic flow from SUT

- Fetch the table / data from SUT and verify the value as per the test case

- Upload the logs from SUT onto the Test Harness server

- Read the KPI’s provided by particular VNF

11.3.1. Components of Network Service¶

- Models for Network Service benchmarking: Network Service benchmarking requires a proper modelling approach. The NSB provides models using Python files and definitions of NSDs and VNFDs.

The benchmark control application, being a part of OPNFV Yardstick, can call these Python models to instantiate and configure the VNFs. Depending on the infrastructure type (bare-metal or fully virtualized), these calls can be made directly or through a MANO system.

- Traffic generators in NSB: Any benchmark application requires a set of traffic generators and traffic profiles defining the method by which traffic is generated.

The Network Service benchmarking model extends the Network Service definition with a set of Traffic Generators (TG) that are treated the same way as the other VNFs that are part of the benchmarked network service. Like other VNFs, the traffic generators are instantiated and terminated.

Every traffic generator has its own configuration, defined as a traffic profile, and a set of supported KPIs. The Python models for TGs are extended with specific calls to listen for and generate traffic.

- The stateless TREX traffic generator: The main traffic generator used as the Network Service stimulus is the open source TREX tool.

The TREX tool can generate any kind of stateless traffic.
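The modelling idea described above, where a traffic generator shares the lifecycle of any other VNF and adds traffic-specific calls, can be sketched roughly as follows. All class and method names here are hypothetical and chosen for illustration only; they are not taken from the Yardstick code base.

```python
from abc import ABC, abstractmethod


class NetworkFunction(ABC):
    """Hypothetical base model: every node of the network service has a lifecycle."""

    def __init__(self, name):
        self.name = name
        self.running = False

    def instantiate(self):
        # A real model would deploy and configure the function here.
        self.running = True

    def terminate(self):
        self.running = False

    @abstractmethod
    def collect_kpi(self):
        """Return a dict mapping KPI names to values."""


class TrafficGenerator(NetworkFunction):
    """Modelled like a VNF, extended with calls to generate and listen for traffic."""

    def __init__(self, name, traffic_profile):
        super().__init__(name)
        self.traffic_profile = traffic_profile  # hypothetical profile dict
        self.tx_packets = 0
        self.rx_packets = 0

    def _require_running(self):
        if not self.running:
            raise RuntimeError("instantiate() must be called first")

    def run_traffic(self, packets):
        # Placeholder: only counts packets instead of driving a generator.
        self._require_running()
        self.tx_packets += packets

    def listen_traffic(self, packets):
        # Placeholder: records packets seen on the receive side.
        self._require_running()
        self.rx_packets += packets

    def collect_kpi(self):
        return {"tx_packets": self.tx_packets, "rx_packets": self.rx_packets}
```

In this sketch a benchmark controller could treat every node uniformly (instantiate, collect KPIs, terminate) and call the traffic methods only on the generator nodes.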

+--------+      +-------+      +--------+
|        |      |       |      |        |
|  Trex  | ---> |  VNF  | ---> |  Trex  |
|        |      |       |      |        |
+--------+      +-------+      +--------+

Supported testcases scenarios:

Correlated UDP traffic using the TREX traffic generator and the replay VNF:

- Using different IMIX configurations, such as pure voice or pure video traffic

- Using different numbers of IP flows, such as 1 flow, 1K, 16K, 64K, 256K, 1M flows

- Using different numbers of configured rules, such as 1 rule, 1K, 10K rules
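As a rough illustration of how these parameters multiply into a test matrix, the sketch below enumerates every combination of the values named in the list. The variable and function names are hypothetical, and the values are kept as labels because the exact numeric meaning of "1K", "16K" and so on is left to the individual test case.

```python
from itertools import product

# Labels copied from the scenario list above; names are illustrative only.
IMIX_PROFILES = ["pure voice", "pure video"]
FLOW_COUNTS = ["1", "1K", "16K", "64K", "256K", "1M"]
RULE_COUNTS = ["1", "1K", "10K"]


def scenario_matrix(imix=IMIX_PROFILES, flows=FLOW_COUNTS, rules=RULE_COUNTS):
    """Return every (imix, flows, rules) combination as a list of dicts."""
    return [
        {"imix": i, "flows": f, "rules": r}
        for i, f, r in product(imix, flows, rules)
    ]


matrix = scenario_matrix()
print(len(matrix))  # 2 * 6 * 3 = 36 combinations
```

Even this small set of values yields 36 runs, which is why the parameter ranges are usually chosen with the available test time in mind.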

For UDP correlated traffic, the following Key Performance Indicators are collected for every combination of test case parameters:

- RFC2544 throughput for the defined loss rates (1% is the default)
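A throughput figure with a loss tolerance is typically found by searching for the highest offered load whose loss stays within that tolerance. The sketch below uses a plain binary search as one possible strategy; it is not a description of how NSB implements the measurement. The callback `measure_loss_pct`, the toy device model `fake_dut` and all parameter names are assumptions made for this example.

```python
def rfc2544_throughput(measure_loss_pct, line_rate_pct=100.0,
                       tolerance_pct=1.0, precision_pct=0.1):
    """Binary-search the highest offered load whose loss stays within tolerance.

    measure_loss_pct(rate) is a hypothetical callback that offers traffic at
    `rate` percent of line rate and returns the observed loss in percent.
    """
    low, high = 0.0, line_rate_pct
    best = 0.0
    while high - low > precision_pct:
        rate = (low + high) / 2.0
        if measure_loss_pct(rate) <= tolerance_pct:
            best = rate   # loss is acceptable: try a higher rate
            low = rate
        else:
            high = rate   # too much loss: back off
    return best


def fake_dut(rate):
    """Toy device model: no loss up to 60% load, then loss grows linearly."""
    return 0.0 if rate <= 60.0 else (rate - 60.0) * 2.0


print(round(rfc2544_throughput(fake_dut), 1))
```

With the default 1% tolerance, the toy model accepts loads up to 60.5% of line rate, so the search settles just below that point. Setting `tolerance_pct=0.0` gives the zero-loss throughput instead.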

11.4. Graphical Overview¶

NSB testing with the Yardstick framework facilitates performance testing of the various VNFs provided.

+-----------+
|           |                                                     +-----------+
|   vPE     |                                                   ->|TGen Port 0|
| TestCase  |                                                   | +-----------+
|           |                                                   |
+-----------+     +------------------+            +-------+     |
                  |                  | -- API --> |  VNF  | <--->
+-----------+     |     Yardstick    |            +-------+     |
| Test Case | --> |    NSB Testing   |                          |
+-----------+     |                  |                          |
      |           |                  |                          |
      |           +------------------+                          |
+-----------+                                                   | +-----------+
|  Traffic  |                                                   ->|TGen Port 1|
|  patterns |                                                     +-----------+
+-----------+

Figure 1: Network Service - 2 server configuration

11.4.1. VNFs supported for characterization:¶

- CGNAPT - Carrier Grade Network Address and Port Translation

- vFW - Virtual Firewall

- vACL - Access Control List

- Prox - Packet pROcessing eXecution engine. The VNF can act as Drop, Basic Forwarding (no touch), L2 Forwarding (change MAC), GRE encap/decap, Load balance based on packet fields, Symmetric load balancing, QinQ encap/decap IPv4/IPv6, ARP, QoS, Routing, Unmpls, Policing, ACL

- UDP_Replay

12. Yardstick - NSB Testing - Installation¶

12.1. Abstract¶

The Network Service Benchmarking (NSB) extends the Yardstick framework to perform VNF characterization and benchmarking in three different execution environments: bare metal (i.e. a native Linux environment), a standalone virtual environment, and a managed virtualized environment (e.g. OpenStack). It also brings in the capability to interact with external traffic generators, both hardware and software based, for triggering and validating traffic according to user-defined profiles.

The steps needed to run Yardstick with NSB testing are:

- Install Yardstick (NSB Testing).

- Set up or reference a pod.yaml file describing the test topology.

- Create/Reference the test configuration yaml file.

- Run the test case.

12.2. Prerequisites¶

Refer to chapter Yardstick Installation for more information on Yardstick prerequisites.

Several prerequisites are needed for Yardstick (VNF testing):

- Python Modules: pyzmq, pika.

- flex

- bison

- build-essential

- automake

- libtool

- librabbitmq-dev

- rabbitmq-server

- collectd

- intel-cmt-cat

12.2.1. Hardware & Software Ingredients¶

SUT requirements:

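+--------+--------------------+
| Item   | Description        |
+--------+--------------------+
| Memory | Min 20GB           |
| NICs   | 2 x 10G            |
| OS     | Ubuntu 16.04.3 LTS |
| kernel | 4.4.0-34-generic   |
| DPDK   | 17.02              |
+--------+--------------------+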

Boot and BIOS settings:

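Boot settings:

default_hugepagesz=1G hugepagesz=1G hugepages=16 hugepagesz=2M hugepages=2048 isolcpus=1-11,22-33 nohz_full=1-11,22-33 rcu_nocbs=1-11,22-33 iommu=on iommu=pt intel_iommu=on

Note: nohz_full and rcu_nocbs are set to reduce Linux kernel interruptions on the listed cores.

BIOS settings:

+-------------------------------------------+---------------+
| Setting                                   | Value         |
+-------------------------------------------+---------------+
| CPU Power and Performance Policy          | <Performance> |
| CPU C-state                               | Disabled      |
| CPU P-state                               | Disabled      |
| Enhanced Intel® Speedstep® Tech           | Disabled      |
| Hyper-Threading Technology (If supported) | Enabled       |
| Virtualization Technology                 | Enabled       |
| Intel(R) VT for Direct I/O                | Enabled       |
| Coherency                                 | Enabled       |
| Turbo Boost                               | Disabled      |
+-------------------------------------------+---------------+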
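One way to confirm that a SUT was booted with the expected parameters is to compare its kernel command line against the required tokens. The following is a minimal sketch under that assumption; the function names, the `REQUIRED` list (a subset of the boot settings in this section) and the sample command line are illustrative only.

```python
def parse_cmdline(cmdline):
    """Split a kernel command line into {key: [values]}; bare flags map to [None]."""
    params = {}
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        params.setdefault(key, []).append(value if sep else None)
    return params


def missing_boot_settings(cmdline, required):
    """Return the required 'key=value' tokens that are absent from cmdline."""
    params = parse_cmdline(cmdline)
    missing = []
    for token in required:
        key, _, value = token.partition("=")
        if value not in params.get(key, []):
            missing.append(token)
    return missing


# Subset of the boot settings listed in this section.
REQUIRED = [
    "default_hugepagesz=1G", "hugepagesz=1G", "hugepages=16",
    "isolcpus=1-11,22-33", "nohz_full=1-11,22-33", "rcu_nocbs=1-11,22-33",
    "intel_iommu=on", "iommu=pt",
]

# Made-up command line for demonstration purposes.
sample = "BOOT_IMAGE=/vmlinuz root=/dev/sda1 hugepagesz=1G hugepages=16 intel_iommu=on"
print(missing_boot_settings(sample, REQUIRED))
```

On a running Linux system the command line can be read from /proc/cmdline and passed in place of `sample`. Keys that appear several times, such as hugepagesz, are collected into a list so that each required value is matched independently.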

12.3. Install Yardstick (NSB Testing)¶

Download the source code and install Yardstick from it:

git clone https://gerrit.opnfv.org/gerrit/yardstick

cd yardstick

# Switch to latest stable branch

# git checkout <tag or stable branch>

git checkout stable/euphrates

# For Bare-Metal or Standalone Virtualization

./nsb_setup.sh

# For OpenStack

./nsb_setup.sh <path to admin-openrc.sh>

The above command sets up a Docker container with the latest Yardstick code. To enter the container, run:

docker exec -it yardstick bash

It will also automatically download all the packages needed for the NSB Testing setup. For more on Docker, refer to the section Install Yardstick using Docker (recommended) in chapter Yardstick Installation.

12.4. System Topology:¶

+----------+              +----------+
|          |              |          |
|          | (0)----->(0) |          |
|    TG1   |              |    DUT   |
|          |              |          |
|          | (1)<-----(1) |          |
+----------+              +----------+
trafficgen_1                   vnf

12.5. Environment parameters and credentials¶

12.5.1. Config yardstick conf¶

If the user did not run ‘yardstick env influxdb’ inside the container, which generates a correct yardstick.conf, then create the config file manually (run inside the container):

cp ./etc/yardstick/yardstick.conf.sample /etc/yardstick/yardstick.conf
vi /etc/yardstick/yardstick.conf

Add trex_path, trex_client_lib and bin_path to the ‘nsb’ section.

[DEFAULT]

debug = True

dispatcher = file, influxdb

[dispatcher_influxdb]

timeout = 5

target = http://{YOUR_IP_HERE}:8086

db_name = yardstick

username = root

password = root

[nsb]

trex_path=/opt/nsb_bin/trex/scripts

bin_path=/opt/nsb_bin

trex_client_lib=/opt/nsb_bin/trex_client/stl
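Before starting a test run it can be useful to confirm that the ‘nsb’ section is complete. The sketch below does this with Python's standard configparser module against an inline copy of the sample configuration. The function name `check_nsb_section` and the constants are hypothetical helpers, not part of Yardstick.

```python
import configparser

# Inline copy of the sample configuration shown above.
SAMPLE_CONF = """
[DEFAULT]
debug = True
dispatcher = file, influxdb

[dispatcher_influxdb]
timeout = 5
target = http://{YOUR_IP_HERE}:8086
db_name = yardstick
username = root
password = root

[nsb]
trex_path=/opt/nsb_bin/trex/scripts
bin_path=/opt/nsb_bin
trex_client_lib=/opt/nsb_bin/trex_client/stl
"""

REQUIRED_NSB_KEYS = ("trex_path", "bin_path", "trex_client_lib")


def check_nsb_section(text):
    """Return the required [nsb] keys that are missing or empty in the given text."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    if not parser.has_section("nsb"):
        return list(REQUIRED_NSB_KEYS)
    return [
        key for key in REQUIRED_NSB_KEYS
        if not parser.get("nsb", key, fallback="").strip()
    ]


print(check_nsb_section(SAMPLE_CONF))  # prints [] when all three keys are set
```

To check the real file, read /etc/yardstick/yardstick.conf and pass its contents to the function.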

12.6. Run Yardstick - Network Service Testcases¶

12.6.1. NS testing - using yardstick CLI¶

docker exec -it yardstick /bin/bash

source /etc/yardstick/openstack.creds  # only for heat TC if nsb_setup.sh was NOT used

export EXTERNAL_NETWORK="<openstack public network>"  # only for heat TC

yardstick --debug task start yardstick/samples/vnf_samples/nsut/<vnf>/<test case>

12.7. Network Service Benchmarking - Bare-Metal¶

12.7.1. Bare-Metal Config pod.yaml describing Topology¶

12.7.1.1. Bare-Metal 2-Node setup:¶

+----------+              +----------+
|          |              |          |
|          | (0)----->(0) |          |
|    TG1   |              |    DUT   |
|          |              |          |
|          | (n)<-----(n) |          |
+----------+              +----------+
trafficgen_1                   vnf

12.7.2. Bare-Metal Config pod.yaml¶

Before executing Yardstick test cases, make sure that pod.yaml reflects the topology, and update all the required fields:

cp /etc/yardstick/nodes/pod.yaml.nsb.sample /etc/yardstick/nodes/pod.yaml

nodes:
-
    name: trafficgen_1
    role: TrafficGen
    ip: 1.1.1.1
    user: root
    password: r00t
    interfaces:
        xe0:  # logical name from topology.yaml and vnfd.yaml
            vpci: "0000:07:00.0"
            driver: i40e  # default kernel driver
            dpdk_port_num: 0
            local_ip: "152.16.100.20"
            netmask: "255.255.255.0"
            local_mac: "00:00:00:00:00:01"
        xe1:  # logical name from topology.yaml and vnfd.yaml
            vpci: "0000:07:00.1"
            driver: i40e  # default kernel driver
            dpdk_port_num: 1
            local_ip: "152.16.40.20"
            netmask: "255.255.255.0"
            local_mac: "00:00:00:00:00:02"
-
    name: vnf
    role: vnf
    ip: 1.1.1.2
    user: root
    password: r00t
    host: 1.1.1.2  # BM - host == ip, virtualized env - Host - compute node
    interfaces:
        xe0:  # logical name from topology.yaml and vnfd.yaml
            vpci: "0000:07:00.0"
            driver: i40e  # default kernel driver
            dpdk_port_num: 0
            local_ip: "152.16.100.19"
            netmask: "255.255.255.0"
            local_mac: "00:00:00:00:00:03"
        xe1:  # logical name from topology.yaml and vnfd.yaml
            vpci: "0000:07:00.1"
            driver: i40e  # default kernel driver
            dpdk_port_num: 1
            local_ip: "152.16.40.19"
            netmask: "255.255.255.0"
            local_mac: "00:00:00:00:00:04"
    routing_table:
    - network: "152.16.100.20"
      netmask: "255.255.255.0"
      gateway: "152.16.100.20"
      if: "xe0"
    - network: "152.16.40.20"
      netmask: "255.255.255.0"
      gateway: "152.16.40.20"
      if: "xe1"
    nd_route_tbl:
    - network: "0064:ff9b:0:0:0:0:9810:6414"
      netmask: "112"
      gateway: "0064:ff9b:0:0:0:0:9810:6414"
      if: "xe0"
    - network: "0064:ff9b:0:0:0:0:9810:2814"
      netmask: "112"
      gateway: "0064:ff9b:0:0:0:0:9810:2814"
      if: "xe1"
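Small typos in pod.yaml, such as a dot in place of a colon in a MAC address, are easy to miss and can be caught with a quick sanity check before running a test case. The sketch below validates the interface fields of an already-loaded pod description using only the standard library. The helper names are hypothetical. In practice the dictionary would come from a YAML loader such as PyYAML's `yaml.safe_load`, which is left out here to keep the example dependency-free.

```python
import ipaddress
import re

MAC_RE = re.compile(r"^[0-9A-Fa-f]{2}(:[0-9A-Fa-f]{2}){5}$")
PCI_RE = re.compile(r"^[0-9A-Fa-f]{4}:[0-9A-Fa-f]{2}:[0-9A-Fa-f]{2}\.[0-7]$")


def check_interface(node_name, if_name, intf):
    """Return a list of human-readable problems found in one interface entry."""
    problems = []
    where = "%s/%s" % (node_name, if_name)
    if not PCI_RE.match(str(intf.get("vpci", ""))):
        problems.append(where + ": vpci is not a PCI address")
    if not MAC_RE.match(str(intf.get("local_mac", ""))):
        problems.append(where + ": local_mac is not a MAC address")
    try:
        ipaddress.ip_address(str(intf.get("local_ip", "")))
    except ValueError:
        problems.append(where + ": local_ip is not an IP address")
    return problems


def check_pod(pod):
    """Check every interface of every node in a pod description dict."""
    problems = []
    for node in pod.get("nodes", []):
        for if_name, intf in node.get("interfaces", {}).items():
            problems.extend(check_interface(node.get("name", "?"), if_name, intf))
    return problems


pod = {"nodes": [{
    "name": "trafficgen_1",
    "interfaces": {
        "xe0": {"vpci": "0000:07:00.0", "local_ip": "152.16.100.20",
                "local_mac": "00:00:00:00:00:01"},
        "xe1": {"vpci": "0000:07:00.1", "local_ip": "152.16.40.20",
                "local_mac": "00:00.00:00:00:02"},  # malformed on purpose
    },
}]}
print(check_pod(pod))
# prints ['trafficgen_1/xe1: local_mac is not a MAC address']
```

The check covers only field formats. Whether the addresses match the actual hardware still has to be verified on the nodes themselves.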

12.8. Network Service Benchmarking - Standalone Virtualization¶

12.8.1. SR-IOV:¶

12.8.1.1. SR-IOV Pre-requisites¶

- On Host:

- Create a bridge for the VM to connect to the external network:

brctl addbr br-int
brctl addif br-int <interface_name> #This interface is connected to internet

- Build a guest image for the VNF to run. Most of the sample test cases in Yardstick use a guest image called yardstick-image, which deviates from an Ubuntu Cloud Server image. Yardstick has a tool for building this custom image with samplevnf. It is necessary to have sudo rights to use this tool.

You may also need to install several additional packages to use this tool, by running the commands below:

sudo apt-get update && sudo apt-get install -y qemu-utils kpartx

This image can be built using the following commands in the directory where Yardstick is installed:

export YARD_IMG_ARCH='amd64'
sudo echo "Defaults env_keep += \'YARD_IMG_ARCH\'" >> /etc/sudoers

Please use the Ansible script to generate a cloud image. For more details, refer to chapter Yardstick Installation.

Note

The VM should be built with a static IP and should be accessible from the Yardstick host.

12.8.1.2. SR-IOV Config pod.yaml describing Topology¶

12.8.1.3. SR-IOV 2-Node setup:¶

                           +--------------------+
                           |                    |
                           |                    |
                           |        DUT         |
                           |       (VNF)        |
                           |                    |
                           +--------------------+
                           | VF NIC |  | VF NIC |
                           +--------+  +--------+
                                 ^          ^
                                 |          |
                                 |          |
+----------+               +-------------------------+
|          |               |       ^          ^      |
|          |               |       |          |      |
|          | (0)<----->(0) | ------           |      |
|    TG1   |               |           SUT    |      |
|          |               |                  |      |
|          | (n)<----->(n) |------------------       |
+----------+               +-------------------------+
trafficgen_1                          host

12.8.1.5. SR-IOV Config pod_trex.yaml¶

nodes:

-

name: trafficgen_1

role: TrafficGen

ip: 1.1.1.1

user: root