VSPERF

VSPERF is an OPNFV testing project.

VSPERF will develop a generic and architecture-agnostic vSwitch testing framework and associated tests that will serve as a basis for validating the suitability of different vSwitch implementations in a Telco NFV deployment environment. The output of this project will be used by the OPNFV Performance and Test group and its associated projects, as part of OPNFV Platform and VNF level testing and validation.

- Project Wiki: https://wiki.opnfv.org/characterize_vswitch_performance_for_telco_nfv_use_cases

- Project Repository: https://git.opnfv.org/vswitchperf

- Project Artifacts: https://artifacts.opnfv.org/vswitchperf.html

- Continuous Integration: https://build.opnfv.org/ci/view/vswitchperf/

VSPERF Installation Guide

1. Installing vswitchperf

1.1. Downloading vswitchperf

vswitchperf can be downloaded from its official git repository, which is hosted by OPNFV. Git must be installed on your DUT (device under test) before downloading vswitchperf. How git is installed depends on the packaging system used by the Linux OS installed on the DUT.

Examples of installing the git package and its dependencies:

On RedHat-based Linux distributions:

sudo yum install git

On Ubuntu or Debian:

sudo apt-get install git

Once git is successfully installed on the DUT, vswitchperf can be downloaded as follows:

git clone http://git.opnfv.org/vswitchperf

This command creates a directory vswitchperf containing a local copy of the vswitchperf repository.

1.2. Supported Operating Systems

- CentOS 7.3

- Fedora 24 (kernel 4.8 requires DPDK 16.11 and newer)

- Fedora 25 (kernel 4.9 requires DPDK 16.11 and newer)

- RedHat 7.2 Enterprise Linux

- RedHat 7.3 Enterprise Linux

- Ubuntu 14.04

- Ubuntu 16.04

- Ubuntu 16.10 (kernel 4.8 requires DPDK 16.11 and newer)

1.3. Supported vSwitches

The vSwitch must support OpenFlow 1.3 or greater.

- Open vSwitch

- Open vSwitch with DPDK support

- TestPMD application from DPDK (supports p2p and pvp scenarios)

1.4. Supported Hypervisors

- QEMU version 2.3 or greater (version 2.5.0 is recommended)

1.5. Supported VNFs

In theory, any VNF image compatible with a supported hypervisor can be used. However, such a VNF must ensure that the appropriate number of network interfaces is configured and that traffic is properly forwarded among them. New vswitchperf users are advised to start with the official vloop-vnf image, which is maintained by the vswitchperf community.

1.5.1. vloop-vnf

The official VM image is called vloop-vnf and is available for free download from the OPNFV artifactory. This image is based on the Ubuntu Linux distribution and supports the following applications for traffic forwarding:

- DPDK testpmd

- Linux Bridge

- Custom l2fwd module

The vloop-vnf image can be downloaded to the DUT, for example with wget:

wget http://artifacts.opnfv.org/vswitchperf/vnf/vloop-vnf-ubuntu-14.04_20160823.qcow2

NOTE: If wget is not installed on your DUT, you can install it with sudo yum install wget on RPM-based systems or with sudo apt-get install wget on DEB-based systems.

Changelog of vloop-vnf:

- vloop-vnf-ubuntu-14.04_20160823
  - ethtool installed
  - only 1 NIC is configured by default to speed up boot with 1 NIC setup
  - security updates applied

- vloop-vnf-ubuntu-14.04_20160804
  - Linux kernel 4.4.0 installed
  - libnuma-dev installed
  - security updates applied

- vloop-vnf-ubuntu-14.04_20160303
  - snmpd service is disabled by default to avoid error messages during VM boot
  - security updates applied

- vloop-vnf-ubuntu-14.04_20151216
  - version with development tools required for build of DPDK and l2fwd

1.6. Installation

The test suite requires Python 3.3 or newer and relies on a number of other system and Python packages, which must be installed for the test suite to function.
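A quick way to confirm that an interpreter meets this requirement is a version check like the following minimal sketch; the function name python_is_supported is illustrative and not part of vsperf.

```python
import sys

# Minimum interpreter version stated in the installation requirements.
MIN_PYTHON = (3, 3)

def python_is_supported(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True if the (major, minor) part of version_info meets minimum."""
    return tuple(version_info[:2]) >= minimum

if __name__ == "__main__":
    if not python_is_supported():
        sys.exit("Python %d.%d or newer is required" % MIN_PYTHON)
    print("Python version OK")
```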

Installation of required packages, preparation of the Python 3 virtual environment and compilation of OVS, DPDK and QEMU are performed by the script systems/build_base_machine.sh. It should be executed under the user account that will be used for vsperf execution.

NOTE: Password-less sudo access must be configured for that user account before the script is executed.

$ cd systems

$ ./build_base_machine.sh

NOTE: You don’t need to go into any of the systems subdirectories; simply run the top-level build_base_machine.sh and your OS will be detected automatically.

The build_base_machine.sh script installs all vsperf dependencies in terms of system packages, Python 3.x and required Python modules. On CentOS 7 or RHEL it installs Python 3.3 from an additional repository provided by Software Collections. The installation script also uses virtualenv to create a vsperf virtual environment isolated from the default Python environment. This environment resides in a directory called vsperfenv in $HOME and ensures that the system-wide Python installation is not modified or broken by the VSPERF installation. The complete list of Python packages installed inside the virtualenv can be found in the file requirements.txt, located in the vswitchperf repository.
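To confirm from inside Python that the vsperfenv environment is actually active, a check along these lines can help. This is a sketch under the assumption that the environment was created by venv or virtualenv, which make sys.prefix differ from sys.base_prefix (newer releases) or set sys.real_prefix (older virtualenv releases); it is not a vsperf utility.

```python
import sys

def in_virtualenv():
    """Return True when the running interpreter belongs to a virtual environment."""
    # Older virtualenv releases record the original prefix in sys.real_prefix.
    if hasattr(sys, "real_prefix"):
        return True
    # venv and newer virtualenv releases keep the base install in sys.base_prefix.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)

if __name__ == "__main__":
    print("inside a virtual environment" if in_virtualenv() else "system Python")
```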

NOTE: On RHEL 7.3 Enterprise and CentOS 7.3, OVS Vanilla is not built from upstream source due to kernel incompatibilities. Please see the instructions in the vswitchperf_design document for details on configuring OVS Vanilla to use a binary package.

1.7. Using vswitchperf

You will need to activate the virtual environment every time you start a new shell session. Activation is specific to your OS:

CentOS 7 and RHEL

$ scl enable python33 bash

$ source $HOME/vsperfenv/bin/activate

Fedora and Ubuntu

$ source $HOME/vsperfenv/bin/activate

After the virtual environment is configured, VSPERF can be used. For example:

(vsperfenv) $ cd vswitchperf

(vsperfenv) $ ./vsperf --help

1.7.1. Gotcha

If you see the following error during environment activation:

$ source $HOME/vsperfenv/bin/activate

Badly placed ()'s.

then check which shell you are using:

$ echo $SHELL

/bin/tcsh

See which scripts are available in $HOME/vsperfenv/bin:

$ ls $HOME/vsperfenv/bin/

activate activate.csh activate.fish activate_this.py

and source the appropriate script:

$ source $HOME/vsperfenv/bin/activate.csh
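The choice of script can be automated with a small helper. This sketch assumes the script names shown in the listing above and a virtual environment located at $HOME/vsperfenv; the mapping and the function activate_script are illustrative. A child process cannot activate the environment for its parent shell, so the helper only prints the command to source.

```python
import os

# Activation scripts found in $HOME/vsperfenv/bin (see the listing above).
ACTIVATE_SCRIPTS = {
    "csh": "activate.csh",
    "tcsh": "activate.csh",
    "fish": "activate.fish",
}

def activate_script(shell_path, venv_dir=os.path.expanduser("~/vsperfenv")):
    """Return the path of the activation script matching the given shell."""
    shell = os.path.basename(shell_path)
    # bash, sh, zsh and other POSIX-like shells use the plain 'activate' script.
    script = ACTIVATE_SCRIPTS.get(shell, "activate")
    return os.path.join(venv_dir, "bin", script)

if __name__ == "__main__":
    print("source " + activate_script(os.environ.get("SHELL", "/bin/bash")))
```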

1.7.2. Working Behind a Proxy

If you’re behind a proxy, you’ll likely want to configure it before running any of the above. For example:

export http_proxy=proxy.mycompany.com:123

export https_proxy=proxy.mycompany.com:123

1.8. Hugepage Configuration

Systems running vsperf with DPDK and/or tests with guests must configure enough hugepages to support these configurations. It is recommended to use a hugepage size of 1GB.

The number of hugepages needed depends on your vsperf configuration files. Each guest image requires 2048 MB by default, according to the default settings in the 04_vnf.conf file:

GUEST_MEMORY = ['2048']

The DPDK startup parameters also require a number of hugepages, depending on your configuration in the 02_vswitch.conf file:

VSWITCHD_DPDK_ARGS = ['-c', '0x4', '-n', '4', '--socket-mem 1024,1024']

VSWITCHD_DPDK_CONFIG = {
    'dpdk-init' : 'true',
    'dpdk-lcore-mask' : '0x4',
    'dpdk-socket-mem' : '1024,1024',
}

NOTE: Option VSWITCHD_DPDK_ARGS is used for vswitchd versions that support the --dpdk parameter. In recent vswitchd versions, option VSWITCHD_DPDK_CONFIG is used instead to configure vswitchd via ovs-vsctl calls.

With the --socket-mem argument set to use one 1GB hugepage on each of the specified sockets, as seen above, the configuration will need 10 hugepages in total to run all tests within vsperf, provided the pagesize is correctly set to 1GB.
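The hugepage count can be estimated with simple arithmetic. The sketch below is a simplified model, assuming that the vSwitch needs the per-socket memory given by --socket-mem and that every guest needs its full GUEST_MEMORY backed by hugepages; hugepages_needed is a hypothetical helper, and the real requirement depends on the tests you run and how many guests they start.

```python
import math

def hugepages_needed(socket_mem, guest_mem_mb, num_guests, page_size_mb=1024):
    """Estimate hugepages for one test run (simplified model).

    socket_mem   -- DPDK socket memory string, e.g. '1024,1024' (MB per socket)
    guest_mem_mb -- memory per guest in MB, e.g. '2048' as in GUEST_MEMORY
    num_guests   -- number of guests the test starts
    page_size_mb -- hugepage size in MB (1024 for 1GB pages, 2 for 2MB pages)
    """
    # Each socket's memory is rounded up to whole pages separately.
    switch_pages = sum(math.ceil(int(mb) / page_size_mb)
                       for mb in socket_mem.split(","))
    guest_pages = num_guests * math.ceil(int(guest_mem_mb) / page_size_mb)
    return switch_pages + guest_pages
```

With the defaults above and 1GB pages, this model gives 2 + 2 = 4 hugepages for a test with one guest, and each additional guest adds 2 more.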

VSPerf verifies that enough hugepages are free before executing test environments. If not enough hugepages are free, test initialization will fail and testing will stop.

NOTE: In some instances, DPDK may not release the hugepages it used when a test fails. It is recommended to configure a few extra hugepages to prevent VSPerf from falsely detecting that not enough free hugepages are available to execute the test environment. Normally DPDK would reuse previously allocated hugepages upon initialization.

Hugepage configuration varies between operating systems. Please refer to your OS documentation to set hugepages correctly. It is recommended to configure the required number of hugepages to be allocated by default on reboot.

Information on hugepage requirements for DPDK can be found at http://dpdk.org/doc/guides/linux_gsg/sys_reqs.html

You can review your hugepage amounts by executing the following command:

cat /proc/meminfo | grep Huge

If no hugepages are available, vsperf will try to allocate some automatically. Allocation is controlled by the HUGEPAGE_RAM_ALLOCATION configuration parameter in the 02_vswitch.conf file. The default is 2GB, resulting in either two 1GB hugepages or 1024 2MB hugepages.
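The relationship between an allocation size and the resulting number of pages is a ceiling division. In this sketch the allocation is expressed in MB and the page size in kB (the unit /proc/meminfo uses for Hugepagesize); these units are assumptions for illustration, so check 02_vswitch.conf for the unit HUGEPAGE_RAM_ALLOCATION actually uses.

```python
def pages_for_allocation(ram_mb, page_size_kb):
    """Number of hugepages of page_size_kb needed to cover ram_mb megabytes."""
    ram_kb = ram_mb * 1024
    # Integer ceiling division: a partially used page still has to be allocated.
    return -(-ram_kb // page_size_kb)
```

For a 2GB allocation this yields 2 pages with 1GB hugepages (1048576 kB) and 1024 pages with 2MB hugepages (2048 kB), matching the figures above.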

2. Upgrading vswitchperf

2.1. Generic

If VSPERF was cloned from the git repository, it is easy to upgrade it to the newest stable version or to the development version.

You can get a list of stable releases with a git command. The local git repository must be updated first.

NOTE: Git commands must be executed from the directory where the VSPERF repository was cloned, e.g. vswitchperf.

Update of local git repository:

$ git pull

List of stable releases:

$ git tag

brahmaputra.1.0

colorado.1.0

colorado.2.0

colorado.3.0

danube.1.0

You can select which stable release should be used. For example, to select danube.1.0:

$ git checkout danube.1.0

Development version of VSPERF can be selected by:

$ git checkout master

2.2. Colorado to Danube upgrade notes

2.2.1. Obsoleted features

Support for the vHost Cuse interface has been removed in the Danube release. This means that it is no longer possible to select QemuDpdkVhostCuse as a VNF; QemuDpdkVhostUser should be used instead. Please check your configuration files and testcase definitions for any occurrence of:

VNF = "QemuDpdkVhostCuse"

or

"VNF" : "QemuDpdkVhostCuse"

If QemuDpdkVhostCuse is found, it must be changed to QemuDpdkVhostUser.

NOTE: If VSPERF execution is automated by scripts (e.g. for CI purposes), these scripts must be checked and updated too. This means that any occurrence of:

./vsperf --vnf QemuDpdkVhostCuse

must be updated to:

./vsperf --vnf QemuDpdkVhostUser
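For larger setups, the renaming can be scripted. The following sketch rewrites files in place; which files to pass (custom .conf files, testcase definitions, CI scripts) is up to you, and the helper names are hypothetical. Keep a backup or commit your changes before running it.

```python
import re

OLD_VNF = "QemuDpdkVhostCuse"
NEW_VNF = "QemuDpdkVhostUser"

def migrate_text(text):
    """Replace the removed vHost Cuse VNF name; return (new_text, count)."""
    return re.subn(r"\b%s\b" % OLD_VNF, NEW_VNF, text)

def migrate_files(paths):
    """Rewrite the given files in place; return the paths that were changed."""
    changed = []
    for path in paths:
        with open(path) as f:
            text = f.read()
        new_text, count = migrate_text(text)
        if count:
            with open(path, "w") as f:
                f.write(new_text)
            changed.append(path)
    return changed
```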

2.2.2. Configuration

Several configuration changes were introduced in the Danube release. The most important changes are discussed below.

2.2.2.1. Paths to DPDK, OVS and QEMU

VSPERF uses external tools for proper testcase execution, so it is important to configure the paths to these tools correctly. If the tools were installed by the installation scripts and are located inside the ./src directory of the VSPERF home, no changes are needed. However, if path settings were changed by a custom configuration file, the configuration must be updated accordingly. Please check your configuration files for the following configuration options:

OVS_DIR

OVS_DIR_VANILLA

OVS_DIR_USER

OVS_DIR_CUSE

RTE_SDK_USER

RTE_SDK_CUSE

QEMU_DIR

QEMU_DIR_USER

QEMU_DIR_CUSE

QEMU_BIN

If any of these options is defined, the configuration must be updated.

All paths to the tools are now stored inside the PATHS dictionary. Please refer to the Configuration of PATHS dictionary section and update your configuration where necessary.
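A quick scan of custom configuration files for the obsolete options listed above could look like the following sketch. It only detects simple top-level assignments such as OVS_DIR = '...' and ignores commented-out lines; the function name is illustrative.

```python
import re

# Path options replaced by the PATHS dictionary in Danube (list above).
OBSOLETE_OPTIONS = (
    "OVS_DIR", "OVS_DIR_VANILLA", "OVS_DIR_USER", "OVS_DIR_CUSE",
    "RTE_SDK_USER", "RTE_SDK_CUSE",
    "QEMU_DIR", "QEMU_DIR_USER", "QEMU_DIR_CUSE", "QEMU_BIN",
)

# An assignment like "OVS_DIR = ..." at the start of a line; the regex engine
# backtracks, so OVS_DIR_VANILLA is not mistaken for OVS_DIR.
_ASSIGNMENT = re.compile(r"^\s*(%s)\s*=" % "|".join(OBSOLETE_OPTIONS), re.MULTILINE)

def find_obsolete_options(conf_text):
    """Return the sorted obsolete option names assigned in conf_text."""
    return sorted(set(_ASSIGNMENT.findall(conf_text)))
```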

2.2.2.2. Configuration change via CLI

In previous releases it was possible to modify selected configuration options (mostly VNF specific) via the command line interface, i.e. by the --test-params argument. This concept has been generalized in the Danube release, and it is now possible to modify any configuration parameter via CLI or via the Parameters section of the testcase definition. The old configuration options were obsoleted, and the configuration parameter name must be specified in the same form as it is defined in the configuration file, i.e. in uppercase. Please refer to the Overriding values defined in configuration files section for additional details.

NOTE: If VSPERF execution is automated by scripts (e.g. for CI purposes), these scripts must be checked and updated too. This means that any occurrence of

guest_loopback

vanilla_tgen_port1_ip

vanilla_tgen_port1_mac

vanilla_tgen_port2_ip

vanilla_tgen_port2_mac

tunnel_type

must be changed to the uppercase form, and the data types of entered values must match the data types of the original values in the configuration files.

If guest_nic1_name or guest_nic2_name is changed, the new dictionary GUEST_NICS must be modified accordingly. Please see Configuration of GUEST options and conf/04_vnf.conf for additional details.
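The two rules above (uppercase names, matching types) can be checked with a short helper before updating automation scripts. In this sketch EXAMPLE_DEFAULTS is a hypothetical stand-in for the values in your configuration files; read the real defaults from conf/ rather than relying on these.

```python
# Hypothetical defaults standing in for values from your conf/ files.
EXAMPLE_DEFAULTS = {
    "GUEST_LOOPBACK": ["testpmd"],
    "TUNNEL_TYPE": "vxlan",
}

def migrate_param_names(params):
    """Convert old lowercase --test-params names to the uppercase form."""
    return {name.upper(): value for name, value in params.items()}

def type_mismatches(overrides, defaults=EXAMPLE_DEFAULTS):
    """Return the names whose override type differs from the default's type."""
    return sorted(
        name for name, value in overrides.items()
        if name in defaults and type(value) is not type(defaults[name])
    )

if __name__ == "__main__":
    new = migrate_param_names({"guest_loopback": "testpmd", "tunnel_type": "vxlan"})
    print(new)
    print(type_mismatches(new))  # GUEST_LOOPBACK: a string where a list is expected
```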

2.2.2.3. Traffic configuration via CLI

In previous releases it was possible to modify selected attributes of the generated traffic via the command line interface. This concept has been enhanced in the Danube release, and it is now possible to modify all traffic-specific options via CLI or via the TRAFFIC dictionary in the configuration file. A detailed description is available in the Configuration of TRAFFIC dictionary section of the documentation.

Please check your automated scripts for VSPERF execution for the following CLI parameters and update them according to the documentation:

bidir

duration

frame_rate

iload

lossrate

multistream

pkt_sizes

pre-installed_flows

rfc2544_tests

stream_type

traffic_type

3. ‘vsperf’ Traffic Gen Guide

3.1. Overview

VSPERF supports the following traffic generators:

- Dummy (DEFAULT)

- Ixia

- Spirent TestCenter

- Xena Networks

- MoonGen

To see the list of traffic generators from the CLI:

$ ./vsperf --list-trafficgens

This guide provides the details of how to install and configure the various traffic generators.

3.2. Background Information

The default traffic configuration can be found in conf/03_traffic.conf and is configured as follows:

TRAFFIC = {
    'traffic_type' : 'rfc2544_throughput',
    'frame_rate' : 100,
    'bidir' : 'True', # will be passed as string in title format to tgen
    'multistream' : 0,
    'stream_type' : 'L4',
    'pre_installed_flows' : 'No', # used by vswitch implementation
    'flow_type' : 'port', # used by vswitch implementation
    'l2': {
        'framesize': 64,
        'srcmac': '00:00:00:00:00:00',
        'dstmac': '00:00:00:00:00:00',
    },
    'l3': {
        'proto': 'udp',
        'srcip': '1.1.1.1',
        'dstip': '90.90.90.90',
    },
    'l4': {
        'srcport': 3000,
        'dstport': 3001,
    },
    'vlan': {
        'enabled': False,
        'id': 0,
        'priority': 0,
        'cfi': 0,
    },
}
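Testcase definitions can override only selected TRAFFIC keys, as the RFC2889 testcase example in the Spirent section does with traffic_type. One way to picture how a partial override combines with these defaults is a recursive dictionary merge. This is an illustrative sketch, not vsperf's own merge code; see the Configuration of TRAFFIC dictionary section for the exact rules.

```python
import copy

def merge_traffic(defaults, overrides):
    """Return a copy of defaults with overrides merged in, recursing into dicts."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Nested sections such as 'l2' or 'vlan' keep keys not overridden.
            merged[key] = merge_traffic(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged
```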

The framesize parameter can be overridden from the configuration files by adding the following to your custom configuration file 10_custom.conf:

TRAFFICGEN_PKT_SIZES = (64, 128,)

OR from the command line:

$ ./vsperf --test-params "TRAFFICGEN_PKT_SIZES=(x,y)" $TESTNAME

You can also modify the traffic transmission duration and the number of tests run by the traffic generator by extending the example command line above to:

$ ./vsperf --test-params "TRAFFICGEN_PKT_SIZES=(x,y);TRAFFICGEN_DURATION=10;TRAFFICGEN_RFC2544_TESTS=1" $TESTNAME
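The --test-params value is a semicolon-separated list of NAME=value pairs whose values are written as Python literals. The parser below is a hypothetical sketch of that format, useful for checking a command line before running it; it is not vsperf's own parser, and it does not handle values that themselves contain a semicolon.

```python
import ast

def parse_test_params(arg):
    """Split a --test-params string such as "A=1;B=(64,128)" into a dict.

    Values are evaluated as Python literals; anything that is not a valid
    literal (e.g. a bare word) is kept as a plain string.
    """
    params = {}
    for item in arg.split(";"):
        if not item.strip():
            continue  # tolerate a trailing ';'
        name, sep, value = item.partition("=")
        if not sep:
            raise ValueError("missing '=' in %r" % item)
        try:
            params[name.strip()] = ast.literal_eval(value.strip())
        except (ValueError, SyntaxError):
            params[name.strip()] = value.strip()
    return params
```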

3.3. Dummy

The Dummy traffic generator can be used to test the VSPERF installation or to demonstrate VSPERF functionality on the DUT without a connection to a real traffic generator.

You can also use the Dummy generator if your external traffic generator is not supported by VSPERF. In that case you can use VSPERF to set up your test scenario and then transmit the traffic yourself. After the transmission is completed, you can enter values for all collected metrics and VSPERF will use them to generate the final reports.

3.3.1. Setup

To select the Dummy generator, add the following to your custom configuration file 10_custom.conf:

TRAFFICGEN = 'Dummy'

OR run vsperf with the --trafficgen argument:

$ ./vsperf --trafficgen Dummy $TESTNAME

Here $TESTNAME is the name of the vsperf test you would like to run. This will set up the vSwitch and the VNF (if one is part of your test), print the traffic configuration and prompt you to transmit traffic when the setup is complete.

Please send 'continuous' traffic with the following stream config:

30mS, 90mpps, multistream False

and the following flow config:

{
    "flow_type": "port",
    "l3": {
        "srcip": "1.1.1.1",
        "proto": "tcp",
        "dstip": "90.90.90.90"
    },
    "traffic_type": "rfc2544_continuous",
    "multistream": 0,
    "bidir": "True",
    "vlan": {
        "cfi": 0,
        "priority": 0,
        "id": 0,
        "enabled": false
    },
    "frame_rate": 90,
    "l2": {
        "dstport": 3001,
        "srcport": 3000,
        "dstmac": "00:00:00:00:00:00",
        "srcmac": "00:00:00:00:00:00",
        "framesize": 64
    }
}

What was the result for 'frames tx'?

When your traffic generator has completed the transmission and provided the results, enter them at the VSPERF prompt. VSPERF will ask you to confirm each value:

Is '$input_value' correct?

Please answer with y OR n.

VSPERF will ask you to provide a value for each of the collected metrics. The list of metrics can be found in the Metrics collected for supported traffic types section. Finally, vsperf will print out the results for your test and generate the appropriate logs and report files.

3.3.2. Metrics collected for supported traffic types

Below is the list of metrics collected by VSPERF for each supported traffic type.

RFC2544 Throughput and Continuous:

- frames tx

- frames rx

- min latency

- max latency

- avg latency

- frameloss

RFC2544 Back2back:

- b2b frames

- b2b frame loss %

3.3.3. Dummy result pre-configuration

With the Dummy traffic generator it is possible to pre-configure the test results. This is useful for creating demo testcases that do not require a real traffic generator. Such a testcase can be run by any user and will still generate all reports and result files.

Result values can be specified within the TRAFFICGEN_DUMMY_RESULTS dictionary, where each of the collected metrics must be properly defined. Please check the list in the Metrics collected for supported traffic types section.

The dictionary with dummy results can be passed by the CLI argument --test-params or specified in the Parameters section of the testcase definition.
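Because every metric of the selected traffic type must be present, a pre-flight check can catch a missing key before a demo run. The metric names below are taken from the lists in the Metrics collected for supported traffic types section; the dictionary and function names are illustrative.

```python
# Metrics VSPERF asks for, per traffic type (from the metric lists above).
DUMMY_METRICS = {
    "rfc2544_throughput": ["frames tx", "frames rx", "min latency",
                           "max latency", "avg latency", "frameloss"],
    "rfc2544_continuous": ["frames tx", "frames rx", "min latency",
                           "max latency", "avg latency", "frameloss"],
    "rfc2544_back2back": ["b2b frames", "b2b frame loss %"],
}

def missing_dummy_metrics(traffic_type, results):
    """Return the metrics that a TRAFFICGEN_DUMMY_RESULTS dict does not define."""
    return [m for m in DUMMY_METRICS[traffic_type] if m not in results]
```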

Example of testcase execution with dummy results defined by CLI argument:

$ ./vsperf back2back --trafficgen Dummy --test-params \
    "TRAFFICGEN_DUMMY_RESULTS={'b2b frames':'3000','b2b frame loss %':'0.0'}"
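When VSPERF is launched from a Python script (e.g. in CI), passing the arguments as a list avoids the nested shell quoting of the command above. This sketch assumes it runs from the vswitchperf directory and that vsperf accepts any valid Python dict literal in TRAFFICGEN_DUMMY_RESULTS, as the example above suggests; dummy_b2b_command is a hypothetical helper.

```python
import shlex

def dummy_b2b_command(results, testcase="back2back"):
    """Build the vsperf argument list for a Dummy run with pre-configured results."""
    return ["./vsperf", testcase, "--trafficgen", "Dummy",
            "--test-params", "TRAFFICGEN_DUMMY_RESULTS=" + repr(results)]

if __name__ == "__main__":
    argv = dummy_b2b_command({"b2b frames": "3000", "b2b frame loss %": "0.0"})
    # Shell-safe rendering, handy for logging what will be executed.
    print(" ".join(shlex.quote(a) for a in argv))
    # subprocess.call(argv) would run it without any shell quoting issues.
```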

Example of testcase definition with pre-configured dummy results:

{
    "Name": "back2back",
    "Traffic Type": "rfc2544_back2back",
    "Deployment": "p2p",
    "biDirectional": "True",
    "Description": "LTD.Throughput.RFC2544.BackToBackFrames",
    "Parameters" : {
        'TRAFFICGEN_DUMMY_RESULTS' : {'b2b frames':'3000','b2b frame loss %':'0.0'}
    },
},

NOTE: Pre-configured results for the Dummy traffic generator are used only when the Dummy traffic generator is selected. Otherwise the TRAFFICGEN_DUMMY_RESULTS option is ignored.

3.4. Ixia

VSPERF can use both IxNetwork and IxExplorer TCL servers to control the Ixia chassis. However, using the IxNetwork TCL server is the preferred option. The following sections describe the installation and configuration of the IxNetwork components used by VSPERF.

3.4.1. Installation

On the system under test you need to install IxNetworkTclClient$(VER_NUM)Linux.bin.tgz.

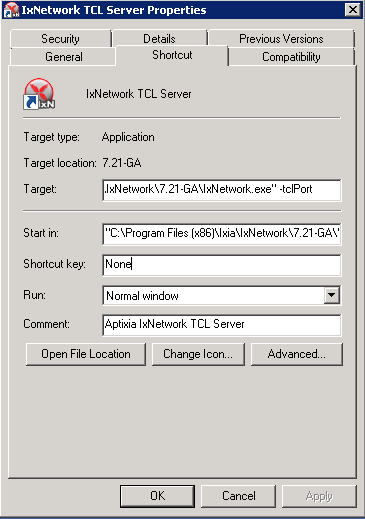

On the IXIA client software system you need to install the IxNetwork TCL server. After its installation, configure it as follows:

Find the IxNetwork TCL server app (Start -> All Programs -> IXIA -> IxNetwork -> IxNetwork_$(VER_NUM) -> IxNetwork TCL Server).

Right-click on IxNetwork TCL Server and select Properties. Under the Shortcut tab, in the Target dialog box, make sure there is the argument “-tclport xxxx”, where xxxx is your port number (take note of this port number, as you will need it for the 10_custom.conf file).

Click OK and start the TCL server application.

3.4.2. VSPERF configuration

There are several configuration options specific to the IxNetwork traffic generator from IXIA. They must be set correctly before VSPERF is executed for the first time.

A detailed description of the options follows:

- TRAFFICGEN_IXNET_MACHINE - IP address of the server where the IxNetwork TCL Server is running

- TRAFFICGEN_IXNET_PORT - port on which the IxNetwork TCL Server accepts connections from TCL clients

- TRAFFICGEN_IXNET_USER - username used during communication with the IxNetwork TCL Server and the IXIA chassis

- TRAFFICGEN_IXIA_HOST - IP address of the IXIA traffic generator chassis

- TRAFFICGEN_IXIA_CARD - identification of the card with dedicated ports in the IXIA chassis

- TRAFFICGEN_IXIA_PORT1 - identification of the first dedicated port at TRAFFICGEN_IXIA_CARD in the IXIA chassis. VSPERF uses two separate ports for traffic generation. In case of unidirectional traffic, it is essential to connect the 1st IXIA port to the 1st NIC at the DUT, i.e. to the first PCI handle from the WHITELIST_NICS list. Otherwise traffic may not be able to pass through the vSwitch.

- TRAFFICGEN_IXIA_PORT2 - identification of the second dedicated port at TRAFFICGEN_IXIA_CARD in the IXIA chassis. VSPERF uses two separate ports for traffic generation. In case of unidirectional traffic, it is essential to connect the 2nd IXIA port to the 2nd NIC at the DUT, i.e. to the second PCI handle from the WHITELIST_NICS list. Otherwise traffic may not be able to pass through the vSwitch.

- TRAFFICGEN_IXNET_LIB_PATH - path to the DUT-specific installation of the IxNetwork TCL API

- TRAFFICGEN_IXNET_TCL_SCRIPT - name of the TCL script that VSPERF uses for communication with the IXIA TCL server

- TRAFFICGEN_IXNET_TESTER_RESULT_DIR - folder accessible from the IxNetwork TCL server where test results are stored, e.g. c:/ixia_results; see test-results-share

- TRAFFICGEN_IXNET_DUT_RESULT_DIR - directory accessible from the DUT where test results from the IxNetwork TCL server are stored, e.g. /mnt/ixia_results; see test-results-share

3.5. Spirent Setup

Spirent installation files and instructions are available on the Spirent support website at:

Select a version of Spirent TestCenter software to use. This example uses Spirent TestCenter v4.57. Substitute the appropriate version in place of ‘v4.57’ in the examples below.

3.5.1. On the CentOS 7 System

Download and install the following:

Spirent TestCenter Application, v4.57 for 64-bit Linux Client

3.5.2. Spirent Virtual Deployment Service (VDS)

Spirent VDS is required for both TestCenter hardware and virtual chassis in the vsperf environment. For installation, select the version that matches the Spirent TestCenter Application version. For v4.57, the matching VDS version is 1.0.55. Download either the ova (VMware) or qcow2 (QEMU) image and create a VM with it. Initialize the VM according to Spirent installation instructions.

3.5.3. Using Spirent TestCenter Virtual (STCv)

STCv is available in both ova (VMware) and qcow2 (QEMU) formats. For VMware, download:

Spirent TestCenter Virtual Machine for VMware, v4.57 for Hypervisor - VMware ESX.ESXi

Virtual test port performance is affected by the hypervisor configuration. For best results when deploying STCv, the following is suggested:

- Create a single VM with two test ports rather than two VMs with one port each

- Set STCv in DPDK mode

- Give STCv 2*n + 1 cores, where n = the number of ports. For vsperf, cores = 5.

- Turning off hyperthreading and pinning these cores will improve performance

- Give STCv 2 GB of RAM

To get the highest performance and accuracy, Spirent TestCenter hardware is recommended. vsperf can run with either type of test port.

3.5.4. Using STC REST Client

The stcrestclient package provides the stchttp.py ReST API wrapper module. This allows simple function calls, nearly identical to those provided by StcPython.py, to be used to access TestCenter server sessions via the STC ReST API. Basic ReST functionality is provided by the resthttp module, and may be used for writing ReST clients independent of STC.

- Project page: <https://github.com/Spirent/py-stcrestclient>

- Package download: <http://pypi.python.org/pypi/stcrestclient>

To use REST interface, follow the instructions in the Project page to install the package. Once installed, the scripts named with ‘rest’ keyword can be used. For example: testcenter-rfc2544-rest.py can be used to run RFC 2544 tests using the REST interface.

3.5.5. Configuration

- The following parameters, including the lab server and license server addresses, apply to all tests and are mandatory for all tests.

TRAFFICGEN_STC_LAB_SERVER_ADDR = " "

TRAFFICGEN_STC_LICENSE_SERVER_ADDR = " "

TRAFFICGEN_STC_PYTHON2_PATH = " "

TRAFFICGEN_STC_TESTCENTER_PATH = " "

TRAFFICGEN_STC_TEST_SESSION_NAME = " "

TRAFFICGEN_STC_CSV_RESULTS_FILE_PREFIX = " "

- For RFC2544 tests, the following parameters are mandatory:

TRAFFICGEN_STC_EAST_CHASSIS_ADDR = " "

TRAFFICGEN_STC_EAST_SLOT_NUM = " "

TRAFFICGEN_STC_EAST_PORT_NUM = " "

TRAFFICGEN_STC_EAST_INTF_ADDR = " "

TRAFFICGEN_STC_EAST_INTF_GATEWAY_ADDR = " "

TRAFFICGEN_STC_WEST_CHASSIS_ADDR = ""

TRAFFICGEN_STC_WEST_SLOT_NUM = " "

TRAFFICGEN_STC_WEST_PORT_NUM = " "

TRAFFICGEN_STC_WEST_INTF_ADDR = " "

TRAFFICGEN_STC_WEST_INTF_GATEWAY_ADDR = " "

TRAFFICGEN_STC_RFC2544_TPUT_TEST_FILE_NAME

- RFC2889 tests: Currently, the forwarding, address-caching, and address-learning-rate tests of RFC2889 are supported. The testcenter-rfc2889-rest.py script implements the RFC2889 tests. The configuration for RFC2889 involves a testcase definition and parameter definitions, as described below. New result constants, shown below, were added to support these tests.

Example of testcase definition for RFC2889 tests:

{
    "Name": "phy2phy_forwarding",
    "Deployment": "p2p",
    "Description": "LTD.Forwarding.RFC2889.MaxForwardingRate",
    "Parameters" : {
        "TRAFFIC" : {
            "traffic_type" : "rfc2889_forwarding",
        },
    },
}

For RFC2889 tests, specifying the locations for the monitoring ports is mandatory. Necessary parameters are:

Other configurations are:

TRAFFICGEN_STC_RFC2889_MIN_LR = 1488

TRAFFICGEN_STC_RFC2889_MAX_LR = 14880

TRAFFICGEN_STC_RFC2889_MIN_ADDRS = 1000

TRAFFICGEN_STC_RFC2889_MAX_ADDRS = 65536

TRAFFICGEN_STC_RFC2889_AC_LR = 1000

The first two values are for the address-learning test, whereas the other three values are for the address-caching capacity test (LR: Learning Rate; AC: Address Caching). The maximum value for addresses is 16777216, whereas the maximum for LR is 4294967295.
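These bounds can be sanity-checked before a run. The maximum values come from the paragraph above; the additional requirement that each minimum be positive and not exceed its maximum is an assumption of this sketch, and the function name is illustrative.

```python
# Upper bounds stated above for the RFC2889 STC options.
MAX_ADDRS = 16777216
MAX_LR = 4294967295

def check_rfc2889_limits(min_lr, max_lr, min_addrs, max_addrs):
    """Return a list of problems found in the RFC2889 learning/caching settings."""
    problems = []
    if not 0 < min_lr <= max_lr <= MAX_LR:
        problems.append("learning rates must satisfy 0 < MIN_LR <= MAX_LR <= %d" % MAX_LR)
    if not 0 < min_addrs <= max_addrs <= MAX_ADDRS:
        problems.append("addresses must satisfy 0 < MIN_ADDRS <= MAX_ADDRS <= %d" % MAX_ADDRS)
    return problems
```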

Results for RFC2889 tests: the forwarding test outputs the following values:

TX_RATE_FPS : "Transmission Rate in Frames/sec"

THROUGHPUT_RX_FPS: "Received Throughput Frames/sec"

TX_RATE_MBPS : " Transmission rate in MBPS"

THROUGHPUT_RX_MBPS: "Received Throughput in MBPS"

TX_RATE_PERCENT: "Transmission Rate in Percentage"

FRAME_LOSS_PERCENT: "Frame loss in Percentage"

FORWARDING_RATE_FPS: " Maximum Forwarding Rate in FPS"

The address caching test outputs the following values:

CACHING_CAPACITY_ADDRS = 'Number of address it can cache'

ADDR_LEARNED_PERCENT = 'Percentage of address successfully learned'

and the address learning test outputs a single value:

OPTIMAL_LEARNING_RATE_FPS = 'Optimal learning rate in fps'

Note that ‘FORWARDING_RATE_FPS’, ‘CACHING_CAPACITY_ADDRS’, ‘ADDR_LEARNED_PERCENT’ and ‘OPTIMAL_LEARNING_RATE_FPS’ are the new result-constants added to support RFC2889 tests.

3.6. Xena Networks

3.6.1. Installation

The Xena Networks traffic generator requires specific files and packages to be installed. It is assumed that the user has access to the Xena2544.exe file, which must be placed in the VSPerf installation location under the tools/pkt_gen/xena folder. Contact Xena Networks for the latest version of this file. Users with a valid support contract can also obtain the file from www.xenanetworks/downloads.

Note: VSPerf has been fully tested with version v2.43 of Xena2544.exe.

To execute the Xena2544.exe file under Linux distributions, the mono-complete package must be installed. To install this package, follow the instructions below. Further information can be obtained from http://www.mono-project.com/docs/getting-started/install/linux/

rpm --import "http://keyserver.ubuntu.com/pks/lookup?op=get&search=0x3FA7E0328081BFF6A14DA29AA6A19B38D3D831EF"

yum-config-manager --add-repo http://download.mono-project.com/repo/centos/

yum -y install mono-complete

To prevent gpg errors on future yum package installations, the mono-project repo should be disabled once mono-complete is installed.

yum-config-manager --disable download.mono-project.com_repo_centos_

3.6.2. Configuration

Connection information for your Xena chassis must be supplied inside the 10_custom.conf or 03_custom.conf file. The following parameters must be set to allow proper connections to the chassis:

TRAFFICGEN_XENA_IP = ''

TRAFFICGEN_XENA_PORT1 = ''

TRAFFICGEN_XENA_PORT2 = ''

TRAFFICGEN_XENA_USER = ''

TRAFFICGEN_XENA_PASSWORD = ''

TRAFFICGEN_XENA_MODULE1 = ''

TRAFFICGEN_XENA_MODULE2 = ''

3.6.3. RFC2544 Throughput Testing

Xena traffic generator testing for RFC2544 throughput can be modified for different behaviors if needed. The defaults for the following options are optimized for best results.

TRAFFICGEN_XENA_2544_TPUT_INIT_VALUE = '10.0'

TRAFFICGEN_XENA_2544_TPUT_MIN_VALUE = '0.1'

TRAFFICGEN_XENA_2544_TPUT_MAX_VALUE = '100.0'

TRAFFICGEN_XENA_2544_TPUT_VALUE_RESOLUTION = '0.5'

TRAFFICGEN_XENA_2544_TPUT_USEPASS_THRESHHOLD = 'false'

TRAFFICGEN_XENA_2544_TPUT_PASS_THRESHHOLD = '0.0'
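To give an idea of how the initial, minimum, maximum and resolution values interact, the sketch below runs a classic RFC2544-style binary search for the highest loss-free rate. This is an assumption about the general technique only; Xena2544.exe's actual search algorithm and its pass-threshold options may behave differently. The configuration values above are strings, so convert them with float() first.

```python
def rfc2544_throughput_search(passes, init=10.0, minimum=0.1, maximum=100.0,
                              resolution=0.5):
    """Binary search for the highest loss-free rate (percent of line rate).

    passes(rate) runs one trial at `rate` and returns True when no frames
    were lost. Returns the best passing rate found, or None if none passed.
    """
    lo, hi, rate = minimum, maximum, init
    best = None
    # Stop once the search window is no wider than the requested resolution.
    while hi - lo > resolution:
        if passes(rate):
            best, lo = rate, rate   # rate is sustainable: search higher
        else:
            hi = rate               # frames lost: search lower
        rate = (lo + hi) / 2
    return best
```

For example, rfc2544_throughput_search(run_trial, init=float('10.0')) with a hypothetical run_trial function would return a rate within 0.5 percent of the highest loss-free rate.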

Each value modifies the behavior of RFC2544 throughput testing. Refer to your Xena documentation to understand the effect of changing these values.

3.6.4. Continuous Traffic Testing

By default, Xena continuous traffic performs a 3-second learning phase to allow the DUT to receive learning packets before a continuous test is performed. If a custom test case requires this learning to be disabled, you can disable the option or modify the length of the learning phase with the following settings:

TRAFFICGEN_XENA_CONT_PORT_LEARNING_ENABLED = False

TRAFFICGEN_XENA_CONT_PORT_LEARNING_DURATION = 3

3.7. MoonGen

3.7.1. Installation

MoonGen architecture overview and general installation instructions can be found here:

https://github.com/emmericp/MoonGen

- Note: Currently, MoonGen with VSPERF only supports 10Gbps line speeds.

For VSPERF use, MoonGen should be cloned from the following repository (as opposed to the GitHub repository mentioned above):

git clone https://github.com/atheurer/lua-trafficgen

and use the master branch:

git checkout master

VSPERF uses a particular Lua script with the MoonGen project:

trafficgen.lua

Follow MoonGen set up and execution instructions here:

https://github.com/atheurer/lua-trafficgen/blob/master/README.md

Note that passwordless ssh login must be set up between the server running MoonGen and the device under test (running the VSPERF test infrastructure), because VSPERF on one server uses ssh to configure and run MoonGen on the other server.

You can set up this ssh access by doing the following on both servers:

ssh-keygen -b 2048 -t rsa

ssh-copy-id <other server>
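Before running VSPERF, you can verify that key-based login works non-interactively. The sketch below relies on the standard OpenSSH options BatchMode and ConnectTimeout; the host name and helper names are placeholders.

```python
import subprocess

def ssh_check_command(host, user=None):
    """Build an ssh command that fails instead of prompting for a password."""
    target = "%s@%s" % (user, host) if user else host
    # BatchMode=yes: never prompt; fail if key-based login is not possible.
    # ConnectTimeout=5: give up quickly if the host is unreachable.
    return ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=5", target, "true"]

def passwordless_ssh_works(host, user=None):
    """Return True if 'ssh host true' succeeds without any interaction."""
    return subprocess.call(ssh_check_command(host, user),
                           stdout=subprocess.DEVNULL,
                           stderr=subprocess.DEVNULL) == 0
```

Running passwordless_ssh_works("moongen-server") from the DUT (and the equivalent check from the MoonGen server) should return True once the keys are in place.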

3.7.2. Configuration

Connection information for MoonGen must be supplied inside the 10_custom.conf or 03_custom.conf file. The following parameters must be set to allow proper connections to the host with MoonGen:

TRAFFICGEN_MOONGEN_HOST_IP_ADDR = ""

TRAFFICGEN_MOONGEN_USER = ""

TRAFFICGEN_MOONGEN_BASE_DIR = ""

TRAFFICGEN_MOONGEN_PORTS = ""

TRAFFICGEN_MOONGEN_LINE_SPEED_GBPS = ""

VSPERF User Guide

1. vSwitchPerf test suites user guide

1.1. General

VSPERF requires a traffic generator to run tests. Automated traffic generator support in VSPERF includes:

- IXIA traffic generator (IxNetwork hardware) and a machine that runs the IXIA client software.

- Spirent traffic generator (TestCenter hardware chassis or TestCenter virtual in a VM) and a VM to run the Spirent Virtual Deployment Service image, formerly known as “Spirent LabServer”.

- Xena Networks traffic generator (Xena hardware chassis) that houses the Xena traffic generator modules.

- MoonGen software traffic generator. Requires a separate machine running MoonGen to execute packet generation.

If you want to use another traffic generator, please select the Dummy generator.

1.2. VSPERF Installation¶

To see the supported Operating Systems, vSwitches and system requirements, please follow the instructions in the VSPERF Installation Guide.

1.3. Traffic Generator Setup¶

Follow the traffic generator installation instructions to install and configure a suitable traffic generator.

1.4. Cloning and building src dependencies¶

In order to run VSPERF, you will need to download DPDK and OVS. You can do this manually and build them in a preferred location, OR you could use vswitchperf/src. The vswitchperf/src directory contains makefiles that will allow you to clone and build the libraries that VSPERF depends on, such as DPDK and OVS. To clone and build them, simply run:

$ cd src

$ make

VSPERF can be used with stock OVS (without DPDK support). When the build is finished, the libraries are stored in the src_vanilla directory.

Running ‘make’ builds all options in src:

- Vanilla OVS

- OVS with vhost_user as the guest access method (with DPDK support)

The vhost_user build will reside in src/ovs/. The Vanilla OVS build will reside in vswitchperf/src_vanilla.

To delete a src subdirectory and its contents so that it can be re-cloned, use:

$ make clobber

1.5. Configure the ./conf/10_custom.conf file¶

The 10_custom.conf file is the configuration file that overrides default configurations in all the other configuration files in ./conf. The supplied 10_custom.conf file MUST be modified, as it contains configuration items for which there are no reasonable default values.

The configuration items that can be added are not limited to the initial contents. Any configuration item mentioned in any .conf file in the ./conf directory can be added, and that item will be overridden by the custom configuration value.

Further details about the evaluation of configuration files and the special behaviour of options with the GUEST_ prefix can be found in the design document.

1.6. Using a custom settings file¶

If your 10_custom.conf doesn’t reside in the ./conf directory, or if you want to use an alternative configuration file, the file can be passed to vsperf via the --conf-file argument.

$ ./vsperf --conf-file <path_to_custom_conf> ...

Note that configuration passed in via the environment (--load-env) or via another command line argument will override both the default and your custom configuration files. This “priority hierarchy” can be described like so (1 = max priority):

1. Testcase definition section Parameters

2. Command line arguments

3. Environment variables

4. Configuration file(s)
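The priority hierarchy above works like a layered lookup: the first layer that defines an item wins. The following Python sketch models it with collections.ChainMap. It only illustrates the ordering; it is not how VSPERF actually loads its settings, and all values are placeholders.

```python
from collections import ChainMap

# Placeholder layers, lowest priority last. This is NOT VSPERF's loader,
# only an illustration of the priority order described above.
config_files = {"TRAFFICGEN_DURATION": 30,
                "TRAFFICGEN_PKT_SIZES": (64,),
                "VSWITCH": "OvsDpdkVhost"}
environment = {"VSWITCH": "OvsVanilla"}
command_line = {"TRAFFICGEN_DURATION": 10}
test_parameters = {"TRAFFICGEN_PKT_SIZES": (128,)}

# ChainMap searches its maps left to right, so the first map has max priority.
effective = ChainMap(test_parameters, command_line, environment, config_files)

print(effective["TRAFFICGEN_DURATION"])   # 10 (command line beats config file)
print(effective["TRAFFICGEN_PKT_SIZES"])  # (128,) (testcase Parameters win)
print(effective["VSWITCH"])               # OvsVanilla (environment beats config file)
```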


1.7. Overriding values defined in configuration files¶

The configuration items can be overridden by the command line argument --test-params. In this case, the configuration items and their values should be passed in the form item=value and separated by semicolons.
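The item=value format can be illustrated with the following Python sketch. It is not VSPERF's own parser. It assumes that no value contains a semicolon, and it uses ast.literal_eval to turn each value into a Python object, which is why values must follow Python syntax.

```python
import ast


def parse_test_params(text):
    """Split 'ITEM=value;ITEM=value' into a dict of Python values.

    Simplified sketch: assumes no ';' appears inside a value.
    """
    params = {}
    for chunk in text.split(";"):
        chunk = chunk.strip()
        if not chunk:
            continue  # tolerate a trailing ';'
        # Split on the first '=' only, so values may contain '='.
        name, _, value = chunk.partition("=")
        params[name.strip()] = ast.literal_eval(value.strip())
    return params


print(parse_test_params(
    "TRAFFICGEN_DURATION=10;TRAFFICGEN_PKT_SIZES=(128,);"
    "GUEST_LOOPBACK=['testpmd','l2fwd']"))
# {'TRAFFICGEN_DURATION': 10, 'TRAFFICGEN_PKT_SIZES': (128,), 'GUEST_LOOPBACK': ['testpmd', 'l2fwd']}
```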

Example:

$ ./vsperf --test-params "TRAFFICGEN_DURATION=10;TRAFFICGEN_PKT_SIZES=(128,);GUEST_LOOPBACK=['testpmd','l2fwd']" \
    pvvp_tput

The second option is to override configuration items in the Parameters section of the test case definition. The configuration items can be added into the Parameters dictionary with their new values. These values will override values defined in configuration files or specified by the --test-params command line argument.

Example:

"Parameters" : {'TRAFFICGEN_PKT_SIZES' : (128,),

'TRAFFICGEN_DURATION' : 10,

'GUEST_LOOPBACK' : ['testpmd','l2fwd'],

}

NOTE: In both cases, configuration item names and their values must be specified in the same form as they are defined inside configuration files. Parameter names must be specified in uppercase, and the data types of the original and new values must match. Python syntax rules related to data types and structures must be followed. For example, the parameter TRAFFICGEN_PKT_SIZES above is defined as a tuple with a single value 128. In this case the trailing comma is mandatory; otherwise the value would be interpreted as a number instead of a tuple and vsperf execution would fail. Please check the configuration files for default values and their types, and use them as a basis for any customized values. In case of any doubt, please check the official Python documentation on data structures such as tuples, lists and dictionaries.
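The trailing-comma rule is plain Python behaviour, as this short demonstration shows. The same_type helper is a hypothetical illustration of the "data types must match" requirement, not VSPERF's actual check. DEFAULT_PKT_SIZES is a placeholder default.

```python
# (128) is just the integer 128 in parentheses; (128,) is a one-item tuple.
without_comma = (128)
with_comma = (128,)
print(type(without_comma).__name__, type(with_comma).__name__)  # int tuple


def same_type(default, override):
    """Hypothetical helper: an override must keep the default's type."""
    return type(default) is type(override)


DEFAULT_PKT_SIZES = (64,)  # placeholder default for illustration
print(same_type(DEFAULT_PKT_SIZES, with_comma))     # True
print(same_type(DEFAULT_PKT_SIZES, without_comma))  # False
```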

NOTE: Vsperf execution will terminate with a runtime error if an unknown parameter name is passed via the --test-params CLI argument or defined in the Parameters section of a test case definition. It is also forbidden to redefine the value of the TEST_PARAMS configuration item via the CLI or the Parameters section.

1.8. vloop_vnf¶

VSPERF uses a VM image called vloop_vnf for looping traffic in the deployment scenarios involving VMs. The image can be downloaded from http://artifacts.opnfv.org/.

Please see the installation instructions for information on vloop-vnf images.

1.9. l2fwd Kernel Module¶

A kernel module that provides OSI Layer 2 IPv4 termination or forwarding with support for Destination Network Address Translation (DNAT) for both the MAC and IP addresses. l2fwd can be found in <vswitchperf_dir>/src/l2fwd.

1.10. Executing tests¶

All examples in these docs assume that the user is inside the VSPERF directory; however, VSPERF can be executed from any directory.

Before running any tests, make sure you have root permissions by adding the following line to /etc/sudoers:

username ALL=(ALL) NOPASSWD: ALL

username in the example above should be replaced with a real username.

To list the available tests:

$ ./vsperf --list

To run a single test:

$ ./vsperf $TESTNAME

Where $TESTNAME is the name of the vsperf test you would like to run.

To run a group of tests, for example all tests with a name containing ‘RFC2544’:

$ ./vsperf --conf-file=<path_to_custom_conf>/10_custom.conf --tests="RFC2544"

To run all tests:

$ ./vsperf --conf-file=<path_to_custom_conf>/10_custom.conf

Some tests allow for configurable parameters, including test duration (in seconds) as well as packet sizes (in bytes).

$ ./vsperf --conf-file user_settings.py \
    --tests RFC2544Tput \
    --test-params "TRAFFICGEN_DURATION=10;TRAFFICGEN_PKT_SIZES=(128,)"

For all available options, check out the help dialog:

$ ./vsperf --help

1.11. Executing Vanilla OVS tests¶

If needed, recompile src for all OVS variants:

$ cd src
$ make distclean
$ make

Update your 10_custom.conf file to use Vanilla OVS:

VSWITCH = 'OvsVanilla'

Run test:

$ ./vsperf --conf-file=<path_to_custom_conf>

Please note that if you don’t want to configure Vanilla OVS through the configuration file, you can pass it as a CLI argument:

$ ./vsperf --vswitch OvsVanilla

1.12. Executing tests with VMs¶

To run tests using vhost-user as guest access method:

Set VSWITCH and VNF in your settings file to:

VSWITCH = 'OvsDpdkVhost'
VNF = 'QemuDpdkVhost'

If needed, recompile src for all OVS variants:

$ cd src
$ make distclean
$ make

Run test:

$ ./vsperf --conf-file=<path_to_custom_conf>/10_custom.conf

1.13. Executing tests with VMs using Vanilla OVS¶

To run tests using Vanilla OVS:

Set the following variables:

VSWITCH = 'OvsVanilla'
VNF = 'QemuVirtioNet'
VANILLA_TGEN_PORT1_IP = n.n.n.n
VANILLA_TGEN_PORT1_MAC = nn:nn:nn:nn:nn:nn
VANILLA_TGEN_PORT2_IP = n.n.n.n
VANILLA_TGEN_PORT2_MAC = nn:nn:nn:nn:nn:nn
VANILLA_BRIDGE_IP = n.n.n.n

or use the --test-params option:

$ ./vsperf --conf-file=<path_to_custom_conf>/10_custom.conf \
    --test-params "VANILLA_TGEN_PORT1_IP=n.n.n.n;VANILLA_TGEN_PORT1_MAC=nn:nn:nn:nn:nn:nn;VANILLA_TGEN_PORT2_IP=n.n.n.n;VANILLA_TGEN_PORT2_MAC=nn:nn:nn:nn:nn:nn"

If needed, recompile src for all OVS variants:

$ cd src
$ make distclean
$ make

Run test:

$ ./vsperf --conf-file=<path_to_custom_conf>/10_custom.conf

1.14. Using vfio_pci with DPDK¶

To use vfio with DPDK instead of igb_uio, add the following parameter to your custom configuration file:

PATHS['dpdk']['src']['modules'] = ['uio', 'vfio-pci']

NOTE: If DPDK is installed from a binary package, please set PATHS['dpdk']['bin']['modules'] instead.

NOTE: Please ensure that Intel VT-d is enabled in BIOS.

NOTE: Please ensure your boot/grub parameters include the following:

iommu=pt intel_iommu=on

To check that IOMMU is enabled on your platform:

$ dmesg | grep IOMMU

[ 0.000000] Intel-IOMMU: enabled

[ 0.139882] dmar: IOMMU 0: reg_base_addr fbffe000 ver 1:0 cap d2078c106f0466 ecap f020de

[ 0.139888] dmar: IOMMU 1: reg_base_addr ebffc000 ver 1:0 cap d2078c106f0466 ecap f020de

[ 0.139893] IOAPIC id 2 under DRHD base 0xfbffe000 IOMMU 0

[ 0.139894] IOAPIC id 0 under DRHD base 0xebffc000 IOMMU 1

[ 0.139895] IOAPIC id 1 under DRHD base 0xebffc000 IOMMU 1

[ 3.335744] IOMMU: dmar0 using Queued invalidation

[ 3.335746] IOMMU: dmar1 using Queued invalidation

....
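You can also verify the boot parameters directly from the kernel command line, which Linux exposes in /proc/cmdline. The helper below is a hypothetical sketch, not part of VSPERF. It checks only for the two parameters listed above, and it takes the command line as a string so that it can be tried on sample input.

```python
REQUIRED_IOMMU_PARAMS = ("iommu=pt", "intel_iommu=on")


def missing_iommu_params(cmdline):
    """Return the required IOMMU boot parameters absent from a kernel command line."""
    tokens = cmdline.split()
    return [param for param in REQUIRED_IOMMU_PARAMS if param not in tokens]


# On a live host, pass the contents of /proc/cmdline instead of these samples.
print(missing_iommu_params("BOOT_IMAGE=/vmlinuz ro iommu=pt intel_iommu=on"))  # []
print(missing_iommu_params("BOOT_IMAGE=/vmlinuz ro"))  # ['iommu=pt', 'intel_iommu=on']
```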

1.15. Using SRIOV support¶

To use virtual functions of a NIC with SRIOV support, use the extended form of the NIC PCI slot definition:

WHITELIST_NICS = ['0000:05:00.0|vf0', '0000:05:00.1|vf3']

Here ‘vf’ indicates virtual function usage, and the number that follows selects the VF to be used. If VF usage is detected, vswitchperf will enable SRIOV support for the given card and detect the PCI slot numbers of the selected VFs.

So in the example above, one VF will be configured for NIC ‘0000:05:00.0’ and four VFs will be configured for NIC ‘0000:05:00.1’, because VFs are numbered from 0. Vswitchperf will detect the PCI addresses of the selected VFs and use them during test execution.
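The extended slot syntax can be decoded as in the following sketch. It is a hypothetical helper, not vswitchperf code. It assumes the numbering rule described above: selecting vfN requires N + 1 VFs on that NIC.

```python
def parse_nic(entry):
    """Split 'PCI_ADDR|vfN' into (pci_addr, vf_index, vfs_to_enable).

    Plain PCI addresses (no '|vf' suffix) return (pci_addr, None, 0).
    """
    pci, sep, vf = entry.partition("|")
    if not sep:
        return pci, None, 0
    if not vf.startswith("vf"):
        raise ValueError("unexpected NIC suffix: %r" % vf)
    index = int(vf[2:])
    # VFs are numbered from 0, so vfN needs N + 1 VFs enabled.
    return pci, index, index + 1


WHITELIST_NICS = ['0000:05:00.0|vf0', '0000:05:00.1|vf3']
for nic in WHITELIST_NICS:
    print(parse_nic(nic))
# ('0000:05:00.0', 0, 1)
# ('0000:05:00.1', 3, 4)
```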

At the end of vswitchperf execution, SRIOV support will be disabled.

SRIOV support is generic and it can be used in different testing scenarios. For example:

- vSwitch tests with DPDK or without DPDK support to verify impact of VF usage on vSwitch performance

- tests without vSwitch, where traffic is forwarded directly between VF interfaces by a packet forwarder (e.g. testpmd application)

- tests without vSwitch, where VM accesses VF interfaces directly by PCI-passthrough to measure raw VM throughput performance.

1.16. Using QEMU with PCI passthrough support¶

Raw virtual machine throughput performance can be measured by executing the PVP test with direct access to NICs through PCI passthrough. To run a VM with direct access to PCI devices, enable vfio-pci. In order to use virtual functions, SRIOV support must be enabled.

To execute a test with PCI passthrough and the vSwitch disabled:

$ ./vsperf --conf-file=<path_to_custom_conf>/10_custom.conf \
    --vswitch none --vnf QemuPciPassthrough pvp_tput

Any of the supported guest loopback applications can be used inside a VM with PCI passthrough support.

Note: QEMU with PCI passthrough support can be used only with the PVP test deployment.

1.17. Selection of loopback application for tests with VMs¶

To select the loopback applications which will forward packets inside VMs, the following parameter should be configured:

GUEST_LOOPBACK = ['testpmd']

or use --test-params CLI argument:

$ ./vsperf --conf-file=<path_to_custom_conf>/10_custom.conf \
    --test-params "GUEST_LOOPBACK=['testpmd']"

Supported loopback applications are:

'testpmd' - testpmd from dpdk will be built and used

'l2fwd' - l2fwd module provided by Huawei will be built and used

'linux_bridge' - linux bridge will be configured

'buildin' - nothing will be configured by vsperf; VM image must ensure traffic forwarding between its interfaces

Guest loopback application must be configured, otherwise traffic will not be forwarded by VM and testcases with VM related deployments will fail. Guest loopback application is set to ‘testpmd’ by default.

NOTE: If only one NIC or more than two NICs are configured for the VM, then ‘testpmd’ should be used, as it is able to forward traffic between multiple VM NIC pairs.

NOTE: In case of linux_bridge, all guest NICs are connected to the same bridge inside the guest.

1.18. Mergeable Buffers Options with QEMU¶

Mergeable buffers can be disabled with VSPerf within QEMU. This option can increase performance significantly when not using jumbo frame sized packets. By default, VSPerf disables mergeable buffers. If you wish to enable them, you can modify the setting in a custom conf file.

GUEST_NIC_MERGE_BUFFERS_DISABLE = [False]

Then execute using the custom conf file.

$ ./vsperf --conf-file=<path_to_custom_conf>/10_custom.conf

Alternatively you can just pass the param during execution.

$ ./vsperf --test-params "GUEST_NIC_MERGE_BUFFERS_DISABLE=[False]"

1.19. Selection of dpdk binding driver for tests with VMs¶

To select the DPDK binding driver, which specifies the driver the VM NICs will use for DPDK binding, configure the following parameter:

GUEST_DPDK_BIND_DRIVER = ['igb_uio_from_src']

The supported dpdk guest bind drivers are:

'uio_pci_generic' - Use uio_pci_generic driver

'igb_uio_from_src' - Build and use the igb_uio driver from the dpdk src files

'vfio_no_iommu' - Use vfio with no iommu option. This requires custom guest images that support this option. The default vloop image does not support this driver.

Note: uio_pci_generic does not support SRIOV testcases with guests attached, because uio_pci_generic only supports legacy interrupts. If uio_pci_generic is selected with QemuPciPassthrough as the VNF, it will be replaced by igb_uio_from_src.

Note: vfio_no_iommu requires kernels equal to or greater than 4.5 and dpdk 16.04 or greater. Using this option will also taint the kernel.

Please refer to the dpdk documents at http://dpdk.org/doc/guides for more information on these drivers.

1.20. Multi-Queue Configuration¶

VSPerf currently supports multi-queue with the following limitations:

- It requires QEMU 2.5 or greater and any OVS version higher than 2.5. The default upstream package versions installed by VSPerf satisfy this requirement.

- The guest image must have the ethtool utility installed if l2fwd or linux bridge is used inside the guest for loopback.

If you are using OVS version 2.5.0 or older, enable old style multi-queue in the 02_vswitch.conf file:

OVS_OLD_STYLE_MQ = True

To enable multi-queue for DPDK, modify the 02_vswitch.conf file:

VSWITCH_DPDK_MULTI_QUEUES = 2

NOTE: You should consider using the switch affinity to set a PMD CPU mask that can optimize your performance. If applicable, take the NUMA node of the NIC in use into account by checking /sys/class/net/<eth_name>/device/numa_node, and set an appropriate mask so that PMD threads are created on the same NUMA node.
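A CPU mask is a bitmask in which bit n selects CPU n. The sketch below uses hypothetical helpers, not VSPERF functions. It builds such a mask from a list of CPU ids (for example, CPUs on the NIC's NUMA node) and reads that node from the sysfs path mentioned above. Open vSwitch with DPDK accepts a hexadecimal mask of this form in other_config:pmd-cpu-mask; how you pass it through VSPERF depends on your configuration.

```python
def cpu_mask(cpus):
    """Build a hexadecimal CPU mask string (bit n set for CPU n)."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return hex(mask)


def nic_numa_node(eth_name):
    """Read a NIC's NUMA node from sysfs (-1 means no NUMA information)."""
    with open("/sys/class/net/%s/device/numa_node" % eth_name) as f:
        return int(f.read().strip())


print(cpu_mask([2]))     # 0x4 (CPU 2 only)
print(cpu_mask([2, 3]))  # 0xc (CPUs 2 and 3)
```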

When multi-queue is enabled, each dpdk or dpdkvhostuser port that is created on the switch will have the option for multiple queues set. If old style multi-queue has been enabled, a global multi-queue option will be used instead of the per-port option.

To enable multi-queue on the guest, modify the 04_vnf.conf file:

GUEST_NIC_QUEUES = [2]

Enabling multi-queue at the guest will add multiple queues to each NIC port when qemu launches the guest.

In case of Vanilla OVS, multi-queue is enabled on the tuntap ports and nic queues will be enabled inside the guest with ethtool. Simply enabling the multi-queue on the guest is sufficient for Vanilla OVS multi-queue.

TestPMD should be configured to take advantage of multi-queue on the guest if DPDKVhostUser is used. This can be done by modifying the 04_vnf.conf file:

GUEST_TESTPMD_PARAMS = ['-l 0,1,2,3,4 -n 4 --socket-mem 512 -- '

'--burst=64 -i --txqflags=0xf00 '

'--nb-cores=4 --rxq=2 --txq=2 '

'--disable-hw-vlan']

NOTE: The guest SMP cores must be configured to allow for testpmd to use the optimal number of cores to take advantage of the multiple guest queues.

When using Vanilla OVS and QEMU virtio-net, you can increase performance by binding vhost-net threads to CPUs. This can be done by enabling the affinity in the 04_vnf.conf file. This also applies to configurations without multi-queue, since there will still be two vhost-net threads.

VSWITCH_VHOST_NET_AFFINITIZATION = True

VSWITCH_VHOST_CPU_MAP = [4,5,8,11]

NOTE: This method of binding would require a custom script in a real environment.

NOTE: For optimal performance, guest SMPs and/or vhost-net threads should be on the same NUMA node as the NIC in use, if possible/applicable. TestPMD should be assigned at least (nb_cores + 1) cores in total with the CPU mask.

1.21. Executing Packet Forwarding tests¶

To select the applications which will forward packets, the following parameters should be configured:

VSWITCH = 'none'

PKTFWD = 'TestPMD'

or use --vswitch and --fwdapp CLI arguments:

$ ./vsperf phy2phy_cont --conf-file user_settings.py \
    --vswitch none \
    --fwdapp TestPMD

Supported Packet Forwarding applications are:

'testpmd' - testpmd from dpdk

Update your 10_custom.conf file to use the appropriate variables for the selected Packet Forwarder:

# testpmd configuration
TESTPMD_ARGS = []
# packet forwarding mode supported by testpmd; Please see DPDK documentation
# for comprehensive list of modes supported by your version.
# e.g. io|mac|mac_retry|macswap|flowgen|rxonly|txonly|csum|icmpecho|...
# Note: Option "mac_retry" has been changed to "mac retry" since DPDK v16.07
TESTPMD_FWD_MODE = 'csum'
# checksum calculation layer: ip|udp|tcp|sctp|outer-ip
TESTPMD_CSUM_LAYER = 'ip'
# checksum calculation place: hw (hardware) | sw (software)
TESTPMD_CSUM_CALC = 'sw'
# recognize tunnel headers: on|off
TESTPMD_CSUM_PARSE_TUNNEL = 'off'

Run test:

$ ./vsperf phy2phy_tput --conf-file <path_to_settings_py>

1.22. Executing Packet Forwarding tests with one guest¶

TestPMD with DPDK 16.11 or greater can be used to forward packets as a switch to a single guest using TestPMD vdev option. To set this configuration the following parameters should be used.

VSWITCH = 'none'
PKTFWD = 'TestPMD'

or use --vswitch and --fwdapp CLI arguments:

$ ./vsperf pvp_tput --conf-file user_settings.py \
    --vswitch none \
    --fwdapp TestPMD

Guest forwarding application only supports TestPMD in this configuration.

GUEST_LOOPBACK = ['testpmd']

For optimal performance, TestPMD should use one CPU per port plus one additional CPU. Also set additional parameters for the packet forwarding application so that it uses the correct number of forwarding cores (--nb-cores).

VSWITCHD_DPDK_ARGS = ['-l', '46,44,42,40,38', '-n', '4', '--socket-mem 1024,0']
TESTPMD_ARGS = ['--nb-cores=4', '--txq=1', '--rxq=1']

For the guest TestPMD, three vCPUs should be assigned, with the following TestPMD parameters:

GUEST_TESTPMD_PARAMS = ['-l 0,1,2 -n 4 --socket-mem 1024 -- '
                        '--burst=64 -i --txqflags=0xf00 '
                        '--disable-hw-vlan --nb-cores=2 --txq=1 --rxq=1']

TestPMD can then be executed with the following command line:

./vsperf pvp_tput --vswitch=none --fwdapp=TestPMD --conf-file <path_to_settings_py>

NOTE: To achieve the best 0% loss numbers with rfc2544 throughput testing, other tunings should be applied to host and guest such as tuned profiles and CPU tunings to prevent possible interrupts to worker threads.

1.23. VSPERF modes of operation¶

VSPERF can be run in different modes. By default, it configures the vSwitch, the traffic generator and the VNF. However, it can also be used just to configure and execute the traffic generator, or to execute all components except the traffic generator itself.

The mode of operation is selected by the command line argument -m or --mode:

-m MODE, --mode MODE vsperf mode of operation;

Values:

"normal" - execute vSwitch, VNF and traffic generator

"trafficgen" - execute only traffic generator

"trafficgen-off" - execute vSwitch and VNF

"trafficgen-pause" - execute vSwitch and VNF but wait before traffic transmission

If VSPERF is executed in “trafficgen” mode, the configuration of the traffic generator can be modified through the TRAFFIC dictionary passed to the --test-params option. It is not necessary to specify all values of the TRAFFIC dictionary; it is sufficient to specify only the values that should be changed. A detailed description of the TRAFFIC dictionary can be found at Configuration of TRAFFIC dictionary.

Example of execution of VSPERF in “trafficgen” mode:

$ ./vsperf -m trafficgen --trafficgen IxNet --conf-file vsperf.conf \
    --test-params "TRAFFIC={'traffic_type':'rfc2544_continuous','bidir':'False','framerate':60}"
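The partial update of the TRAFFIC dictionary can be pictured as a dictionary merge in which only the supplied keys change. In this Python sketch, the defaults are placeholders rather than VSPERF's real values. The merge is also shallow: a nested sub-dictionary given as an override would replace the default one entirely, which may differ from VSPERF's own behaviour.

```python
import copy

# Placeholder defaults; the real defaults live in VSPERF's configuration files.
DEFAULT_TRAFFIC = {
    'traffic_type': 'rfc2544_throughput',
    'bidir': 'True',
    'framerate': 100,
    'multistream': 0,
}


def merge_traffic(defaults, overrides):
    """Return a copy of defaults with only the overridden keys replaced."""
    merged = copy.deepcopy(defaults)  # leave the defaults untouched
    merged.update(overrides)          # shallow, key-by-key replacement
    return merged


overrides = {'traffic_type': 'rfc2544_continuous', 'bidir': 'False', 'framerate': 60}
print(merge_traffic(DEFAULT_TRAFFIC, overrides))
# {'traffic_type': 'rfc2544_continuous', 'bidir': 'False', 'framerate': 60, 'multistream': 0}
```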

1.24. Code change verification by pylint¶

Every developer participating in the VSPERF project should run pylint before their Python code is submitted for review. The project-specific configuration for pylint is available in ‘pylintrc’.

Example of manual pylint invocation:

$ pylint --rcfile ./pylintrc ./vsperf

1.25. GOTCHAs:¶

1.25.1. Custom image fails to boot¶

Custom VM images may fail to boot within VSPerf pxp testing because of the boot and shared drive types, which could be caused by a missing SCSI driver inside the image. In case of issues, you can try changing the drive types to ide:

GUEST_BOOT_DRIVE_TYPE = ['ide']

GUEST_SHARED_DRIVE_TYPE = ['ide']

1.25.2. OVS with DPDK and QEMU¶

If you encounter the following error during QEMU initialization: “before (last 100 chars): ‘-path=/dev/hugepages,share=on: unable to map backing store for hugepages: Cannot allocate memory\r\n\r\n”, check the amount of hugepages on your system:

$ cat /proc/meminfo | grep HugePages
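The HugePages_* counters printed by this command can also be checked programmatically. The function below is an illustrative sketch that parses /proc/meminfo-style text. The sample input is made up for the example and is not a measurement.

```python
def hugepage_info(meminfo_text):
    """Collect the HugePages_* counters from /proc/meminfo-style text."""
    info = {}
    for line in meminfo_text.splitlines():
        key, sep, value = line.partition(":")
        # 'Hugepagesize' is skipped because it does not start with 'HugePages_'.
        if sep and key.startswith("HugePages_"):
            info[key] = int(value.split()[0])
    return info


sample = """MemTotal:       65839212 kB
HugePages_Total:       8
HugePages_Free:        0
HugePages_Rsvd:        0
HugePages_Surp:        0
Hugepagesize:    1048576 kB"""
info = hugepage_info(sample)
print(info)
# {'HugePages_Total': 8, 'HugePages_Free': 0, 'HugePages_Rsvd': 0, 'HugePages_Surp': 0}
if info.get("HugePages_Free", 0) == 0:
    print("no free hugepages left for QEMU")
```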

By default, vswitchd is launched with 1 GB of memory. To change this, modify the --socket-mem parameter in conf/02_vswitch.conf to allocate an appropriate amount of memory:

VSWITCHD_DPDK_ARGS = ['-c', '0x4', '-n', '4', '--socket-mem 1024,0']

VSWITCHD_DPDK_CONFIG = {

'dpdk-init' : 'true',

'dpdk-lcore-mask' : '0x4',

'dpdk-socket-mem' : '1024,0',

}

Note: Option VSWITCHD_DPDK_ARGS is used for vswitchd versions that support the --dpdk parameter. In recent vswitchd versions, option VSWITCHD_DPDK_CONFIG will be used to configure vswitchd via ovs-vsctl calls.

1.26. More information¶

For more information and details, refer to the rest of the vSwitchPerf user documentation.

2. Step driven tests¶

In general, test scenarios are defined by the deployment used in the particular test case definition. The chosen deployment scenario takes care of the vSwitch configuration and the deployment of VNFs, and it can also affect the configuration of a traffic generator. To allow more flexible testcase scripting, VSPERF supports a detailed step driven testcase definition. It can be used to configure and program the vSwitch, deploy and terminate VNFs, execute a traffic generator, modify the VSPERF configuration, execute external commands, etc.

Step driven tests are executed step by step, starting with step 0 as defined inside the test case. Each step of the test increments the step number by one, which is indicated in the log:

(testcases.integration) - Step 0 'vswitch add_vport ['br0']' start

Step driven tests can be used for both performance and integration testing. In case of integration test, each step in the test case is validated. If a step does not pass validation the test will fail and terminate. The test will continue until a failure is detected or all steps pass. A csv report file is generated after a test completes with an OK or FAIL result.

In case of performance test, the validation of steps is not performed and standard output files with results from traffic generator and underlying OS details are generated by vsperf.

Step driven testcases can be used in two different ways:

1. description of full testcase - in this case the clean deployment is used to indicate that vsperf should neither configure the vSwitch nor deploy any VNF. The test shall perform all required vSwitch configuration and programming and deploy the required number of VNFs.

2. modification of existing deployment - in this case, any of the supported deployments can be used to perform the initial vSwitch configuration and deployment of VNFs. Additional actions defined by TestSteps can be used to alter the vSwitch configuration or deploy additional VNFs. After the last step is processed, the test execution will continue with traffic execution.

2.1. Test objects and their functions¶

Every test step can call a function of one of the supported test objects. The list of supported objects and their most common functions follows:

vswitch - provides functions for vSwitch configuration

List of supported functions:

- add_switch br_name - creates a new switch (bridge) with given br_name

- del_switch br_name - deletes switch (bridge) with given br_name

- add_phy_port br_name - adds a physical port into bridge specified by br_name

- add_vport br_name - adds a virtual port into bridge specified by br_name

- del_port br_name port_name - removes physical or virtual port specified by port_name from bridge br_name

- add_flow br_name flow - adds flow specified by flow dictionary into the bridge br_name; the content of the flow dictionary will be passed to the vSwitch. In case of Open vSwitch it will be passed to the ovs-ofctl add-flow command. Please see Open vSwitch documentation for the list of supported flow parameters.

- del_flow br_name [flow] - deletes flow specified by flow dictionary from bridge br_name; if the optional parameter flow is not specified or is set to an empty dictionary {}, then all flows from bridge br_name will be deleted.

- dump_flows br_name - dumps all flows from bridge specified by br_name

- enable_stp br_name - enables Spanning Tree Protocol for bridge br_name

- disable_stp br_name - disables Spanning Tree Protocol for bridge br_name

- enable_rstp br_name - enables Rapid Spanning Tree Protocol for bridge br_name

- disable_rstp br_name - disables Rapid Spanning Tree Protocol for bridge br_name

Examples:

['vswitch', 'add_switch', 'int_br0']
['vswitch', 'del_switch', 'int_br0']
['vswitch', 'add_phy_port', 'int_br0']
['vswitch', 'del_port', 'int_br0', '#STEP[2][0]']
['vswitch', 'add_flow', 'int_br0', {'in_port': '1', 'actions': ['output:2'], 'idle_timeout': '0'}]
['vswitch', 'enable_rstp', 'int_br0']

vnf[ID] - provides functions for deployment and termination of VNFs; the optional alphanumerical ID is used for VNF identification in case the testcase deploys multiple VNFs.

List of supported functions:

- start - starts a VNF based on VSPERF configuration

- stop - gracefully terminates given VNF

Examples:

['vnf1', 'start']
['vnf2', 'start']
['vnf2', 'stop']
['vnf1', 'stop']

trafficgen - triggers traffic generation

List of supported functions:

- send_traffic traffic - starts traffic based on the vsperf configuration and the given traffic dictionary. More details about the traffic dictionary and its possible values are available in the Traffic Generator Integration Guide.

Examples:

['trafficgen', 'send_traffic', {'traffic_type' : 'rfc2544_throughput'}]
['trafficgen', 'send_traffic', {'traffic_type' : 'rfc2544_back2back', 'bidir' : 'True'}]

settings - reads or modifies VSPERF configuration

List of supported functions:

- getValue param - returns value of given param

- setValue param value - sets value of param to given value

Examples:

['settings', 'getValue', 'TOOLS']
['settings', 'setValue', 'GUEST_USERNAME', ['root']]

namespace - creates or modifies network namespaces

List of supported functions:

- create_namespace name - creates new namespace with given name

- delete_namespace name - deletes namespace specified by its name

- assign_port_to_namespace port name [port_up] - assigns NIC specified by port into given namespace name; if the optional parameter port_up is set to True, then the port will be brought up.

- add_ip_to_namespace_eth port name addr cidr - assigns an IP address addr/cidr to the NIC specified by port within namespace name

- reset_port_to_root port name - returns given port from namespace name back to the root namespace

Examples:

['namespace', 'create_namespace', 'testns']
['namespace', 'assign_port_to_namespace', 'eth0', 'testns']

veth - manipulates eth and veth devices

List of supported functions:

- add_veth_port port peer_port - adds a pair of veth ports named port and peer_port

- del_veth_port port peer_port - deletes a veth port pair specified by port and peer_port

- bring_up_eth_port eth_port [namespace] - brings up eth_port in (optional) namespace

Examples:

['veth', 'add_veth_port', 'veth', 'veth1']
['veth', 'bring_up_eth_port', 'eth1']

tools - provides a set of helper functions

List of supported functions:

- Assert condition - evaluates given condition and raises AssertionError in case that condition is not True

- Eval expression - evaluates given expression as Python code and returns its result

- Exec command [regex] - executes a shell command and filters its output by (optional) regular expression

Examples:

['tools', 'exec', 'numactl -H', 'available: ([0-9]+)']
['tools', 'assert', '#STEP[-1][0]>1']

wait - is used for test case interruption. This object doesn’t have any functions. Once reached, vsperf will pause test execution and wait for the Enter key to be pressed. It can be used during testcase design for debugging purposes.

Examples:

['wait']

2.2. Test Macros¶

Test profiles can include macros as part of the test step. Each step in the profile may return a value such as a port name. Recall macros use #STEP to indicate the recalled value inside the return structure. If the method called by a test step returns a value, it can be recalled later, for example:

{

"Name": "vswitch_add_del_vport",

"Deployment": "clean",

"Description": "vSwitch - add and delete virtual port",

"TestSteps": [

['vswitch', 'add_switch', 'int_br0'], # STEP 0

['vswitch', 'add_vport', 'int_br0'], # STEP 1

['vswitch', 'del_port', 'int_br0', '#STEP[1][0]'], # STEP 2

['vswitch', 'del_switch', 'int_br0'], # STEP 3

]

}

This test profile uses the vswitch add_vport method, which returns information about the added port; its first item, recalled as #STEP[1][0], is the port name. The del_port method in step 2 then uses this name from step 1.

It is also possible to use negative indexes in step macros. In that case, #STEP[-1] refers to the result of the previous step, #STEP[-2] refers to the result of the step before the previous one, etc. This means that you could change STEP 2 of the previous example as follows to achieve the same functionality:

['vswitch', 'del_port', 'int_br0', '#STEP[-1][0]'], # STEP 2
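The following Python sketch shows how a recall macro can pick an item out of an earlier step's return value. It is an illustration under stated assumptions, not VSPERF's implementation. It handles only the two-index form #STEP[i][j], it converts the recalled item to a string, and it uses hypothetical return values for add_switch and add_vport.

```python
import re

STEP_MACRO = re.compile(r"#STEP\[(-?\d+)\]\[(\d+)\]")


def expand_macros(value, results):
    """Replace #STEP[i][j] with results[i][j] inside strings, lists and dicts.

    results holds the return values of the steps executed so far, so a
    negative i counts back from the step currently being prepared.
    """
    if isinstance(value, str):
        return STEP_MACRO.sub(
            lambda m: str(results[int(m.group(1))][int(m.group(2))]), value)
    if isinstance(value, list):
        return [expand_macros(item, results) for item in value]
    if isinstance(value, dict):
        return {key: expand_macros(item, results) for key, item in value.items()}
    return value


# Hypothetical results: step 0 (add_switch) returned None,
# step 1 (add_vport) returned a (port_name, port_number) pair.
results = [None, ('dpdkvhostuser0', '3')]
print(expand_macros(['vswitch', 'del_port', 'int_br0', '#STEP[-1][0]'], results))
# ['vswitch', 'del_port', 'int_br0', 'dpdkvhostuser0']
print(expand_macros({'actions': ['output:#STEP[1][1]']}, results))
# {'actions': ['output:3']}
```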

Commonly used steps can also be defined as a separate profile:

STEP_VSWITCH_PVP_INIT = [

['vswitch', 'add_switch', 'int_br0'], # STEP 0

['vswitch', 'add_phy_port', 'int_br0'], # STEP 1

['vswitch', 'add_phy_port', 'int_br0'], # STEP 2

['vswitch', 'add_vport', 'int_br0'], # STEP 3

['vswitch', 'add_vport', 'int_br0'], # STEP 4

]

This profile can then be used inside other testcases:

{

"Name": "vswitch_pvp",

"Deployment": "clean",

"Description": "vSwitch - configure switch and one vnf",

"TestSteps": STEP_VSWITCH_PVP_INIT +

[

['vnf', 'start'],

['vnf', 'stop'],

] +

STEP_VSWITCH_PVP_FINIT

}
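Because profiles are plain Python lists, combining them is list concatenation, and #STEP indices refer to absolute positions in the combined list. The sketch below uses a hypothetical STEP_VSWITCH_PVP_FINIT_EXAMPLE, since the content of STEP_VSWITCH_PVP_FINIT is not shown here. After the five initialization steps, the VNF steps land at positions 5 and 6. Absolute indices such as #STEP[1][0] in the tear-down therefore still point at the ports created during initialization.

```python
STEP_VSWITCH_PVP_INIT = [
    ['vswitch', 'add_switch', 'int_br0'],    # STEP 0
    ['vswitch', 'add_phy_port', 'int_br0'],  # STEP 1
    ['vswitch', 'add_phy_port', 'int_br0'],  # STEP 2
    ['vswitch', 'add_vport', 'int_br0'],     # STEP 3
    ['vswitch', 'add_vport', 'int_br0'],     # STEP 4
]

# Hypothetical tear-down profile, for illustration only.
STEP_VSWITCH_PVP_FINIT_EXAMPLE = [
    ['vswitch', 'del_port', 'int_br0', '#STEP[1][0]'],
    ['vswitch', 'del_port', 'int_br0', '#STEP[2][0]'],
    ['vswitch', 'del_port', 'int_br0', '#STEP[3][0]'],
    ['vswitch', 'del_port', 'int_br0', '#STEP[4][0]'],
    ['vswitch', 'del_switch', 'int_br0'],
]

test_steps = (STEP_VSWITCH_PVP_INIT
              + [['vnf', 'start'], ['vnf', 'stop']]
              + STEP_VSWITCH_PVP_FINIT_EXAMPLE)

print(len(test_steps))                     # 12
print(test_steps.index(['vnf', 'start']))  # 5
```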

2.3. HelloWorld and other basic Testcases¶

The following examples are for demonstration purposes. You can run them by copying and pasting into the conf/integration/01_testcases.conf file. A command-line instruction is shown at the end of each example.

2.3.1. HelloWorld¶

The first example is a HelloWorld testcase. It simply creates a bridge with 2 physical ports, then sets up a flow to drop incoming packets from the port that was instantiated at STEP #1. There’s no interaction with the traffic generator. Then the flow, the 2 ports and the bridge are deleted. The ‘add_phy_port’ method creates a ‘dpdk’ type interface that will manage the physical port. The first item of its return value (#STEP[n][0]) is the port name, which is referred to by ‘del_port’ later on; the second item (#STEP[n][1]) is the port number used in the flow definition.

{

"Name": "HelloWorld",

"Description": "My first testcase",

"Deployment": "clean",

"TestSteps": [

['vswitch', 'add_switch', 'int_br0'], # STEP 0

['vswitch', 'add_phy_port', 'int_br0'], # STEP 1

['vswitch', 'add_phy_port', 'int_br0'], # STEP 2

['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[1][1]', \

'actions': ['drop'], 'idle_timeout': '0'}],

['vswitch', 'del_flow', 'int_br0'],

['vswitch', 'del_port', 'int_br0', '#STEP[1][0]'],

['vswitch', 'del_port', 'int_br0', '#STEP[2][0]'],

['vswitch', 'del_switch', 'int_br0'],

]

},

To run HelloWorld test:

./vsperf --conf-file user_settings.py --integration HelloWorld

2.3.2. Specify a Flow by the IP address¶

The next example shows how to explicitly set up a flow by specifying a destination IP address. All packets received from the port created at STEP #1 that have the destination IP address 90.90.90.90 will be forwarded to the port created at STEP #2.

{
    "Name": "p2p_rule_l3da",
    "Description": "Phy2Phy with rule on L3 Dest Addr",
    "Deployment": "clean",
    "biDirectional": "False",
    "TestSteps": [
        ['vswitch', 'add_switch', 'int_br0'],       # STEP 0
        ['vswitch', 'add_phy_port', 'int_br0'],     # STEP 1
        ['vswitch', 'add_phy_port', 'int_br0'],     # STEP 2
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[1][1]', \
            'dl_type': '0x0800', 'nw_dst': '90.90.90.90', \
            'actions': ['output:#STEP[2][1]'], 'idle_timeout': '0'}],
        ['trafficgen', 'send_traffic', \
            {'traffic_type' : 'rfc2544_continuous'}],
        ['vswitch', 'dump_flows', 'int_br0'],       # STEP 5
        ['vswitch', 'del_flow', 'int_br0'],         # STEP 6 == del-flows
        ['vswitch', 'del_port', 'int_br0', '#STEP[1][0]'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[2][0]'],
        ['vswitch', 'del_switch', 'int_br0'],
    ]
},

To run the test:

./vsperf --conf-file user_settings.py --integration p2p_rule_l3da
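The flow dictionary passed to ‘add_flow’ is an OpenFlow match plus a list of actions. As an illustration, the hypothetical helper flow_to_ofctl below renders such a dictionary in the comma-separated field=value syntax accepted by ovs-ofctl add-flow, with the actions field last as that syntax requires. The port numbers 1 and 2 are assumed values standing in for #STEP[1][1] and #STEP[2][1]. VSPERF's own conversion code may order or format the fields differently.

```python
def flow_to_ofctl(flow):
    """Render a flow dictionary as an ovs-ofctl add-flow style string.

    Every key except 'actions' becomes key=value in dictionary order; the
    actions list is joined with commas and appended last.
    """
    fields = [f"{key}={value}" for key, value in flow.items() if key != 'actions']
    actions = ','.join(flow.get('actions', []))
    return ','.join(fields + [f"actions={actions}"])

flow = {
    'in_port': '1',            # assumed port number of the STEP 1 port
    'dl_type': '0x0800',       # EtherType 0x0800: match IPv4 only
    'nw_dst': '90.90.90.90',   # destination IPv4 address to match
    'actions': ['output:2'],   # assumed port number of the STEP 2 port
    'idle_timeout': '0',       # 0: the flow never expires when idle
}
print(flow_to_ofctl(flow))
```

The printed string has the form of the flow argument of "ovs-ofctl add-flow int_br0 ...".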

2.3.3. Multistream feature

The next testcase uses the multistream feature. The traffic generator sends packets with different destination UDP ports, as configured by the “multistream” and “stream_type” keys of the ‘TRAFFIC’ dictionary in “Parameters”. Four flows, one for each destination UDP port from 0 to 3, forward all incoming packets to the port created at STEP #2.

{
    "Name": "multistream_l4",
    "Description": "Multistream on UDP ports",
    "Deployment": "clean",
    "Parameters": {
        'TRAFFIC' : {
            "multistream": 4,
            "stream_type": "L4",
        },
    },
    "TestSteps": [
        ['vswitch', 'add_switch', 'int_br0'],       # STEP 0
        ['vswitch', 'add_phy_port', 'int_br0'],     # STEP 1
        ['vswitch', 'add_phy_port', 'int_br0'],     # STEP 2
        # Setup Flows
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[1][1]', \
            'dl_type': '0x0800', 'nw_proto': '17', 'udp_dst': '0', \
            'actions': ['output:#STEP[2][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[1][1]', \
            'dl_type': '0x0800', 'nw_proto': '17', 'udp_dst': '1', \
            'actions': ['output:#STEP[2][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[1][1]', \
            'dl_type': '0x0800', 'nw_proto': '17', 'udp_dst': '2', \
            'actions': ['output:#STEP[2][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[1][1]', \
            'dl_type': '0x0800', 'nw_proto': '17', 'udp_dst': '3', \
            'actions': ['output:#STEP[2][1]'], 'idle_timeout': '0'}],
        # Send mono-dir traffic
        ['trafficgen', 'send_traffic', \
            {'traffic_type' : 'rfc2544_continuous', \
            'bidir' : 'False'}],
        # Clean up
        ['vswitch', 'del_flow', 'int_br0'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[1][0]'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[2][0]'],
        ['vswitch', 'del_switch', 'int_br0'],
    ]
},

To run the test:

./vsperf --conf-file user_settings.py --integration multistream_l4
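The four ‘add_flow’ steps above differ only in their ‘udp_dst’ value. Because the testcase file is Python, such steps could instead be generated, for example by a hypothetical helper like udp_port_flows below. They can then be spliced into TestSteps with ‘+’ at the same position, which leaves the numbering of the surrounding steps unchanged. This assumes, as the flows above do, that the generated streams use destination UDP ports 0 to multistream - 1.

```python
def udp_port_flows(in_port_ref, out_port_ref, streams, bridge='int_br0'):
    """Build one add_flow step per destination UDP port 0..streams-1."""
    return [
        ['vswitch', 'add_flow', bridge, {
            'in_port': in_port_ref,
            'dl_type': '0x0800',    # IPv4
            'nw_proto': '17',       # IP protocol 17 = UDP
            'udp_dst': str(port),   # one flow per generated stream
            'actions': [f'output:{out_port_ref}'],
            'idle_timeout': '0',
        }]
        for port in range(streams)
    ]

# Splice the generated flows between the port setup and the traffic step.
test_steps = [
    ['vswitch', 'add_switch', 'int_br0'],    # STEP 0
    ['vswitch', 'add_phy_port', 'int_br0'],  # STEP 1
    ['vswitch', 'add_phy_port', 'int_br0'],  # STEP 2
] + udp_port_flows('#STEP[1][1]', '#STEP[2][1]', 4) + [  # STEPs 3-6
    ['trafficgen', 'send_traffic',
     {'traffic_type': 'rfc2544_continuous', 'bidir': 'False'}],  # STEP 7
]
```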

2.3.4. PVP with a VM Replacement

This example launches a first VM in a PVP topology and then replaces it with a second VM. When the VNF setup parameter in ./conf/04_vnf.conf is “QemuDpdkVhostUser”, the ‘add_vport’ method creates a ‘dpdkvhostuser’ type port to connect a VM.

{
    "Name": "ex_replace_vm",
    "Description": "PVP with VM replacement",
    "Deployment": "clean",
    "TestSteps": [
        ['vswitch', 'add_switch', 'int_br0'],       # STEP 0
        ['vswitch', 'add_phy_port', 'int_br0'],     # STEP 1
        ['vswitch', 'add_phy_port', 'int_br0'],     # STEP 2
        ['vswitch', 'add_vport', 'int_br0'],        # STEP 3 vm1
        ['vswitch', 'add_vport', 'int_br0'],        # STEP 4
        # Setup Flows
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[1][1]', \
            'actions': ['output:#STEP[3][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[4][1]', \
            'actions': ['output:#STEP[2][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[2][1]', \
            'actions': ['output:#STEP[4][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[3][1]', \
            'actions': ['output:#STEP[1][1]'], 'idle_timeout': '0'}],
        # Start VM 1
        ['vnf1', 'start'],
        # Now we want to replace VM 1 with another VM
        ['vnf1', 'stop'],
        ['vswitch', 'add_vport', 'int_br0'],        # STEP 11 vm2
        ['vswitch', 'add_vport', 'int_br0'],        # STEP 12
        ['vswitch', 'del_flow', 'int_br0'],
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[1][1]', \
            'actions': ['output:#STEP[11][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[12][1]', \
            'actions': ['output:#STEP[2][1]'], 'idle_timeout': '0'}],
        # Start VM 2
        ['vnf2', 'start'],
        ['vnf2', 'stop'],
        ['vswitch', 'dump_flows', 'int_br0'],
        # Clean up
        ['vswitch', 'del_flow', 'int_br0'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[1][0]'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[2][0]'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[3][0]'],    # vm1
        ['vswitch', 'del_port', 'int_br0', '#STEP[4][0]'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[11][0]'],   # vm2
        ['vswitch', 'del_port', 'int_br0', '#STEP[12][0]'],
        ['vswitch', 'del_switch', 'int_br0'],
    ]
},

To run the test:

./vsperf --conf-file user_settings.py --integration ex_replace_vm
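In this testcase the replacement ports are referenced as #STEP[11] and #STEP[12]. Adding or removing a step earlier in the list silently shifts these indices. Python comments are not steps and do not count. A small, hypothetical check such as forward_refs below can flag a reference to a step that has not run yet. It cannot detect a reference that points at the wrong earlier step.

```python
import re

# Captures the step index n from any '#STEP[n]...' reference.
STEP_INDEX = re.compile(r'#STEP\[(\d+)\]')

def forward_refs(steps):
    """Return (step, referenced_step) pairs where a step refers to itself
    or to a later step, i.e. to a result that does not exist yet."""
    problems = []
    for index, step in enumerate(steps):
        # repr() flattens nested lists/dicts so every string is searched.
        for match in STEP_INDEX.finditer(repr(step)):
            ref = int(match.group(1))
            if ref >= index:
                problems.append((index, ref))
    return problems

steps = [
    ['vswitch', 'add_switch', 'int_br0'],               # STEP 0
    ['vswitch', 'add_vport', 'int_br0'],                # STEP 1
    ['vswitch', 'del_port', 'int_br0', '#STEP[1][0]'],  # STEP 2: valid
    ['vswitch', 'del_port', 'int_br0', '#STEP[4][0]'],  # STEP 3: STEP 4 has not run
]
print(forward_refs(steps))
```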

2.3.5. VM with a Linux bridge

This example sets up a PVP topology and routes traffic to the VM based on the destination IP address. A command-line parameter selects a Linux bridge as the guest loopback application. The guest loopback application can also be selected with the configuration option GUEST_LOOPBACK.

{
    "Name": "ex_pvp_rule_l3da",
    "Description": "PVP with flow on L3 Dest Addr",
    "Deployment": "clean",
    "TestSteps": [
        ['vswitch', 'add_switch', 'int_br0'],       # STEP 0
        ['vswitch', 'add_phy_port', 'int_br0'],     # STEP 1
        ['vswitch', 'add_phy_port', 'int_br0'],     # STEP 2
        ['vswitch', 'add_vport', 'int_br0'],        # STEP 3 vm1
        ['vswitch', 'add_vport', 'int_br0'],        # STEP 4
        # Setup Flows
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[1][1]', \
            'dl_type': '0x0800', 'nw_dst': '90.90.90.90', \
            'actions': ['output:#STEP[3][1]'], 'idle_timeout': '0'}],
        # Each pkt from the VM is forwarded to the 2nd dpdk port
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[4][1]', \
            'actions': ['output:#STEP[2][1]'], 'idle_timeout': '0'}],
        # Start VM
        ['vnf1', 'start'],
        ['trafficgen', 'send_traffic', \
            {'traffic_type' : 'rfc2544_continuous', \
            'bidir' : 'False'}],
        ['vnf1', 'stop'],
        # Clean up
        ['vswitch', 'dump_flows', 'int_br0'],       # STEP 10
        ['vswitch', 'del_flow', 'int_br0'],         # STEP 11
        ['vswitch', 'del_port', 'int_br0', '#STEP[1][0]'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[2][0]'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[3][0]'],    # vm1 ports
        ['vswitch', 'del_port', 'int_br0', '#STEP[4][0]'],
        ['vswitch', 'del_switch', 'int_br0'],
    ]
},

To run the test:

./vsperf --conf-file user_settings.py --test-params "GUEST_LOOPBACK=['linux_bridge']" --integration ex_pvp_rule_l3da
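The --test-params value is a string of NAME=value assignments. The sketch below is an illustration, not the parser VSPERF uses. It assumes that multiple assignments are separated by semicolons and that each value is a Python literal. The hypothetical parse_test_params would mis-split a value that itself contains a semicolon. Because GUEST_LOOPBACK is also a configuration option, the same choice could presumably be written in user_settings.py as GUEST_LOOPBACK = ['linux_bridge'].

```python
import ast

def parse_test_params(param_string):
    """Split 'NAME=value;NAME2=value2' and evaluate each value as a Python literal."""
    params = {}
    for item in param_string.split(';'):
        if not item.strip():
            continue  # tolerate a trailing or doubled separator
        # Split on the first '=' only, so values may contain '='.
        name, _, value = item.partition('=')
        params[name.strip()] = ast.literal_eval(value.strip())
    return params

print(parse_test_params("GUEST_LOOPBACK=['linux_bridge']"))
```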

2.3.6. Forward packets based on UDP port

This example launches two VMs connected in parallel. Incoming packets are forwarded to one of the VMs depending on their destination UDP port.

{
    "Name": "ex_2pvp_rule_l4dp",
    "Description": "2 PVP with flows on L4 Dest Port",
    "Deployment": "clean",
    "Parameters": {
        'TRAFFIC' : {
            "multistream": 2,
            "stream_type": "L4",
        },
    },
    "TestSteps": [
        ['vswitch', 'add_switch', 'int_br0'],       # STEP 0
        ['vswitch', 'add_phy_port', 'int_br0'],     # STEP 1
        ['vswitch', 'add_phy_port', 'int_br0'],     # STEP 2
        ['vswitch', 'add_vport', 'int_br0'],        # STEP 3 vm1
        ['vswitch', 'add_vport', 'int_br0'],        # STEP 4
        ['vswitch', 'add_vport', 'int_br0'],        # STEP 5 vm2
        ['vswitch', 'add_vport', 'int_br0'],        # STEP 6
        # Set up flows to reply to ICMPv6 and similar packets, so as to
        # avoid flooding the internal port with their retransmissions
        ['vswitch', 'add_flow', 'int_br0', \
            {'priority': '1', 'dl_src': '00:00:00:00:00:01', \
            'actions': ['output:#STEP[3][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', \
            {'priority': '1', 'dl_src': '00:00:00:00:00:02', \
            'actions': ['output:#STEP[4][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', \
            {'priority': '1', 'dl_src': '00:00:00:00:00:03', \
            'actions': ['output:#STEP[5][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', \
            {'priority': '1', 'dl_src': '00:00:00:00:00:04', \
            'actions': ['output:#STEP[6][1]'], 'idle_timeout': '0'}],
        # Forward UDP packets depending on dest port
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[1][1]', \
            'dl_type': '0x0800', 'nw_proto': '17', 'udp_dst': '0', \
            'actions': ['output:#STEP[3][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[1][1]', \
            'dl_type': '0x0800', 'nw_proto': '17', 'udp_dst': '1', \
            'actions': ['output:#STEP[5][1]'], 'idle_timeout': '0'}],
        # Send VM output to phy port #2
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[4][1]', \
            'actions': ['output:#STEP[2][1]'], 'idle_timeout': '0'}],
        ['vswitch', 'add_flow', 'int_br0', {'in_port': '#STEP[6][1]', \
            'actions': ['output:#STEP[2][1]'], 'idle_timeout': '0'}],
        # Start VMs
        ['vnf1', 'start'],                          # STEP 15
        ['vnf2', 'start'],                          # STEP 16
        ['trafficgen', 'send_traffic', \
            {'traffic_type' : 'rfc2544_continuous', \
            'bidir' : 'False'}],
        ['vnf1', 'stop'],
        ['vnf2', 'stop'],
        ['vswitch', 'dump_flows', 'int_br0'],
        # Clean up
        ['vswitch', 'del_flow', 'int_br0'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[1][0]'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[2][0]'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[3][0]'],    # vm1 ports
        ['vswitch', 'del_port', 'int_br0', '#STEP[4][0]'],
        ['vswitch', 'del_port', 'int_br0', '#STEP[5][0]'],    # vm2 ports
        ['vswitch', 'del_port', 'int_br0', '#STEP[6][0]'],
        ['vswitch', 'del_switch', 'int_br0'],
    ]
},

To run the test:

./vsperf --conf-file user_settings.py --integration ex_2pvp_rule_l4dp
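The two kinds of flows in this testcase overlap: a UDP packet may match both a priority-1 ‘dl_src’ flow and a ‘udp_dst’ flow. The UDP flows specify no priority. Assuming they reach Open vSwitch that way, they get the ovs-ofctl default priority of 32768 and take precedence. The simplified lookup below illustrates that rule with exact-match fields only. Real OpenFlow matching also handles wildcards, masks and field prerequisites. The OpenFlow port numbers used here (1 for the first physical port, 3 and 5 for the VM input ports) are assumed.

```python
DEFAULT_PRIORITY = 32768  # ovs-ofctl default when a flow sets no priority

def lookup(packet, flows):
    """Return the actions of the highest-priority flow whose match fields
    all equal the packet's fields, or None if no flow matches."""
    best = None
    for flow in flows:
        match = {k: v for k, v in flow.items()
                 if k not in ('actions', 'priority', 'idle_timeout')}
        if all(packet.get(k) == v for k, v in match.items()):
            priority = int(flow.get('priority', DEFAULT_PRIORITY))
            if best is None or priority > best[0]:
                best = (priority, flow['actions'])
    return best[1] if best else None

flows = [
    {'priority': '1', 'dl_src': '00:00:00:00:00:01',
     'actions': ['output:3'], 'idle_timeout': '0'},
    {'in_port': '1', 'dl_type': '0x0800', 'nw_proto': '17', 'udp_dst': '0',
     'actions': ['output:3'], 'idle_timeout': '0'},
    {'in_port': '1', 'dl_type': '0x0800', 'nw_proto': '17', 'udp_dst': '1',
     'actions': ['output:5'], 'idle_timeout': '0'},
]

# Matches the priority-1 dl_src flow and the udp_dst=1 flow; the latter wins.
packet = {'in_port': '1', 'dl_src': '00:00:00:00:00:01',
          'dl_type': '0x0800', 'nw_proto': '17', 'udp_dst': '1'}
print(lookup(packet, flows))
```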

2.3.7. Modification of existing PVVP deployment

This example modifies a standard deployment scenario with additional TestSteps. The standard PVVP scenario configures a vSwitch and deploys two VNFs connected in series. The additional TestSteps deploy a third VNF and connect it in parallel to the already configured VNFs. The traffic generator is instructed (by the Multistream feature) to send two separate traffic streams: one to the standalone VNF and the other to the two chained VNFs.