VSPERF Configuration and User Guide¶

Introduction¶

VSPERF is an OPNFV testing project.

VSPERF provides an automated test framework and a comprehensive test suite, based on industry test specifications, for measuring NFVI data-plane performance. The data path includes switching technologies with physical and virtual network interfaces. The VSPERF architecture is switch and traffic generator agnostic, and test cases can be easily customized. VSPERF was designed to be independent of OpenStack, so OPNFV installer scenarios are not required. VSPERF can source, configure and deploy the device under test using specified software versions and network topology. VSPERF is used as a development tool for optimizing switching technologies, for qualifying packet processing functions and for evaluating data-path performance.

The Euphrates release adds new features and improvements that will help advance high performance packet processing on Telco NFV platforms. This includes new test cases, flexibility in customizing test-cases, new results display options, improved tool resiliency, additional traffic generator support and VPP support.

VSPERF provides a framework in which the entire NFV industry can learn about NFVI data-plane performance and try out new techniques together. A new IETF benchmarking specification (RFC 8204) is based on VSPERF work contributed since 2015. VSPERF is also contributing to the development of ETSI NFV test specifications through the Test and Open Source Working Group.

- Wiki: https://wiki.opnfv.org/characterize_vswitch_performance_for_telco_nfv_use_cases

- Repository: https://git.opnfv.org/vswitchperf

- Artifacts: https://artifacts.opnfv.org/vswitchperf.html

- Continuous Integration: https://build.opnfv.org/ci/view/vswitchperf/

VSPERF Install and Configuration¶

1. Installing vswitchperf¶

1.1. Downloading vswitchperf¶

vswitchperf can be downloaded from its official git repository, which is hosted by OPNFV. Git must be installed on the DUT before vswitchperf can be downloaded. How git is installed depends on the packaging system used by the Linux OS on the DUT.

Examples of installing the git package and its dependencies:

On an OS based on Red Hat Linux:

sudo yum install git

On Ubuntu or Debian:

sudo apt-get install git

Once git is installed on the DUT, vswitchperf can be downloaded as follows:

git clone http://git.opnfv.org/vswitchperf

This command creates a directory named vswitchperf containing a local copy of the vswitchperf repository.

1.2. Supported Operating Systems¶

- CentOS 7.3

- Fedora 24 (kernel 4.8 requires DPDK 16.11 and newer)

- Fedora 25 (kernel 4.9 requires DPDK 16.11 and newer)

- openSUSE 42.2

- openSUSE 42.3

- RedHat 7.2 Enterprise Linux

- RedHat 7.3 Enterprise Linux

- Ubuntu 14.04

- Ubuntu 16.04

- Ubuntu 16.10 (kernel 4.8 requires DPDK 16.11 and newer)

1.3. Supported vSwitches¶

The vSwitch must support Open Flow 1.3 or greater.

- Open vSwitch

- Open vSwitch with DPDK support

- TestPMD application from DPDK (supports p2p and pvp scenarios)

- Cisco VPP

1.4. Supported Hypervisors¶

- Qemu version 2.3 or greater (version 2.5.0 is recommended)

1.5. Supported VNFs¶

In theory, any VNF image that is compatible with a supported hypervisor can be used. However, such a VNF must ensure that an appropriate number of network interfaces is configured and that traffic is properly forwarded among them. New vswitchperf users are advised to start with the official vloop-vnf image, which is maintained by the vswitchperf community.

1.5.1. vloop-vnf¶

The official VM image is called vloop-vnf and is available for free download from the OPNFV artifactory. The image is based on the Ubuntu Linux distribution and supports the following applications for traffic forwarding:

- DPDK testpmd

- Linux Bridge

- Custom l2fwd module

The vloop-vnf image can be downloaded to the DUT, for example with wget:

wget http://artifacts.opnfv.org/vswitchperf/vnf/vloop-vnf-ubuntu-14.04_20160823.qcow2

NOTE: If wget is not installed on your DUT, you can install it with sudo yum install wget on an RPM-based system or with sudo apt-get install wget on a DEB-based system.

Changelog of vloop-vnf:

- vloop-vnf-ubuntu-14.04_20160823

  - ethtool installed

  - only 1 NIC is configured by default to speed up boot with 1 NIC setup

  - security updates applied

- vloop-vnf-ubuntu-14.04_20160804

  - Linux kernel 4.4.0 installed

  - libnuma-dev installed

  - security updates applied

- vloop-vnf-ubuntu-14.04_20160303

  - snmpd service is disabled by default to avoid error messages during VM boot

  - security updates applied

- vloop-vnf-ubuntu-14.04_20151216

  - version with development tools required for build of DPDK and l2fwd

1.6. Installation¶

The test suite requires Python 3.3 or newer and relies on a number of other system and python packages. These need to be installed for the test suite to function.

The script systems/build_base_machine.sh installs the required packages, prepares the Python 3 virtual environment and compiles OVS, DPDK and QEMU. It should be executed under the user account that will be used to run vsperf.

NOTE: Password-less sudo access must be configured for that user account before the script is executed.

$ cd systems

$ ./build_base_machine.sh

NOTE: You don’t need to go into any of the systems subdirectories. Simply run the top-level build_base_machine.sh; your OS will be detected automatically.

The build_base_machine.sh script installs all vsperf dependencies: system packages, Python 3.x and the required Python modules. On CentOS 7 or RHEL it installs Python 3.3 from an additional repository provided by Software Collections. The installation script also uses virtualenv to create a vsperf virtual environment, which is isolated from the default Python environment. This environment resides in a directory called vsperfenv in $HOME, which ensures that the system-wide Python installation is not modified or broken by the VSPERF installation. The complete list of Python packages installed inside the virtualenv can be found in the file requirements.txt, which is located in the vswitchperf repository.

NOTE: For RHEL 7.3 Enterprise and CentOS 7.3, OVS Vanilla is not built from upstream source due to kernel incompatibilities. Please see the instructions in the vswitchperf_design document for details on configuring OVS Vanilla for binary package usage.

1.6.1. VPP installation¶

VPP installation is now included as part of the VSPerf installation scripts.

In case of an error message about a missing file such as “Couldn’t open file /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7” you can resolve this issue by simply downloading the file.

$ wget https://dl.fedoraproject.org/pub/epel/RPM-GPG-KEY-EPEL-7

1.7. Using vswitchperf¶

You will need to activate the virtual environment every time you start a new shell session. Its activation is specific to your OS:

CentOS 7 and RHEL

$ scl enable python33 bash

$ source $HOME/vsperfenv/bin/activate

Fedora and Ubuntu

$ source $HOME/vsperfenv/bin/activate

Once the virtual environment is activated, VSPERF can be used. For example:

(vsperfenv) $ cd vswitchperf

(vsperfenv) $ ./vsperf --help

1.7.1. Gotcha¶

If you see the following error during environment activation:

$ source $HOME/vsperfenv/bin/activate

Badly placed ()'s.

then check what type of shell you are using:

$ echo $SHELL

/bin/tcsh

Check which activation scripts are available in $HOME/vsperfenv/bin:

$ ls $HOME/vsperfenv/bin/

activate activate.csh activate.fish activate_this.py

Then source the script that matches your shell:

$ source $HOME/vsperfenv/bin/activate.csh

1.7.2. Working Behind a Proxy¶

If you’re behind a proxy, you’ll likely want to configure this before running any of the above. For example:

export http_proxy=proxy.mycompany.com:123

export https_proxy=proxy.mycompany.com:123

1.7.3. Bind Tools DPDK¶

VSPerf supports the default DPDK bind tool as well as driverctl. The driverctl tool makes driver binding persistent across reboots. It is not provided by VSPerf, but can be downloaded from upstream sources. Once it is installed, set the bind tool to driverctl so that VSPERF can correctly bind cards for DPDK tests.

PATHS['dpdk']['src']['bind-tool'] = 'driverctl'

1.8. Hugepage Configuration¶

Systems running vsperf with DPDK and/or tests with guests must configure enough hugepages to support these configurations. A hugepage size of 1GB is recommended.

The number of hugepages needed depends on your vsperf configuration files. Each guest image requires 2048 MB by default, according to the default settings in the 04_vnf.conf file.

GUEST_MEMORY = ['2048']

The DPDK startup parameters also require a number of hugepages, depending on your configuration in the 02_vswitch.conf file.

DPDK_SOCKET_MEM = ['1024', '0']

NOTE: The DPDK_SOCKET_MEM option is used by all vSwitches with DPDK support, i.e. Open vSwitch, VPP and TestPMD.

VSPerf verifies that enough hugepages are free before executing test environments. If they are not, test initialization fails and testing stops.

NOTE: In some instances, after a test failure DPDK resources may not release the hugepages used in the DPDK configuration. It is recommended to configure a few extra hugepages so that VSPerf does not falsely detect that too few hugepages are free to execute the test environment. Normally DPDK would reuse previously allocated hugepages upon initialization.

Hugepage configuration varies by OS. Please refer to your OS documentation to set hugepages correctly. It is recommended to have the required number of hugepages allocated by default on reboot.

Information on hugepage requirements for dpdk can be found at http://dpdk.org/doc/guides/linux_gsg/sys_reqs.html

You can review your hugepage amounts by executing the following command:

cat /proc/meminfo | grep Huge

If no hugepages are available, vsperf will try to allocate some automatically. Allocation is controlled by the HUGEPAGE_RAM_ALLOCATION configuration parameter in the 02_vswitch.conf file. The default is 2GB, resulting in either two 1GB hugepages or 1024 2MB hugepages.
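As a rough illustration of the sizing described in this section, the following Python sketch adds up GUEST_MEMORY and DPDK_SOCKET_MEM and compares the total with the free hugepage memory found in /proc/meminfo style text. The helper names and the sample text are hypothetical, and the simple sum is an assumption; the check performed by VSPerf itself may differ.

```python
import re

def required_hugepage_mb(guest_memory, dpdk_socket_mem):
    """Sum guest memory and DPDK socket memory (lists of MB values as strings)."""
    return sum(int(m) for m in guest_memory) + sum(int(m) for m in dpdk_socket_mem)

def free_hugepage_mb(meminfo_text):
    """Return free hugepage memory in MB from /proc/meminfo style content."""
    free = int(re.search(r"^HugePages_Free:\s+(\d+)", meminfo_text, re.M).group(1))
    size_kb = int(re.search(r"^Hugepagesize:\s+(\d+) kB", meminfo_text, re.M).group(1))
    return free * size_kb // 1024

# Hypothetical sample; on a DUT read the text from /proc/meminfo instead.
SAMPLE_MEMINFO = """\
HugePages_Total:       4
HugePages_Free:        4
Hugepagesize:    1048576 kB
"""

needed = required_hugepage_mb(['2048'], ['1024', '0'])   # defaults shown above
available = free_hugepage_mb(SAMPLE_MEMINFO)
print(f"needed {needed} MB, free {available} MB, enough: {needed <= available}")
```

With the default values from this section, one guest plus the vSwitch needs 3072 MB, so the 2GB default allocation would not cover a test with a guest.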

1.9. Tuning Considerations¶

With the large amount of tuning guides available online on how to properly tune a DUT, it becomes difficult to achieve consistent numbers for DPDK testing. VSPerf recommends a simple approach that has been tested by different companies to achieve proper CPU isolation.

The idea behind CPU isolation when running DPDK based tests is to interrupt a PMD process as rarely as possible. A utility that achieves proper CPU isolation with very little effort and customization is now available on most Linux systems. The tool is called tuned-adm and is most likely installed by default on the Linux DUT.

VSPerf recommends the latest tuned-adm package, which can be downloaded from the following location:

http://www.tuned-project.org/2017/04/27/tuned-2-8-0-released/

Follow the instructions to install the latest tuned-adm onto your system. For current RHEL customers you should already have the most current version. You just need to install the cpu-partitioning profile.

yum install -y tuned-profiles-cpu-partitioning.noarch

Proper CPU isolation starts with knowing which NUMA node your NIC is attached to. You can identify this by checking the output of the following command:

cat /sys/class/net/<NIC NAME>/device/numa_node

You can then use utilities such as lscpu or cpu_layout.py, which is located in the src dpdk area of VSPerf. These tools show the CPU layout, i.e. which cores/hyperthreads are located on the same NUMA node.

Determine which CPUs/hyperthreads will be used for PMD threads and for the VCPUs of VNFs. Then edit /etc/tuned/cpu-partitioning-variables.conf and add these CPUs to the isolated_cores variable in a form such as x-y, x,y,z or x-y,z. Then apply the profile.

tuned-adm profile cpu-partitioning

After applying the profile, reboot your system.

After rebooting the DUT, you can verify the profile is active by running:

tuned-adm active

Now you should have proper CPU isolation active and can achieve consistent results with DPDK based tests.
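To double-check which CPUs a given isolated_cores value covers, the list formats mentioned above (x-y, x,y,z and x-y,z) can be expanded with a few lines of Python. This is only a sketch; expand_cpu_list is a hypothetical helper and is not part of VSPerf or tuned.

```python
def expand_cpu_list(spec):
    """Expand a CPU list such as '2-5,8' into a sorted list of CPU ids."""
    cpus = set()
    for part in spec.split(','):
        part = part.strip()
        if not part:
            continue
        if '-' in part:
            # A range "x-y" covers both endpoints.
            first, last = (int(x) for x in part.split('-', 1))
            if first > last:
                raise ValueError(f"descending range: {part!r}")
            cpus.update(range(first, last + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)

print(expand_cpu_list('2-5,8'))
```

The expanded list can then be compared with the cores reported for the NIC's NUMA node by lscpu or cpu_layout.py.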

One last consideration applies when running TestPMD inside a VNF: it may make sense to enable enough cores to run each PMD thread on a separate core/HT. To achieve this, set the number of VCPUs to 3 and enable enough nb-cores in the TestPMD config. You can modify these options in the conf files.

GUEST_SMP = ['3']

GUEST_TESTPMD_PARAMS = ['-l 0,1,2 -n 4 --socket-mem 512 -- '

'--burst=64 -i --txqflags=0xf00 '

'--disable-hw-vlan --nb-cores=2']

Verify that the VCPU core locations are on the same NUMA node as the cores in your PMD mask for OVS-DPDK.
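The relation between GUEST_SMP and the TestPMD options above can be sketched in Python. The helper build_testpmd_params is hypothetical; it assumes that one VCPU is left for the TestPMD main thread, so nb-cores is the VCPU count minus one, and it reuses the remaining option values from the example unchanged.

```python
def build_testpmd_params(vcpus, mem_channels=4, socket_mem=512):
    """Build a TestPMD parameter string for a guest with the given VCPU count."""
    if vcpus < 2:
        raise ValueError("need one VCPU for the main thread and one for forwarding")
    core_list = ','.join(str(core) for core in range(vcpus))
    nb_cores = vcpus - 1   # assumption: the first core is not used for forwarding
    return (f'-l {core_list} -n {mem_channels} --socket-mem {socket_mem} -- '
            f'--burst=64 -i --txqflags=0xf00 '
            f'--disable-hw-vlan --nb-cores={nb_cores}')

GUEST_SMP = ['3']
GUEST_TESTPMD_PARAMS = [build_testpmd_params(int(GUEST_SMP[0]))]
print(GUEST_TESTPMD_PARAMS[0])
```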

2. Upgrading vswitchperf¶

2.1. Generic¶

If VSPERF was cloned from the git repository, it is easy to upgrade it to the newest stable version or to the development version.

A list of stable releases can be obtained with git. The local git repository must be updated first.

NOTE: Git commands must be executed from the directory where the VSPERF repository was cloned, e.g. vswitchperf.

Update the local git repository:

$ git pull

List of stable releases:

$ git tag

brahmaputra.1.0

colorado.1.0

colorado.2.0

colorado.3.0

danube.1.0

euphrates.1.0

Select the stable release to use. For example, to select danube.1.0:

$ git checkout danube.1.0

The development version of VSPERF can be selected with:

$ git checkout master

2.2. Colorado to Danube upgrade notes¶

2.2.1. Obsoleted features¶

Support for the vHost Cuse interface was removed in the Danube release. It is therefore no longer possible to select QemuDpdkVhostCuse as a VNF; QemuDpdkVhostUser should be used instead. Please check your configuration files and testcase definitions for any occurrence of:

VNF = "QemuDpdkVhostCuse"

or

"VNF" : "QemuDpdkVhostCuse"

If QemuDpdkVhostCuse is found, it must be changed to QemuDpdkVhostUser.

NOTE: If execution of VSPERF is automated by scripts (e.g. for CI purposes), these scripts must be checked and updated too. Any occurrence of:

./vsperf --vnf QemuDpdkVhostCuse

must be updated to:

./vsperf --vnf QemuDpdkVhostUser
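The renaming described above can be automated for configuration files, testcase definitions and CI scripts. The following Python sketch works on a text string; migrate_vnf_name is a hypothetical helper, and applying it to real files (reading, writing, backups) is left out on purpose.

```python
import re

OBSOLETE_VNF = 'QemuDpdkVhostCuse'
REPLACEMENT_VNF = 'QemuDpdkVhostUser'

def migrate_vnf_name(text):
    """Return (new_text, count) with every whole-word obsolete VNF name replaced."""
    pattern = r'\b' + re.escape(OBSOLETE_VNF) + r'\b'
    return re.subn(pattern, REPLACEMENT_VNF, text)

sample = 'VNF = "QemuDpdkVhostCuse"\n./vsperf --vnf QemuDpdkVhostCuse\n'
updated, count = migrate_vnf_name(sample)
print(f"{count} occurrence(s) replaced")
print(updated)
```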

2.2.2. Configuration¶

Several configuration changes were introduced in the Danube release. The most important changes are discussed below.

2.2.2.1. Paths to DPDK, OVS and QEMU¶

VSPERF uses external tools for proper testcase execution, so it is important to configure the paths to these tools correctly. If the tools were installed by the installation scripts and are located in the ./src directory inside the VSPERF home, no changes are needed. If, on the other hand, path settings were changed by a custom configuration file, the configuration must be updated accordingly. Please check your configuration files for the following configuration options:

OVS_DIR

OVS_DIR_VANILLA

OVS_DIR_USER

OVS_DIR_CUSE

RTE_SDK_USER

RTE_SDK_CUSE

QEMU_DIR

QEMU_DIR_USER

QEMU_DIR_CUSE

QEMU_BIN

If any of these options is defined, the configuration must be updated.

All paths to the tools are now stored inside the PATHS dictionary. Please refer to the paths-documentation and update your configuration where necessary.
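A custom configuration file can be checked for the obsolete options listed above with a short script. This is a minimal sketch: find_obsolete_options and the sample text are hypothetical, and the check only looks for simple top-level assignments.

```python
import re

OBSOLETE_PATH_OPTIONS = (
    'OVS_DIR', 'OVS_DIR_VANILLA', 'OVS_DIR_USER', 'OVS_DIR_CUSE',
    'RTE_SDK_USER', 'RTE_SDK_CUSE',
    'QEMU_DIR', 'QEMU_DIR_USER', 'QEMU_DIR_CUSE', 'QEMU_BIN',
)

def find_obsolete_options(conf_text):
    """Return the sorted names of obsolete options assigned in conf_text."""
    found = set()
    for line in conf_text.splitlines():
        # Match "NAME = value" at the start of a line; comment lines do not match.
        match = re.match(r'\s*([A-Z][A-Z0-9_]*)\s*=', line)
        if match and match.group(1) in OBSOLETE_PATH_OPTIONS:
            found.add(match.group(1))
    return sorted(found)

# Hypothetical content of a custom configuration file.
sample_conf = """\
# custom settings
OVS_DIR_USER = '/opt/ovs'
QEMU_BIN = '/usr/bin/qemu-system-x86_64'
TRAFFICGEN = 'Dummy'
"""
print(find_obsolete_options(sample_conf))
```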

2.2.2.2. Configuration change via CLI¶

In previous releases it was possible to modify selected configuration options (mostly VNF specific) via the command line interface, i.e. with the --test-params argument. This concept was generalized in the Danube release, and it is now possible to modify any configuration parameter via the CLI or via the Parameters section of the testcase definition. The old configuration options are obsolete; a configuration parameter name must now be specified in the same form as it is defined in the configuration file, i.e. in uppercase. Please refer to the overriding-parameters-documentation for additional details.

NOTE: If execution of VSPERF is automated by scripts (e.g. for CI purposes), these scripts must be checked and updated too. Any occurrence of

guest_loopback

vanilla_tgen_port1_ip

vanilla_tgen_port1_mac

vanilla_tgen_port2_ip

vanilla_tgen_port2_mac

tunnel_type

must be changed to the uppercase form, and the data type of the entered values must match the data type of the original values from the configuration files.

If guest_nic1_name or guest_nic2_name is changed, the new GUEST_NICS dictionary must be modified accordingly. Please see configuration-of-guest-options and conf/04_vnf.conf for additional details.
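The renaming of the obsolete lowercase options can be sketched in Python for a --test-params string. The helper uppercase_test_params is hypothetical and only changes the names; the values are left untouched, so their data types still have to be reviewed by hand against the configuration files.

```python
OBSOLETE_LOWERCASE = {
    'guest_loopback', 'vanilla_tgen_port1_ip', 'vanilla_tgen_port1_mac',
    'vanilla_tgen_port2_ip', 'vanilla_tgen_port2_mac', 'tunnel_type',
}

def uppercase_test_params(params):
    """Upper-case obsolete lowercase names in a 'name=value;name=value' string."""
    converted = []
    for item in params.split(';'):
        if not item.strip():
            continue
        name, sep, value = item.partition('=')
        if name.strip() in OBSOLETE_LOWERCASE:
            name = name.strip().upper()
        converted.append(f'{name}{sep}{value}')
    return ';'.join(converted)

print(uppercase_test_params('tunnel_type=vxlan;TRAFFICGEN_DURATION=10'))
```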

2.2.2.3. Traffic configuration via CLI¶

In previous releases it was possible to modify selected attributes of generated traffic via the command line interface. This concept was enhanced in the Danube release, and it is now possible to modify all traffic specific options via the CLI or via the TRAFFIC dictionary in the configuration file. A detailed description is available in the configuration-of-traffic-dictionary section of the documentation.

Please check your automated scripts for VSPERF execution for the following CLI parameters and update them according to the documentation:

bidir

duration

frame_rate

iload

lossrate

multistream

pkt_sizes

pre-installed_flows

rfc2544_tests

stream_type

traffic_type

3. ‘vsperf’ Traffic Gen Guide¶

3.1. Overview¶

VSPERF supports the following traffic generators:

- Dummy (DEFAULT)

- Ixia

- Spirent TestCenter

- Xena Networks

- MoonGen

- Trex

To see the list of traffic generators from the CLI:

$ ./vsperf --list-trafficgens

This guide provides the details of how to install and configure the various traffic generators.

3.2. Background Information¶

The default traffic configuration can be found in conf/03_traffic.conf, and is configured as follows:

TRAFFIC = {

'traffic_type' : 'rfc2544_throughput',

'frame_rate' : 100,

'bidir' : 'True', # will be passed as string in title format to tgen

'multistream' : 0,

'stream_type' : 'L4',

'pre_installed_flows' : 'No', # used by vswitch implementation

'flow_type' : 'port', # used by vswitch implementation

'l2': {

'framesize': 64,

'srcmac': '00:00:00:00:00:00',

'dstmac': '00:00:00:00:00:00',

},

'l3': {

'enabled': True,

'proto': 'udp',

'srcip': '1.1.1.1',

'dstip': '90.90.90.90',

},

'l4': {

'enabled': True,

'srcport': 3000,

'dstport': 3001,

},

'vlan': {

'enabled': False,

'id': 0,

'priority': 0,

'cfi': 0,

},

}

The framesize parameter can be overridden from the configuration files by adding the following to your custom configuration file 10_custom.conf:

TRAFFICGEN_PKT_SIZES = (64, 128,)

or from the command line:

$ ./vsperf --test-params "TRAFFICGEN_PKT_SIZES=(x,y)" $TESTNAME

You can also modify the traffic transmission duration and the number of tests run by the traffic generator by extending the example commandline above to:

$ ./vsperf --test-params "TRAFFICGEN_PKT_SIZES=(x,y);TRAFFICGEN_DURATION=10;TRAFFICGEN_RFC2544_TESTS=1" $TESTNAME
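When several parameters are overridden at once, it can be convenient to build the --test-params value programmatically. The sketch below joins a dictionary into the semicolon-separated form used above; format_test_params is a hypothetical helper, and it assumes that values are written the way they would appear in a configuration file.

```python
def format_test_params(params):
    """Join a mapping into the 'NAME=value;NAME=value' form used by --test-params."""
    return ';'.join(f'{name}={value!r}' for name, value in params.items())

test_params = format_test_params({
    'TRAFFICGEN_PKT_SIZES': (64, 128),
    'TRAFFICGEN_DURATION': 10,
    'TRAFFICGEN_RFC2544_TESTS': 1,
})
# The whole value is passed as one quoted shell argument.
print(f'./vsperf --test-params "{test_params}" $TESTNAME')
```

Keeping all parameters in a single quoted argument matters, because the shell would otherwise pass the remainder as a separate argument.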

3.3. Dummy¶

The Dummy traffic generator can be used to test a VSPERF installation or to demonstrate VSPERF functionality on the DUT without a connection to a real traffic generator.

You can also use the Dummy generator if your external traffic generator is not supported by VSPERF. In that case you can use VSPERF to set up your test scenario and then transmit the traffic yourself. After the transmission is completed, you specify values for all collected metrics and VSPERF uses them to generate the final reports.

3.3.1. Setup¶

To select the Dummy generator please add the following to your custom configuration file 10_custom.conf.

TRAFFICGEN = 'Dummy'

or run vsperf with the --trafficgen argument:

$ ./vsperf --trafficgen Dummy $TESTNAME

Where $TESTNAME is the name of the vsperf test you would like to run. This will set up the vSwitch and the VNF (if one is part of your test), print the traffic configuration and prompt you to transmit traffic when the setup is complete.

Please send 'continuous' traffic with the following stream config:

30mS, 90mpps, multistream False

and the following flow config:

{

"flow_type": "port",

"l3": {

"enabled": True,

"srcip": "1.1.1.1",

"proto": "udp",

"dstip": "90.90.90.90"

},

"traffic_type": "rfc2544_continuous",

"multistream": 0,

"bidir": "True",

"vlan": {

"cfi": 0,

"priority": 0,

"id": 0,

"enabled": False

},

"l4": {

"enabled": True,

"srcport": 3000,

"dstport": 3001,

},

"frame_rate": 90,

"l2": {

"dstmac": "00:00:00:00:00:00",

"srcmac": "00:00:00:00:00:00",

"framesize": 64

}

}

What was the result for 'frames tx'?

When your traffic generator has completed traffic transmission and provided the results, please enter them at the VSPERF prompt. VSPERF will try to verify the input:

Is '$input_value' correct?

Please answer with y OR n.

VSPERF will ask you to provide a value for each of the collected metrics. The list of metrics can be found at traffic-type-metrics. Finally, vsperf will print out the results for your test and generate the appropriate logs and report files.

3.3.2. Metrics collected for supported traffic types¶

The metrics collected by VSPERF for each of the supported traffic types are listed below.

RFC2544 Throughput and Continuous:

- frames tx

- frames rx

- min latency

- max latency

- avg latency

- frameloss

RFC2544 Back2back:

- b2b frames

- b2b frame loss %

3.3.3. Dummy result pre-configuration¶

With the Dummy traffic generator it is possible to pre-configure the test results. This is useful for creating demo testcases, which do not require a real traffic generator. Such a testcase can be run by any user and will still generate all reports and result files.

Result values can be specified in the TRAFFICGEN_DUMMY_RESULTS dictionary, where each of the collected metrics must be properly defined. Please check the list of traffic-type-metrics.

The dictionary with dummy results can be passed via the CLI argument --test-params or specified in the Parameters section of the testcase definition.

Example of testcase execution with dummy results defined by CLI argument:

$ ./vsperf back2back --trafficgen Dummy --test-params \

"TRAFFICGEN_DUMMY_RESULTS={'b2b frames':'3000','b2b frame loss %':'0.0'}"

Example of testcase definition with pre-configured dummy results:

{

"Name": "back2back",

"Traffic Type": "rfc2544_back2back",

"Deployment": "p2p",

"biDirectional": "True",

"Description": "LTD.Throughput.RFC2544.BackToBackFrames",

"Parameters" : {

'TRAFFICGEN_DUMMY_RESULTS' : {'b2b frames':'3000','b2b frame loss %':'0.0'}

},

},

NOTE: Pre-configured results for the Dummy traffic generator are used only if the Dummy traffic generator is selected. Otherwise the option TRAFFICGEN_DUMMY_RESULTS is ignored.
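Since every collected metric must be defined, it can help to check a TRAFFICGEN_DUMMY_RESULTS dictionary before running a testcase. The following sketch uses the metric names listed in this guide; missing_metrics is a hypothetical helper and not part of VSPERF.

```python
THROUGHPUT_METRICS = ('frames tx', 'frames rx', 'min latency',
                      'max latency', 'avg latency', 'frameloss')

# Metrics per traffic type, as listed in this guide.
REQUIRED_METRICS = {
    'rfc2544_throughput': THROUGHPUT_METRICS,
    'rfc2544_continuous': THROUGHPUT_METRICS,
    'rfc2544_back2back': ('b2b frames', 'b2b frame loss %'),
}

def missing_metrics(traffic_type, results):
    """Return the metrics of traffic_type that are not defined in results."""
    return [metric for metric in REQUIRED_METRICS[traffic_type]
            if metric not in results]

TRAFFICGEN_DUMMY_RESULTS = {'b2b frames': '3000', 'b2b frame loss %': '0.0'}
print(missing_metrics('rfc2544_back2back', TRAFFICGEN_DUMMY_RESULTS))
print(missing_metrics('rfc2544_throughput', TRAFFICGEN_DUMMY_RESULTS))
```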

3.4. Ixia¶

VSPERF can use both IxNetwork and IxExplorer TCL servers to control an Ixia chassis. However, the IxNetwork TCL server is the preferred option. The following sections describe the installation and configuration of the IxNetwork components used by VSPERF.

3.4.1. Installation¶

On the system under test you need to install IxNetworkTclClient$(VER_NUM)Linux.bin.tgz.

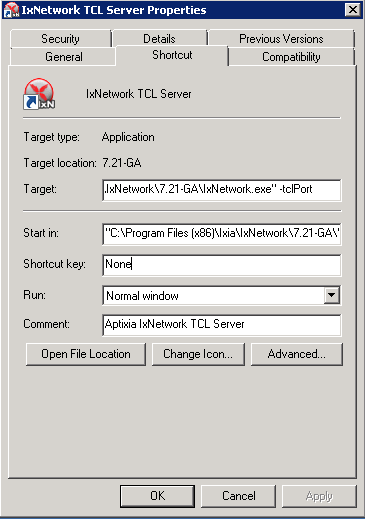

On the IXIA client software system you need to install the IxNetwork TCL server. After its installation, configure it as follows:

- Find the IxNetwork TCL server app (start -> All Programs -> IXIA -> IxNetwork -> IxNetwork_$(VER_NUM) -> IxNetwork TCL Server).

- Right click on IxNetwork TCL Server and select properties. Under the shortcut tab, in the Target dialogue box, make sure there is the argument “-tclport xxxx”, where xxxx is your port number (take note of this port number as you will need it for the 10_custom.conf file).

- Hit Ok and start the TCL server application.

3.4.2. VSPERF configuration¶

There are several configuration options specific to the IxNetwork traffic generator from IXIA. It is essential to set them correctly before VSPERF is executed for the first time.

A detailed description of the options follows:

- TRAFFICGEN_IXNET_MACHINE - IP address of server, where IxNetwork TCL Server is running

- TRAFFICGEN_IXNET_PORT - PORT, where IxNetwork TCL Server is accepting connections from TCL clients

- TRAFFICGEN_IXNET_USER - username, which will be used during communication with IxNetwork TCL Server and IXIA chassis

- TRAFFICGEN_IXIA_HOST - IP address of IXIA traffic generator chassis

- TRAFFICGEN_IXIA_CARD - identification of card with dedicated ports at IXIA chassis

- TRAFFICGEN_IXIA_PORT1 - identification of the first dedicated port at TRAFFICGEN_IXIA_CARD at IXIA chassis; VSPERF uses two separated ports for traffic generation. In case of unidirectional traffic, it is essential to correctly connect 1st IXIA port to the 1st NIC at DUT, i.e. to the first PCI handle from WHITELIST_NICS list. Otherwise traffic may not be able to pass through the vSwitch. NOTE: In case that TRAFFICGEN_IXIA_PORT1 and TRAFFICGEN_IXIA_PORT2 are set to the same value, then VSPERF will assume, that there is only one port connection between IXIA and DUT. In this case it must be ensured, that chosen IXIA port is physically connected to the first NIC from WHITELIST_NICS list.

- TRAFFICGEN_IXIA_PORT2 - identification of the second dedicated port at TRAFFICGEN_IXIA_CARD at IXIA chassis; VSPERF uses two separated ports for traffic generation. In case of unidirectional traffic, it is essential to correctly connect 2nd IXIA port to the 2nd NIC at DUT, i.e. to the second PCI handle from WHITELIST_NICS list. Otherwise traffic may not be able to pass through the vSwitch. NOTE: In case that TRAFFICGEN_IXIA_PORT1 and TRAFFICGEN_IXIA_PORT2 are set to the same value, then VSPERF will assume, that there is only one port connection between IXIA and DUT. In this case it must be ensured, that chosen IXIA port is physically connected to the first NIC from WHITELIST_NICS list.

- TRAFFICGEN_IXNET_LIB_PATH - path to the DUT specific installation of IxNetwork TCL API

- TRAFFICGEN_IXNET_TCL_SCRIPT - name of the TCL script, which VSPERF will use for communication with IXIA TCL server

- TRAFFICGEN_IXNET_TESTER_RESULT_DIR - folder accessible from IxNetwork TCL server, where test results are stored, e.g. c:/ixia_results; see test-results-share

- TRAFFICGEN_IXNET_DUT_RESULT_DIR - directory accessible from the DUT, where test results from IxNetwork TCL server are stored, e.g. /mnt/ixia_results; see test-results-share
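A fragment of a custom configuration file using these options might look like the sketch below. All values are placeholders (documentation IP addresses, an arbitrary TCL port, a made-up user name), the string types are an assumption, and single_port_setup is a hypothetical helper that only illustrates the rule about identical port values described above.

```python
# Placeholder values only; replace them with the details of your own lab.
TRAFFICGEN_IXNET_MACHINE = '192.0.2.10'   # host running the IxNetwork TCL Server
TRAFFICGEN_IXNET_PORT = '9127'            # the port given via -tclport
TRAFFICGEN_IXNET_USER = 'vsperf_user'
TRAFFICGEN_IXIA_HOST = '192.0.2.20'       # IXIA chassis
TRAFFICGEN_IXIA_CARD = '1'
TRAFFICGEN_IXIA_PORT1 = '1'
TRAFFICGEN_IXIA_PORT2 = '2'
TRAFFICGEN_IXNET_TESTER_RESULT_DIR = 'c:/ixia_results'
TRAFFICGEN_IXNET_DUT_RESULT_DIR = '/mnt/ixia_results'

def single_port_setup(port1, port2):
    """True when both options name the same IXIA port (one cable to the DUT)."""
    return port1 == port2

print(single_port_setup(TRAFFICGEN_IXIA_PORT1, TRAFFICGEN_IXIA_PORT2))
```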

3.5. Spirent Setup¶

Spirent installation files and instructions are available on the Spirent support website at:

Select a version of Spirent TestCenter software to use. The examples below use Spirent TestCenter v4.57; substitute the appropriate version in place of ‘v4.57’.

3.5.1. On the CentOS 7 System¶

Download and install the following:

Spirent TestCenter Application, v4.57 for 64-bit Linux Client

3.5.2. Spirent Virtual Deployment Service (VDS)¶

Spirent VDS is required for both TestCenter hardware and virtual chassis in the vsperf environment. For installation, select the version that matches the Spirent TestCenter Application version. For v4.57, the matching VDS version is 1.0.55. Download either the ova (VMware) or qcow2 (QEMU) image and create a VM with it. Initialize the VM according to Spirent installation instructions.

3.5.3. Using Spirent TestCenter Virtual (STCv)¶

STCv is available in both ova (VMware) and qcow2 (QEMU) formats. For VMware, download:

Spirent TestCenter Virtual Machine for VMware, v4.57 for Hypervisor - VMware ESX.ESXi

Virtual test port performance is affected by the hypervisor configuration. For best practice results in deploying STCv, the following is suggested:

- Create a single VM with two test ports rather than two VMs with one port each

- Set STCv in DPDK mode

- Give STCv 2*n + 1 cores, where n = the number of ports. For vsperf, cores = 5.

- Turning off hyperthreading and pinning these cores will improve performance

- Give STCv 2 GB of RAM
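The core count suggested in the list above follows directly from the 2*n + 1 rule. A minimal Python sketch, with stcv_cores as a hypothetical helper name:

```python
def stcv_cores(num_ports):
    """Cores suggested for STCv: 2*n + 1, where n is the number of test ports."""
    if num_ports < 1:
        raise ValueError("at least one test port is required")
    return 2 * num_ports + 1

# vsperf uses two test ports, which gives the 5 cores mentioned above.
print(stcv_cores(2))
```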

To get the highest performance and accuracy, Spirent TestCenter hardware is recommended. vsperf can run with either type of test port.

3.5.4. Using STC REST Client¶

The stcrestclient package provides the stchttp.py ReST API wrapper module. This allows simple function calls, nearly identical to those provided by StcPython.py, to be used to access TestCenter server sessions via the STC ReST API. Basic ReST functionality is provided by the resthttp module, and may be used for writing ReST clients independent of STC.

- Project page: <https://github.com/Spirent/py-stcrestclient>

- Package download: <http://pypi.python.org/pypi/stcrestclient>

To use the REST interface, follow the instructions on the project page to install the package. Once it is installed, the scripts with the ‘rest’ keyword in their names can be used. For example, testcenter-rfc2544-rest.py can be used to run RFC 2544 tests using the REST interface.

3.5.5. Configuration:¶

- The Labserver and license server addresses. These parameters apply to all tests and are mandatory for all tests.

TRAFFICGEN_STC_LAB_SERVER_ADDR = " "

TRAFFICGEN_STC_LICENSE_SERVER_ADDR = " "

TRAFFICGEN_STC_PYTHON2_PATH = " "

TRAFFICGEN_STC_TESTCENTER_PATH = " "

TRAFFICGEN_STC_TEST_SESSION_NAME = " "

TRAFFICGEN_STC_CSV_RESULTS_FILE_PREFIX = " "

- For RFC2544 tests, the following parameters are mandatory:

TRAFFICGEN_STC_EAST_CHASSIS_ADDR = " "

TRAFFICGEN_STC_EAST_SLOT_NUM = " "

TRAFFICGEN_STC_EAST_PORT_NUM = " "

TRAFFICGEN_STC_EAST_INTF_ADDR = " "

TRAFFICGEN_STC_EAST_INTF_GATEWAY_ADDR = " "

TRAFFICGEN_STC_WEST_CHASSIS_ADDR = ""

TRAFFICGEN_STC_WEST_SLOT_NUM = " "

TRAFFICGEN_STC_WEST_PORT_NUM = " "

TRAFFICGEN_STC_WEST_INTF_ADDR = " "

TRAFFICGEN_STC_WEST_INTF_GATEWAY_ADDR = " "

TRAFFICGEN_STC_RFC2544_TPUT_TEST_FILE_NAME

- RFC2889 tests: Currently, the forwarding, address-caching, and address-learning-rate tests of RFC2889 are supported. The testcenter-rfc2889-rest.py script implements the rfc2889 tests. The configuration for RFC2889 involves test-case definition, and parameter definition, as described below. New results-constants, as shown below, are added to support these tests.

Example of testcase definition for RFC2889 tests:

{

"Name": "phy2phy_forwarding",

"Deployment": "p2p",

"Description": "LTD.Forwarding.RFC2889.MaxForwardingRate",

"Parameters" : {

"TRAFFIC" : {

"traffic_type" : "rfc2889_forwarding",

},

},

}

For RFC2889 tests, specifying the locations for the monitoring ports is mandatory. Necessary parameters are:

TRAFFICGEN_STC_RFC2889_TEST_FILE_NAME

TRAFFICGEN_STC_EAST_CHASSIS_ADDR = " "

TRAFFICGEN_STC_EAST_SLOT_NUM = " "

TRAFFICGEN_STC_EAST_PORT_NUM = " "

TRAFFICGEN_STC_EAST_INTF_ADDR = " "

TRAFFICGEN_STC_EAST_INTF_GATEWAY_ADDR = " "

TRAFFICGEN_STC_WEST_CHASSIS_ADDR = ""

TRAFFICGEN_STC_WEST_SLOT_NUM = " "

TRAFFICGEN_STC_WEST_PORT_NUM = " "

TRAFFICGEN_STC_WEST_INTF_ADDR = " "

TRAFFICGEN_STC_WEST_INTF_GATEWAY_ADDR = " "

TRAFFICGEN_STC_VERBOSE = "True"

TRAFFICGEN_STC_RFC2889_LOCATIONS="//10.1.1.1/1/1,//10.1.1.1/2/2"
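The locations value is a comma-separated list of port locations. The sketch below splits it into its parts, assuming that each entry has the form //chassis/slot/port; that reading of the format and the helper parse_locations are assumptions, not something defined by VSPERF.

```python
def parse_locations(locations):
    """Split '//chassis/slot/port,...' into (chassis, slot, port) tuples."""
    parsed = []
    for location in locations.split(','):
        # Drop the leading '//' and split the remaining three fields.
        chassis, slot, port = location.strip().lstrip('/').split('/')
        parsed.append((chassis, int(slot), int(port)))
    return parsed

TRAFFICGEN_STC_RFC2889_LOCATIONS = "//10.1.1.1/1/1,//10.1.1.1/2/2"
print(parse_locations(TRAFFICGEN_STC_RFC2889_LOCATIONS))
```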

Other configuration options are:

TRAFFICGEN_STC_RFC2889_MIN_LR = 1488

TRAFFICGEN_STC_RFC2889_MAX_LR = 14880

TRAFFICGEN_STC_RFC2889_MIN_ADDRS = 1000

TRAFFICGEN_STC_RFC2889_MAX_ADDRS = 65536

TRAFFICGEN_STC_RFC2889_AC_LR = 1000

The first two values are for the address learning test, whereas the other three values are for the address caching capacity test. LR stands for learning rate and AC for address caching. The maximum value for addresses is 16777216, whereas the maximum for LR is 4294967295.
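The stated maxima can be checked before a test run. In the following sketch, check_rfc2889_settings is a hypothetical helper; the lower bound of 1 and the requirement that each minimum does not exceed its maximum are assumptions added for illustration.

```python
MAX_ADDRS = 16777216     # maximum number of addresses stated above
MAX_LR = 4294967295      # maximum learning rate stated above

def check_rfc2889_settings(settings):
    """Return a list of problems found in the learning/caching settings."""
    problems = []
    for name, value in settings.items():
        limit = MAX_ADDRS if name.endswith('_ADDRS') else MAX_LR
        if not 1 <= value <= limit:
            problems.append(f'{name}={value} is outside 1..{limit}')
    for kind in ('LR', 'ADDRS'):
        low = settings[f'TRAFFICGEN_STC_RFC2889_MIN_{kind}']
        high = settings[f'TRAFFICGEN_STC_RFC2889_MAX_{kind}']
        if low > high:
            problems.append(f'MIN_{kind} ({low}) exceeds MAX_{kind} ({high})')
    return problems

defaults = {
    'TRAFFICGEN_STC_RFC2889_MIN_LR': 1488,
    'TRAFFICGEN_STC_RFC2889_MAX_LR': 14880,
    'TRAFFICGEN_STC_RFC2889_MIN_ADDRS': 1000,
    'TRAFFICGEN_STC_RFC2889_MAX_ADDRS': 65536,
    'TRAFFICGEN_STC_RFC2889_AC_LR': 1000,
}
print(check_rfc2889_settings(defaults))
```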

Results for RFC2889 tests: the forwarding test outputs the following values:

TX_RATE_FPS : "Transmission Rate in Frames/sec"

THROUGHPUT_RX_FPS: "Received Throughput Frames/sec"

TX_RATE_MBPS : " Transmission rate in MBPS"

THROUGHPUT_RX_MBPS: "Received Throughput in MBPS"

TX_RATE_PERCENT: "Transmission Rate in Percentage"

FRAME_LOSS_PERCENT: "Frame loss in Percentage"

FORWARDING_RATE_FPS: " Maximum Forwarding Rate in FPS"

Whereas, the address caching test outputs following values,

CACHING_CAPACITY_ADDRS = 'Number of address it can cache'

ADDR_LEARNED_PERCENT = 'Percentage of address successfully learned'

The address learning test outputs a single value:

OPTIMAL_LEARNING_RATE_FPS = 'Optimal learning rate in fps'

Note that ‘FORWARDING_RATE_FPS’, ‘CACHING_CAPACITY_ADDRS’, ‘ADDR_LEARNED_PERCENT’ and ‘OPTIMAL_LEARNING_RATE_FPS’ are the new result-constants added to support RFC2889 tests.

3.6. Xena Networks¶

3.6.1. Installation¶

The Xena Networks traffic generator requires specific files and packages to be installed. It is assumed that the user has access to the Xena2544.exe file, which must be placed in the VSPerf installation location under the tools/pkt_gen/xena folder. Contact Xena Networks for the latest version of this file. Users with a valid support contract can also obtain the file from www.xenanetworks/downloads.

Note: VSPerf has been fully tested with version v2.43 of Xena2544.exe.

To execute the Xena2544.exe file under Linux distributions, the mono-complete package must be installed. To install this package, follow the instructions below. Further information can be obtained from http://www.mono-project.com/docs/getting-started/install/linux/

rpm --import "http://keyserver.ubuntu.com/pks/lookup?op=get&search=0x3FA7E0328081BFF6A14DA29AA6A19B38D3D831EF"

yum-config-manager --add-repo http://download.mono-project.com/repo/centos/

yum -y install mono-complete

To prevent GPG errors during future yum package installations, the mono-project repo should be disabled once mono-complete is installed.

yum-config-manager --disable download.mono-project.com_repo_centos_

3.6.2. Configuration¶

Connection information for your Xena Chassis must be supplied inside the 10_custom.conf or 03_custom.conf file. The following parameters must be set to allow for proper connections to the chassis.

TRAFFICGEN_XENA_IP = ''

TRAFFICGEN_XENA_PORT1 = ''

TRAFFICGEN_XENA_PORT2 = ''

TRAFFICGEN_XENA_USER = ''

TRAFFICGEN_XENA_PASSWORD = ''

TRAFFICGEN_XENA_MODULE1 = ''

TRAFFICGEN_XENA_MODULE2 = ''
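Since every parameter above ships as an empty string, a quick check for values that are still unset can save a failed test run. The sketch below is a hypothetical helper, not part of VSPERF; it assumes only that an unset parameter is an empty or whitespace-only string, and the sample values use a documentation-only IP address.

```python
REQUIRED_XENA_SETTINGS = (
    "TRAFFICGEN_XENA_IP",
    "TRAFFICGEN_XENA_PORT1",
    "TRAFFICGEN_XENA_PORT2",
    "TRAFFICGEN_XENA_USER",
    "TRAFFICGEN_XENA_PASSWORD",
    "TRAFFICGEN_XENA_MODULE1",
    "TRAFFICGEN_XENA_MODULE2",
)


def missing_settings(settings: dict, required=REQUIRED_XENA_SETTINGS) -> list:
    """Return the names of required settings that are absent or blank."""
    return [
        name for name in required
        if not str(settings.get(name, "")).strip()
    ]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    sample = {
        "TRAFFICGEN_XENA_IP": "192.0.2.20",
        "TRAFFICGEN_XENA_PORT1": "0",
        "TRAFFICGEN_XENA_PORT2": "1",
        "TRAFFICGEN_XENA_USER": "",
    }
    print(missing_settings(sample))
```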

3.6.3. RFC2544 Throughput Testing¶

Xena traffic generator testing for RFC2544 throughput can be modified for different behaviors if needed. The defaults for the following options are optimized for best results.

TRAFFICGEN_XENA_2544_TPUT_INIT_VALUE = '10.0'

TRAFFICGEN_XENA_2544_TPUT_MIN_VALUE = '0.1'

TRAFFICGEN_XENA_2544_TPUT_MAX_VALUE = '100.0'

TRAFFICGEN_XENA_2544_TPUT_VALUE_RESOLUTION = '0.5'

TRAFFICGEN_XENA_2544_TPUT_USEPASS_THRESHHOLD = 'false'

TRAFFICGEN_XENA_2544_TPUT_PASS_THRESHHOLD = '0.0'

Each value modifies the behavior of RFC2544 throughput testing. Refer to your Xena documentation to understand how changing these values affects the behavior.

Xena RFC2544 testing inside VSPerf also includes a final verification option. This option allows for a faster binary search followed by a longer final verification of the binary search result. Both this feature and the length of the final verification in seconds can be set in the configuration files.

TRAFFICGEN_XENA_RFC2544_VERIFY = False

TRAFFICGEN_XENA_RFC2544_VERIFY_DURATION = 120

If the final verification does not pass with the specified loss rate, the binary search continues from its previous point. If the smart search option is enabled, the search continues at the current pass rate minus the minimum value, divided by 2. The maximum is set to the last pass rate minus the configured threshold value.

For example, if the settings are as follows:

TRAFFICGEN_XENA_RFC2544_BINARY_RESTART_SMART_SEARCH = True

TRAFFICGEN_XENA_2544_TPUT_MIN_VALUE = '0.5'

TRAFFICGEN_XENA_2544_TPUT_VALUE_RESOLUTION = '0.5'

and the verification attempt was 64.5, smart search would take (64.5 - 0.5) / 2. This would continue the search at 32 but still have a maximum possible value of 64.

If smart search is not enabled, the search simply resumes at the last pass rate minus the threshold value.
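The restart arithmetic described above can be sketched as follows. This is an illustration only, not the VSPerf implementation: the function name restart_window is hypothetical, and it assumes that the "threshold value" is the configured value resolution (0.5 in the example) and that the subtraction is applied before the division, which is what yields the quoted restart point of 32.

```python
from typing import Tuple


def restart_window(last_rate: float, min_value: float, threshold: float,
                   smart_search: bool) -> Tuple[float, float]:
    """Return (next_rate, max_rate) after a failed final verification.

    last_rate    -- rate (percent) that failed the final verification
    min_value    -- TRAFFICGEN_XENA_2544_TPUT_MIN_VALUE as a float
    threshold    -- assumed to be TRAFFICGEN_XENA_2544_TPUT_VALUE_RESOLUTION
    smart_search -- TRAFFICGEN_XENA_RFC2544_BINARY_RESTART_SMART_SEARCH
    """
    # The new upper bound is the last pass rate minus the threshold.
    max_rate = last_rate - threshold
    if smart_search:
        # Smart search restarts at (last rate - minimum) / 2.
        next_rate = (last_rate - min_value) / 2
    else:
        # Otherwise the search resumes just below the failed rate.
        next_rate = max_rate
    return next_rate, max_rate


if __name__ == "__main__":
    print(restart_window(64.5, 0.5, 0.5, smart_search=True))   # (32.0, 64.0)
    print(restart_window(64.5, 0.5, 0.5, smart_search=False))  # (64.0, 64.0)
```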

3.6.4. Continuous Traffic Testing¶

By default, Xena continuous traffic performs a 3 second learning preemption to allow the DUT to receive learning packets before a continuous test is performed. If a custom test case requires this learning to be disabled, you can disable the option or change the learning duration with the following settings.

TRAFFICGEN_XENA_CONT_PORT_LEARNING_ENABLED = False

TRAFFICGEN_XENA_CONT_PORT_LEARNING_DURATION = 3

3.7. MoonGen¶

3.7.1. Installation¶

MoonGen architecture overview and general installation instructions can be found here:

https://github.com/emmericp/MoonGen

- Note: Today, MoonGen with VSPERF only supports 10Gbps line speeds.

For VSPERF use, MoonGen should be cloned from here (as opposed to the previously mentioned GitHub repository):

git clone https://github.com/atheurer/lua-trafficgen

and use the master branch:

git checkout master

VSPERF uses a particular Lua script with the MoonGen project:

trafficgen.lua

Follow MoonGen set up and execution instructions here:

https://github.com/atheurer/lua-trafficgen/blob/master/README.md

Note: passwordless ssh login must be set up between the server running MoonGen and the device under test (running the VSPERF test infrastructure). This is because VSPERF on one server uses ‘ssh’ to configure and run MoonGen on the other server.

One can set up this ssh access by doing the following on both servers:

ssh-keygen -b 2048 -t rsa

ssh-copy-id <other server>
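After the keys are copied, it can be useful to confirm that login works without a password prompt. The sketch below is one possible check and is not part of VSPERF: the function names are hypothetical, and it relies on the standard OpenSSH options BatchMode (fail instead of prompting) and ConnectTimeout. The user and address in the usage example are placeholders.

```python
import subprocess
from typing import List


def build_ssh_check(user: str, host: str) -> List[str]:
    """Build an ssh command that fails instead of asking for a password."""
    return [
        "ssh",
        "-o", "BatchMode=yes",      # never prompt for a password
        "-o", "ConnectTimeout=5",   # give up quickly if the host is down
        f"{user}@{host}",
        "true",                     # harmless remote command
    ]


def passwordless_ssh_works(user: str, host: str) -> bool:
    """Return True if key-based login to user@host succeeds."""
    result = subprocess.run(
        build_ssh_check(user, host),
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
        check=False,
    )
    return result.returncode == 0


if __name__ == "__main__":
    # Placeholder user and address for illustration only.
    print(passwordless_ssh_works("root", "192.0.2.10"))
```

The check should be run from each server towards the other, since the keys are exchanged in both directions.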

3.7.2. Configuration¶

Connection information for MoonGen must be supplied inside the 10_custom.conf or 03_custom.conf file. The following parameters must be set to allow for proper connections to the host with MoonGen.

TRAFFICGEN_MOONGEN_HOST_IP_ADDR = ""

TRAFFICGEN_MOONGEN_USER = ""

TRAFFICGEN_MOONGEN_BASE_DIR = ""

TRAFFICGEN_MOONGEN_PORTS = ""

TRAFFICGEN_MOONGEN_LINE_SPEED_GBPS = ""

3.8. Trex¶

3.8.1. Installation¶

Trex architecture overview and general installation instructions can be found here:

https://trex-tgn.cisco.com/trex/doc/trex_stateless.html

You can directly download from GitHub:

git clone https://github.com/cisco-system-traffic-generator/trex-core

and use the master branch:

git checkout master

Alternatively, the latest Trex release can be downloaded from here:

wget --no-cache http://trex-tgn.cisco.com/trex/release/latest

After download, the Trex repo has to be built:

cd trex-core/linux_dpdk

./b configure   # run only once

./b build

The next step is to create a minimum configuration file. It can be created by the script dpdk_setup_ports.py.

When run with the -i parameter, the script runs in interactive mode and creates the file /etc/trex_cfg.yaml.

cd trex-core/scripts

sudo ./dpdk_setup_ports.py -i

Alternatively, an example configuration file can be copied from the location below, but it must be updated manually:

cp trex-core/scripts/cfg/simple_cfg /etc/trex_cfg.yaml

For additional information about the configuration file, see the official documentation (chapter 3.1.2):

https://trex-tgn.cisco.com/trex/doc/trex_manual.html#_creating_minimum_configuration_file

After compilation and configuration, the Trex server can be run in stateless mode. This is necessary for a proper connection between the Trex server and VSPERF.

cd trex-core/scripts/

./t-rex-64 -i

For additional information about Trex stateless mode, see the Trex stateless documentation:

https://trex-tgn.cisco.com/trex/doc/trex_stateless.html

NOTE: Passwordless ssh login must be set up between the server running Trex and the device under test (running the VSPERF test infrastructure). This is because VSPERF on one server uses ‘ssh’ to configure and run Trex on the other server.

One can set up this ssh access by doing the following on both servers:

ssh-keygen -b 2048 -t rsa

ssh-copy-id <other server>

3.8.2. Configuration¶

Connection information for Trex must be supplied inside the custom configuration file. The following parameters must be set to allow for proper connections to the host with Trex. An example of this configuration is in conf/03_traffic.conf or conf/10_custom.conf.

TRAFFICGEN_TREX_HOST_IP_ADDR = ''

TRAFFICGEN_TREX_USER = ''

TRAFFICGEN_TREX_BASE_DIR = ''

The user specified by TRAFFICGEN_TREX_USER must have sudo permission and password-less access. TRAFFICGEN_TREX_BASE_DIR is the directory where the ‘t-rex-64’ file is stored.

It is possible to specify the accuracy of the RFC2544 Throughput measurement. The threshold below defines the maximal difference between the frame rates of successful (i.e. defined frameloss was reached) and unsuccessful (i.e. frameloss was exceeded) iterations.

Default value of this parameter is defined in conf/03_traffic.conf as follows:

TRAFFICGEN_TREX_RFC2544_TPUT_THRESHOLD = ''
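To illustrate how such a threshold bounds the accuracy of a throughput search, the sketch below runs a plain binary search that stops once the gap between the last passing and the last failing rate is no larger than the threshold. It is not the VSPERF or Trex implementation: search_throughput and loss_ok are hypothetical names, and expressing rates as a percentage of line rate is an assumption.

```python
from typing import Callable


def search_throughput(loss_ok: Callable[[float], bool], threshold: float,
                      low: float = 0.0, high: float = 100.0) -> float:
    """Binary search for the highest rate that still meets the loss target.

    loss_ok(rate) -- hypothetical callback that runs one iteration at
                     `rate` and returns True if frame loss was acceptable
    threshold     -- stop once the passing and failing rates differ by
                     no more than this amount
    """
    best = low
    while high - low > threshold:
        rate = (low + high) / 2
        if loss_ok(rate):
            best = rate   # successful iteration: search higher
            low = rate
        else:
            high = rate   # unsuccessful iteration: search lower
    return best


if __name__ == "__main__":
    # Simulated DUT that starts dropping frames above 42 % of line rate.
    result = search_throughput(lambda rate: rate <= 42.0, threshold=0.05)
    print(result)
```

A smaller threshold gives a more precise result at the cost of more iterations, because each iteration only halves the remaining search interval.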

3.8.3. SR-IOV and Multistream layer 2¶

By default, T-Rex only accepts packets on the receive side if the destination MAC matches the MAC address specified in /etc/trex_cfg.yaml on the server side. For SR-IOV, this creates challenges when modifying the MAC address in the traffic profile so that packets flow correctly through the specified VFs. To remove this limitation, enable promiscuous mode on T-Rex so that all packets are accepted regardless of the destination MAC.

The same limitation causes problems with multistream at layer 2, since the source MACs will be modified. Enable promiscuous mode when doing multistream layer 2 testing with T-Rex.

TRAFFICGEN_TREX_PROMISCUOUS=True