Calipso.io

Product Description and Value

Copyright (c) 2017 Koren Lev (Cisco Systems), Yaron Yogev (Cisco Systems) and others

All rights reserved. This program and the accompanying materials

are made available under the terms of the Apache License, Version 2.0

which accompanies this distribution, and is available at

http://www.apache.org/licenses/LICENSE-2.0

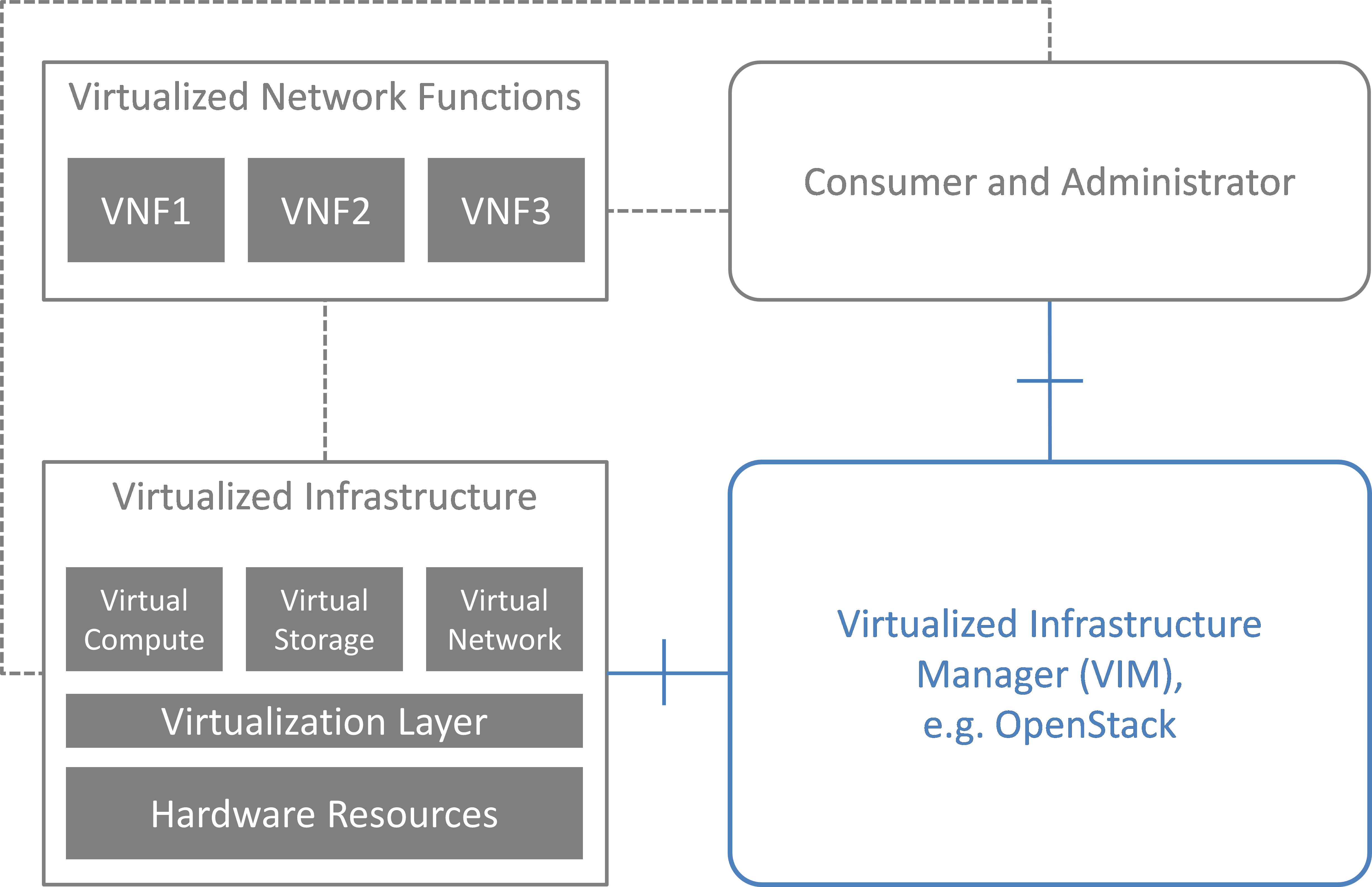

Virtual and Physical networking low level details and inter-connections,

dependencies in OpenStack, Docker or Kubernetes environments are

currently invisible and abstracted, by design, so data is not exposed

through any API or UI.

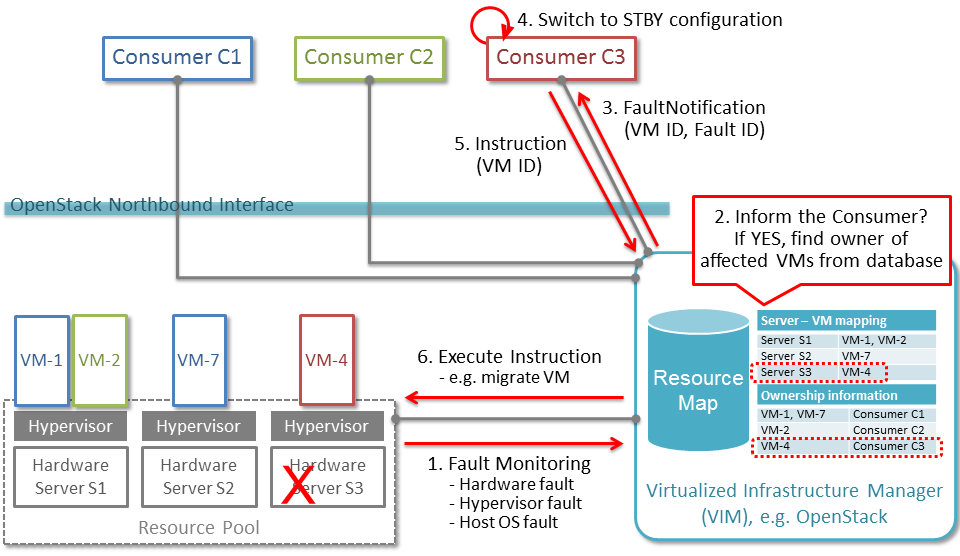

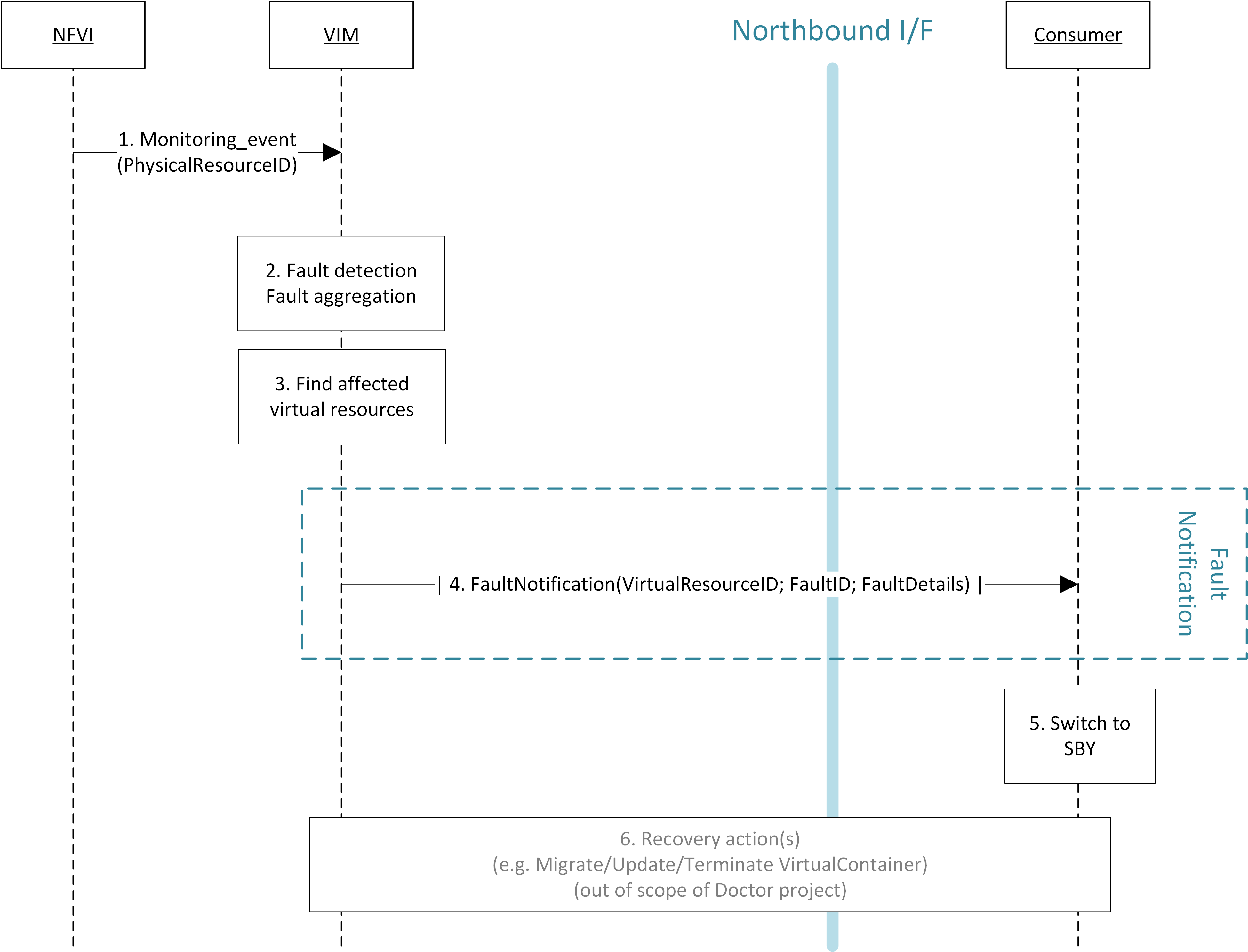

During virtual networking failures, troubleshooting takes substantial

amount of time due to manual discovery and analysis.

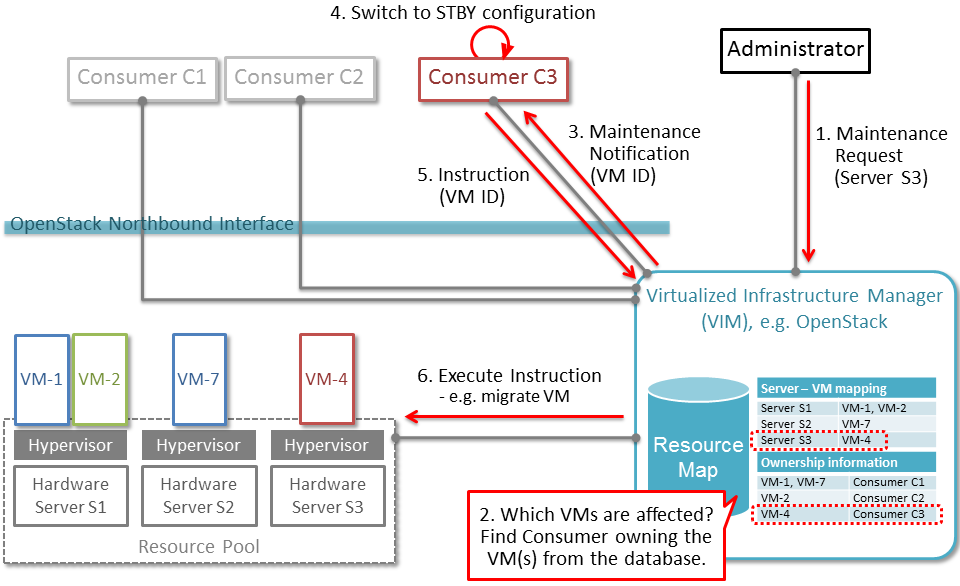

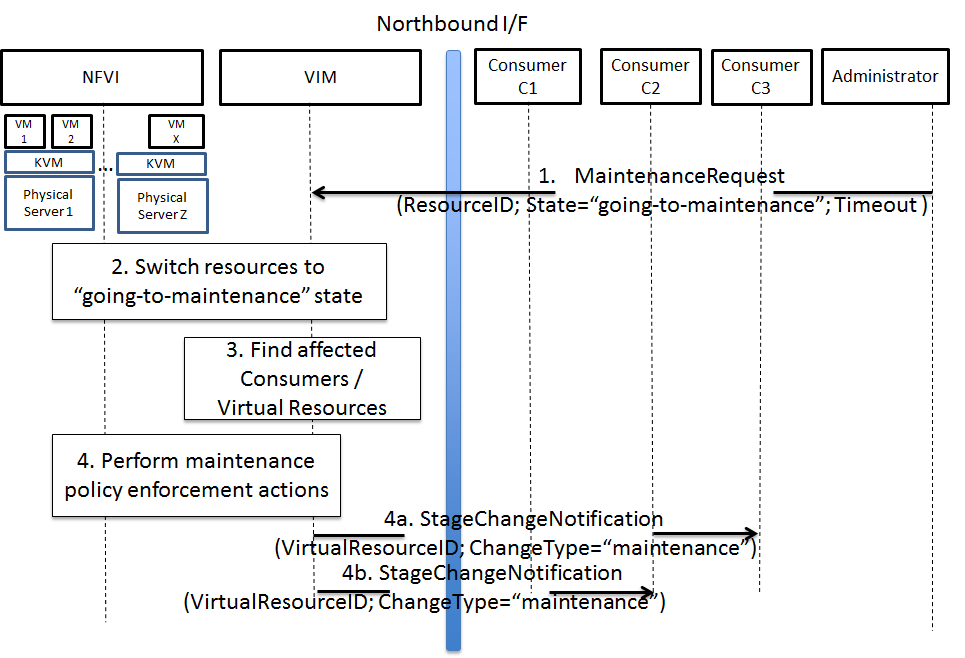

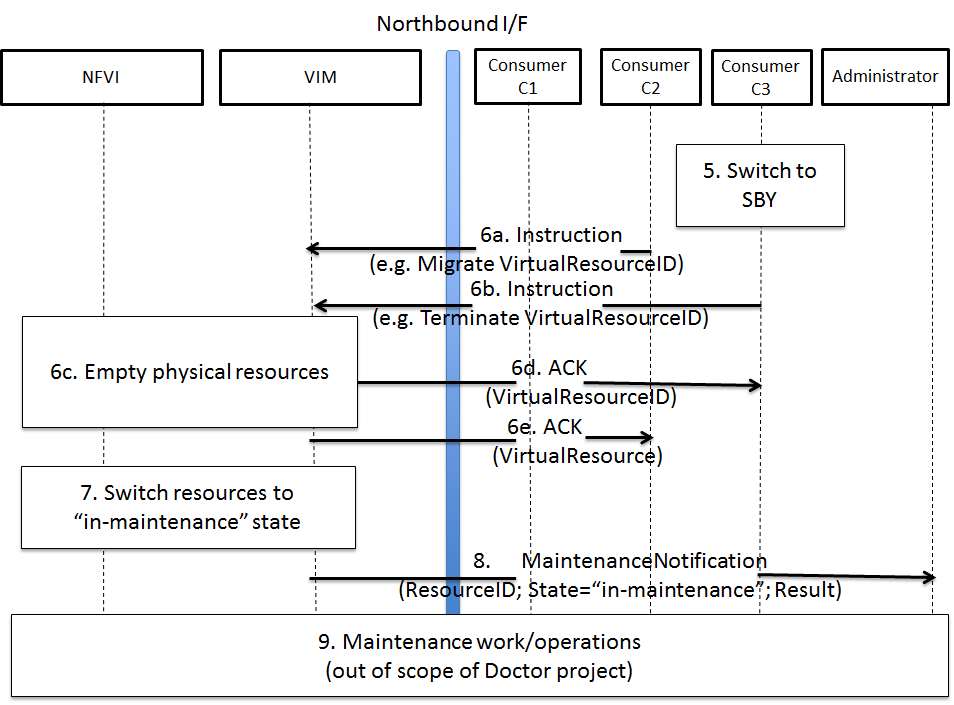

Maintenance work needs to happen in the data center, virtual and

physical networking (controlled or not) are impacted.

Most of the times, the impact of any of the above scenarios is

catastrophic.

Project “Calipso” tries to illuminate complex virtual networking with

real time operational state visibility for large and highly distributed

Virtual Infrastructure Management (VIM).

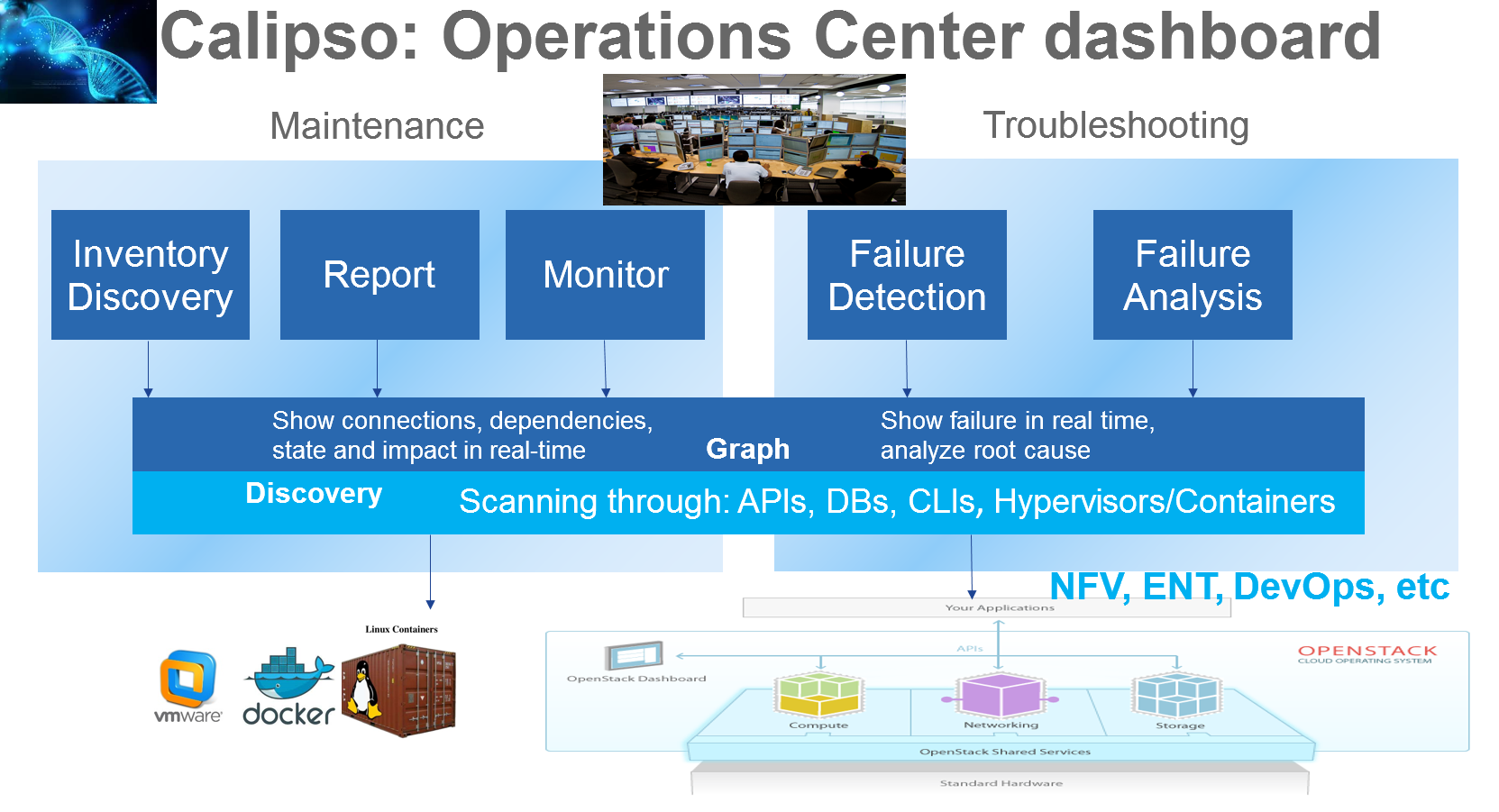

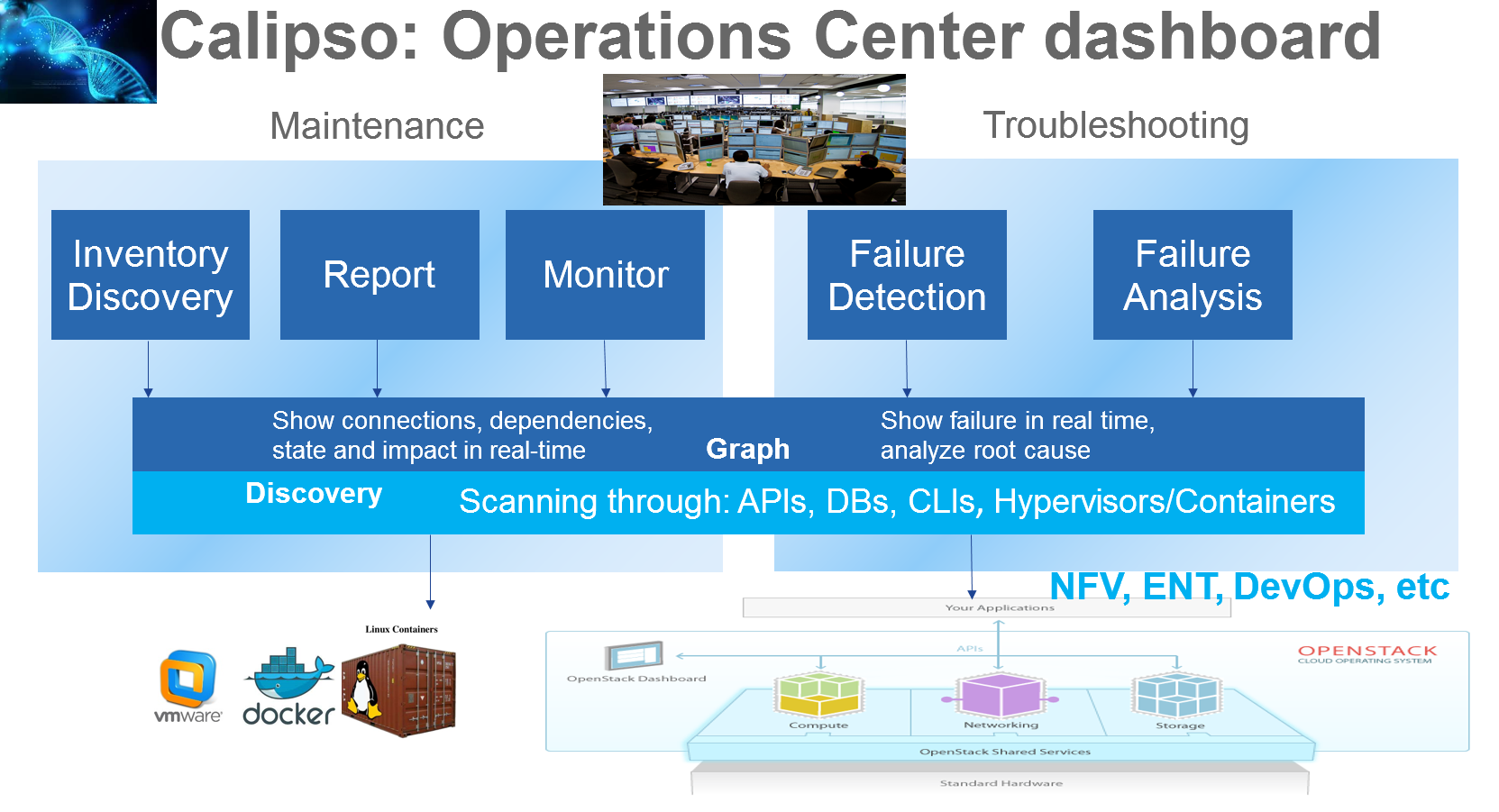

Customer needs during maintenance:

Visualize the networking topology, easily pinpointing the location

needed for maintenance and show the impact of maintenance work needed in

that location.

Administrator can plan ahead easily and report up his command chain the

detailed impact – Calipso substantially lower the admin time and

overhead needed for that reporting.

Customer need during troubleshooting:

Visualize and pinpointing the exact location of the failure in the

networking chain, using a suspected ‘focal point’ (ex: a VM that cannot

communicate).

Monitor the networking location and alerting till the problem is

resolved. Calipso also covers pinpointing the root cause.

Calipso is for multiple distributions/plugins and many virtual

environment variances:

We built a fully tested unified model to deal with many variances.

Supporting in initial release: VPP, OVS, LXB with all type drivers

possible, onto 5 different OS distributions, totaling to more than 60

variances (see Calipso-model guide).

New classes per object, link and topology can be programmed (see

development guide).

Detailed Monitoring:

Calipso provides visible insights using smart discovery and virtual

topological representation in graphs, with monitoring per object in the

graph inventory to reduce error vectors and troubleshooting, maintenance

cycles for VIM operators and administrators.

We believe that Stability is driven by accurate Visibility.

Table of Contents

Calipso.io Product Description and Value 1

1 About 4

1.1 Project Description 4

2 Main modules 5

2.1 High level module descriptions 5

2.2 High level functionality 5

3 Customer Requirements 6

3.1 Releases and Distributions 7

About

Project Description

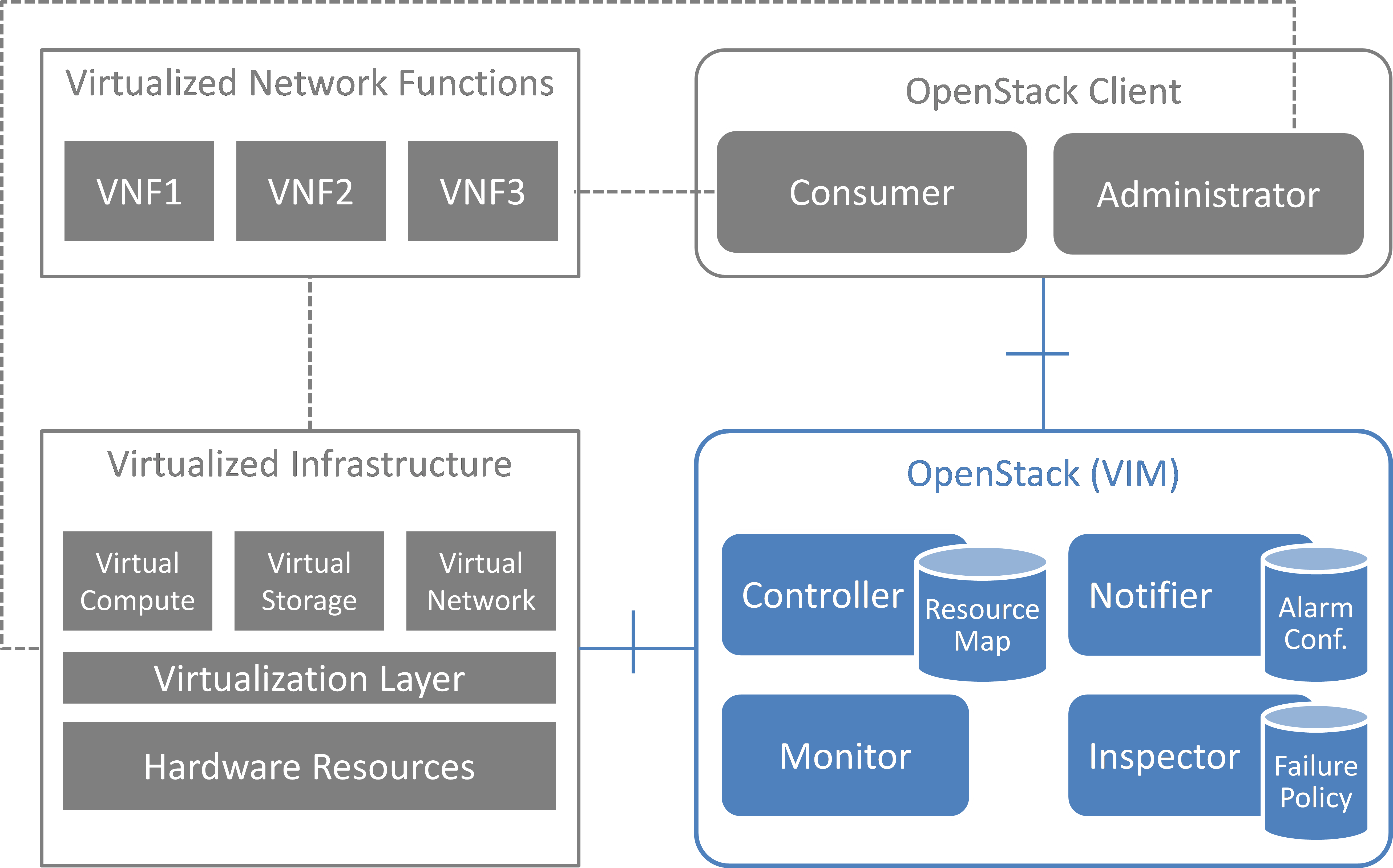

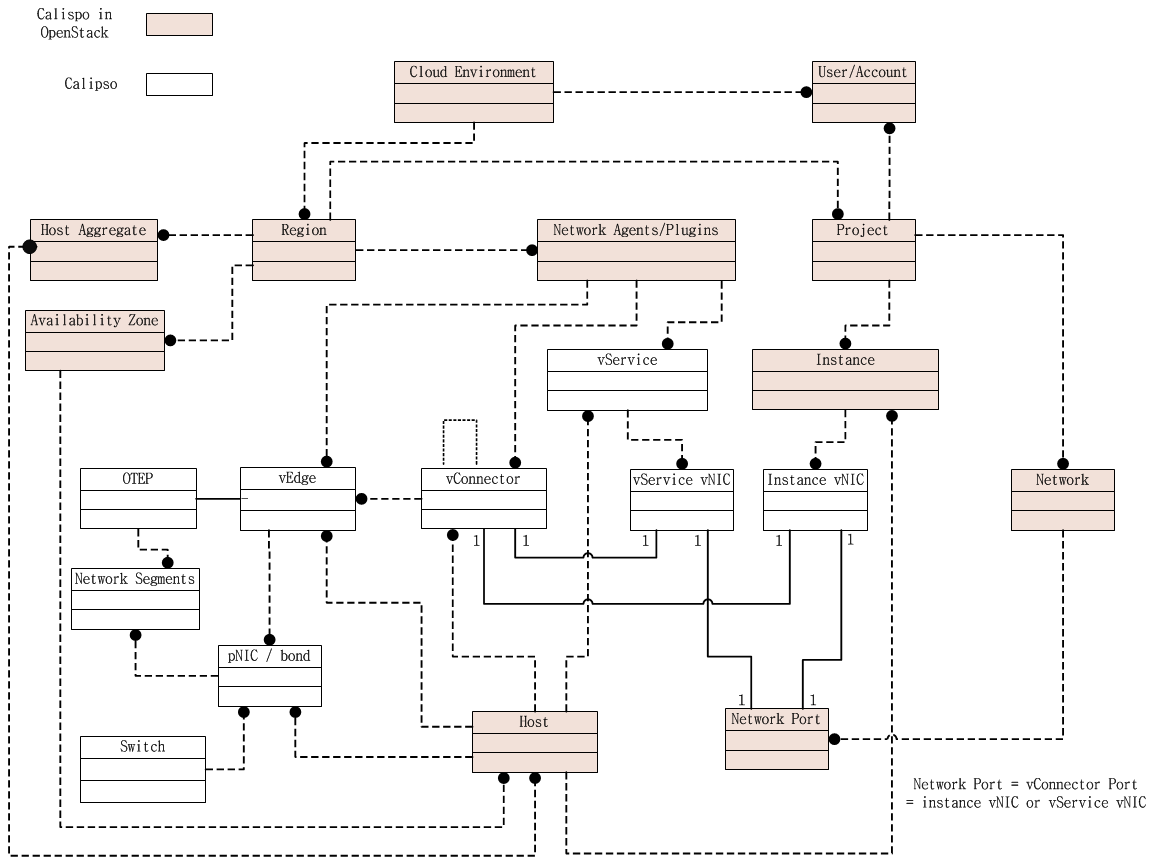

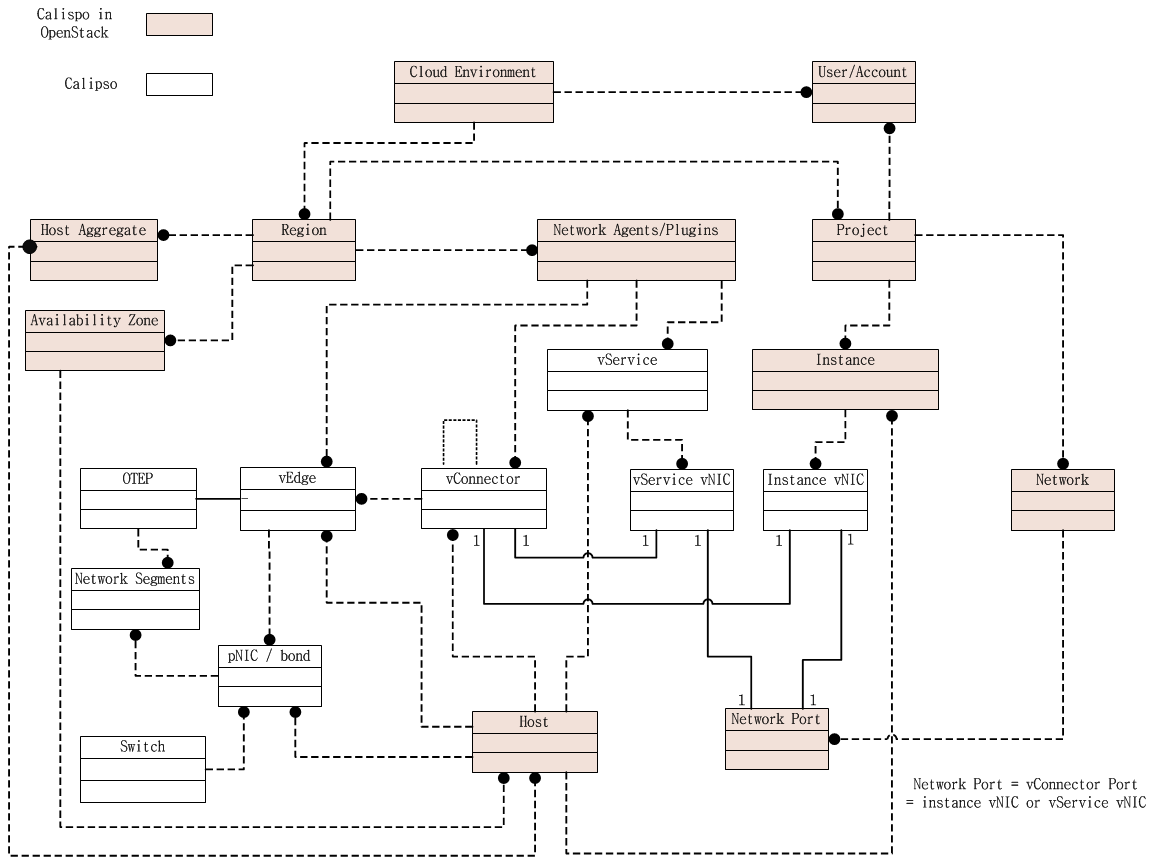

Calipso interfaces with the virtual infrastructure (like OpenStack)

through API, DB and CLI adapters, discovers the specific

distribution/plugins in-use, their versions and based on that collects

detailed data regarding running objects in the underlying workers and

processes running on the different hosts. Calipso analyzes the inventory

for inter-relationships and keeps them in a common and highly adaptive

data model.

Calipso then represents the inter-connections as real-time topologies

using automatic updates per changes in VIM, monitors the related objects

and analyzes the data for impact and root-cause analysis.

This is done with the objective to lower and potentially eliminate

complexity and lack of visibility from the VIM layers as well as to

offer a common and coherent representation of all physical and virtual

network components used under the VIM, all exposed through an API.

Calipso is developed to work with different OpenStack flavors, plugins

and installers.

Calipso is developed to save network admins discovery and

troubleshooting cycles of the networking aspects. Calipso helps estimate

the impact of several micro failure in the infrastructure to allow

appropriate resolutions.

Calipso focuses on scenarios, which requires VIM/OpenStack maintenance

and troubleshooting enhancements using operations dashboards i.e.

connectivity, topology and related stats – as well as their correlation.

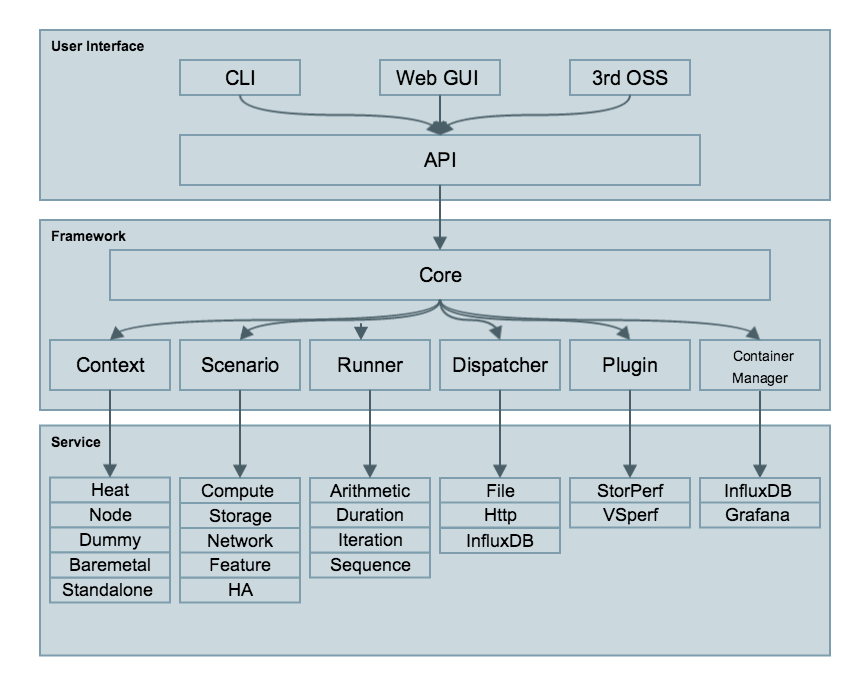

Main modules

High level module descriptions

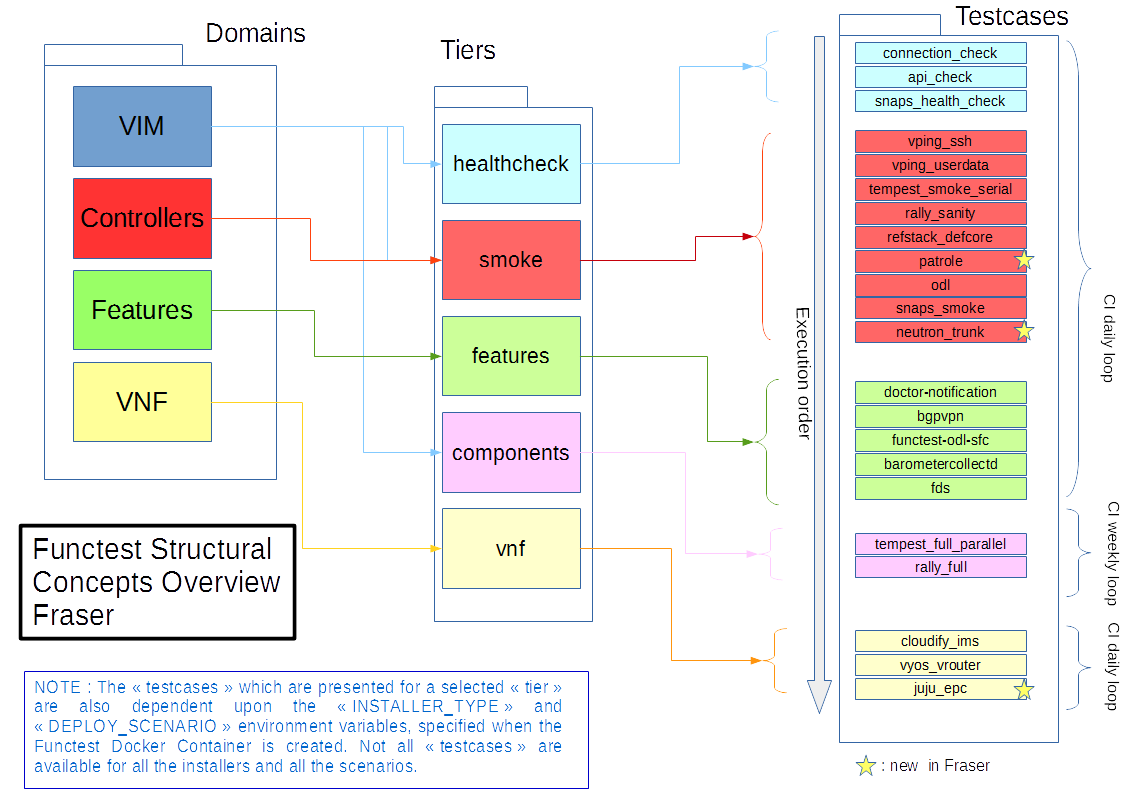

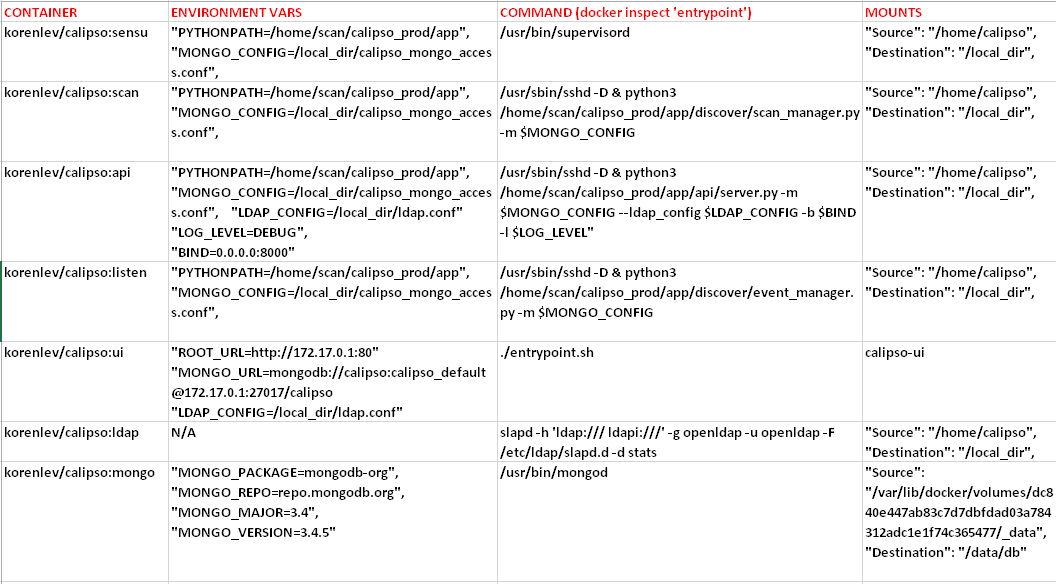

Calipso modules included with initial release:

- Scanning: detailed inventory discovery and inter-connection

analysis, smart/logical and automated learning from the VIM, based on

specific environment version/type etc.

- Listening: Attach to VIM message BUS and update changes in real

time.

- Visualization: represent the result of the discovery in browsable

graph topology and tree.

- Monitoring: Health and status for all discovered objects and

inter-connections: use the discovered data to configure monitoring

agents and gather monitoring results.

- Analysis: some traffic analysis, impact and root-cause analysis for

troubleshooting.

- API: allow integration with Calipso application’s inventory and

monitoring results.

- Database: Mongo based

- LDAP: pre-built integration for smooth attachment to corporate

directories.

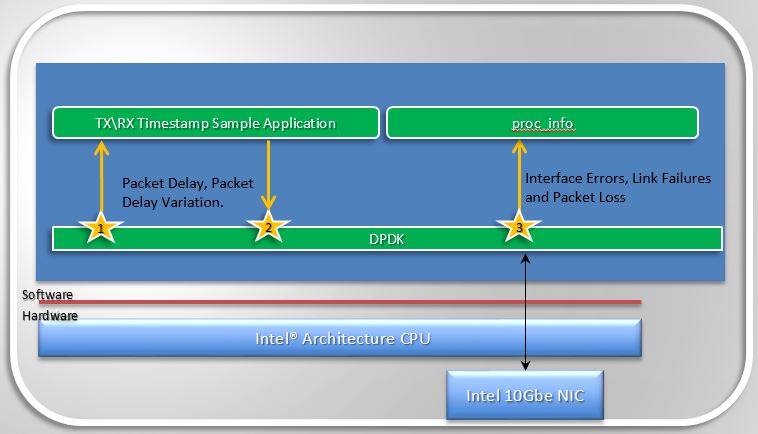

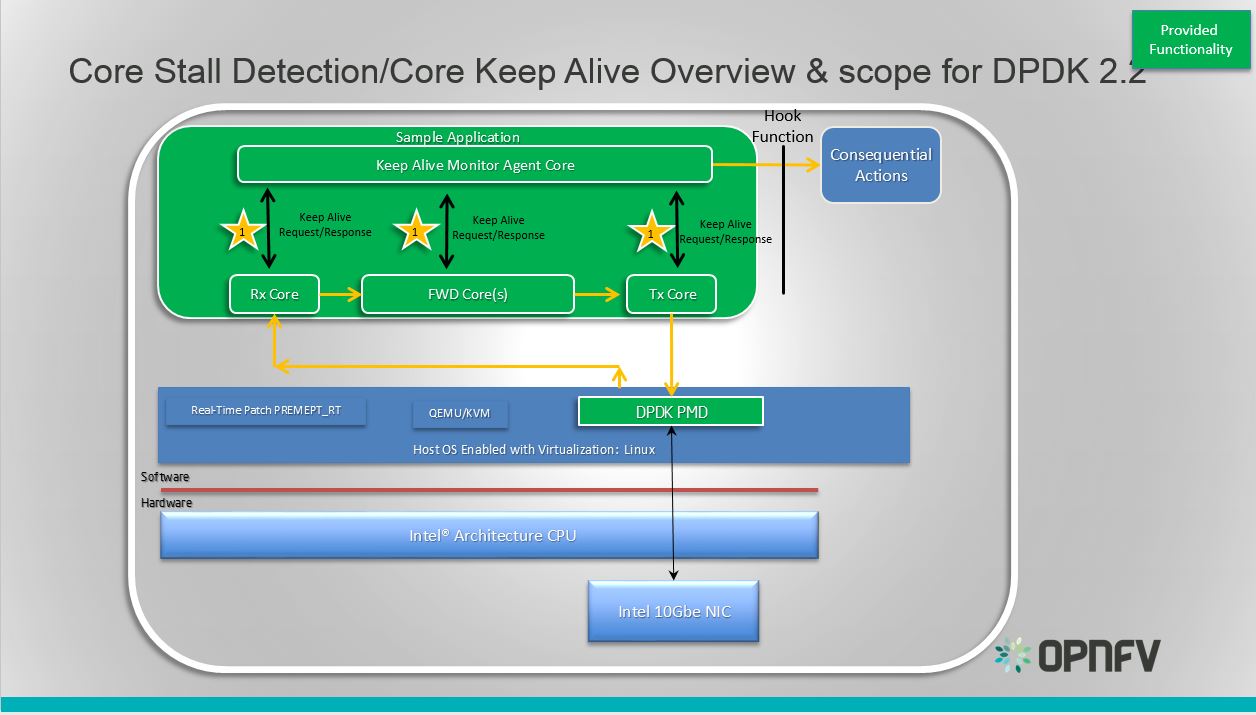

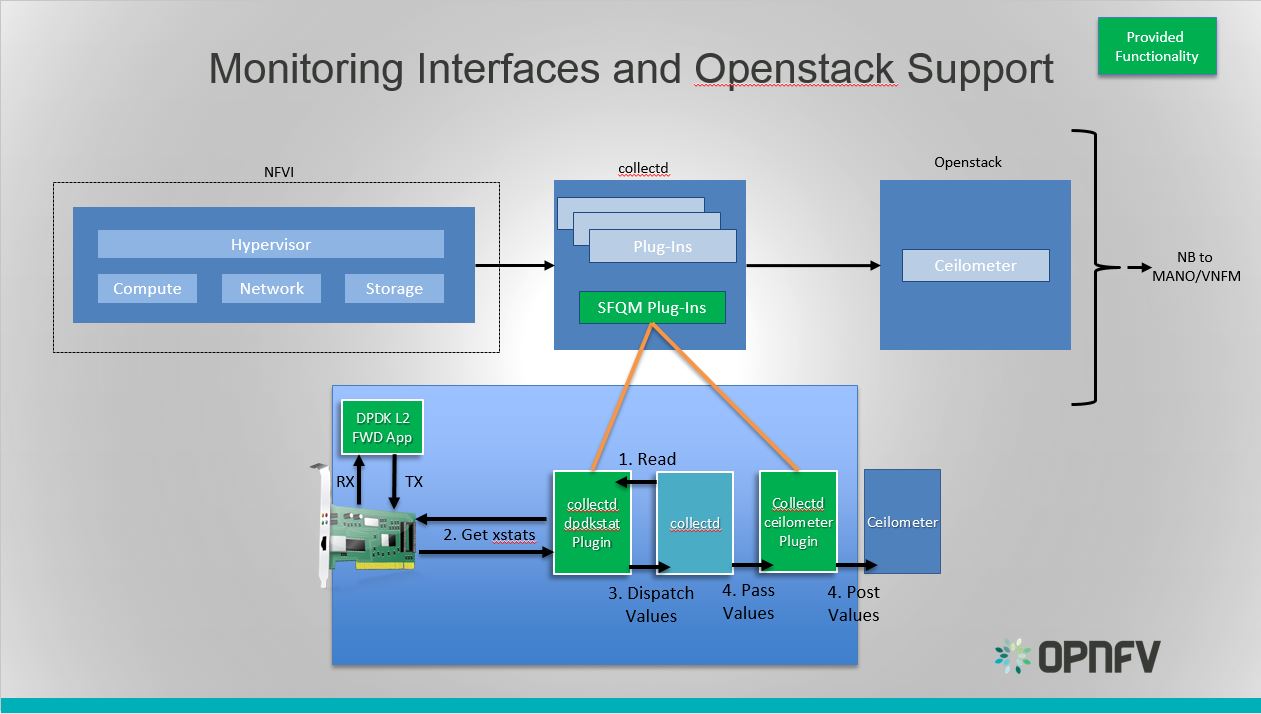

For Monitoring we are planning to utilize the work done by ‘Sensu’ and

‘Barometer’.

The project also develops required enhancements to individual components

in OpenStack like Neutron, Telemetry API and the different OpenStack

monitoring agents in order to provide a baseline for “Operations APIs”.

High level functionality

Scanning:

Calipso uses API, Database and Command-Line adapters for interfacing

with the Cloud infrastructure to logically discover every networking

component and it’s relationships with others, building a smart topology

and inventory.

Automated setup:

Calipso uses Sensu framework for Monitoring. It automatically deploys

and configures the necessary configuration files on all hosts, writes

customized checks and handlers to setup monitoring per inventory object.

Modeled analysis:

Calipso uses a unique logical model to help facilitate the topology

discovery, analysis of inter-connections and dependencies. Impact

Analysis is embedded, other types of analysis is possible through a

plugin framework.

Visualization:

Using its unique dependency model calipso visualize topological

inventory and monitoring results, in a highly customizable and modeled

UI framework

Monitoring:

After collecting the data, from processes and workers provisioned by the

cloud management systems, calipso dynamically checks for health and

availability, as a baseline for SLA monitoring.

Reporting:

Calipso allows networking administrators to operate, plan for

maintenance or troubleshooting and provides an easy to use hierarchical

representation of all the virtual networking components.

Customer Requirements

We identified an operational challenge: lack of visibility that leads to

limited stability.

The lack of operational tooling coupled with the reality of deployment

tools really needs to get solved to decrease the complexity as well as

assist not only deploying but also supporting OpenStack and other cloud

stacks.

Calispo integrates well with installers like Apex to offer enhanced day

2 operations.

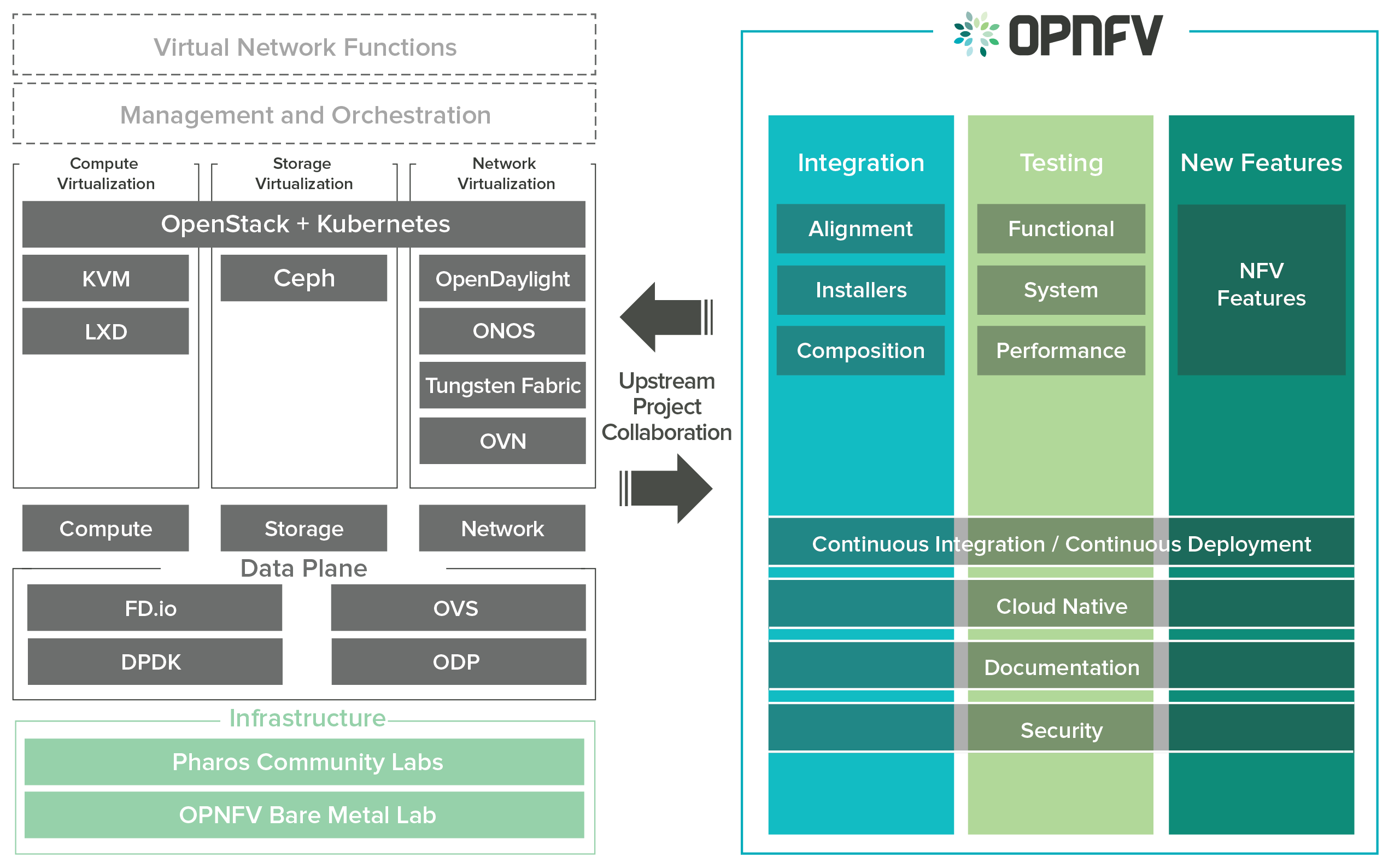

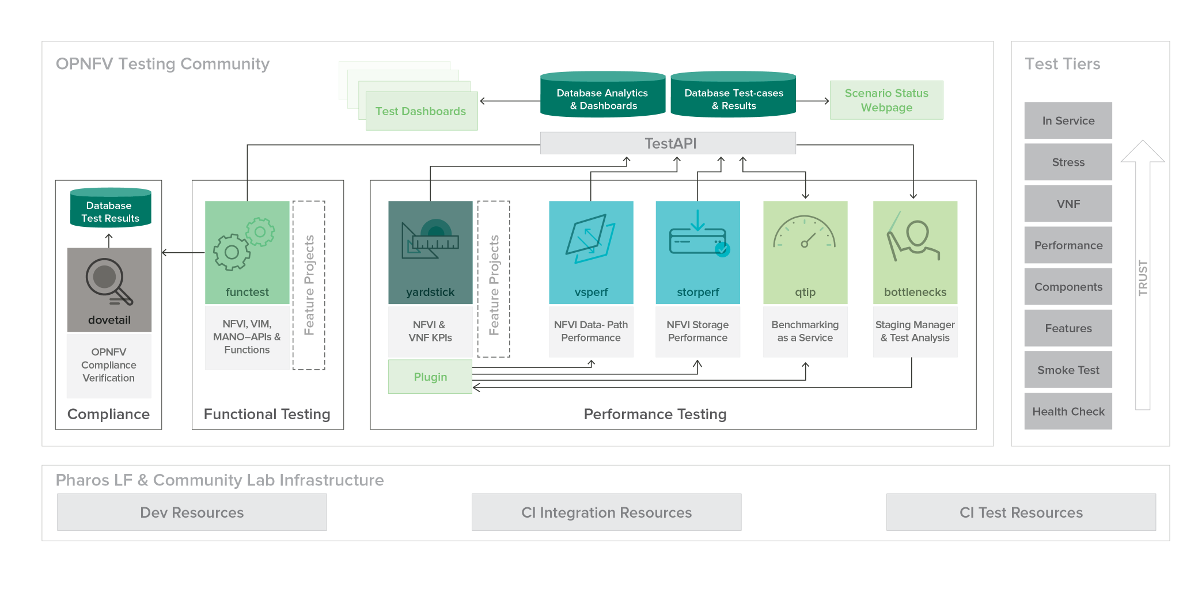

Releases and Distributions

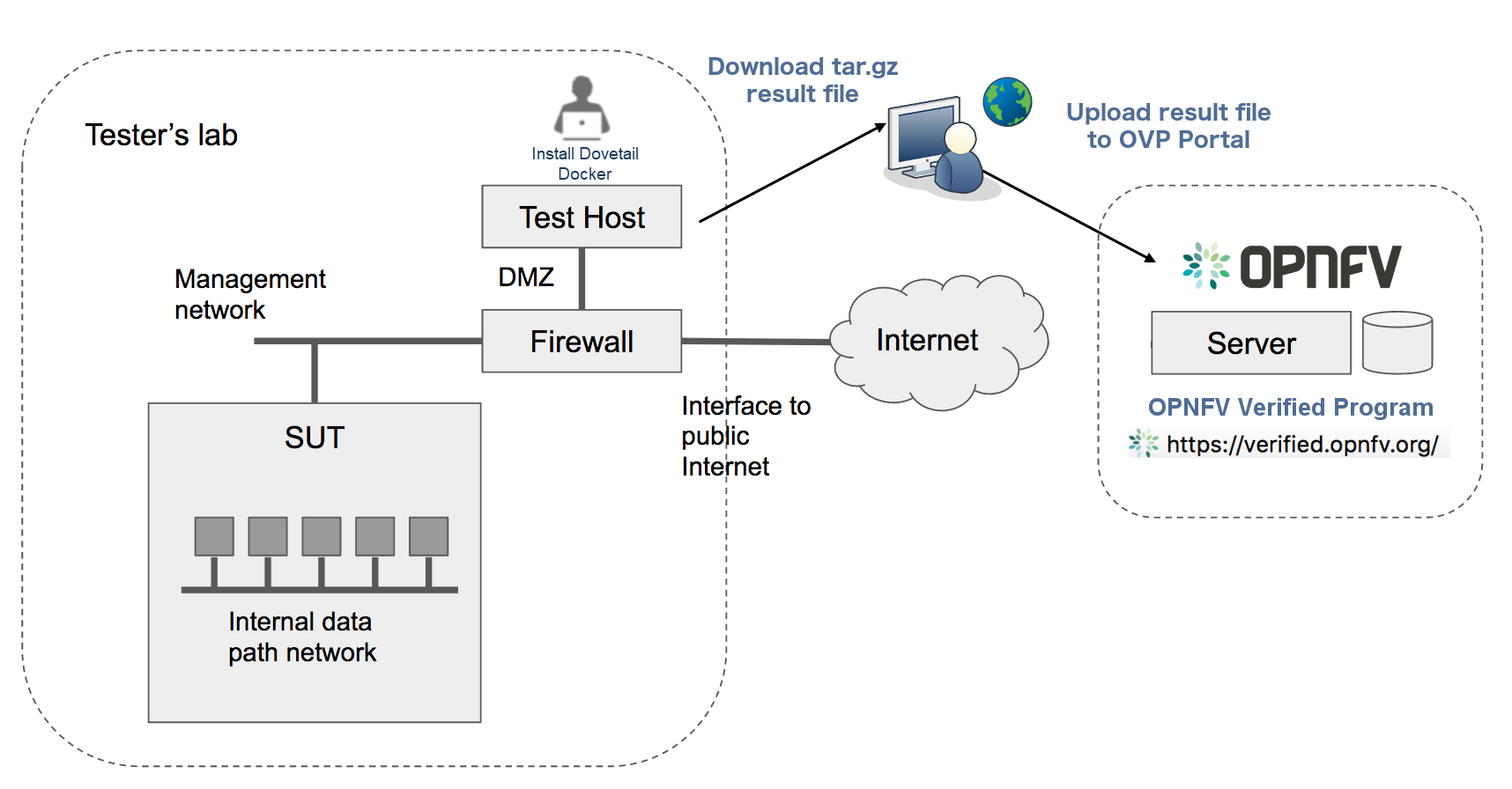

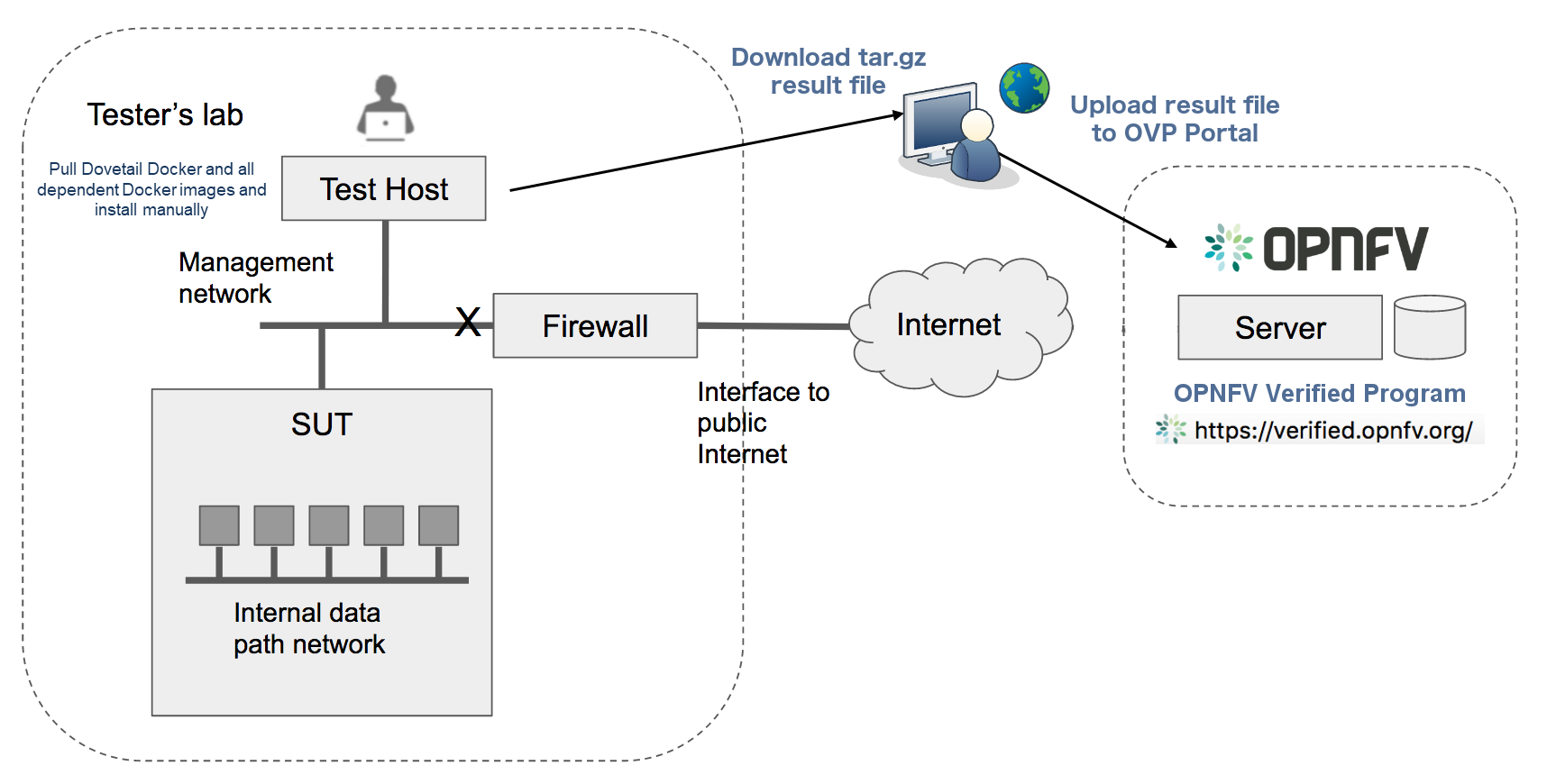

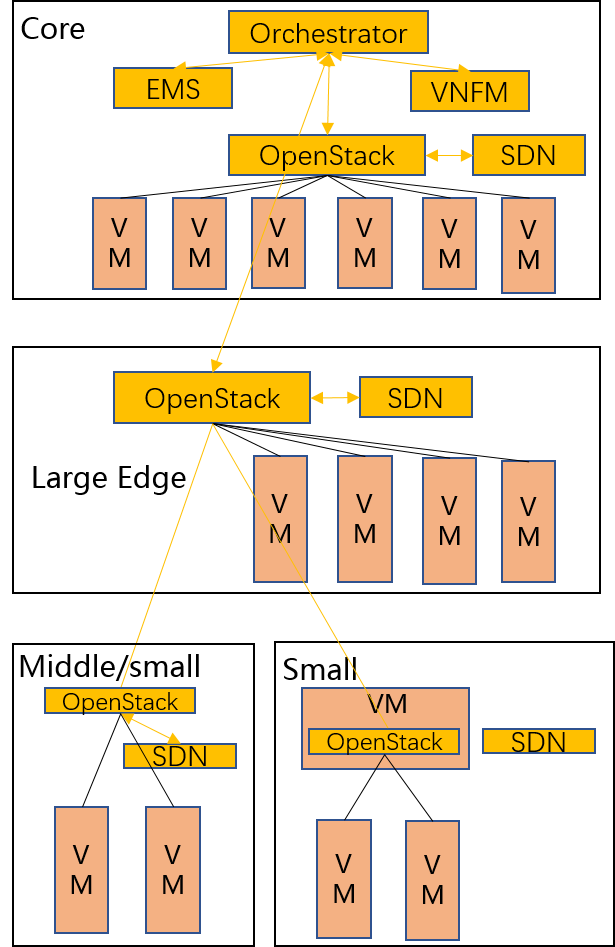

Calipso is distributed for enterprises - ‘S’ release, through

calipso.io, and for service providers - ‘P’ release, through OPNFV.

Calipso.io

Administration Guide

Copyright (c) 2017 Koren Lev (Cisco Systems), Yaron Yogev (Cisco Systems) and others

All rights reserved. This program and the accompanying materials

are made available under the terms of the Apache License, Version 2.0

which accompanies this distribution, and is available at

http://www.apache.org/licenses/LICENSE-2.0

Project “Calipso” tries to illuminate complex virtual networking with

real time operational state visibility for large and highly distributed

Virtual Infrastructure Management (VIM).

Calipso provides visible insights using smart discovery and virtual

topological representation in graphs, with monitoring per object in the

graph inventory to reduce error vectors and troubleshooting, maintenance

cycles for VIM operators and administrators.

Calipso model, described in this document, was built for

multi-environment and many VIM variances, the model was tested

successfully (as of Aug 27th) against 60 different VIM

variances (Distributions, Versions, Networking Drivers and Types).

Table of Contents

Calipso.io Administration Guide 1

1 Environments config 3

2 UI overview 5

2.1 User management 7

2.2 Logging in and out 8

2.3 Messaging check 9

2.4 Adding a new environment 9

3 Preparing an environment for scanning 10

3.1 Where to deploy Calipso application 10

3.2 Environment setup 10

3.3 Filling the environment config data 11

3.4 Testing the connections 11

4 Links and Cliques 12

4.1 Adding environment clique_types 13

5 Environment scanning 14

5.1 UI scanning request 14

5.2 UI scan schedule request 16

5.3 API scanning request 17

5.4 CLI scanning in the calipso-scan container 18

5.4.1 Clique Scanning 19

5.4.2 Viewing results 20

6 Editing or deleting environments 20

7 Event-based scanning 21

7.1 Enabling event-based scanning 21

7.2 Event-based handling details 22

8 ACI scanning 34

9 Monitoring enablement 36

10 Modules data flows 38

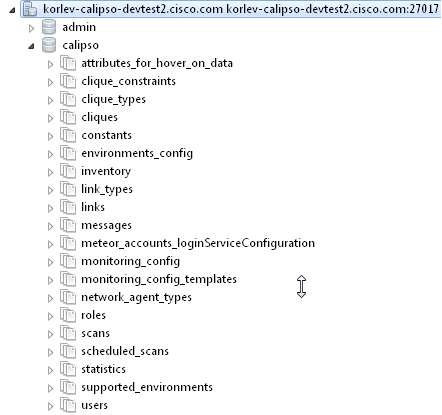

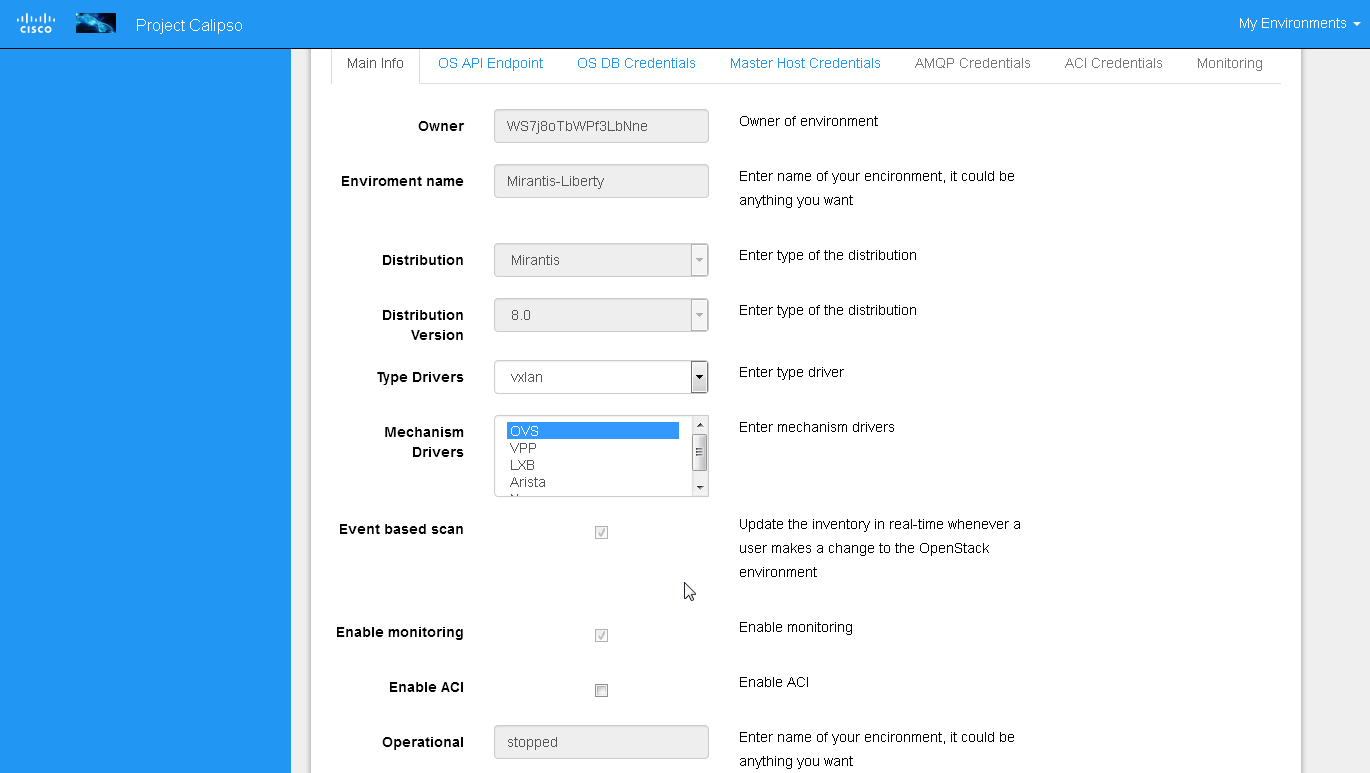

Environments config

Environment is defined as a certain type of Virtual Infrastructure

facility the runs under a single unified Management (like an

OpenStack facility).

Everything in Calipso application rely on environments config, this

is maintained in the “environments_config” collection in the

mongo Calipso DB.

Environment configs are pushed down to Calipso DB either through UI

or API (and only in OPNFV case Calipso provides an automated program

to build all needed environments_config parameters for an ‘Apex’

distribution automatically).

When scanning and discovering items Calipso uses this configuration

document for successful scanning results, here is an example of an

environment config document:

**{ **

**“name”: “DEMO-ENVIRONMENT-SCHEME”, **

**“enable_monitoring”: true, **

**“last_scanned”: “filled-by-scanning”, **

**“app_path”: “/home/scan/calipso_prod/app”, **

**“type”: “environment”, **

**“distribution”: “Mirantis”, **

**“distribution_version”: “8.0”, **

**“mechanism_drivers”: [“OVS”], **

“type_drivers”: “vxlan”

**“operational”: “stopped”, **

**“listen”: true, **

**“scanned”: false, **

“configuration”: [

{

**“name”: “OpenStack”, **

**“port”:”5000”, **

**“user”: “adminuser”, **

**“pwd”: “dummy_pwd”, **

**“host”: “10.0.0.1”, **

“admin_token”: “dummy_token”

**}, **

{

**“name”: “mysql”, **

**“pwd”: “dummy_pwd”, **

**“host”: “10.0.0.1”, **

**“port”: “3307”, **

“user”: “mysqluser”

**}, **

{

**“name”: “CLI”, **

**“user”: “sshuser”, **

**“host”: “10.0.0.1”, **

“pwd”: “dummy_pwd”

**}, **

{

**“name”: “AMQP”, **

**“pwd”: “dummy_pwd”, **

**“host”: “10.0.0.1”, **

**“port”: “5673”, **

“user”: “rabbitmquser”

**}, **

{

**“name”: “Monitoring”, **

**“ssh_user”: “root”, **

**“server_ip”: “10.0.0.1”, **

**“ssh_password”: “dummy_pwd”, **

**“rabbitmq_pass”: “dummy_pwd”, **

**“rabbitmq_user”: “sensu”, **

**“rabbitmq_port”: “5671”, **

**“provision”: “None”, **

**“env_type”: “production”, **

**“ssh_port”: “20022”, **

**“config_folder”: “/local_dir/sensu_config”, **

**“server_name”: “sensu_server”, **

**“type”: “Sensu”, **

“api_port”: NumberInt(4567)

**}, **

{

**“name”: “ACI”, **

**“user”: “admin”, **

**“host”: “10.1.1.104”, **

“pwd”: “dummy_pwd”

}

**], **

**“user”: “wNLeBJxNDyw8G7Ssg”, **

“auth”: {

“view-env”: [

“wNLeBJxNDyw8G7Ssg”

**], **

“edit-env”: [

“wNLeBJxNDyw8G7Ssg”

]

**}, **

}

Here is a brief explanation of the purpose of major keys in this

environment configuration doc:

Distribution: captures type of VIM, used for scanning of

objects, links and cliques.

Distribution_version: captures version of VIM distribution,

used for scanning of objects, links and cliques.

Mechanism_driver: captures virtual switch type used by the VIM,

used for scanning of objects, links and cliques.

Type_driver: captures virtual switch tunneling type used by the

switch, used for scanning of objects, links and cliques.

Listen: defines whether or not to use Calipso listener against

the VIM BUS for updating inventory in real-time from VIM events.

Scanned: defines whether or not Calipso ran a full and a

successful scan against this environment.

Last_scanned: end time of last scan.

Operational: defines whether or not VIM environment endpoints

are up and running.

Enable_monitoring: defines whether or not Calipso should deploy

monitoring of the inventory objects running inside all environment

hosts.

Configuration-OpenStack: defines credentials for OpenStack API

endpoints access.

Configuration-mysql: defines credentials for OpenStack DB

access.

Configuration-CLI: defines credentials for servers CLI access.

Configuration-AMQP: defines credentials for OpenStack BUS

access.

Configuration-Monitoring: defines credentials and setup for

Calipso sensu server (see monitoring-guide for details).

Configuration-ACI: defines credentials for ACI switched

management API, if exists.

User and auth: used for UI authorizations to view and edit this

environment.

App-path: defines the root directory of the scanning

application.

* This guide will help you understand how-to add new environment

through the provided Calispo UI module and then how-to use this

environment (and potentially many others) for scanning and real-time

inventories collection.

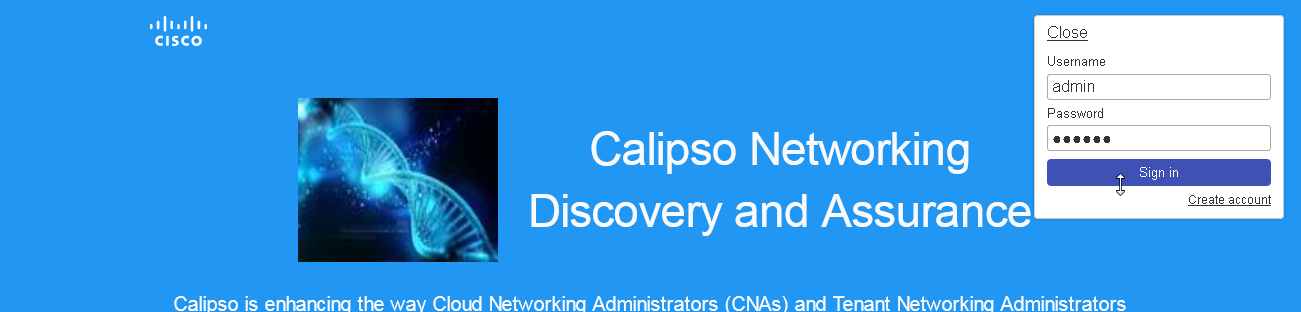

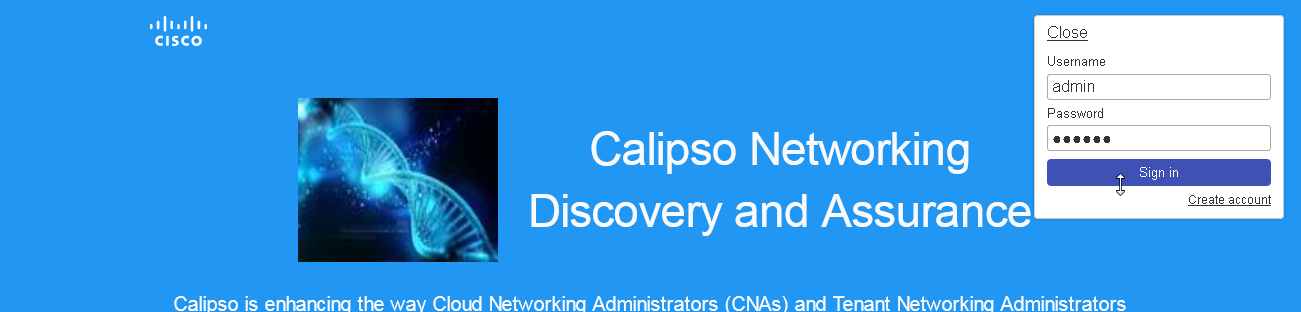

UI overview

Cloud administrator can use the Calipso UI for he’s daily tasks.

Once Calipso containers are running (see quickstart-guide) the UI

will be available at:

http://server-ip:80 , default login credentials: admin/123456.

Before logging in, while at the main landing page, a generic

information is provided.

Post login, at the main dashboard you can click on “Get started” and

view a short guide for using some of the basic UI functions,

available at:

server-ip/getstarted.

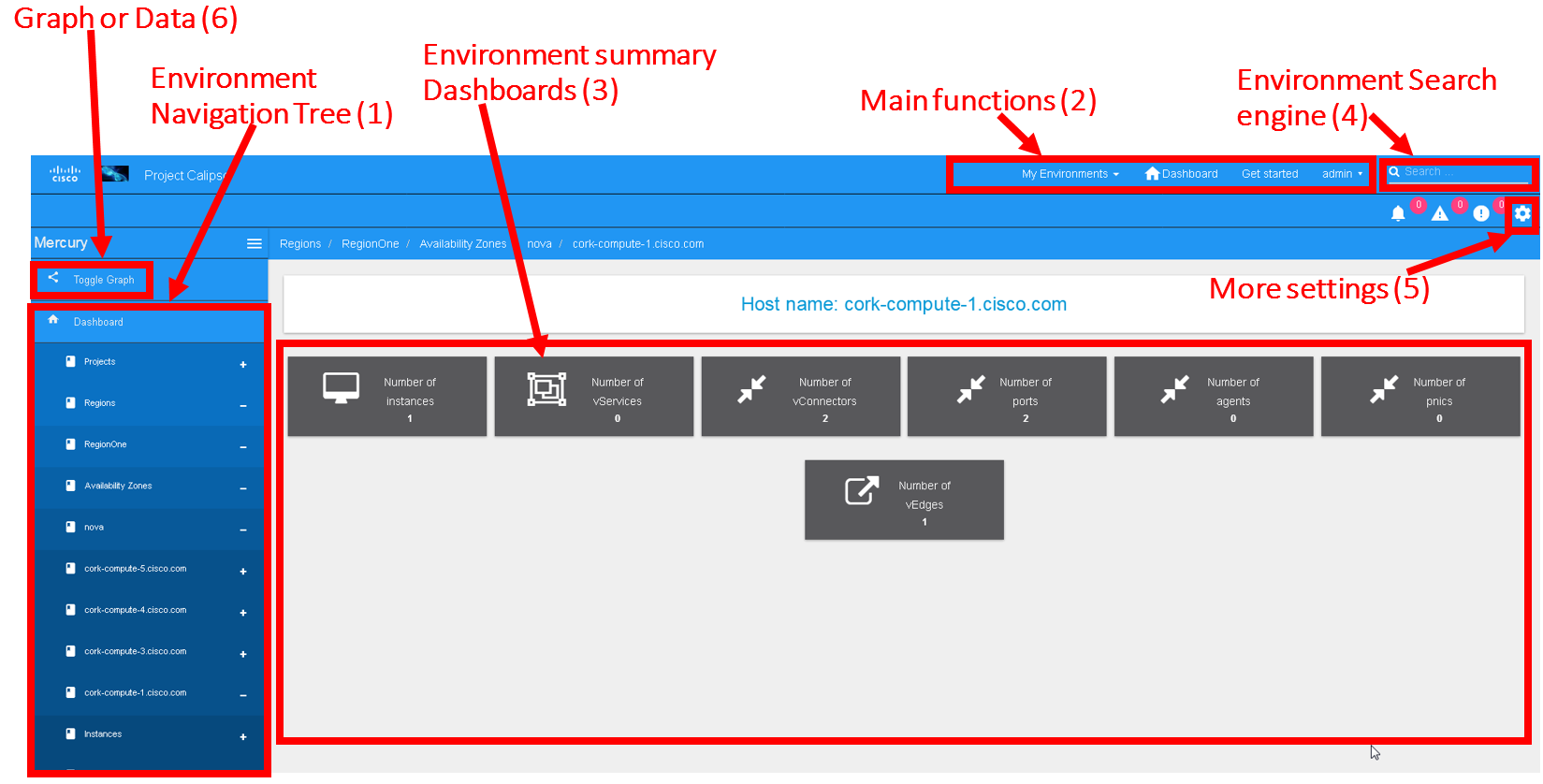

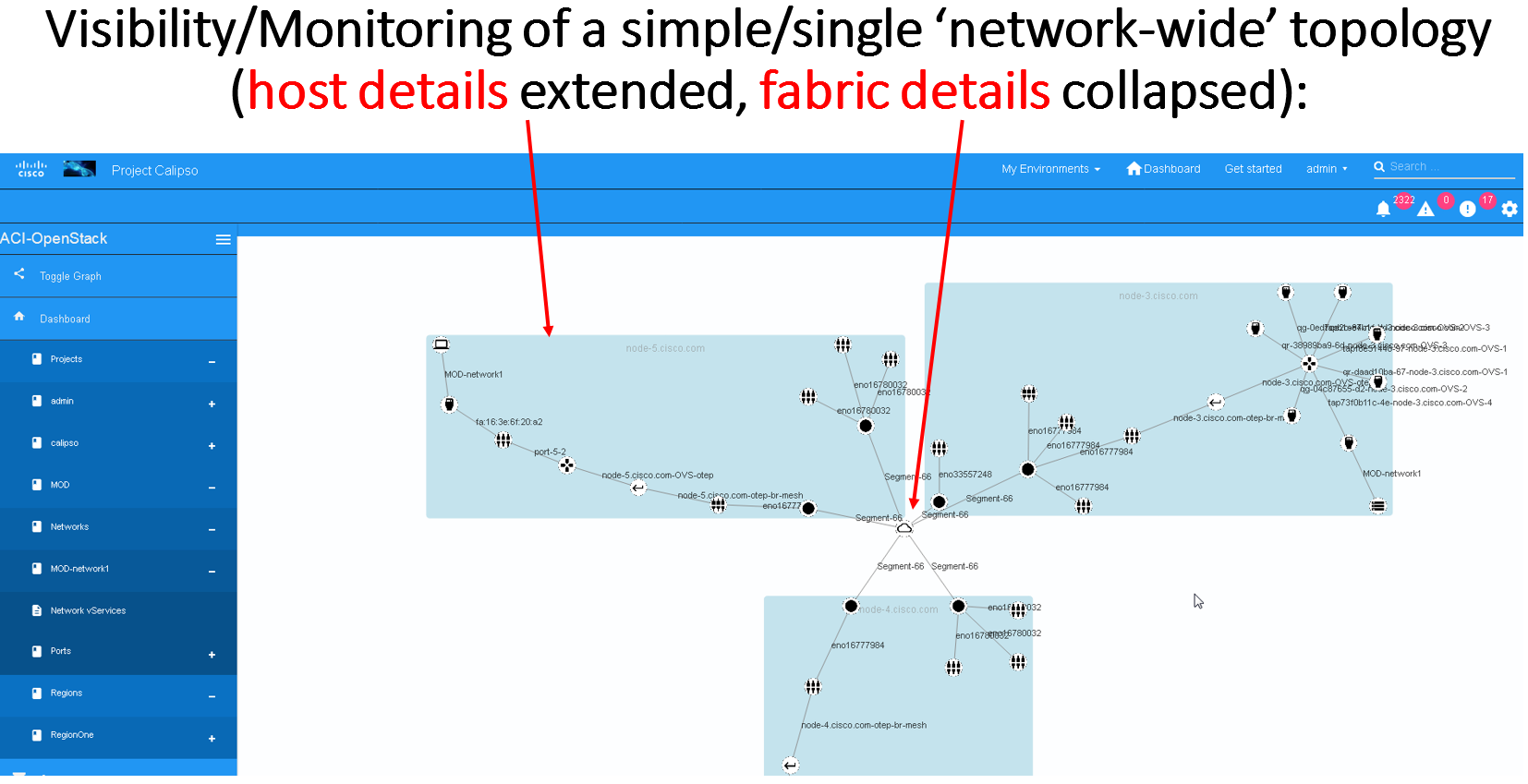

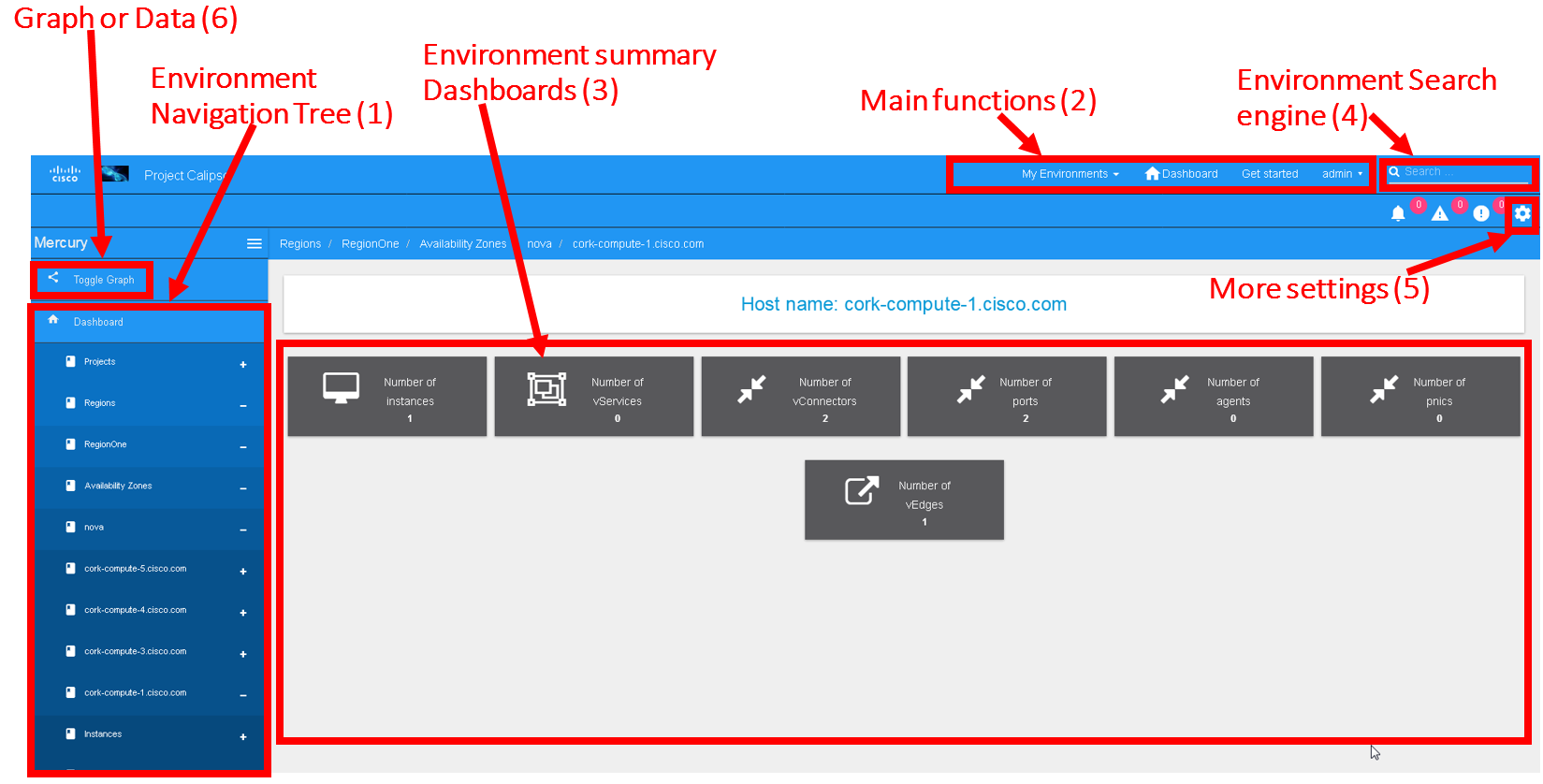

The main areas of interest are shown in the following screenshot:

Main areas on UI:

Main areas details:

Navigation Tree(1): Hierarchy searching through the inventory

using objects and parents details, to lookup a focal point of

interest for graphing or data gathering.

Main functions (2): Jumping between highest level dashboard (all

environments), specific environment and some generic help is

provided in this area.

Environment Summary (3): The central area where the data is

exposed, either through graph or through widget-attribute-listing.

Search engine (4): Finding interesting focal points faster

through basic object naming lookups, then clicking on results to get

transferred directly to that specific object dashboard. Searches are

conducted across all environments.

More settings (5): In this area the main collections of data are

exposed, like scans, schedules, messaging, clique_types,

link_types and others.

Graph or Data toggle (6): When focusing on a certain focal

point, this button allows changing from a graph-view to simple

data-view per request, if no graph is available for a certain object

the data-view is used by default, if information is missing try this

button first to make sure the correct view is chosen.

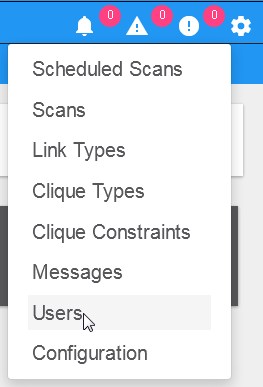

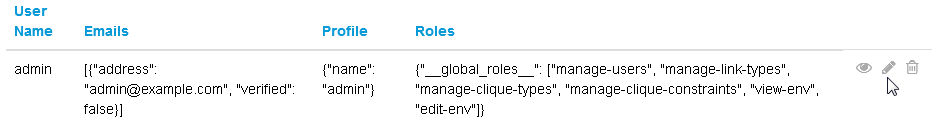

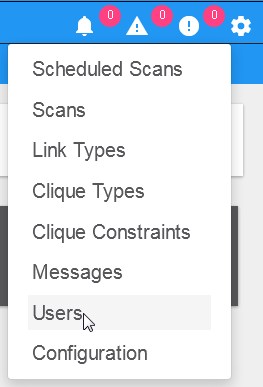

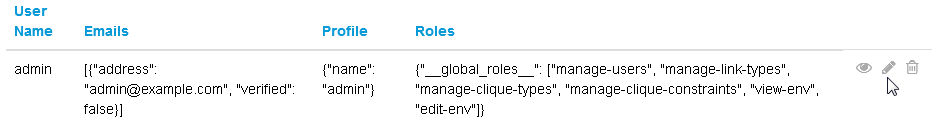

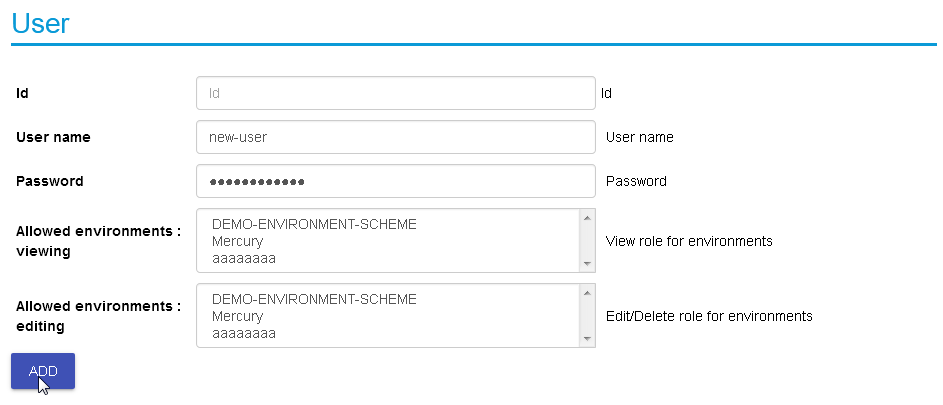

User management

The first place an administrator might use is the user’s

configurations, this is where a basic RBAC is provided for

authorizing access to the UI functions. Use the ‘settings’ button

and choose ‘users’ to access:

Editing the admin user password is allowed here:

Note:

The ‘admin’ user is allowed all functions on all environments, you

shouldn’t change this behavior and you should never delete this

user, or you’ll need re-install Calipso.

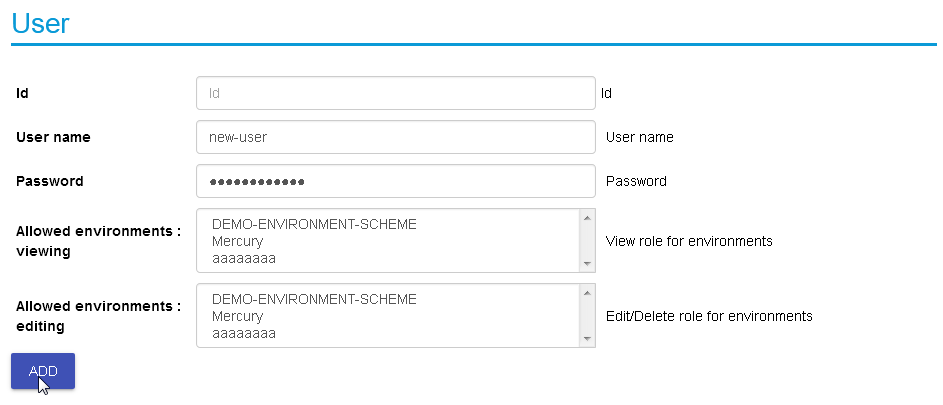

Adding new user is provided when clicking the “Create new user”

option:

Creating a new user:

Before environments are configured there is not a lot of options

here, once environments are defined (one or more), users can be

allowed to edit or view-only those environments.

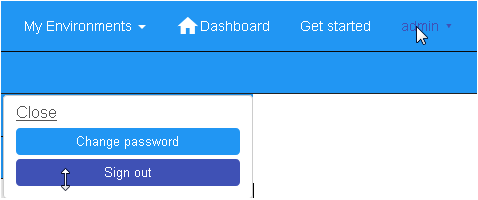

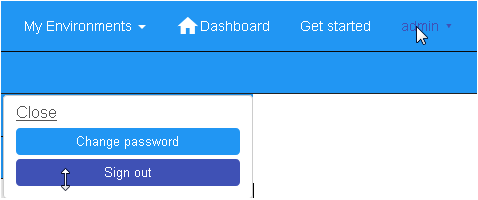

Logging in and out

To logout and re-login with different user credentials you can click

the username option and choose to sign out:

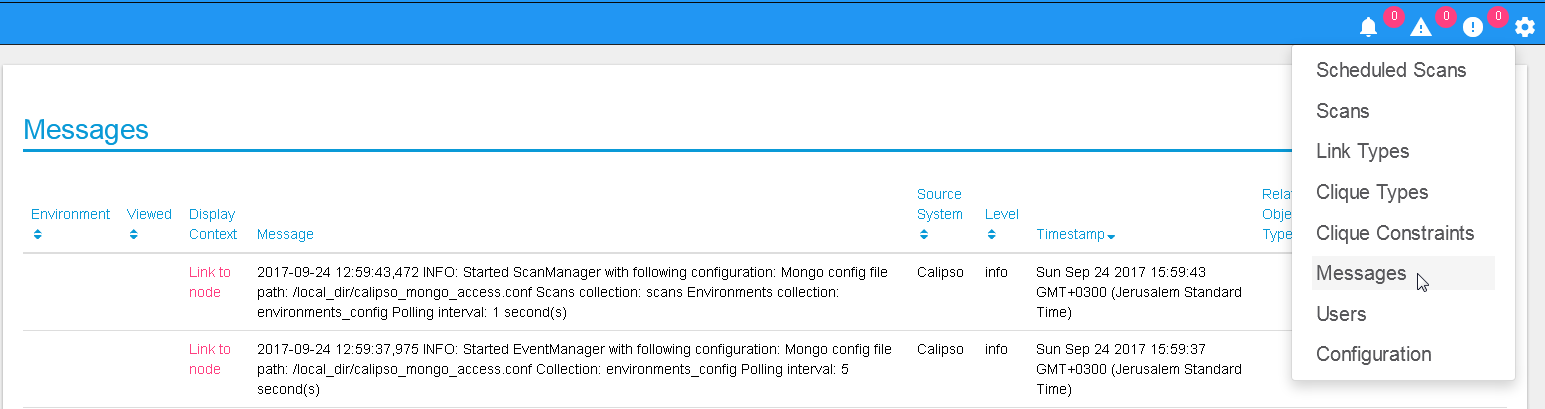

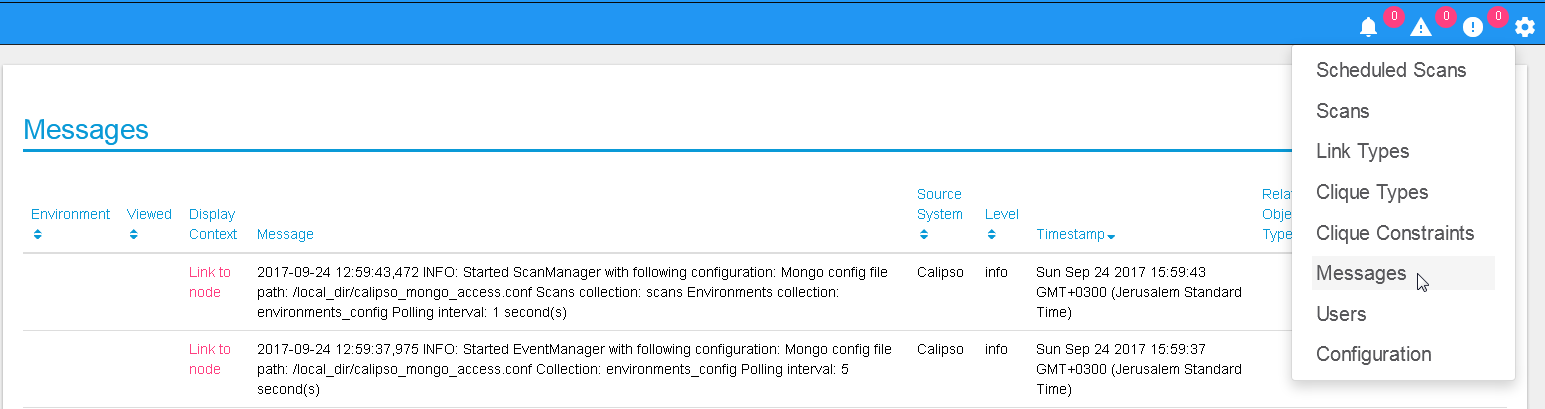

Messaging check

When calispo-scan and calipso-listen containers are running, they

provide basic messages on their processes status, this should be

exposed thorough the messaging system up to the UI, to validate this

choose ‘messages’ from the settings button:

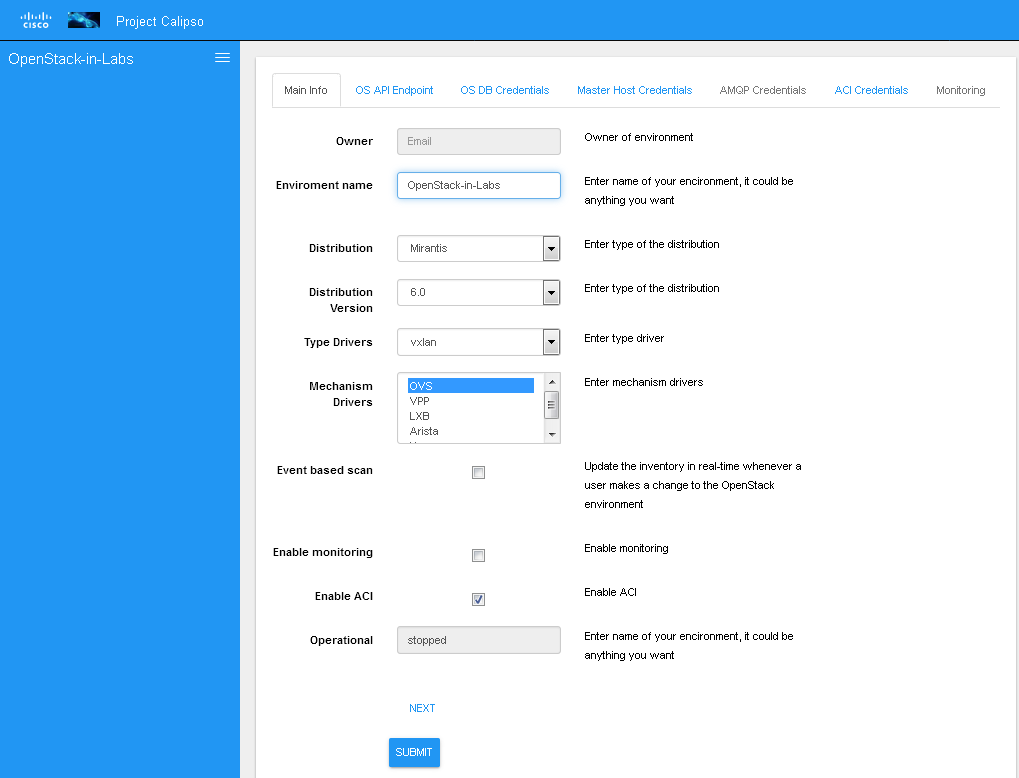

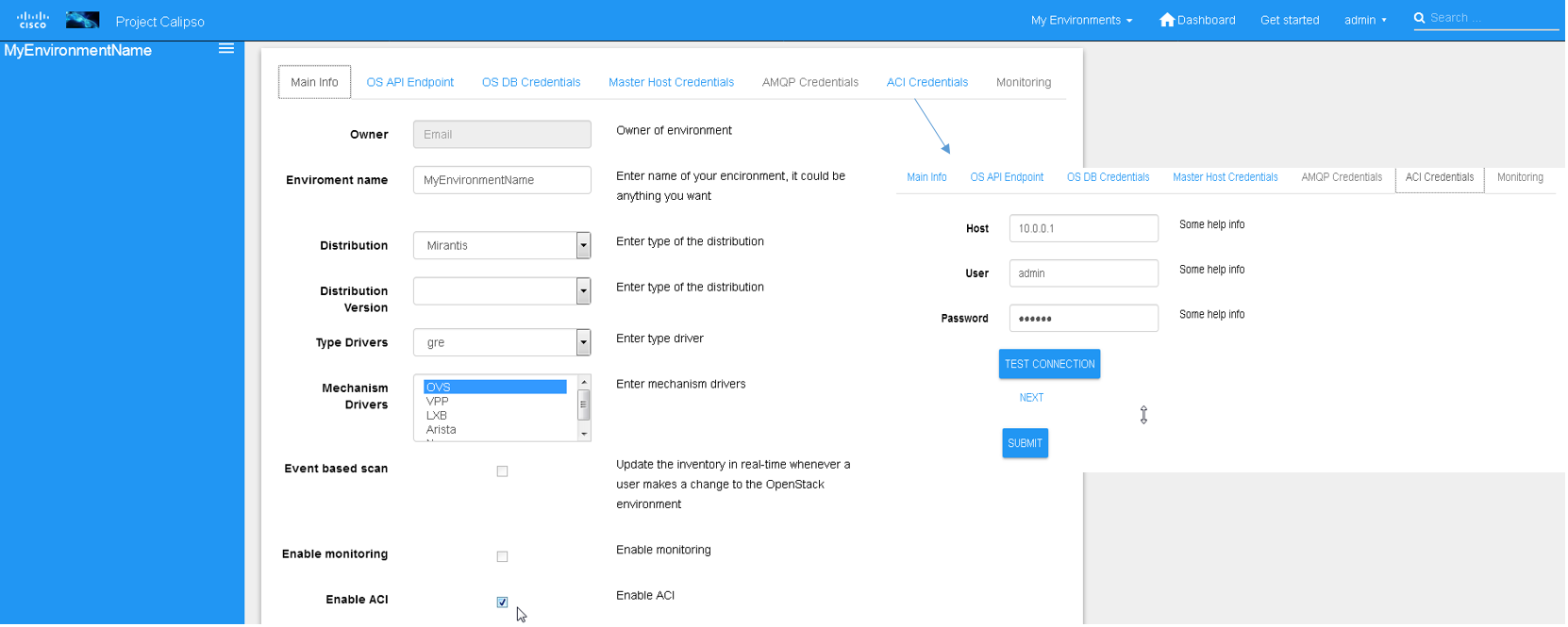

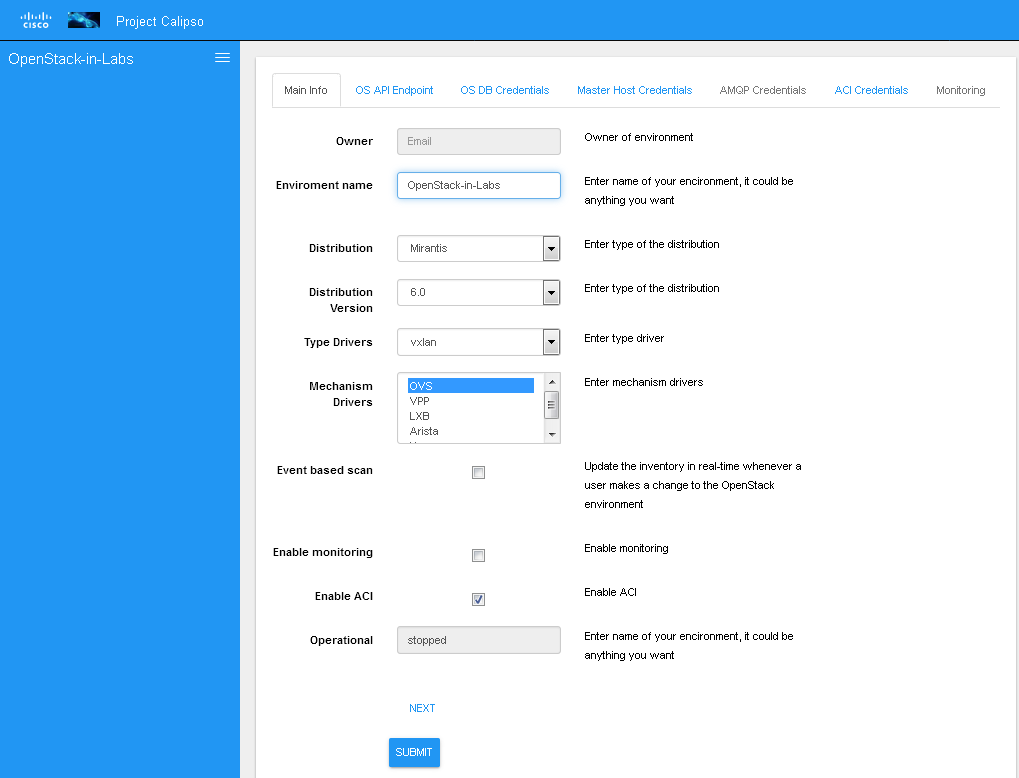

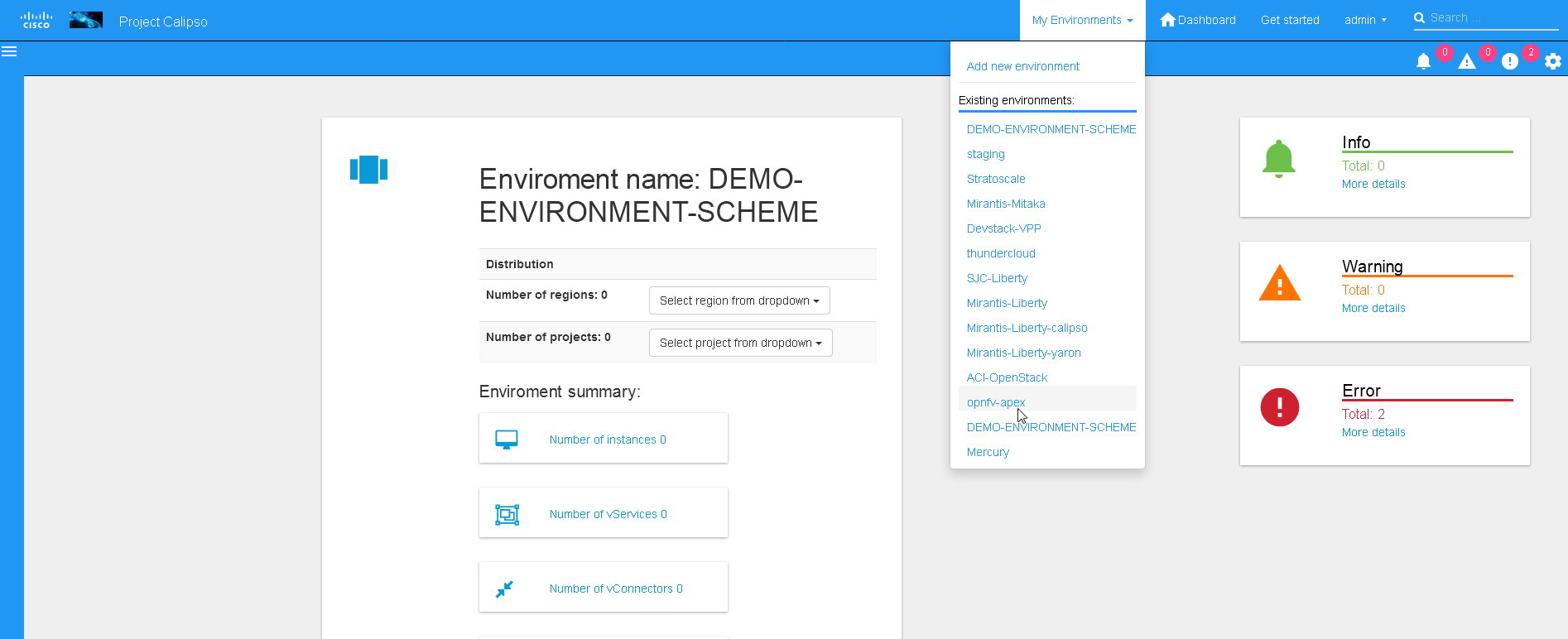

Adding a new environment

As explained above, environment configuration is the pre requisite

for any Calipso data gathering, goto “My Environments” -> and “Add

new Environment” to start building the environment configuration

scheme:

Note: this is automated with OPNFV apex distro, where Calipso

auto-discovers all credentials

Preparing an environment for scanning

Some preparation is needed for allowing Calipso to successfully

gather data from the underlying systems running in the virtual

infrastructure environment. This chapter explain the basic

requirements and provide recommendations.

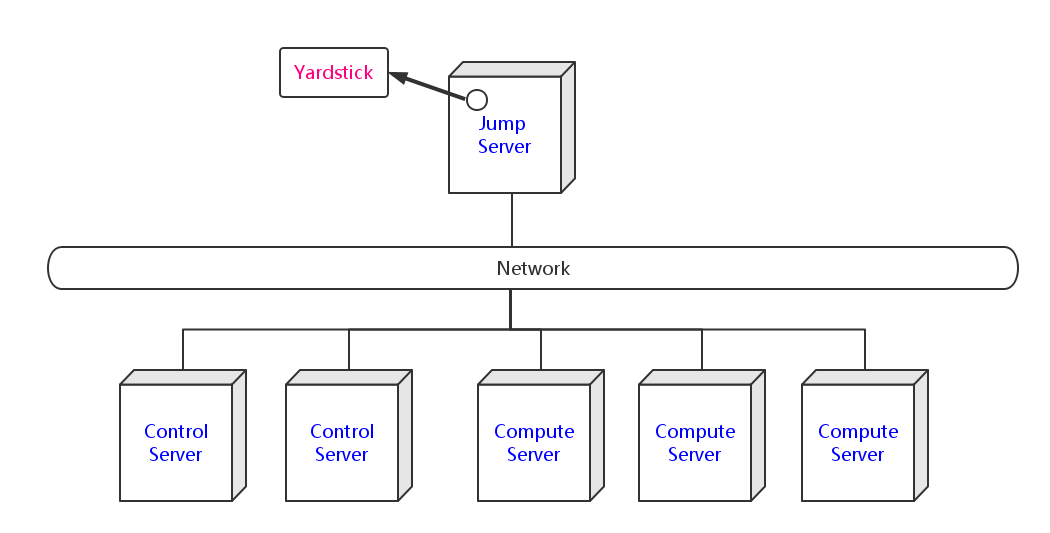

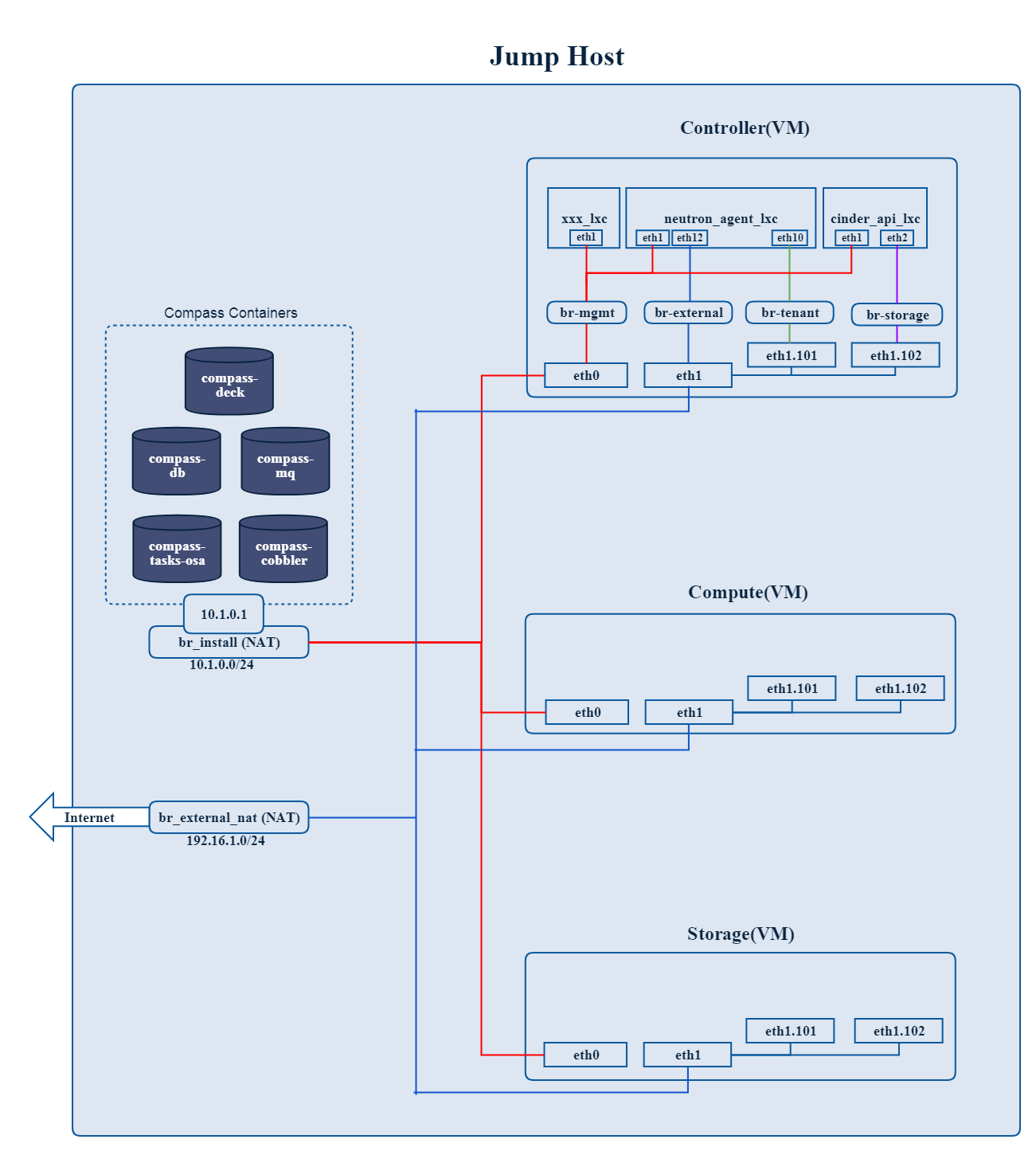

Where to deploy Calipso application

Calipso application replaces the manual discovery steps typically

done by the administrator on every maintenance and troubleshooting

cycles, It needs to have the administrators privileges and is most

accurate when placed on one of the controllers or a“jump server”

deployed as part of the cloud virtual infrastructure, Calipso calls

this server a “Master host”.

Consider Calipso as yet another cloud infrastructure module, similar

to neutron, nova.

Per supported distributions we recommend installing the Calipso

application at:

- Mirantis: on the ‘Fuel’ or ‘MCP’ server.

- RDO/Packstack: where the ansible playbooks are deployed.

- Canonical/Ubuntu: on the juju server.

- Triple-O/Apex: on the jump host server.

Environment setup

The following steps should be taken to enable Calispo’s scanner and

listener to connect to the environment controllers and compute

hosts:

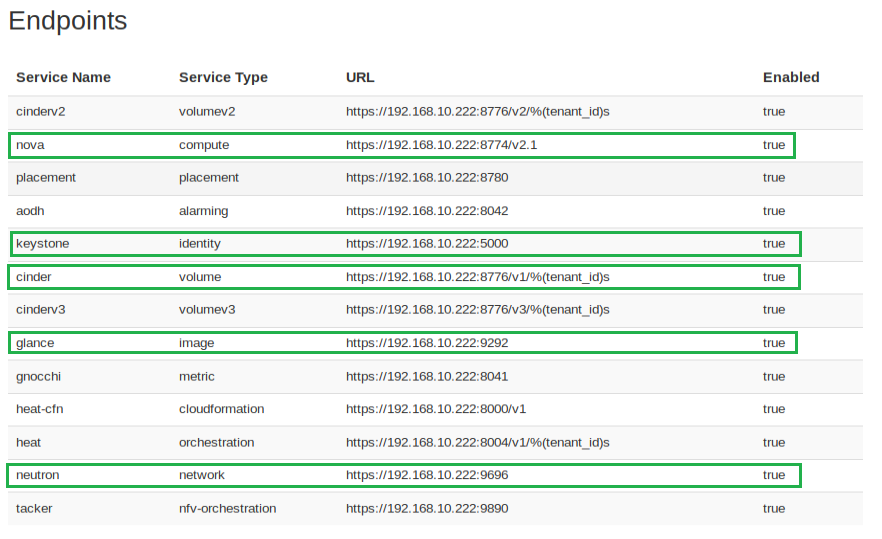

OpenStack API endpoints : Remote access user accessible from the

master host with the required credentials and allows typical ports:

5000, 35357, 8777, 8773, 8774, 8775, 9696

OpenStack DB (MariaDB or MySQL): Remote access user accessible from

the master host to ports 3306 or 3307 allowed access to all Databases

as read-only.

Master host SSH access: Remote access user with sudo privileges

accessible from the master host through either user/pass or rsa keys,

the master host itself should then be allowed access using rsa-keys

(password-less) to all other infrastructure hosts, all allowing to

run sudo CLI commands over tty, when commands entered from the master

host source itself.

AMQP message BUS (like Rabbitmq): allowed remote access from the

master host to listen for all events generated using a guest account

with a password.

Physical switch controller (like ACI): admin user/pass accessed from

master host.

Note: The current lack of operational toolsets like Calipso forces

the use of the above scanning methods, the purpose of Calipso is to

deploy its scanning engine as an agent on all environment hosts, in

such scenario the requirements above might be deprecated and the

scanning itself can be made more efficient.

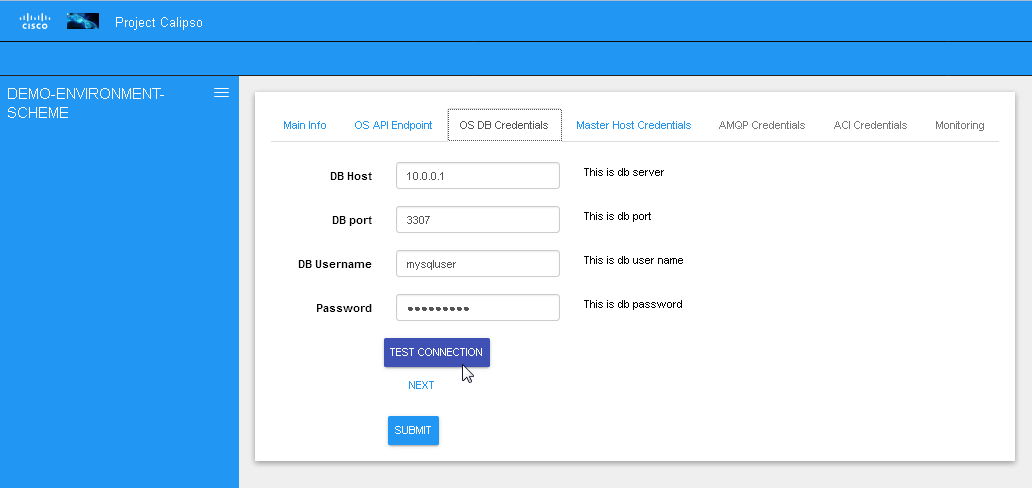

Filling the environment config data

As explained in chapter 1 above, environment configuration is the

pre requisite and all data required is modeled as described. See

api-guide for details on submitting those details through calispo

api module. When using the UI module, follow the sections tabs and

fill the needed data per help messages and the explanations in

chapter 1.

Only the AMQP, Monitoring and ACI sections in environment_config

documents are optional, per the requirements detailed below on this

guide.

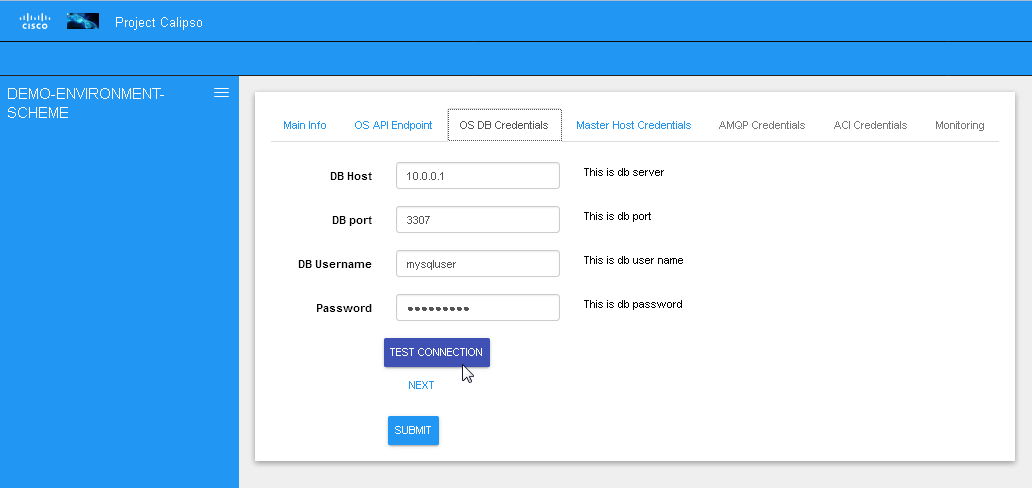

Testing the connections

Before submitting the environment_config document it is wise to

test the connections. Each section tab in the environment

configuration has an optional butting for testing the connection

tagged “test connection”. When this button is clicked, a check is

made to make sure all needed data is entered correctly, then a

request is sent down to mongoDB to the “connection_tests”

collection. Then the calispo scanning module will make the required

test and will push back a response message alerting whether or not

this connection is possible with the provided details and

credentials.

Test connection per configuration section:

With the above tool, the administrator can be assured that Calipso

scanning will be successful and the results will be an accurate

representation of the state of he’s live environment.

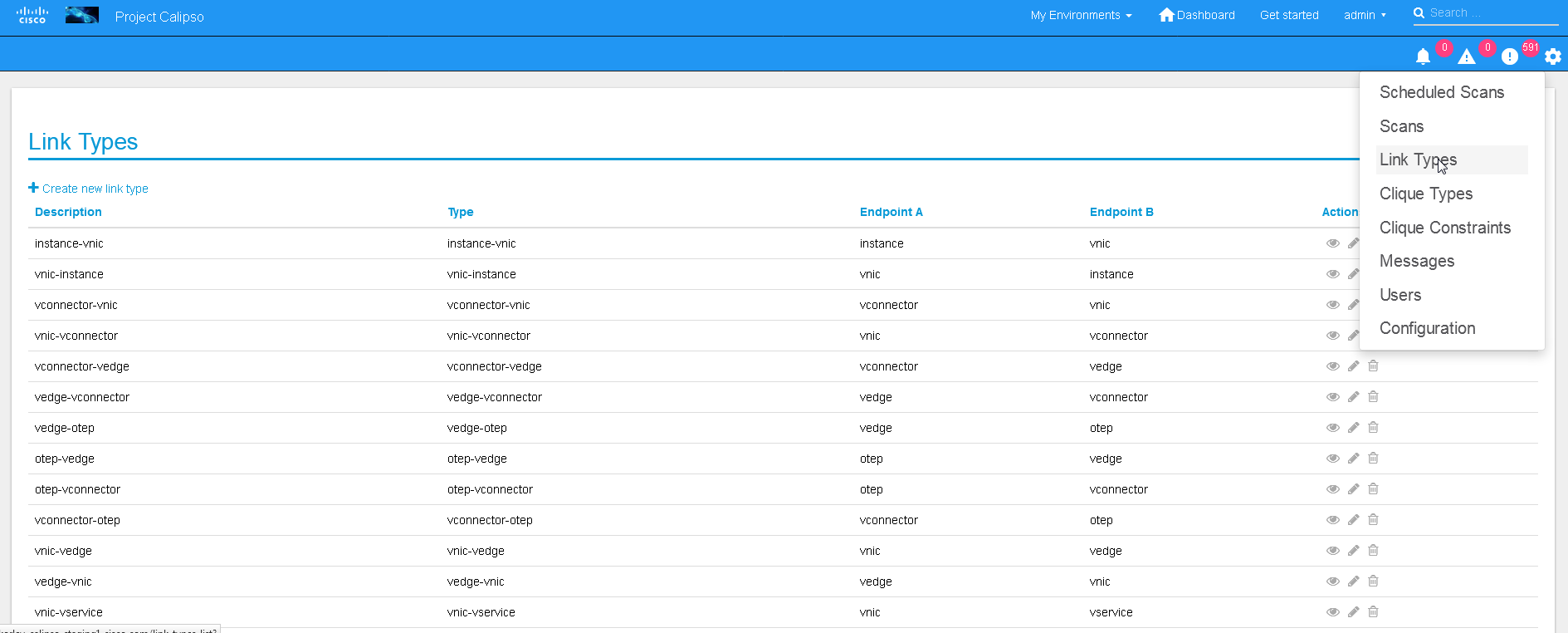

Links and Cliques

A very powerful capability in Calipso allows it to be very adaptive

and support many variances of VIM environments, this capability lies

in its objects, links and cliques models enabling the scanning of

data and analysis of inter-connections and creation of many types of

topology graphs..

Please refer to calipso-model document for more details.

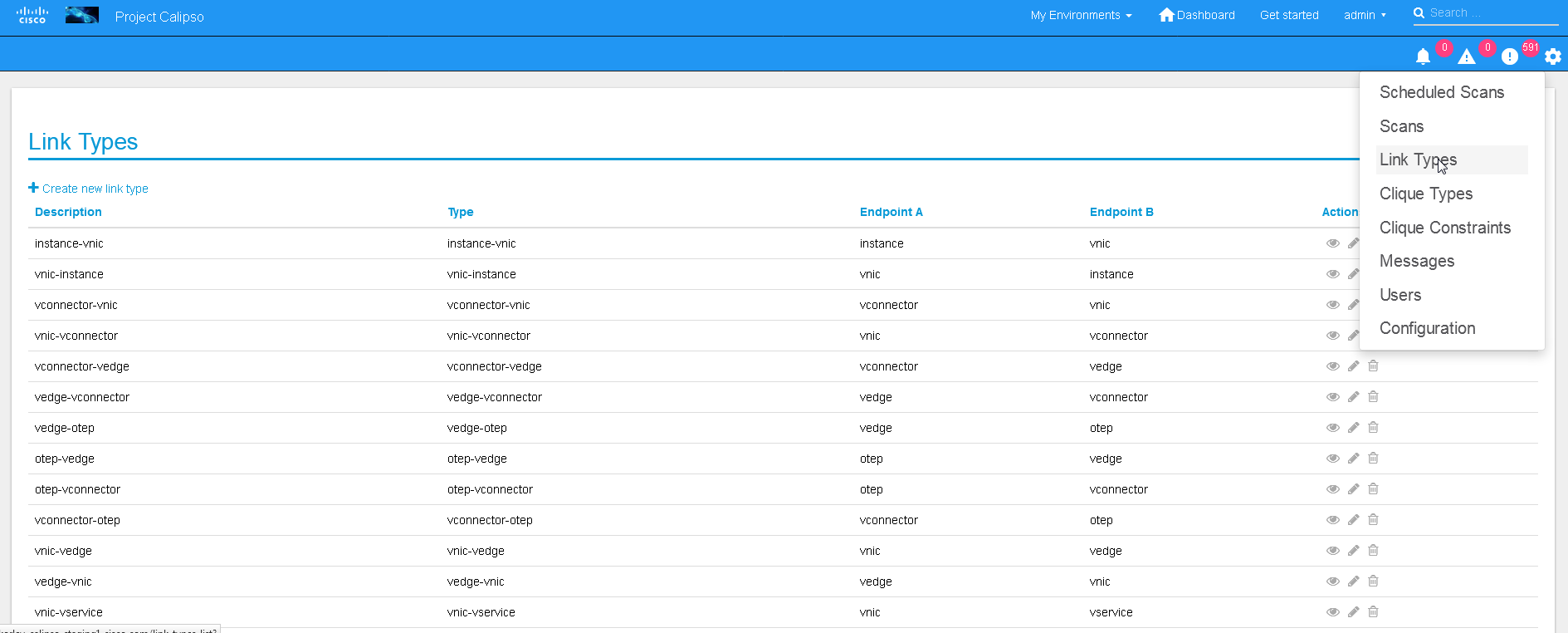

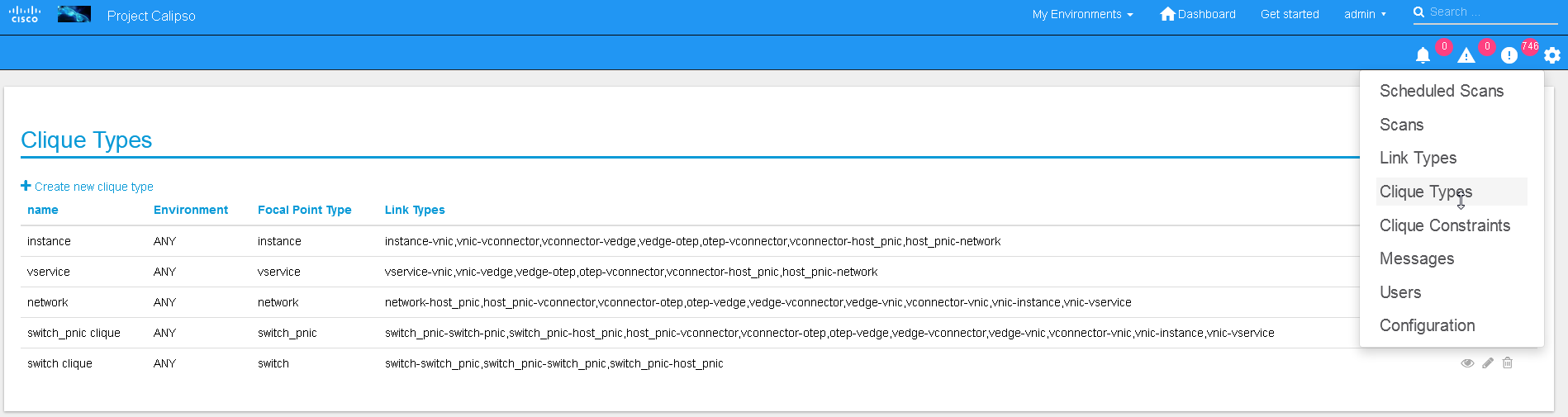

The UI allows viewing and editing of Link types and Clique types

through the settings options:

Link types:

Note:

We currently recommend not to add nor edit the Link types pre-built

in Calipso’s latest release (allowed only for the ‘admin’ user), as

it is tested and proven to support more than 60 popular VIM

variances.

An administrator might choose to define several environment specific

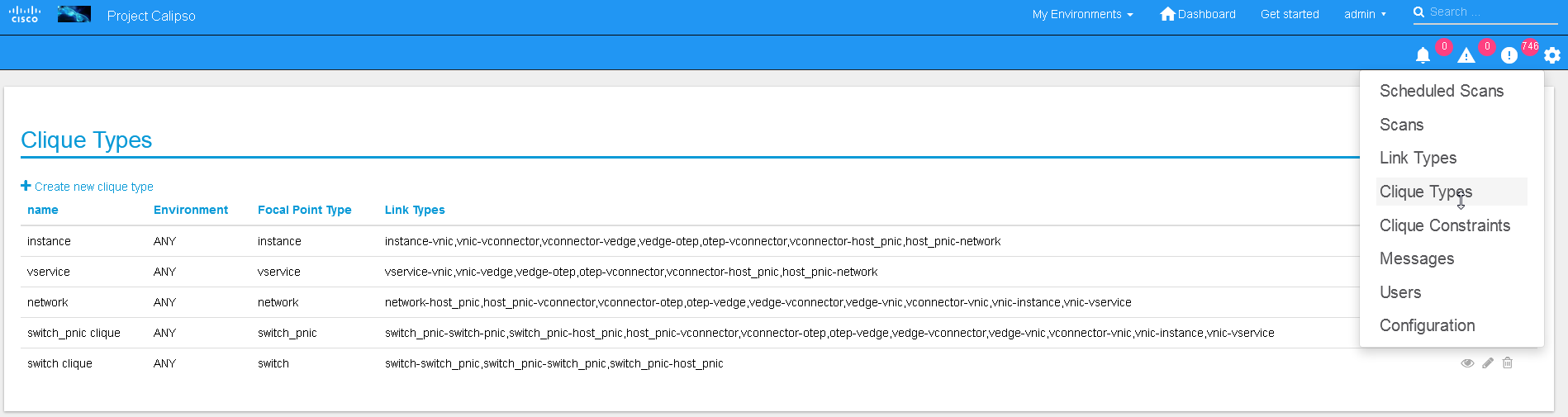

Clique types for creating favorite graphs using the focal_point

objects and link_types lists already built-in:

Adding environment clique_types

Use either the API or the UI to define specific environment

clique_types.

For adding clique_types, use settings menu and choose “Create new

clique type” option, then provide a specific environment name (per

previous environment configurations), define a focal_point (like:

instance, or other object types) and a list of resulted link_types

to include in the final topology graph. Refer to calipso-model

document for more details.

Clique_types are needed for accurate graph buildup, before sending

a scan request.

Several defaults are provided with each new Calipso release.

Clique types:

Note: ask calipso developers for recommended clique_types

(pre-built in several Calipso deployments), per distribution

variance, fully tested by Calipso developers:

Environment scanning

Once environment is setup correctly, environment_config data is

filled and tested, scanning can start. This is can be done with the

following four options:

UI scanning request

UI scan schedule request

API scanning or scheduling request.

CLI scanning in the calipso-scan container.

The following sections with describe those scanning options.

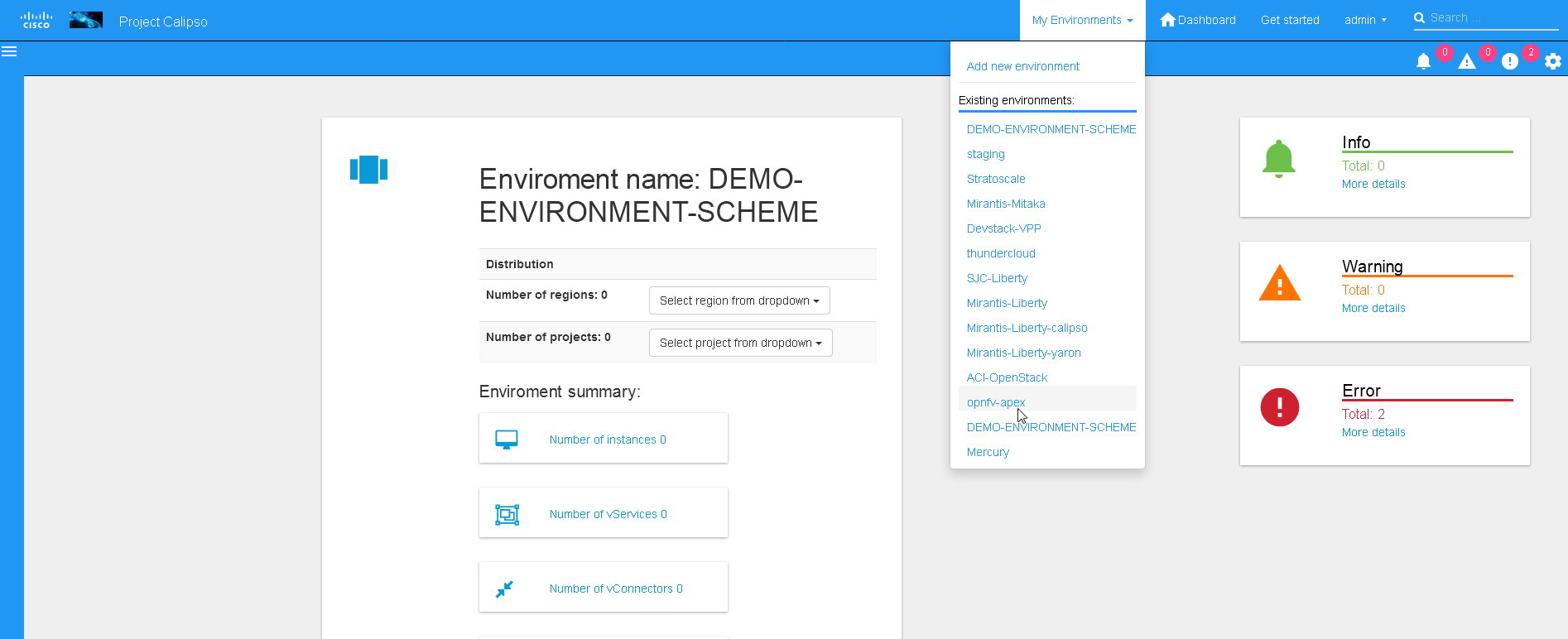

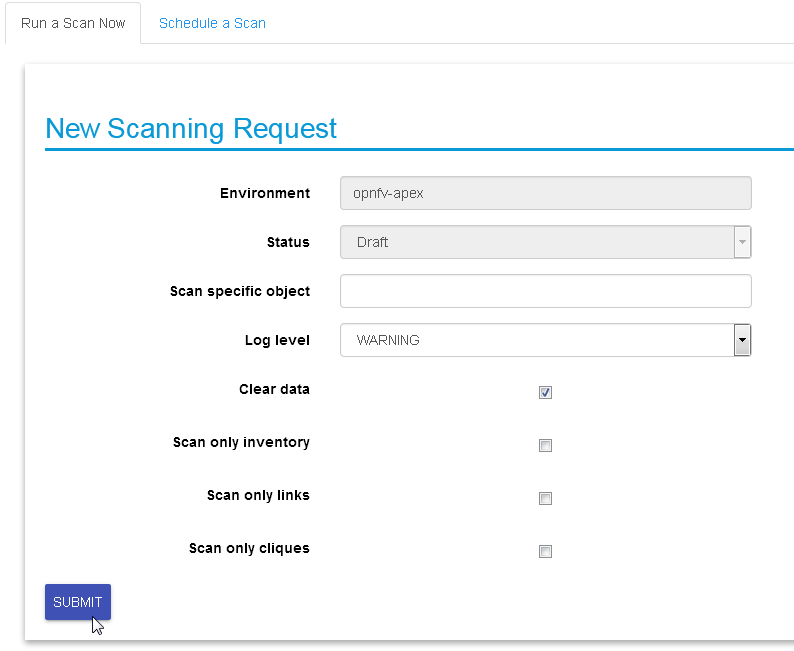

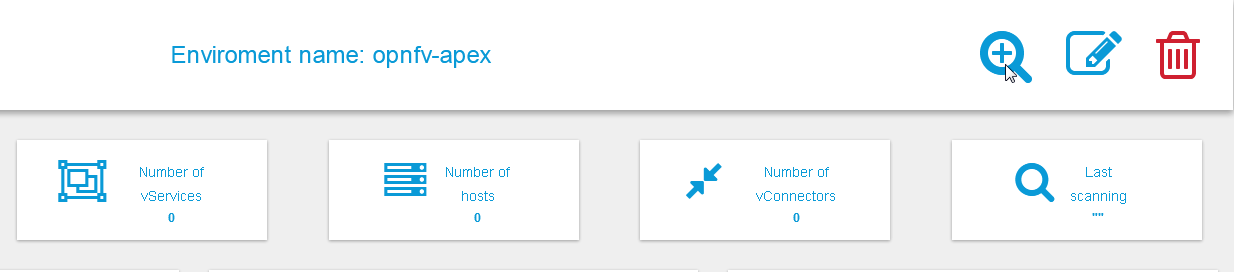

UI scanning request

This can be accomplished after environment configuration has been

submitted, the environment name will be listed under “My

environment” and the administrator can choose it from the list and

login to the specific environment dashboard:

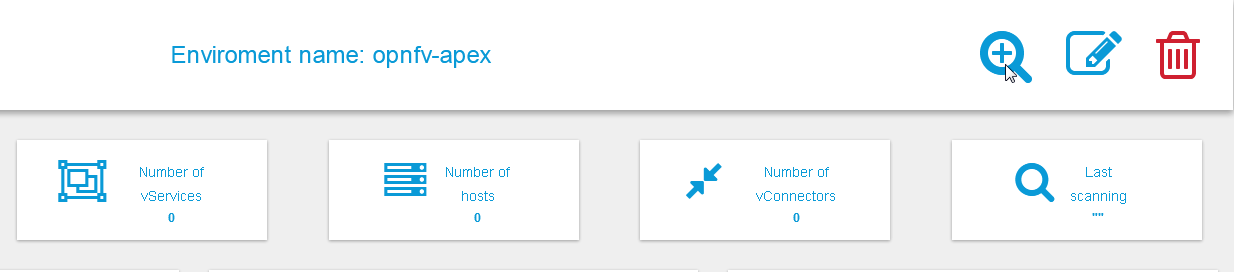

Onces inside a specific environment dashboard the administrator can

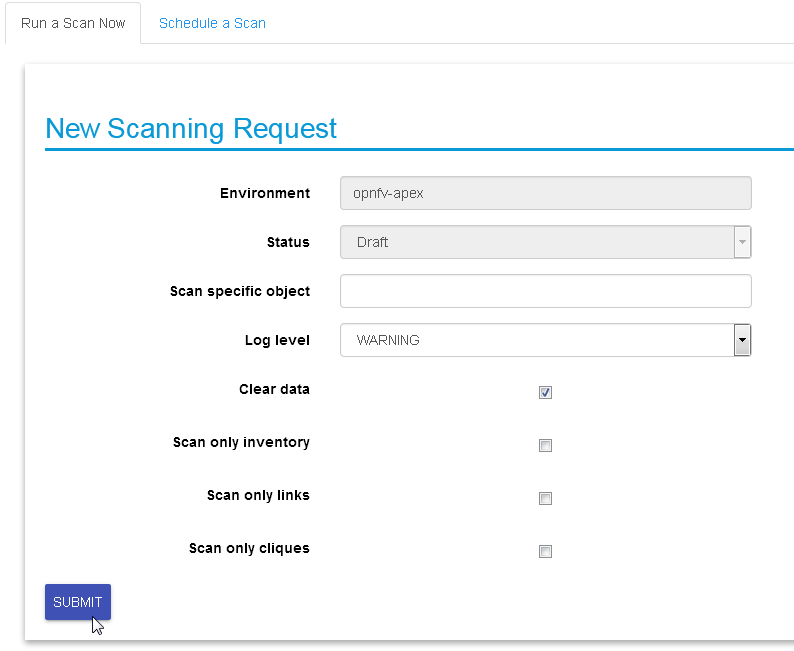

click the scanning button the go into scanning request wizards:

In most cases, the only step needed to send a scanning request is to

use all default options and click the “Submit” button:

Scanning request will propagate into the “scans” collection and will

be handled by scan_manager in the calipso-scan container.

Scan options:

Log level: determines the level and details of the scanning

logs.

Clear data: empty historical inventories related to that

specific environment, before scanning.

Only inventory: creates inventory objects without analyzing for

links.

Only links: create links from pre-existing inventory, does not

build graph topologies.

Only Cliques: create graph topologies from pre-existing

inventory and links.

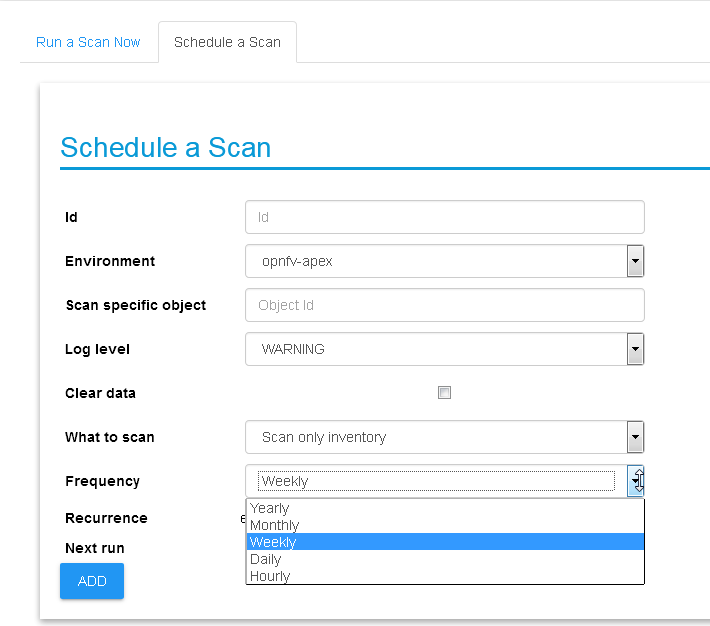

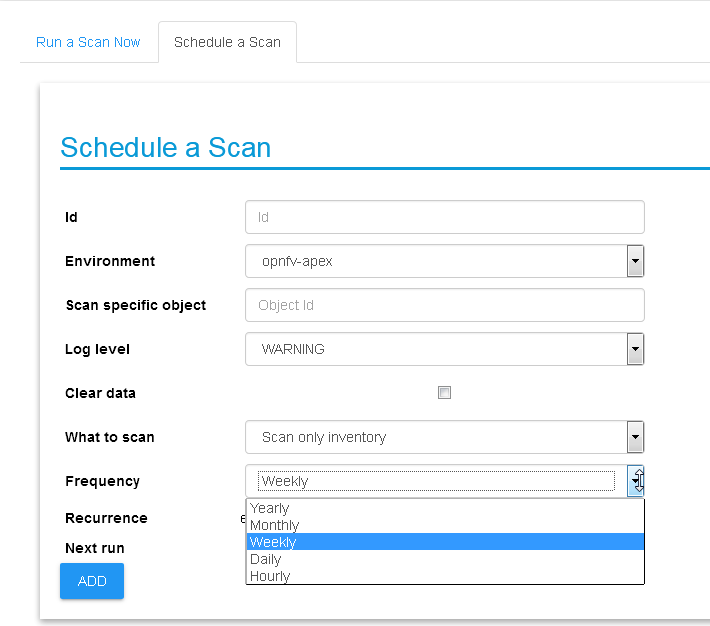

UI scan schedule request

Scanning can be used periodically to dynamically update the

inventories per changes in the underlying virtual environment

infrastructure. This can be defined using scan scheduling and can be

combined with the above one time scanning request.

Scheduled scans has the same options as in single scan request,

while choosing a specific environment to schedule on and providing

frequency details, timer is counted from the submission time, scan

scheduling requests are propagated to the “scheduled_scans”

collection in the Calispo mongoDB and handled by scan_manager in

the calispo-scan container.

API scanning request

Follow api-guide for details on submitting scanning request through

Calipso API.

CLI scanning in the calipso-scan container

When using the UI for scanning messages are populated in the

“Messages” menu item and includes several details for successful

scanning and some alerts. When more detailed debugging of the

scanning process is needed, administrator can login directly to the

calispo-scan container and run the scanning manually using CLI:

Login to calispo-scan container running on the installed host:

ssh scan@localhost –p 3002 , using default-password: ‘scan’

Move to the calipso scan application location:

cd /home/scan/calipso_prod/app/discover

Run the scan.py application with the basic default options:

python3 ./scan.py -m /local_dir/calipso_mongo_access.conf -e

Mirantis-8

Default options: -m points to the default location of mongoDB access

details, -e points to the specific environment name, as submitted to

mongoDB through UI or API.

Other optional scanning parameters, can be used for detailed

debugging:

The scan.py script is located in directory app/discover in the

Calipso repository.

To show the help information, run scan.py with –help option, here

is the results

:

Usage: scan.py [-h] [-c [CGI]] [-m [MONGO_CONFIG]] [-e [ENV]] [-t

[TYPE]]

[-y [INVENTORY]] [-s] [-i [ID]] [-p [PARENT_ID]]

[-a [PARENT_TYPE]] [-f [ID_FIELD]] [-l [LOGLEVEL]]

[–inventory_only] [–links_only] [–cliques_only]

[–clear]

Optional arguments:

-h, –help show this help message and exit

-c [CGI], –cgi [CGI]

read argument from CGI (true/false)

(default: false)

-m [MONGO_CONFIG], –mongo_config [MONGO_CONFIG]

name of config file with MongoDB server

access details

-e [ENV], –env [ENV]

name of environment to scan (default: WebEX-

Mirantis@Cisco)

-t [TYPE], –type [TYPE]

type of object to scan (default:

environment)

-y [INVENTORY], –inventory [INVENTORY]

name of inventory collection (default:

‘inventory’)

-s, –scan_self scan changes to a specific object (default:

False)

-i [ID], –id [ID] ID of object to scan (when scan_self=true)

-p [PARENT_ID], –parent_id [PARENT_ID]

ID of parent object (when scan_self=true)

-a [PARENT_TYPE], –parent_type [PARENT_TYPE]

type of parent object (when scan_self=true)

-f [ID_FIELD], –id_field [ID_FIELD]

name of ID field (when scan_self=true)

(default: ‘id’,

use ‘name’ for projects)

-l [LOGLEVEL], –loglevel [LOGLEVEL]

logging level (default: ‘INFO’)

–inventory_only do only scan to inventory (default: False)

–links_only do only links creation (default: False)

–cliques_only do only cliques creation (default: False)

–clear clear all data prior to scanning (default:

False)

A simple scan.py run will look, by default, for a local MongoDB

server. Assuming running this from within the scan container

running, the administrator needs to point it to use the specific

MongoDB server. This is done using the Mongo access config file

created by the installer (see install-guide for details):

./scan.py -m your\_mongo\_access.conf

Environment needs to be specified explicitly, no default environment

is used by scanner.

By default, the inventory collection, named ‘inventory’, along with

the accompanying collections: “links”, “cliques”, “clique_types”

and “clique_constraints” are used to place initial scanning data

results.

As a more granular scan example, for debugging purposes, using

environment “RDO-packstack-Mitaka”, pointing scanning results to

an inventory collection named “RDO”:

The accompanying collections will be automatically created and

renamed accordingly:

“RDO_links”, “RDO_cliques”, “RDO_clique_types” and

“RDO_clique_constraints”.

Another parameter in use here is –clear, which is good for

development: it clears all the previous data from the data

collections (inventory, links & cliques).

scan.py -m your_mongo_access.conf -e RDO-packstack-Mitaka -y RDO

–clear

Log level will provide the necessary details for cases of scan

debugging.

Clique Scanning

For creating cliques based on the discovered objects and links,

clique_types must be defined for the given environment (or a

default “ANY” environment clique_types will be used)

A clique type specifies the link types used in building a clique

(graph topology) for a specific focal point object type.

For example, it can define that for instance objects we want to

have the following link types:

instance-vnic

vnic-vconnector

vconnector-vedge

vedge-host_pnic

host_pnic-network

See calipso-model guide for more details on cliques and links.

As in many cases the same clique types are used, we can simply copy

the clique_types documents from an existing clique_types

collection. For example, using MongoChef:

Click the existing clique types collection

Right click the results area

Choose export

Click ‘next’ all the time (JSON format, to clipboard)

Select JSON format and “Overwrite document with the same _id”

Right click the target collection

Choose import, then JSON and clipboard

- Note that the name of the target collection should have the prefix

name of your collection’s name. For example, you create a

collection named your_test, then your clique types collection’s

name must be your_test_clique_types.

Now run scan.py again to have it create cliques-only from that data.

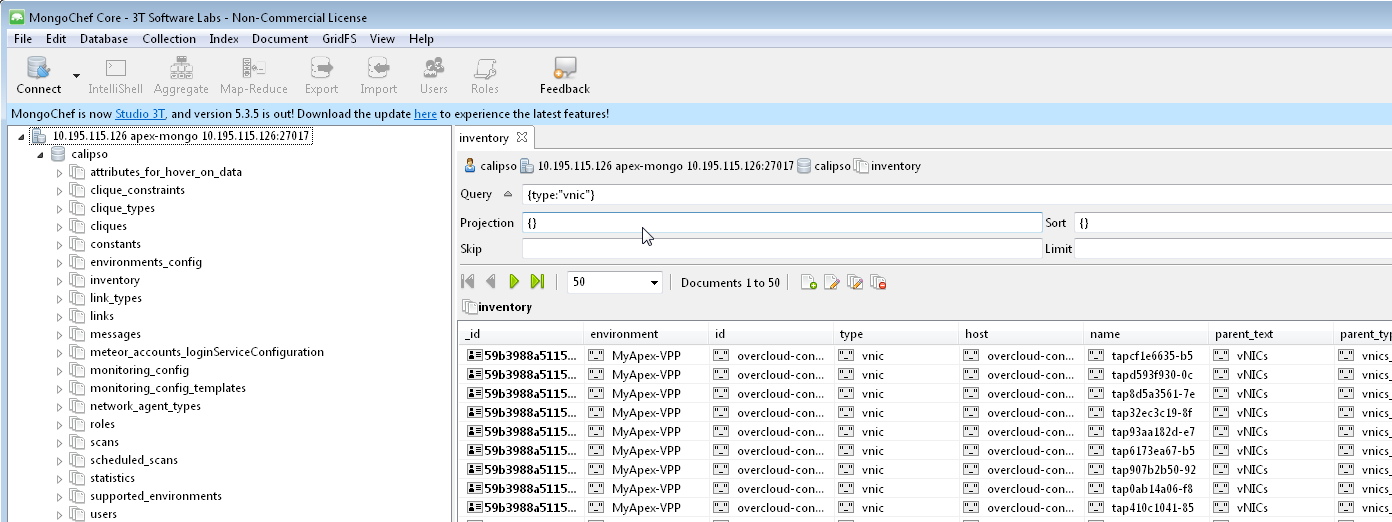

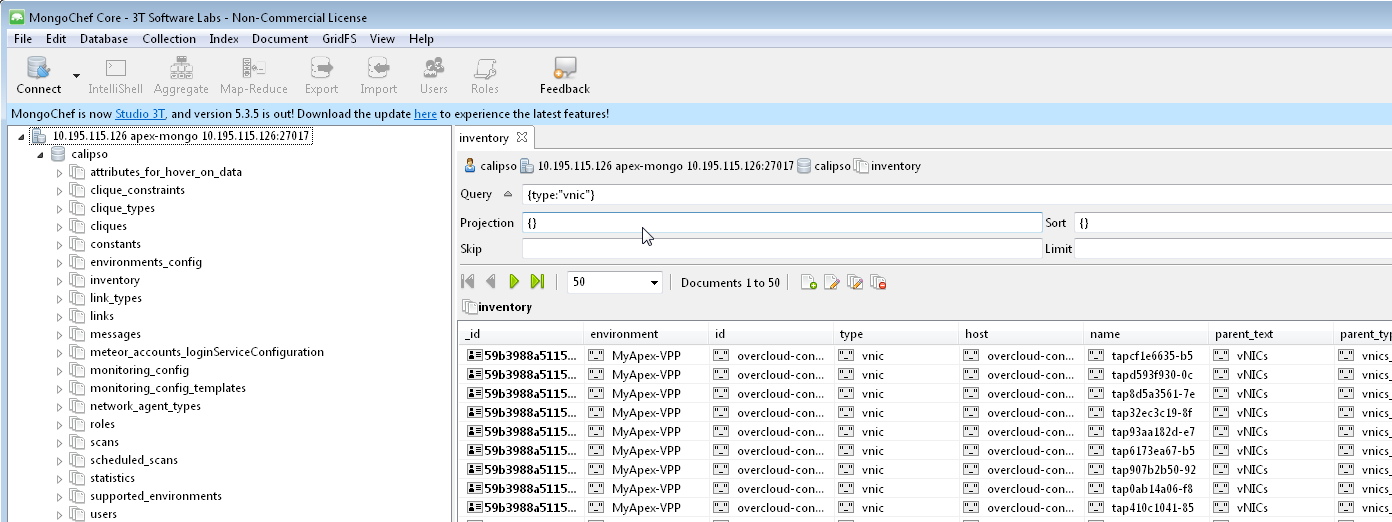

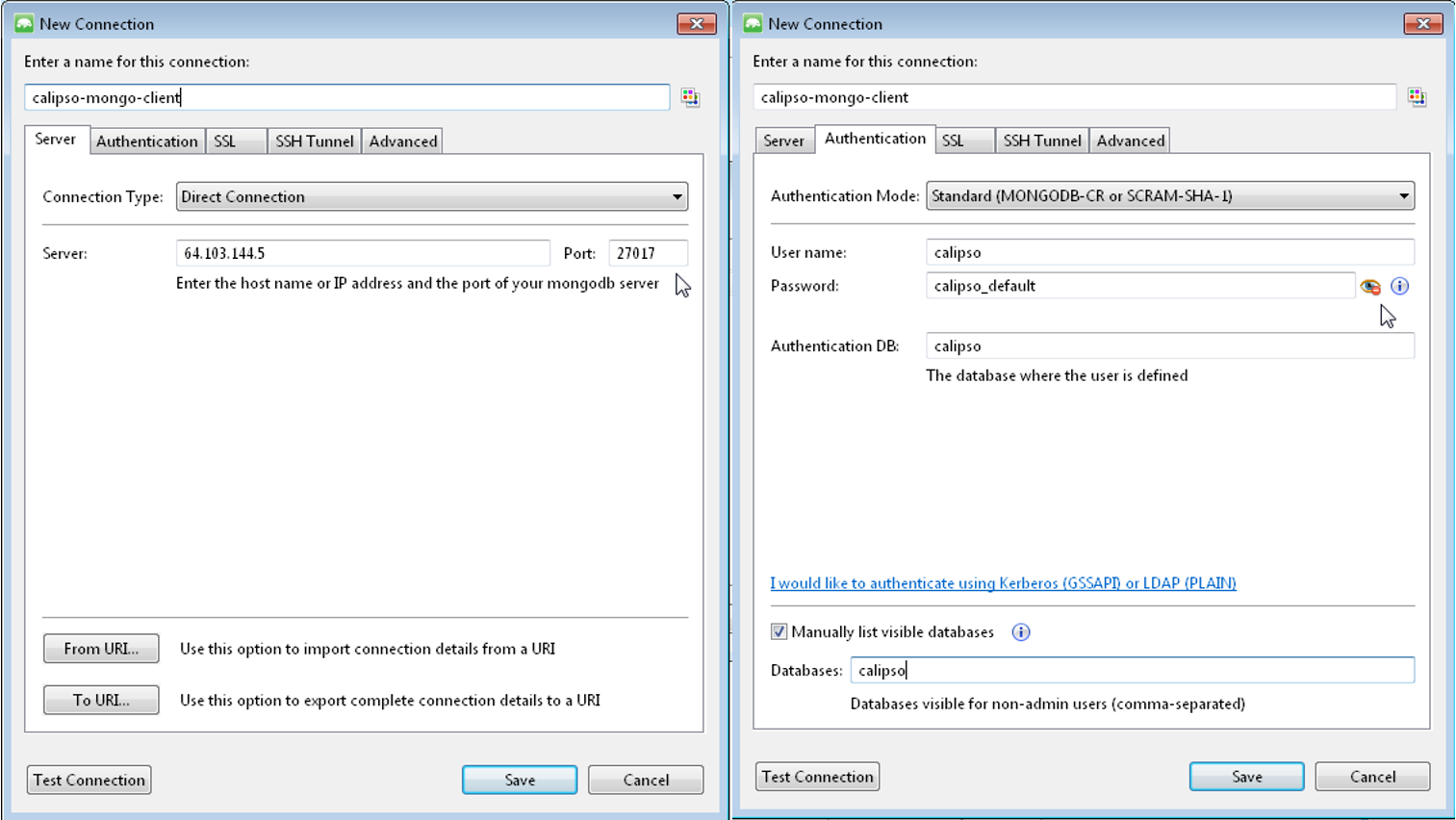

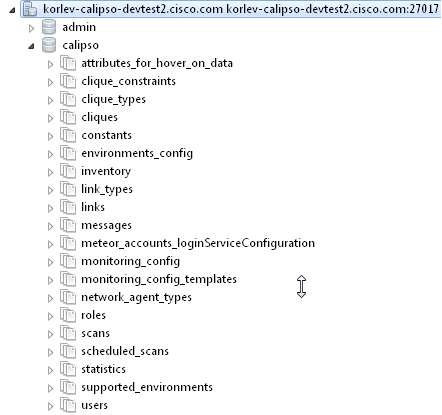

Viewing results

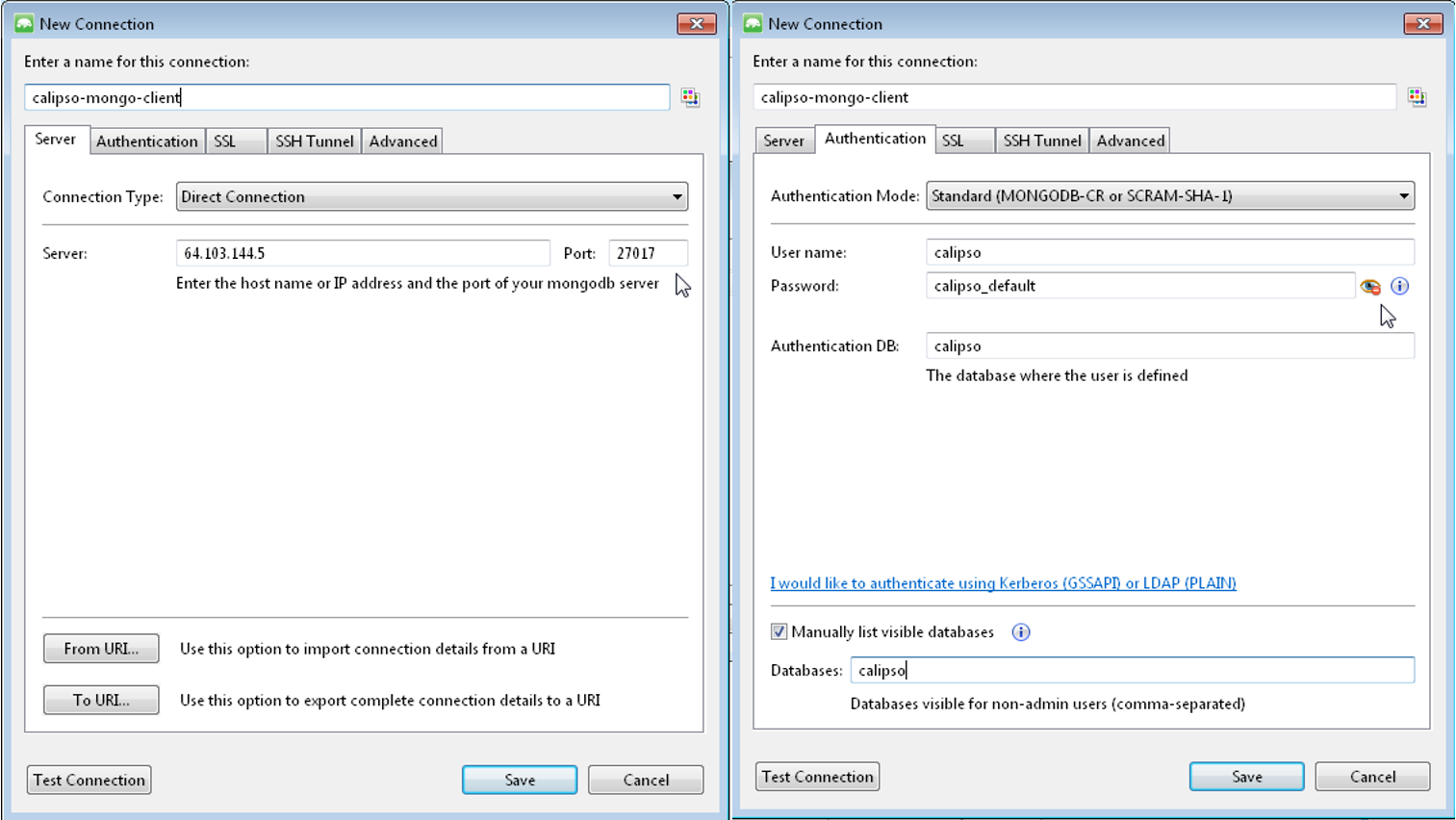

Scan results are written into the collections in the ‘Calispo’ DB on

the MongoDB database.

In our example, we use the MongoDB database server on

“install-hostname”http://korlev-osdna-devtest.cisco.com/,

so we can connect to it by Mongo client, such as Mongochef and

investigate the specific collections for details.

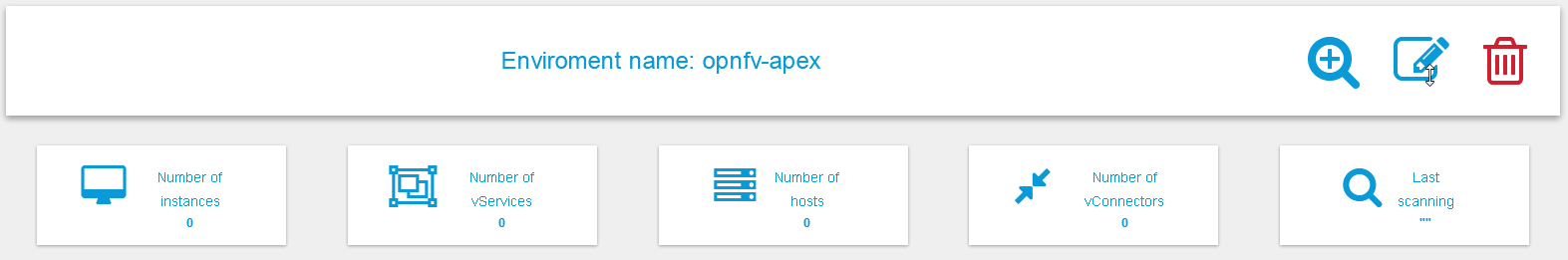

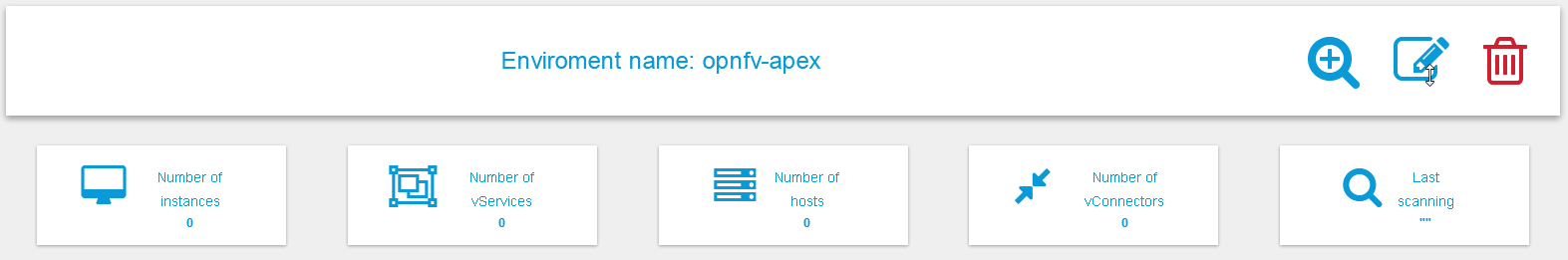

Editing or deleting environments

Inside a specific environment dashboard optional buttons are

available for deleting and editing the environment configurations:

Note: Deleting an environment does not empty the inventories of previous

scan results, this can be accomplished in future scans when using the

–clear options.

Event-based scanning

For dynamic discovery and real-time updates of the inventories

Calipso also provides event-based scanning with event_manager

application in the calipso-listen container.

Event_manager listens to the VIM AMQP BUS and based on the events

updates the inventories and also kickoff automatic scanning of a

specific object and its dependencies.

Enabling event-based scanning

Per environment, administrator can define the option of event-based

scanning, using either UI or API to configure that parameter in the

specific environment configuration:

In cases where event-based scanning is not supported for a specific

distribution variance the checkbox for event based scan will be

grayed out. When checked, the AMQP section becomes mandatory.

This behavior is maintained through the “supported_environments”

collection and explained in more details in the calipso-model

document.

Event-based handling details

The event-based scanning module needs more work to adapt to the

changes in any specific distribution variance, this is where we

would like some community support to help us maintain data without

the need for full or partial scanning through scheduling.

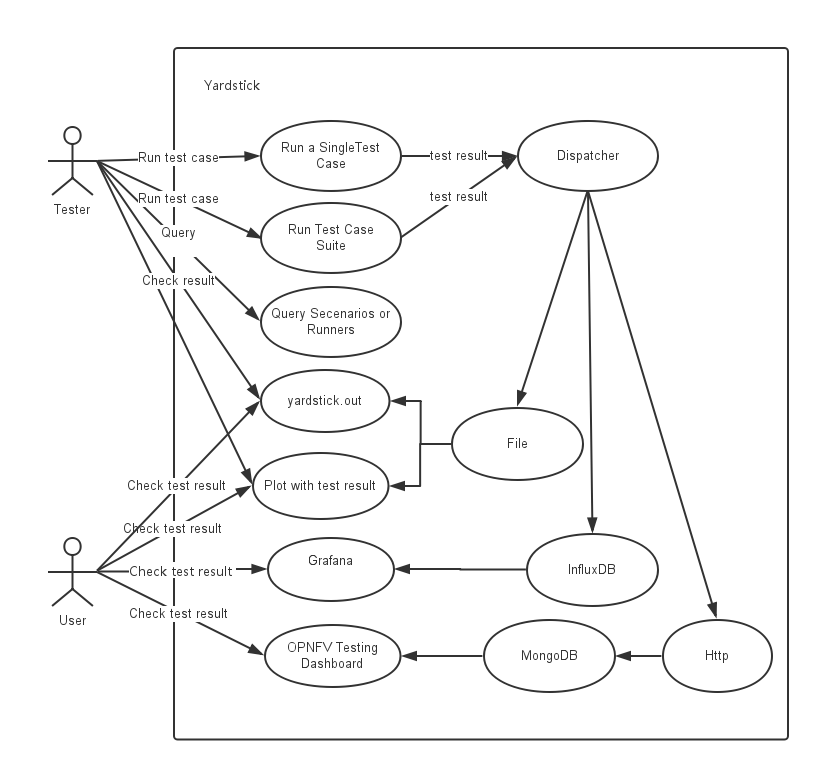

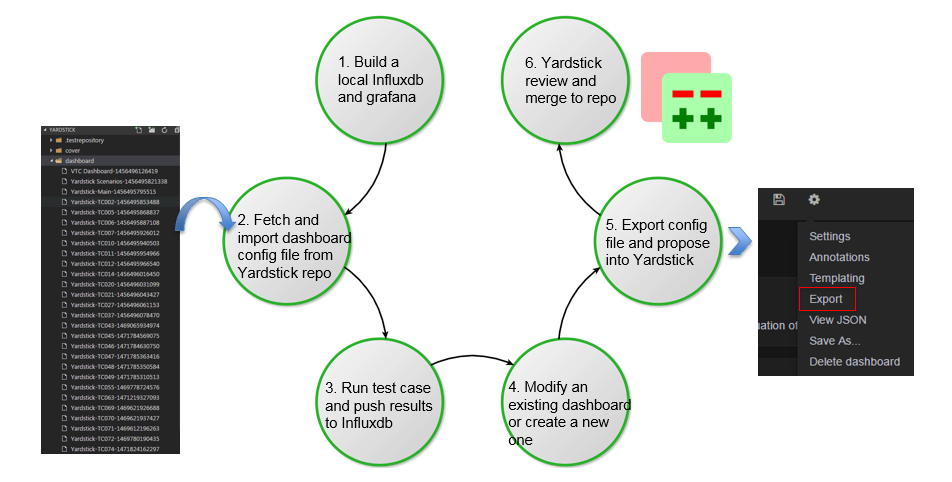

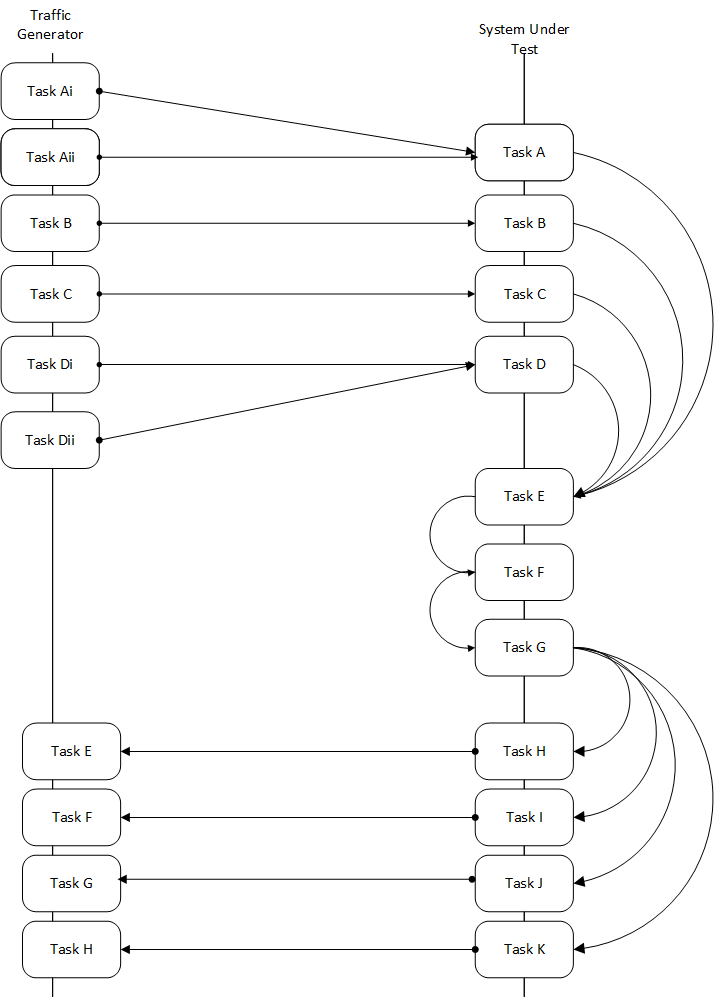

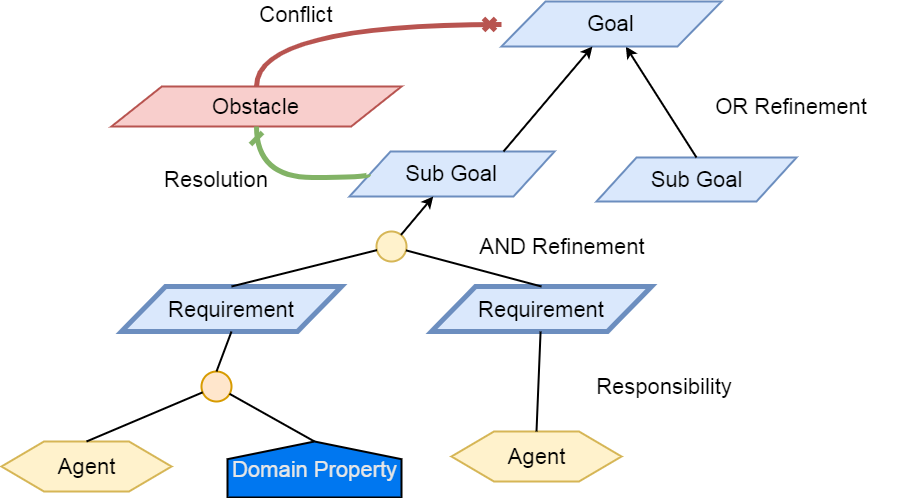

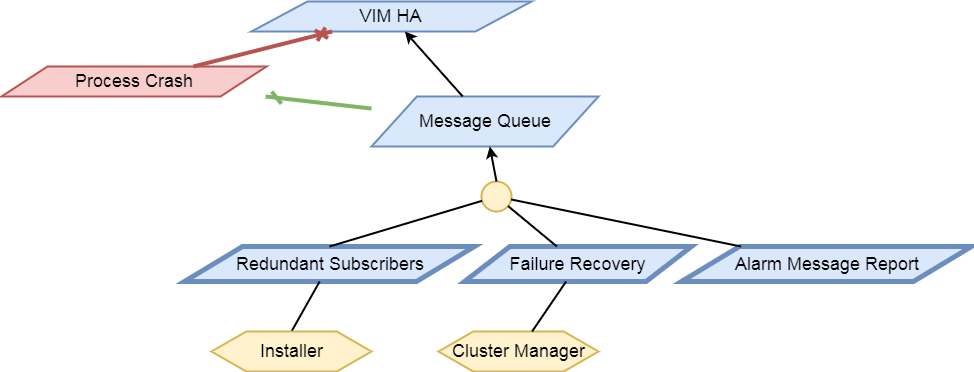

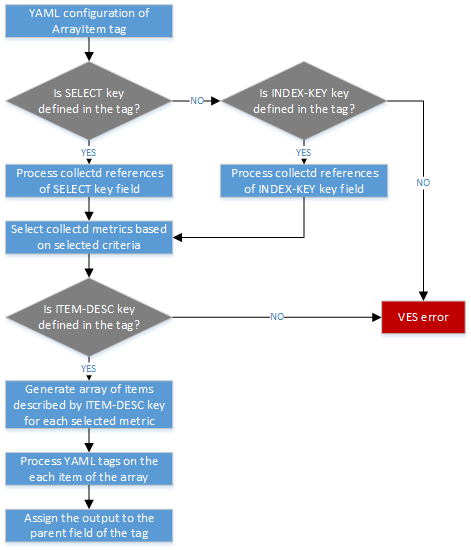

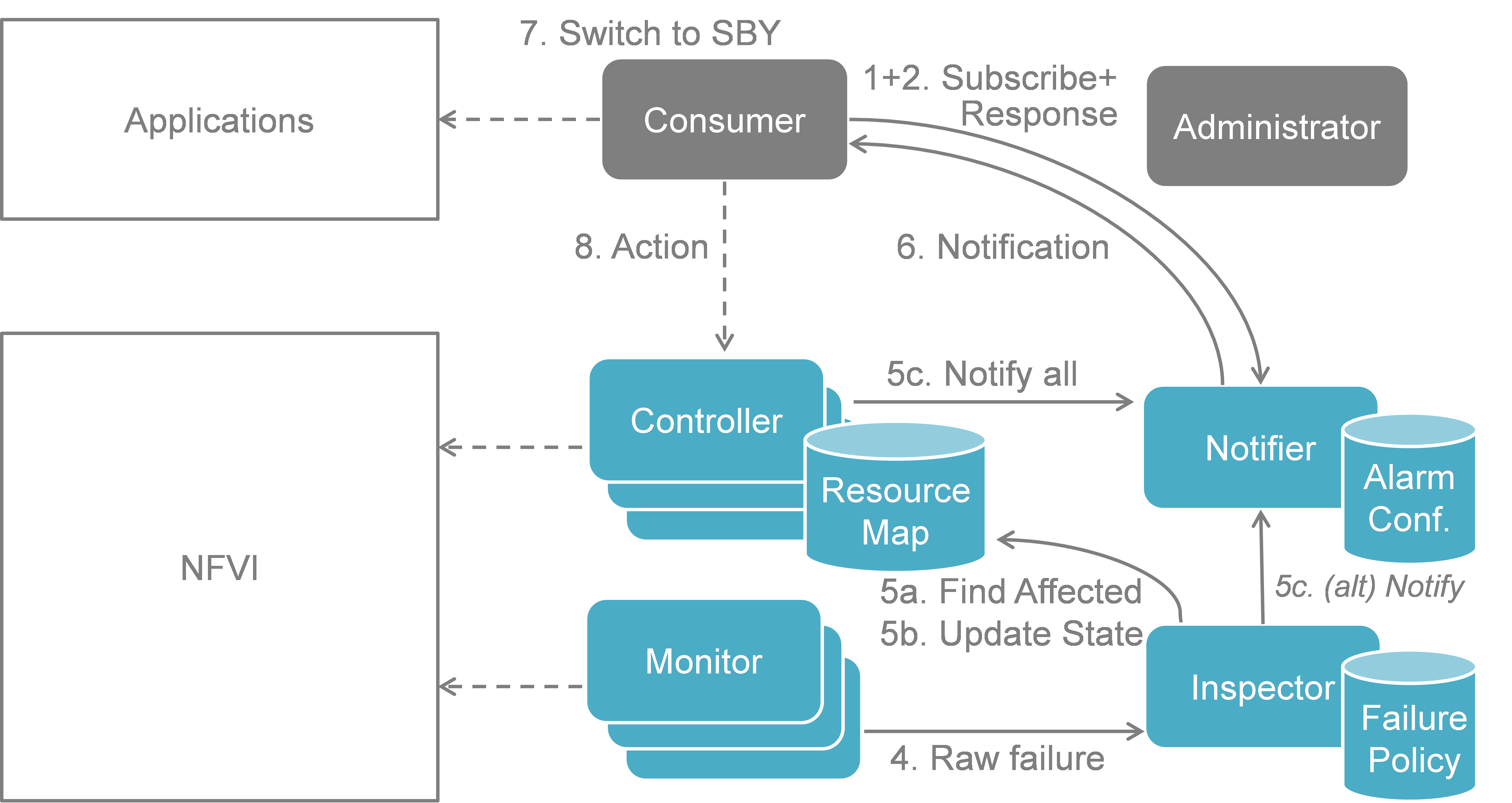

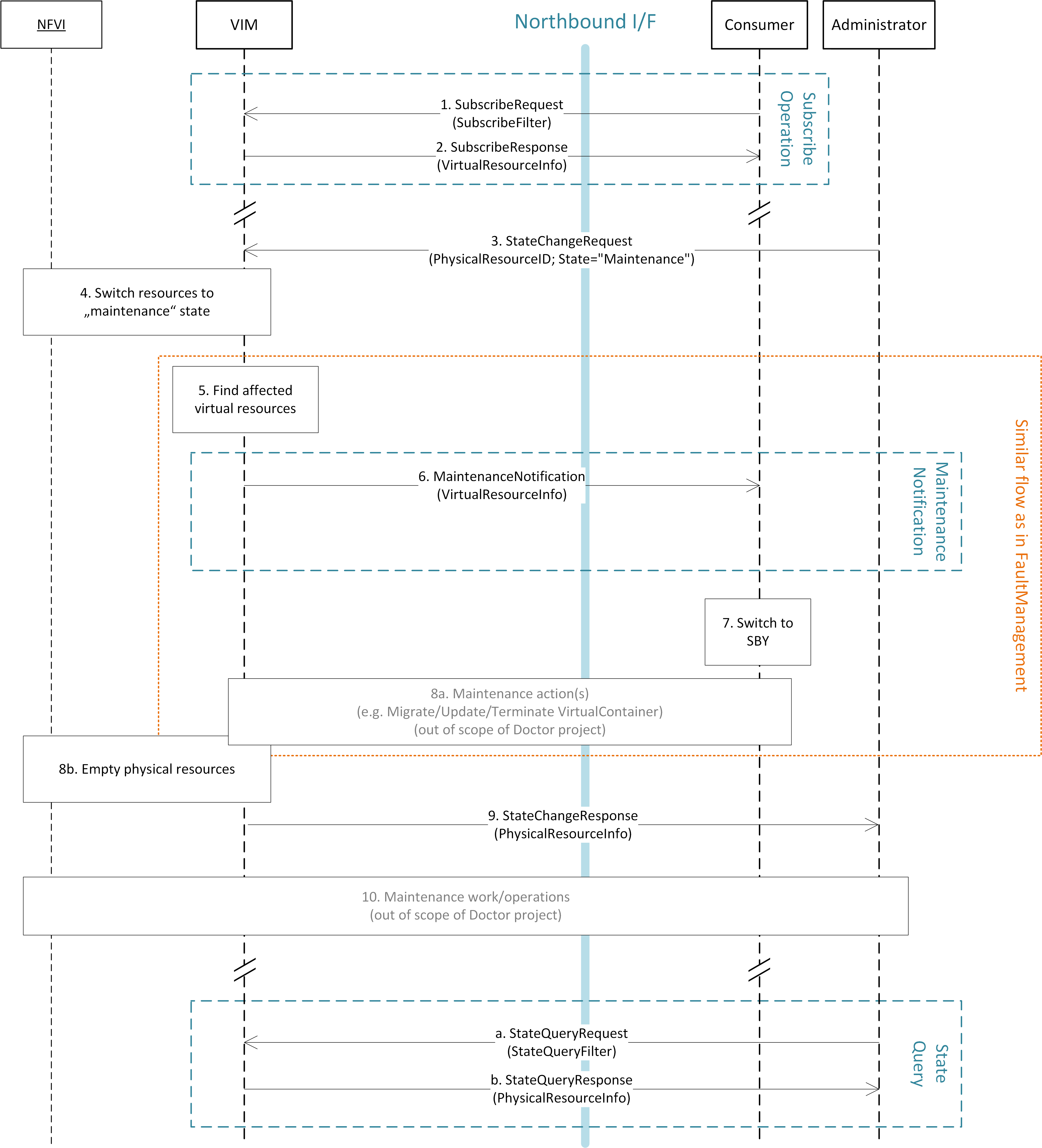

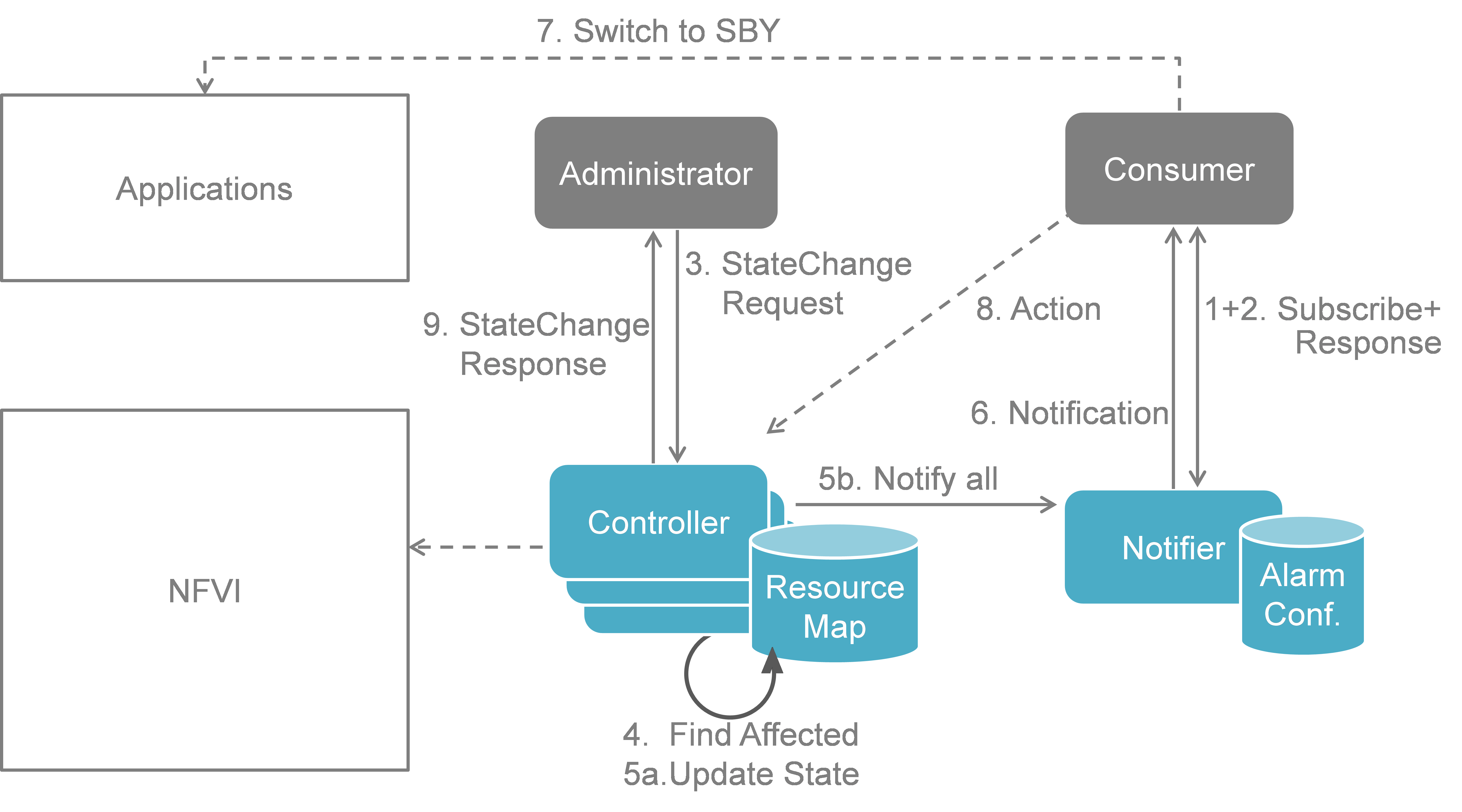

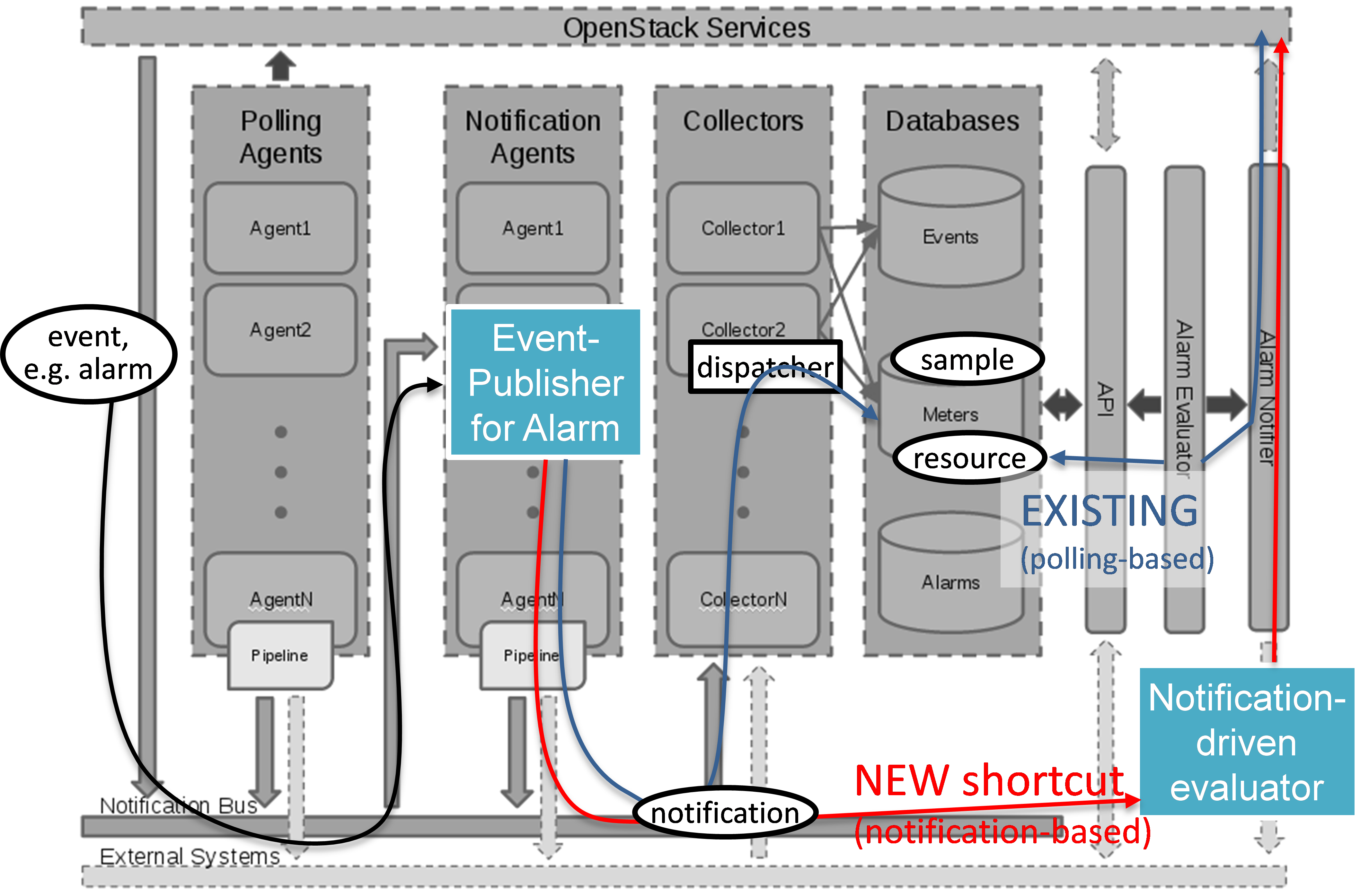

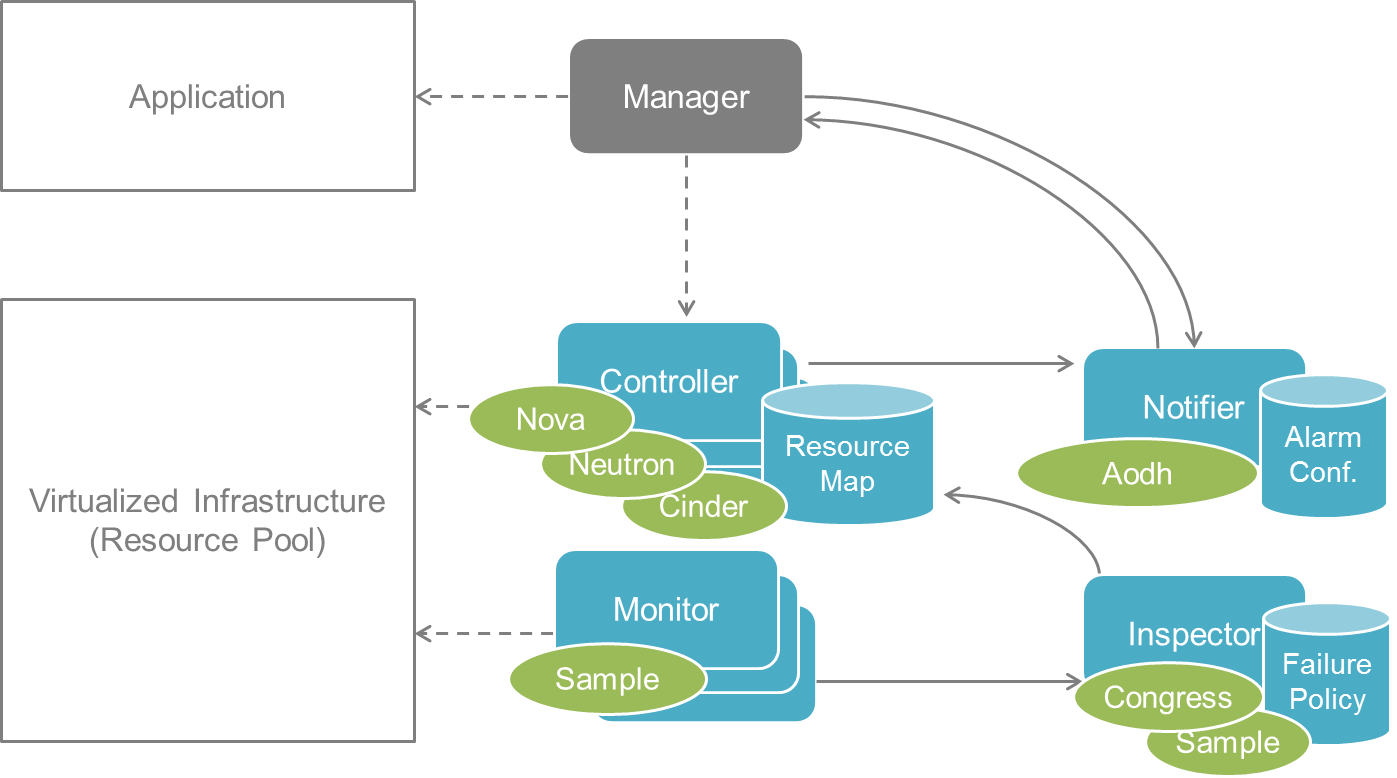

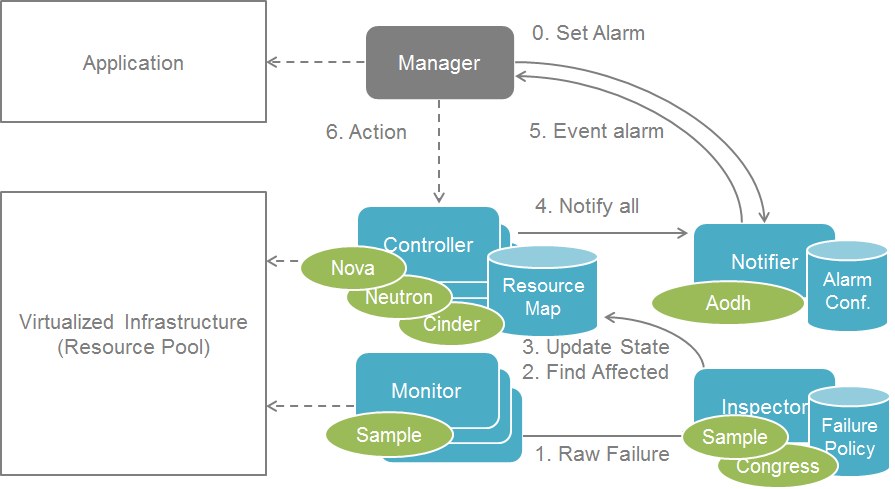

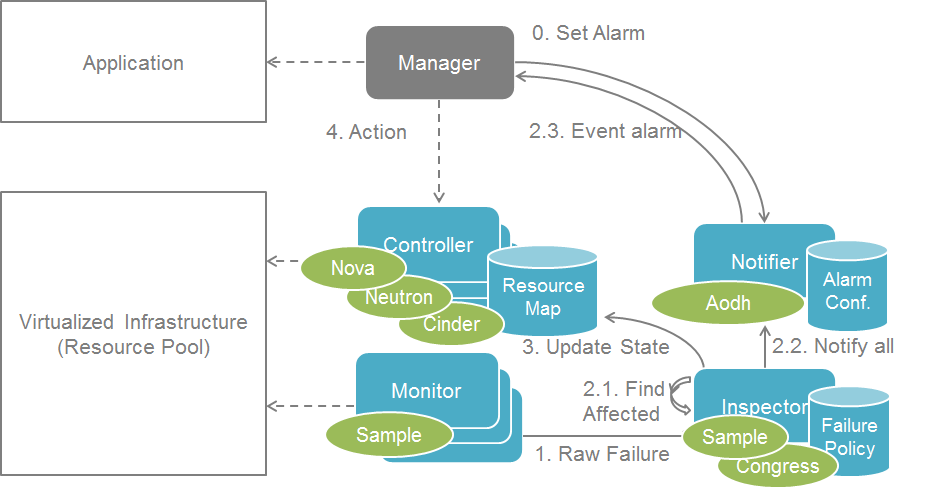

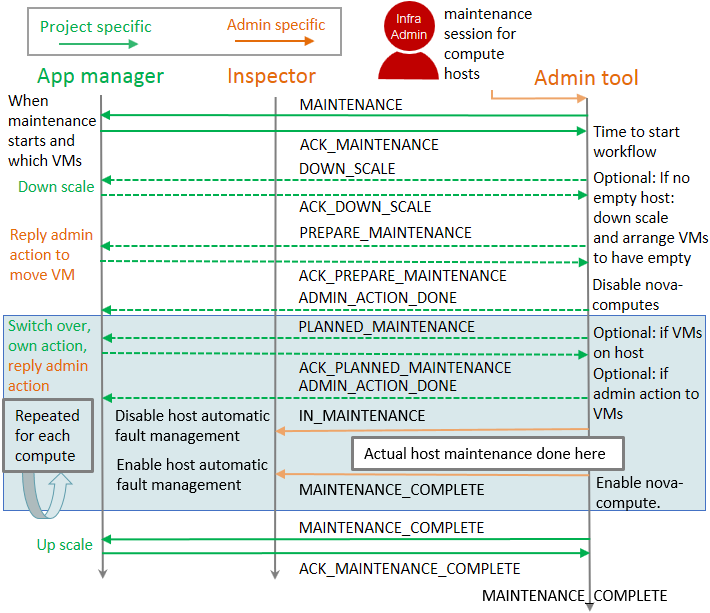

The following diagram illustrates event-based scanning module

functions on top of the regular scanning module functions:

In the following tables, some of the current capabilities of

event-handling and event-based scanning in Calipso are explained: (NOTE: see pdf version of this guide for better tables view)

| # |

Event name |

AMQP event |

Handler |

Workflow |

Scans |

Notes |

|---|

| Instance |

| 1 |

Create Instance |

compute.instance.create.end |

EventInstanceAdd |

- Get instances_root from inventory

- If instance_root is None, log error, return None

- Create ScanInstancesRoot object.

- Scan instances root (and only new instance as a child)

- Scan from queue

- Get host from inventory

- Scan host (and only children of types ‘vconnectors_folder’ and ‘vedges_folder’

- Scan from queue

- Scan links

- Scan cliques

- Return True

|

Yes

{by object id: 2,

links: 1,

cliques: 1,

from queue: ?}

|

|

| 2 |

Update Instance |

compute.instance.rebuild.end

compute.instance.update

|

EventInstanceUpdate |

- If state == ‘building’, return None

- If state == ‘active’ and old_state == ‘building’, call EventInstanceAdd (see #1), return None

- If state == ‘deleted’ and old_state == ‘active’, call EventInstanceDelete (see #2), return None

- Get instance from inventory

- If instance is None, log error, return None

- Update several fields in instance.

- If name_path has changed, update relevant names and name_path for descendants

- Update instance in db

- Return None

|

Yes (if #1 is used)

No (otherwise)

|

The only fields that are updated: name, object_name and name_path |

| 3 |

Delete Instance |

compute.instance.delete.end |

EventInstanceDelete (EventDeleteBase) |

- Extract id from payload

- Execute self.delete_handler()

|

No |

delete_handler() is expanded later |

| Instance Lifecycle |

| 4 |

Instance Down |

compute.instance.shutdown.start

compute.instance.power_off.start

compute.instance.suspend.start

|

Not implemented |

|

|

|

| 5 |

Instance Up |

compute.instance.power_on.end

compute.instance.suspend.end

|

Not implemented |

|

|

|

| Region |

| 6 |

Add Region |

servergroup.create |

Not implemented |

|

|

|

| 7 |

Update Region |

servergroup.update

servergroup.addmember

|

Not implemented |

|

|

|

| 8 |

Delete Region |

servergroup.delete |

Not implemented |

|

|

|

| Network |

| 9 |

Add Network |

network.create.end |

EventNetworkAdd |

- If network with specified id already exists, log error and return None

- Parse incoming data and create a network dict

- Save network in db

- Return None

|

No |

|

| 10 |

Update Network |

network.update.end |

EventNetworkUpdate |

- Get network_document from db

- If network_document doesn’t exist, log error and return None

- If name has changed, update relevant names and name_path for descendants

- Update admin_state_up from payload

- Update network_document in db

|

No |

The only fields that are updated: name, object_name, name_path and admin_state_up |

| 11 |

Delete Network |

network.delete.end |

EventNetworkDelete (EventDeleteBase) |

- Extract network_id from payload

- Execute self.delete_handler()

|

No |

delete_handler() is expanded later |

| Subnet |

| 12 |

Add Subnet |

subnet.create.end |

EventSubnetAdd |

- Get network_document from db

- If network_document doesn’t exist, log error and return None

- Update network_document with new subnet

- If dhcp_enable is True, we update parent network (*note 1*) and add the following children docs: ports_folder, port_document, network_services_folder, dhcp_document, vnic_folder and vnic_document.

- Add links for pnics and vservice_vnics (*note 2*)

- Scan cliques

- Return None

|

Yes {cliques: 1} |

- I don’t fully understand what *these lines* do. We make sure ApiAccess.regions variable is not empty, but why? The widespread usage of static variables is not a good sign anyway.

- For some reason *the comment* before those lines states we “scan for links” but it looks like we just add them.

|

| 13 |

Update Subnet |

subnet.update.end |

EventSubnetUpdate |

- Get network_document from db

- If network_document doesn’t exist, log error and return None

- If we don’t have a matching subnet in network_document[‘subnets’], return None

- If subnet has enable_dhcp set to True and it wasn’t so before:

4.1. Add dhcp document

4.2. Make sure ApiAccess.regions is not empty

4.3. Add port document

4.4. If port has been added, add vnic document, add links and scan cliques.

- Is subnet has enable_dhcp set to False and it wasn’t so before:

5.1. Delete dhcp document

5.2. Delete port binding to dhcp server if exists

- If name hasn’t changed, update it by its key in subnets. Otherwise, set it by the new key in subnets. (*note 1*)

|

Yes {cliques: 1} (only if dhcp status has switched to True) |

- If subnet name has changed, we set it in subnets object inside network_document by new key, but don’t remove the old one. A bug?

|

| 14 |

Delete Subnet |

subnet.delete.end |

EventSubnetDelete |

- Get network_document from db

- If network_document doesn’t exist, log error and return None

- Delete subnet id from network_document[‘subnet_ids’]

- If subnet exists in network_document[‘subnets’], remove its cidr from network_document[‘cidrs’]

and remove itself from network_document[‘subnets’]

- Update network_document in db

- If no subnets are left in network_document, delete related vservice dhcp, port and vnic documents

|

No |

|

| Port |

| 15 |

Create Port |

port.create.end |

EventPortAdd |

- Check if ports folder exists, create if not.

- Add port document to db

- If ‘compute’ is not in port[‘device_owner’], return None

- Get old_instance_doc (updated instance document) from db

- Get instances_root from db

- If instances_root is None, log error and return None (*note 1*)

- Use an ApiFetchHostInstances fetcher to get data for instance with id equal to the device from payload.

- If such instance exists, update old_instance_doc’s fields network_info, network and possibly mac_address with their counterparts from fetched instance. Update old_instance_doc in db

- Use a CliFetchInstanceVnics/CliFetchInstanceVnicsVpp fetcher to get vnic with mac_address equal to the port’s mac address

- If such vnic exists, update its data and update in db

- Add new links using FindLinksForInstanceVnics and FindLinksForVedges classes

- Scan cliques

- Return True

|

Yes {cliques: 1}

(only if ‘compute’ is in port[‘device_owner’] and instance_root is not None (see steps 3 and 6))

|

- The port and (maybe) port folder will still persist in db even if we abort the execution on step 6. See idea 1 for details.

|

| 16 |

Update Port |

port.update.end |

EventPortUpdate |

- Get port from db

- If port doesn’t exist, log error and return None

- Update port data (name, admin_state_up, status, binding:vnic_type) in db

- Return None

|

No |

|

| 17 |

Delete Port |

port.delete.end |

EventPortDelete (EventDeleteBase) |

- Get port from db

- If port doesn’t exist, log error and return None

- If ‘compute’ is in port[‘device_owner’], do the following:

3.1. Get instance document for the port from db. If it doesn’t exist, to step 4.

3.2. Remove port from network_info of instance

3.3. If it was the last port for network in instance doc, remove network from the doc

3.4. If port’s mac_address is equal to instance_doc’s one, then fetch an instance with the same id as instance_doc using ApiFetchHostInstances fetcher. If instance exists and ‘mac_address’ not in instance, set instance_doc’s mac_address to None

3.5. Save instance_docs in db

- Delete port from db

- Delete related vnic from db

- Execute self.delete_handler(vnic) for vnic

|

No |

delete_handler() is expanded later |

| Router |

| 18 |

Add Router |

router.create.end |

EventRouterAdd |

- Get host by id from db

- Fetch router_doc using a CliFetchHostVservice

- If router_doc contains ‘external_gateway_info’:

3.1. Add router document (with network) to db

3.2. Add children documents:

3.3. If no ports folder exists for this router, create one

3.4. Add router port to db

3.5. Add vnics folder for router to db

3.6. If port was successfully added (3.4), try to add vnic document for router to db two times (??)

3.7. If port wasn’t successfully added, try adding vnics_folder again (???) (*note 1*)

3.8. If step 3.7 returned False (*Note 2*), try to add vnic_document again (??)

- Add router document (without network) to db (Note 3)

- Add relevant links for the new router

- Scan cliques

- Return None

|

Yes {cliques: 1} |

- Looks like code author confused a lot of stuff here. This class needs to be reviewed thoroughly.

- Step 3.7 never returns anything for some reason (a bug?)

- Why are we adding router document again? It shouldn’t be added again on step 4 if it was already added on step 3.1. Probably an ‘else’ clause is missing

|

| 19 |

Update Router |

router.update.end |

EventRouterUpdate |

- Get router_doc from db

- If router_doc doesn’t exist, log error and return None

- If payload router data doesn’t have external_gateway_info, do the following:

3.1. If router_doc has a ‘gw_port_id’ key, delete relevant port.

3.2. If router_doc has a ‘network’:

3.2.1. If a port was deleted on step 3.1, remove its ‘network_id’ from router_doc[‘network’]

3.2.2. Delete related links

- If payload router data has external_gateway_info, do the following:

4.1. Add new network id to router_doc networks

4.2. Use CliFetchHostVservice to fetch gateway port and update it in router_doc

4.3. Add children documents for router (see #18 steps 3.2-3.8)

4.4. Add relevant links

- Update router_doc in db

- Scan cliques

- Return None

|

Yes {cliques: 1} |

|

| 20 |

Delete Router |

router.delete.end |

EventRouterDelete (EventDeleteBase) |

- Extract router_id from payload

- Execute self.delete_handler()

|

No |

delete_handler() is expanded later |

| Router Interface |

| 21 |

Add Router Interface |

router.interface.create |

EventInterfaceAdd |

- Get network_doc from db based on subnet id from interface payload

- If network_doc doesn’t exist, return None

- Make sure ApiAccess.regions is not empty (?)

- Add router-interface port document in db

- Add vnic document for interface. If unsuccessful, try again after a small delay

- Update router:

6.1. If router_doc is an empty type, log an error and continue to step 7 (*Note 1*)

6.2. Add new network id to router_doc network list

6.3. If gateway port is in both router_doc and db, continue to step 6.7

6.4. Fetch router using CliFetchHostVservice, set gateway port in router_doc to the one from fetched router

6.5. Add gateway port to db

6.6. Add vnic document for router. If unsuccessful, try again after a small delay

6.7. Update router_id in db

- Add relevant links

- Scan cliques

- Return None

|

Yes {cliques: 1} |

- Log message states that we should abort interface adding, though the code does nothing to support that. Moreover, router_doc can’t be empty at that moment because it’s referenced before.

|

| 22 |

Delete Router Interface |

router.interface.delete |

EventInterfaceDelete |

- Get port_doc by payload port id from db

- If port_doc doesn’t exist, log an error and return None

- Update relevant router by removing network id of port_doc

- Delete port by executing EventPortDelete().delete_port()

|

No |

|

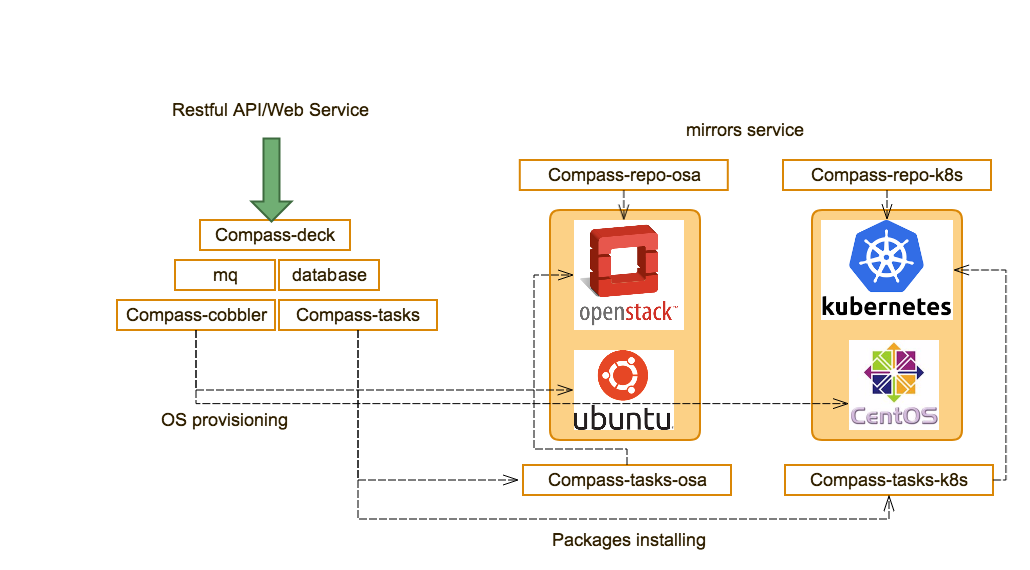

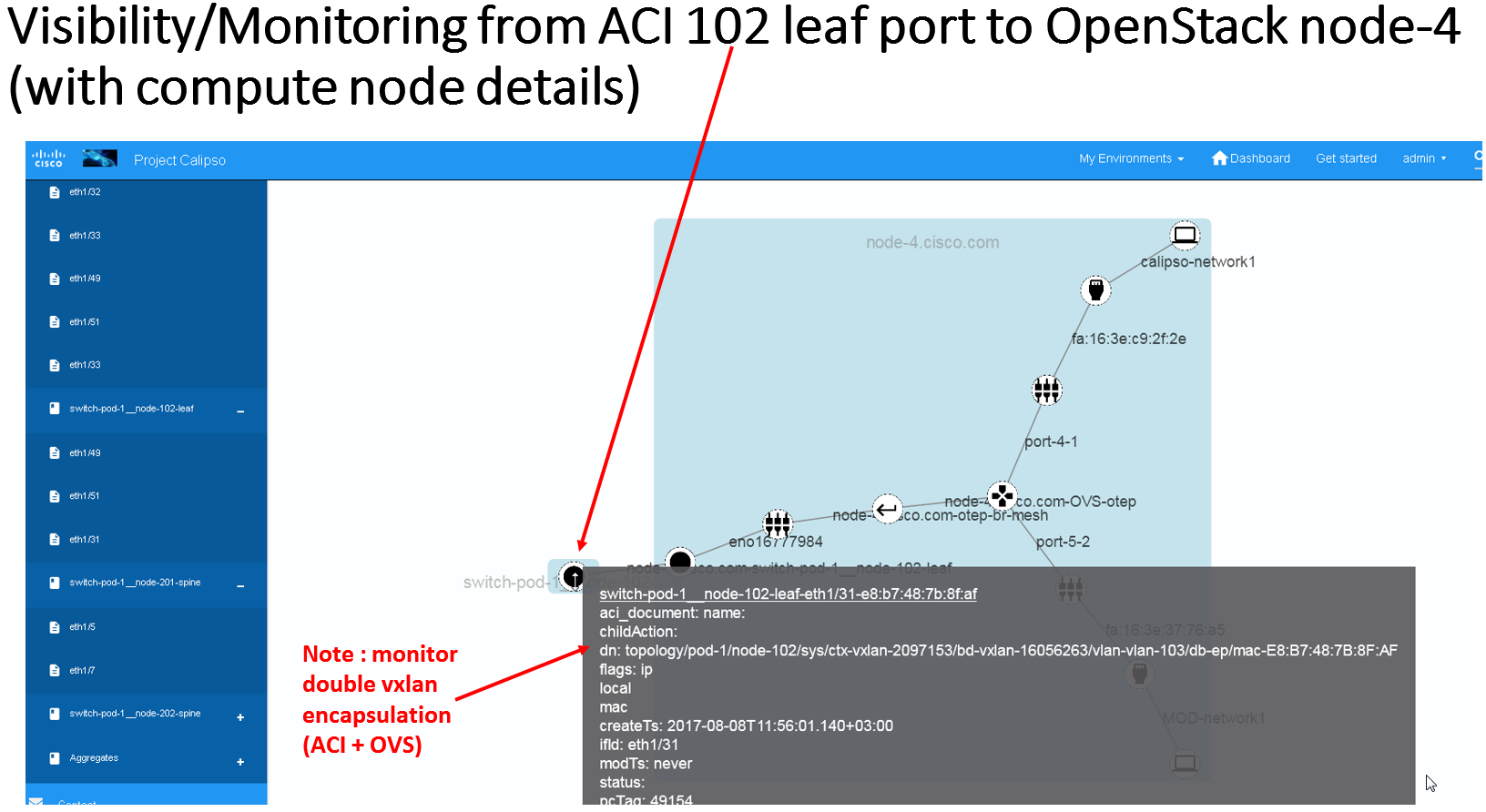

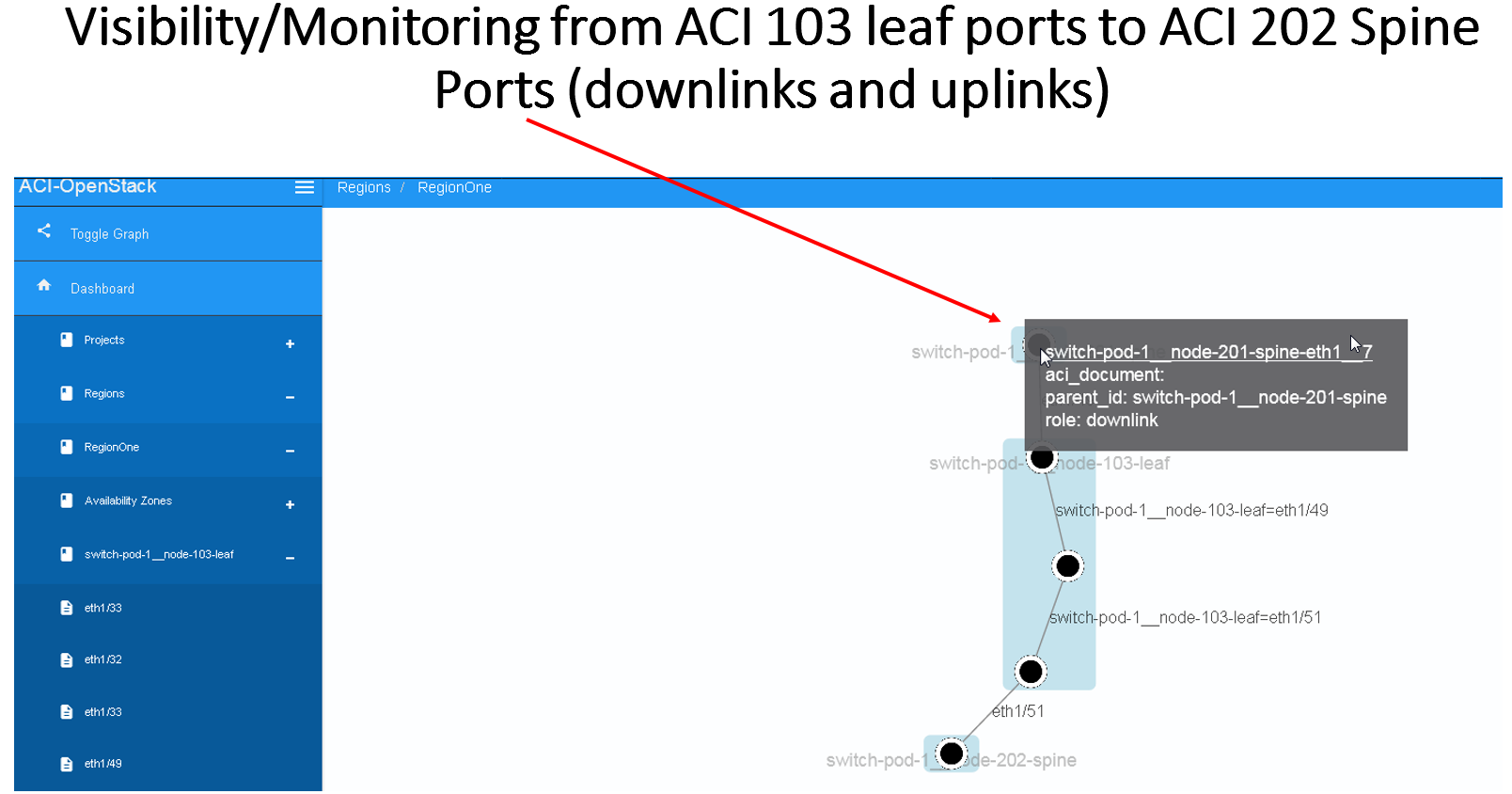

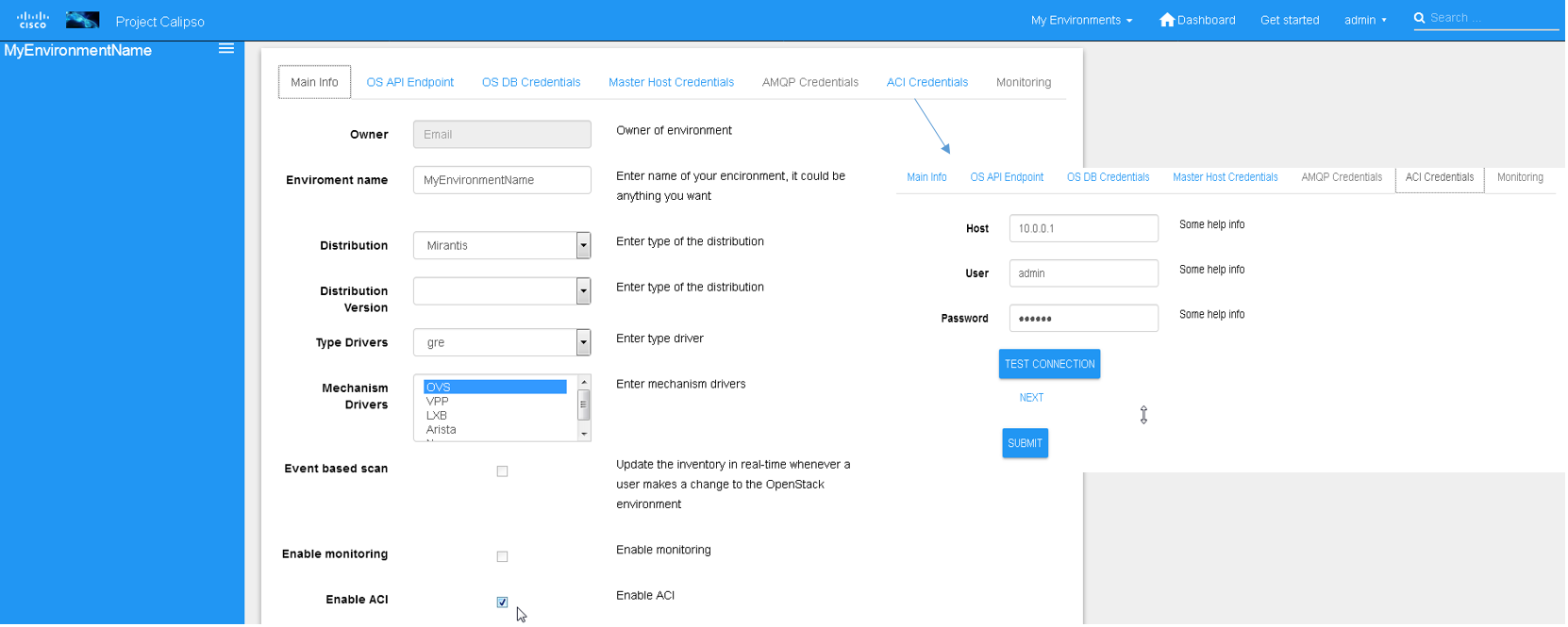

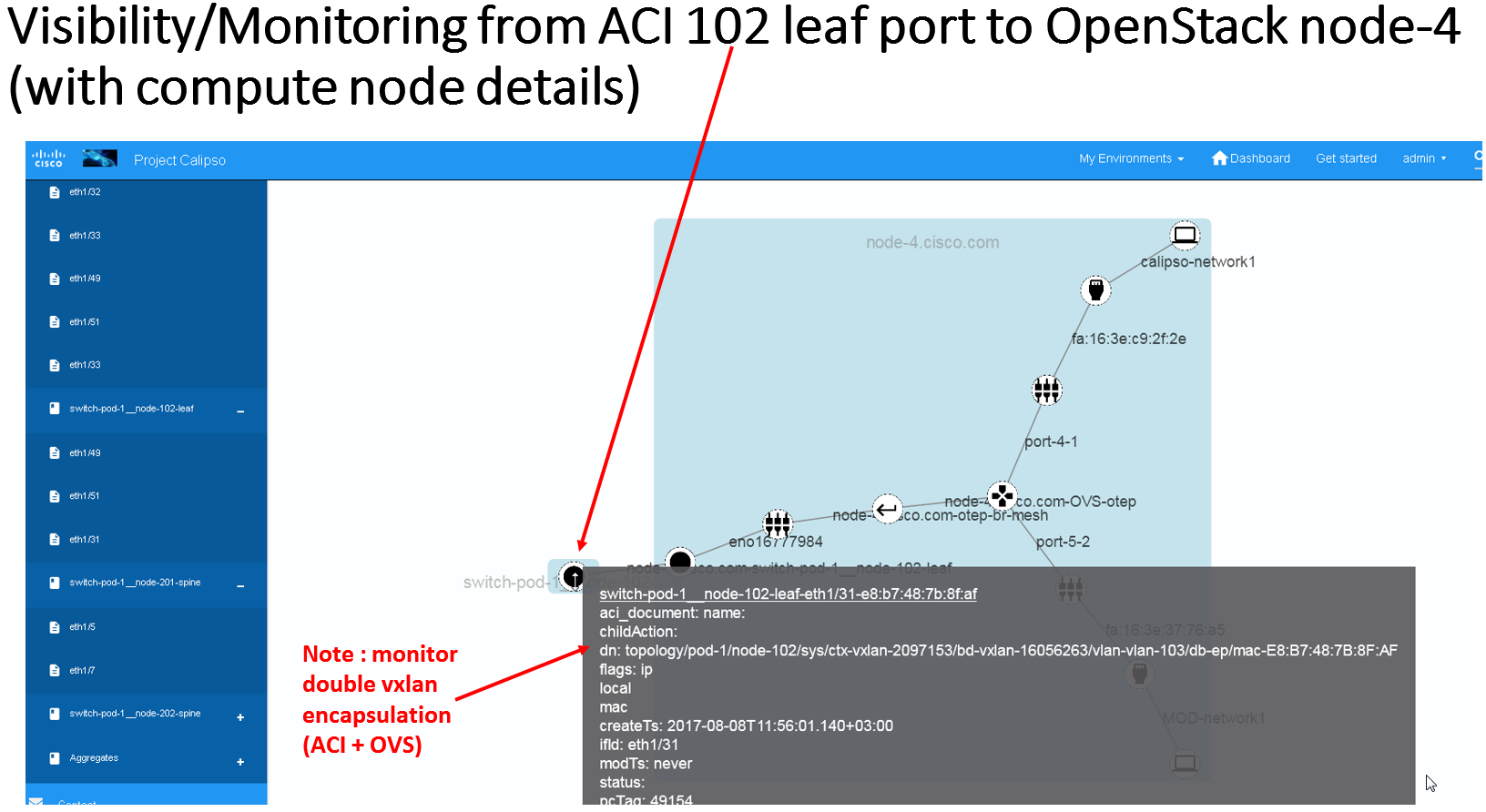

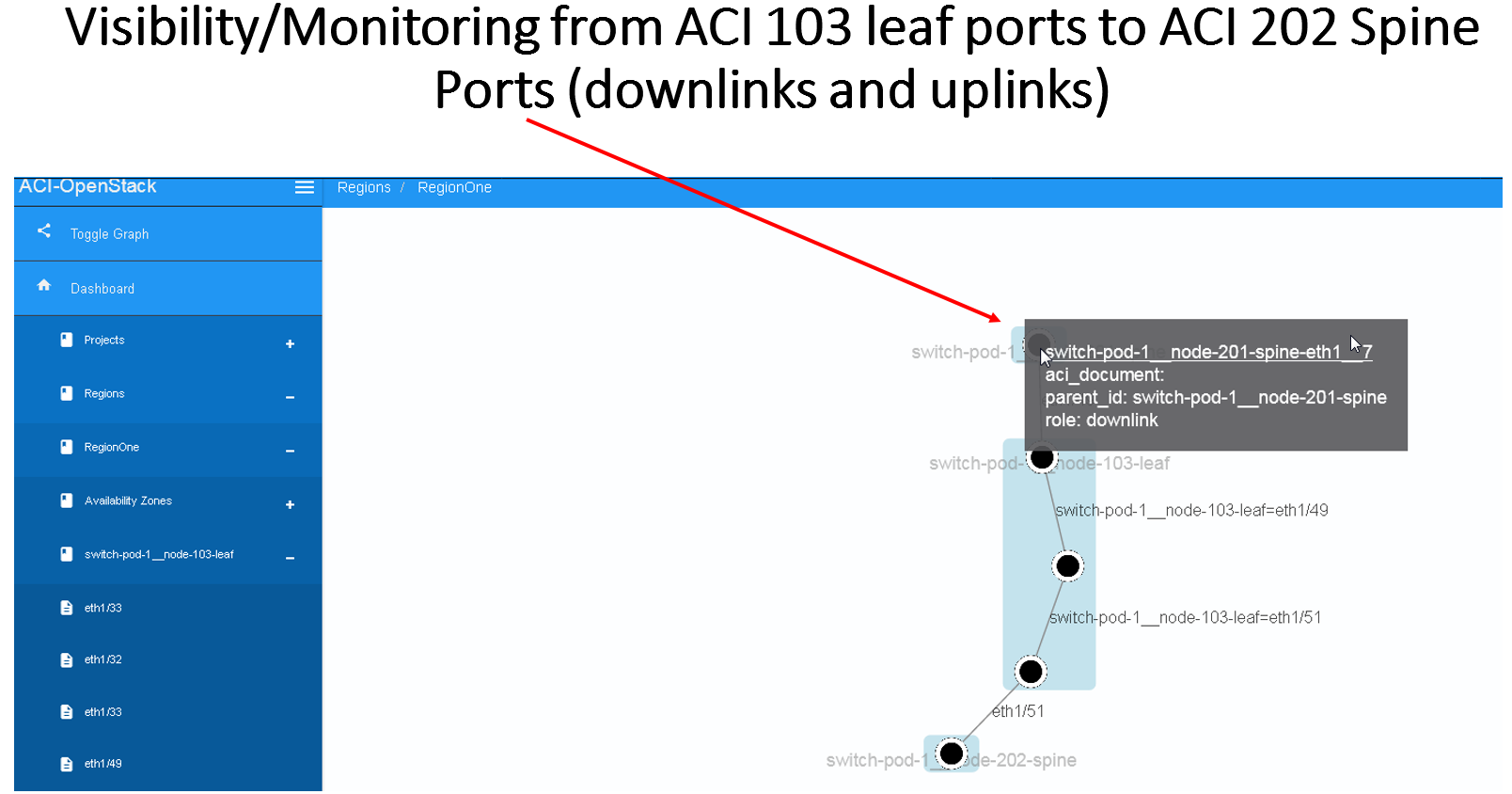

ACI scanning

For dynamic discovery and real-time updates of physical switches and

connections between physical switches ports and host ports (pNICs),

Calispo provides an option to integrate with the Cisco data center

switches controller called “ACI APIC”.

This is an optional parameter and once checked details on the ACI

server and API credentials needs to be provided:

The results of this integration (when ACI switches are used in that

specific VIM environment) are extremely valuable as it maps out and

monitors virtual-to-physical connectivity across the entire data

center environment, both internal and external.

Example graph generated in such environments:

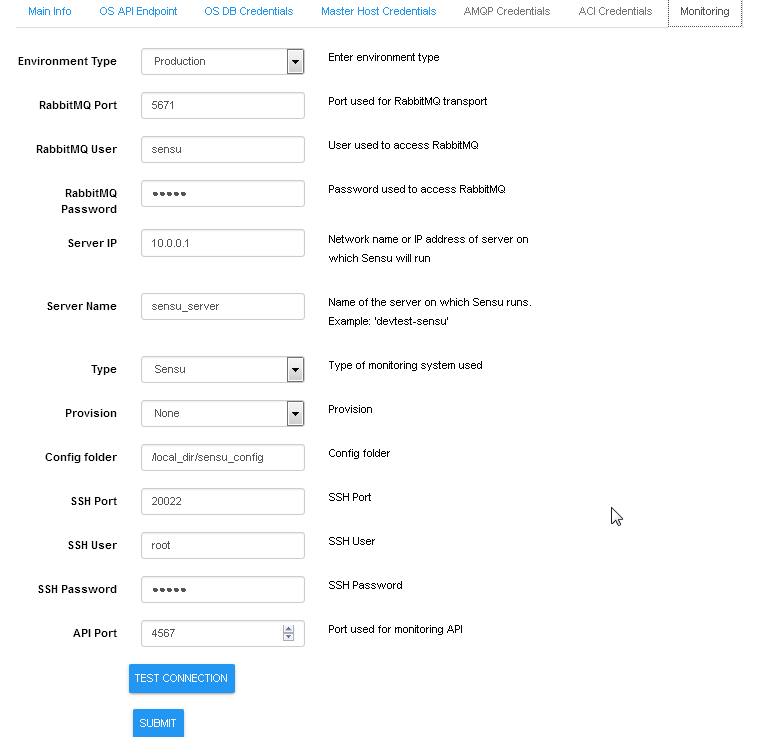

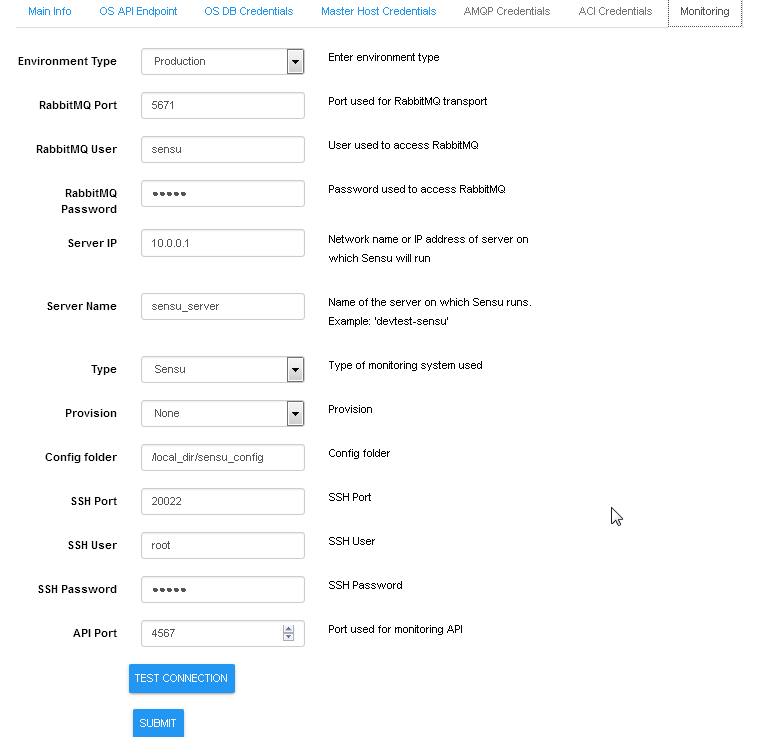

Monitoring enablement

For dynamic discovery of real-time statuses and states of physical

and virtual components and thier connections Calispo provides an

option to automatically integrate with the Sensu framework,

customized and adapted from the Calispo model and design concepts.

Follow the monitoring-guide for details on this optional module.

Enabling Monitoring through UI, using environment configuration

wizard:

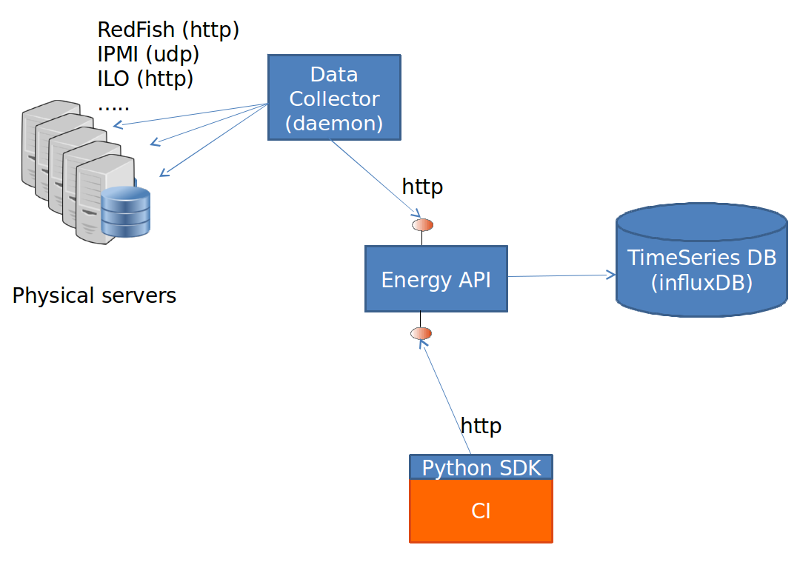

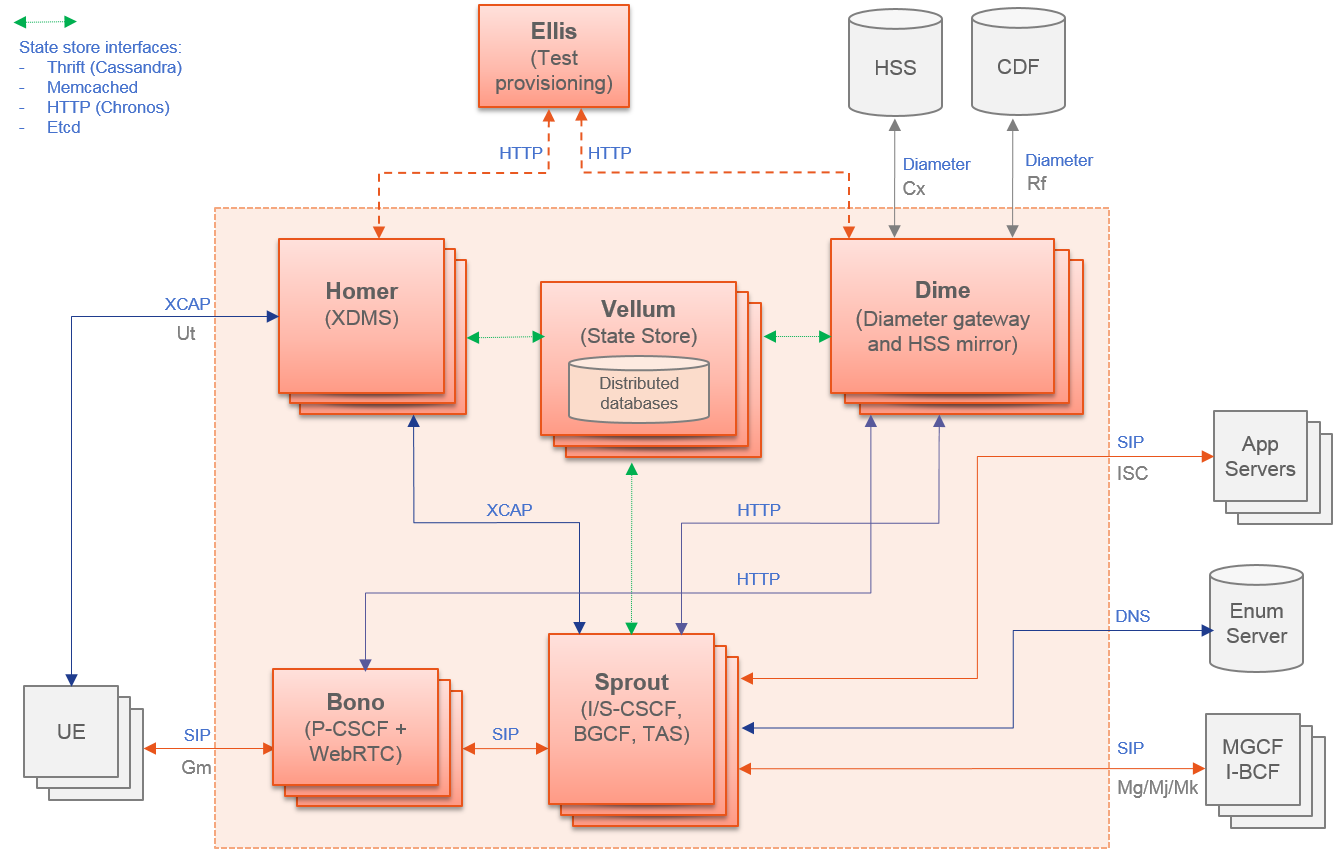

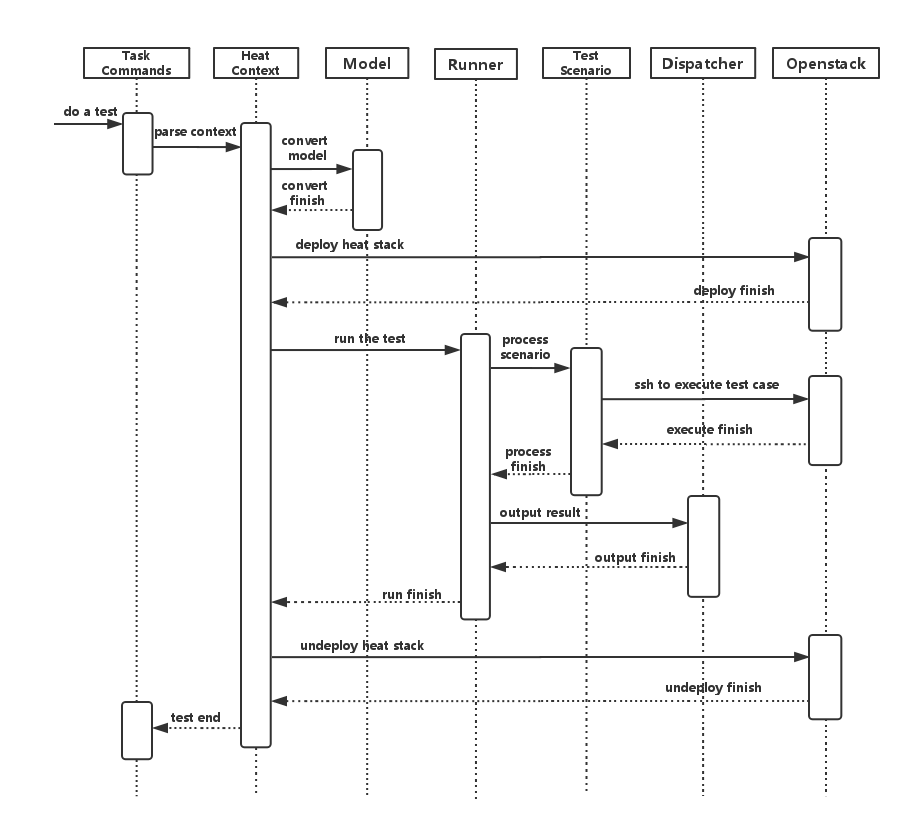

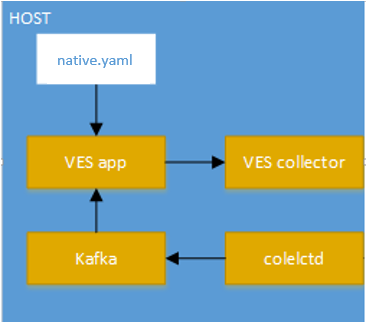

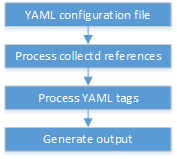

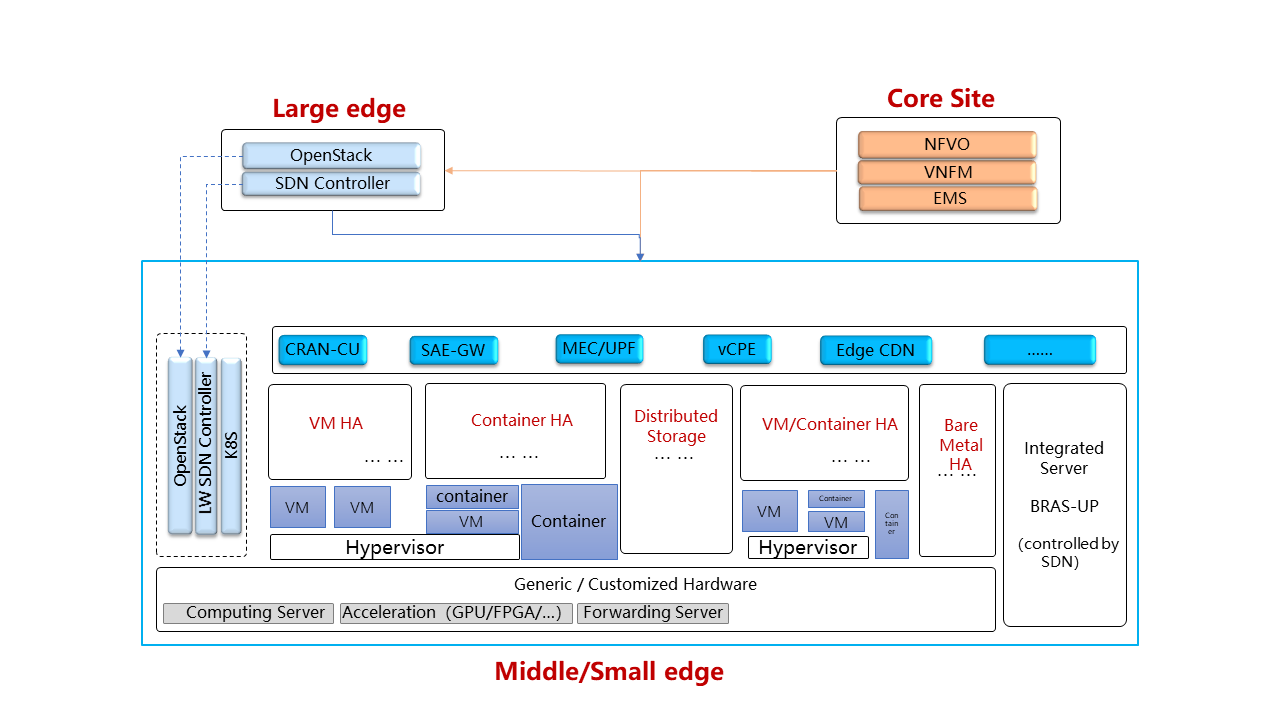

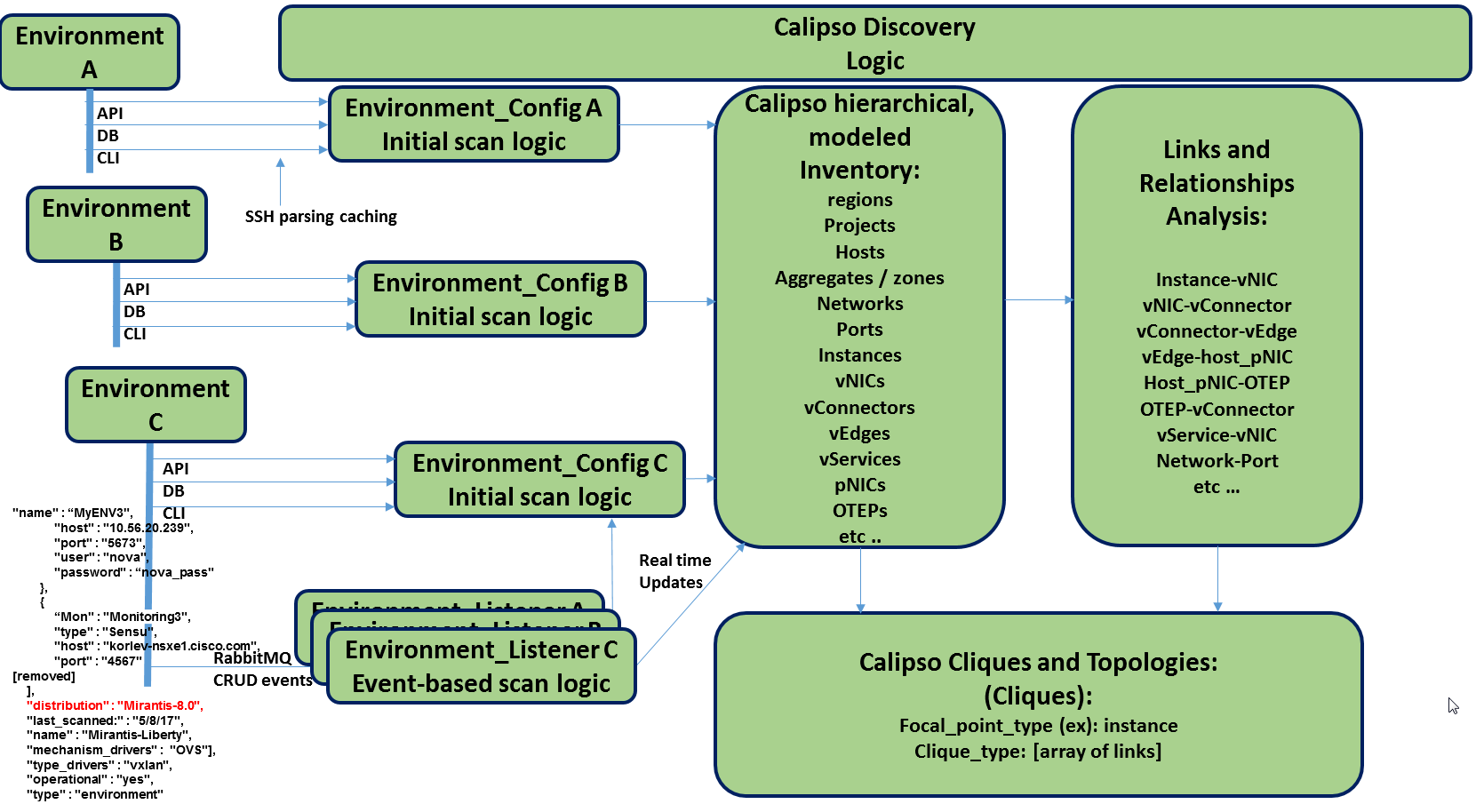

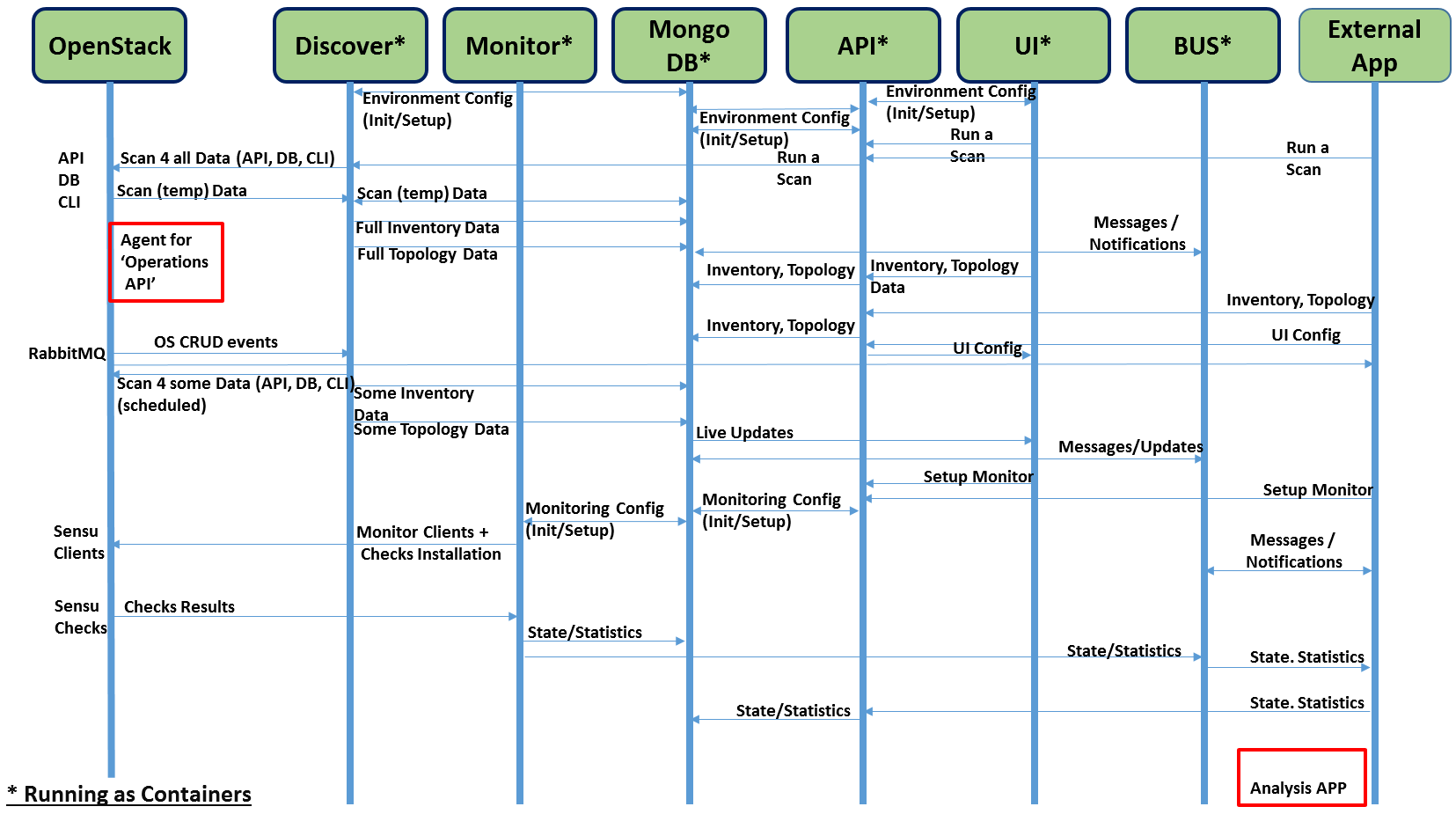

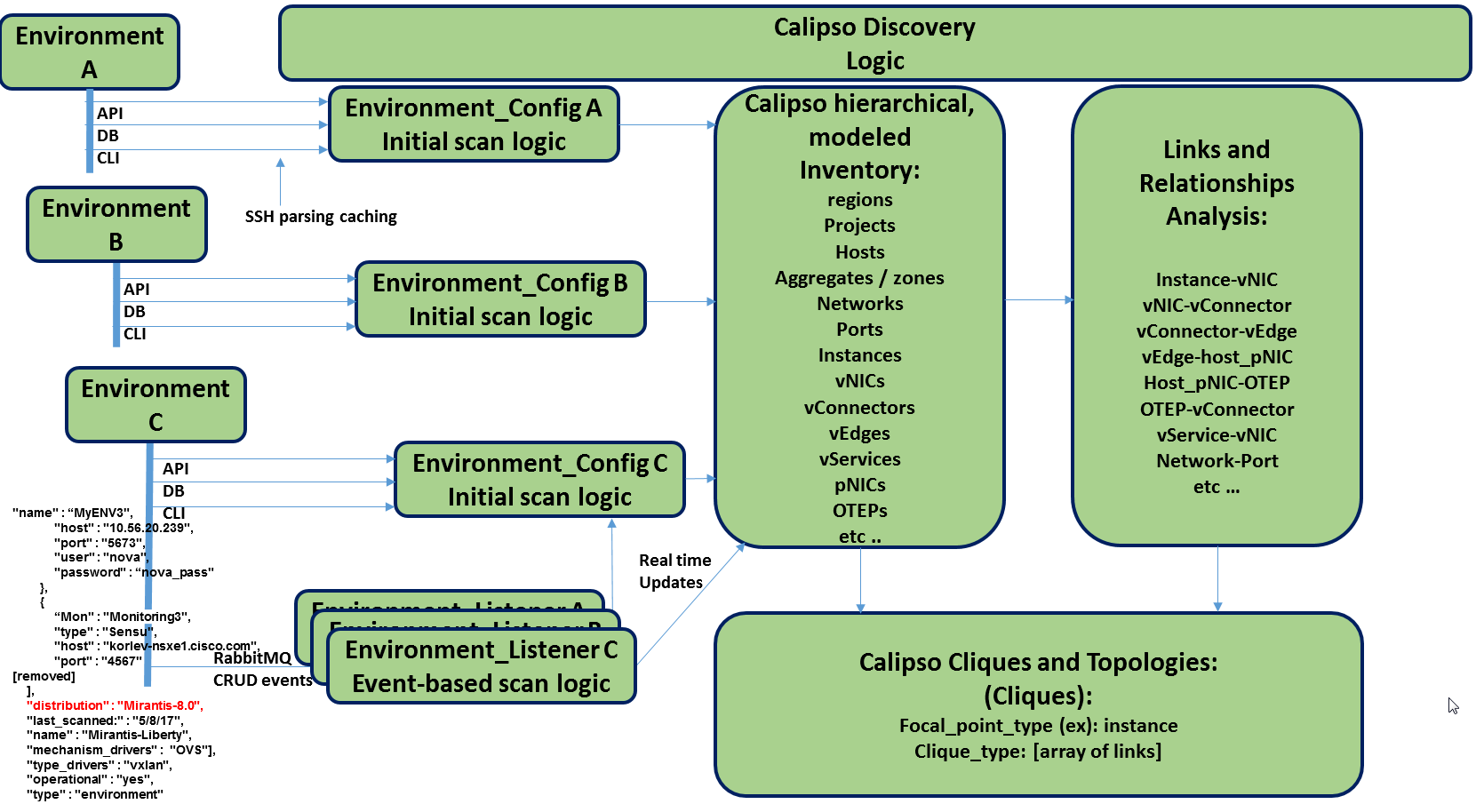

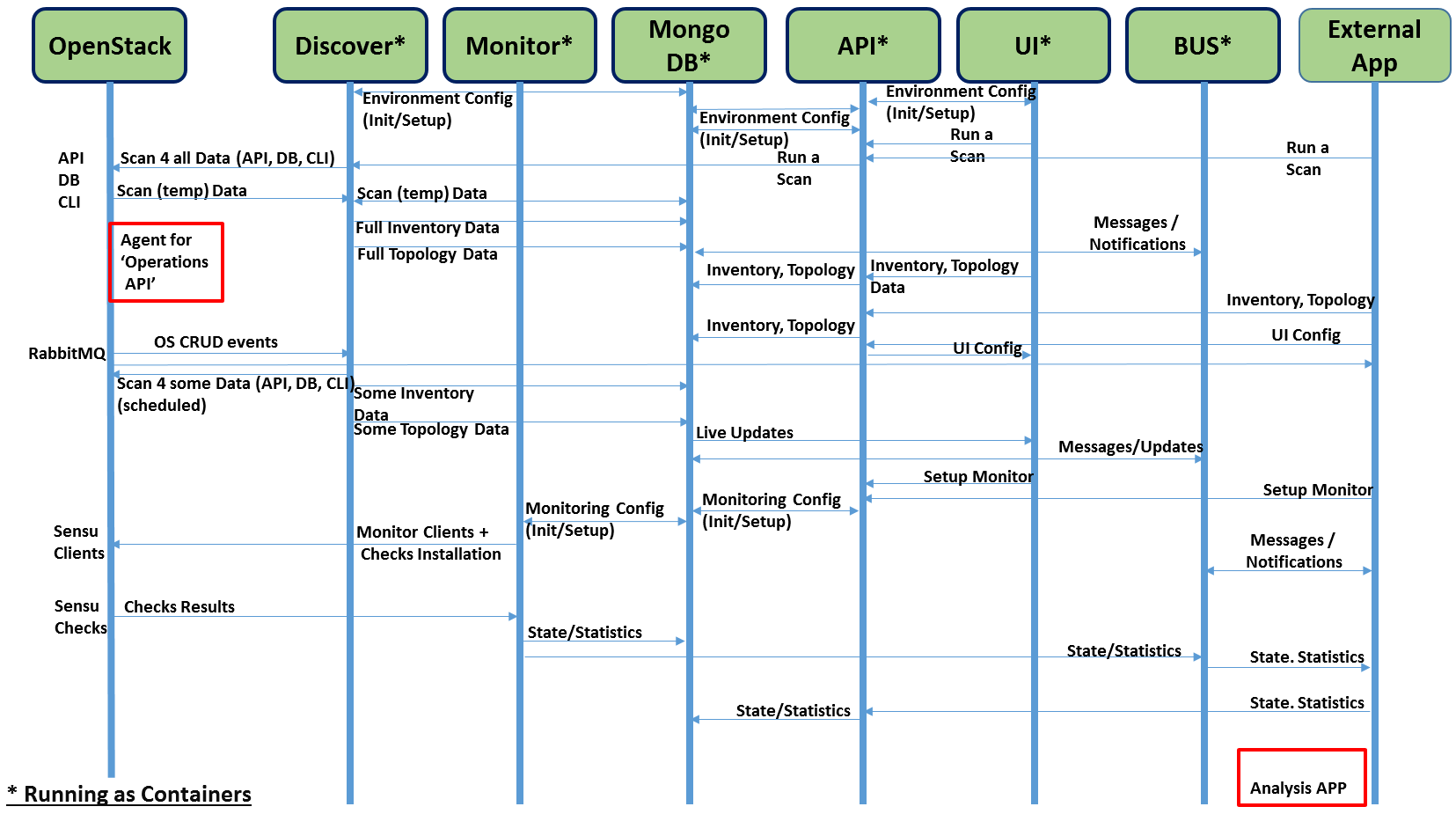

Modules data flows

Calipso modules/containers and the VIM layers have some

inter-dependencies, illustrated in the following diagram:

Copyright (c) 2017 Koren Lev (Cisco Systems), Yaron Yogev (Cisco Systems) and others

All rights reserved. This program and the accompanying materials

are made available under the terms of the Apache License, Version 2.0

which accompanies this distribution, and is available at

http://www.apache.org/licenses/LICENSE-2.0

Project “Calipso” tries to illuminate complex virtual networking with

real time operational state visibility for large and highly distributed

Virtual Infrastructure Management (VIM).

We believe that Stability is driven by accurate Visibility.

Calipso provides visible insights using smart discovery and virtual

topological representation in graphs, with monitoring per object in the

graph inventory to reduce error vectors and troubleshooting, maintenance

cycles for VIM operators and administrators.

Table of Contents

Calipso.io API Guide 1

1 Pre Requisites 3

1.1 Calipso API container 3

2 Overview 3

2.1 Introduction 3

2.2 HTTP Standards 4

2.3 Calipso API module Code 4

3 Starting the Calipso API server 4

3.1 Authentication 4

3.2 Database 5

3.3 Running the API Server 5

4 Using the Calipso API server 6

4.1 Authentication 6

4.2 Messages 9

4.3 Inventory 14

4.4 Links 17

4.5 Cliques 20

4.6 Clique_types 23

4.7 Clique_constraints 26

4.8 Scans 29

4.9 Scheduled_scans 32

4.10 Constants 35

4.11 Monitoring_Config_Templates 37

4.12 Aggregates 39

4.13 Environment_configs 42

Pre Requisites

Calipso API container

Calipso’s main application is written with Python3.5 for Linux

Servers, tested successfully on Centos 7.3 and Ubuntu 16.04. When

running using micro-services many of the required software packages

and libraries are delivered per micro service, including the API

module case. In a monolithic case dependencies are needed.

Here is a list of the required software packages for the API, and

the official supported steps required to install them:

Python3.5.x for Linux :

https://docs.python.org/3.5/using/unix.html#on-linux

Pip for Python3 : https://docs.python.org/3/installing/index.html

Python3 packages to install using pip3 :

falcon (1.1.0)

pymongo (3.4.0)

gunicorn (19.6.0)

ldap3 (2.1.1)

setuptools (34.3.2)

python3-dateutil (2.5.3-2)

bcrypt (3.1.1)

You should use pip3 python package manager to install the specific

version of the library. Calipso project uses Python 3, so

package installation should look like this:

pip3 install falcon==1.1.0

The versions of the Python packages specified above are the ones

that were used in the development of the API, other versions might

also be compatible.

This document describes how to setup Calipso API container for

development against the API.

Overview

Introduction

The Calipso API provides access to the Calipso data stored in the

MongoDB.

Calispo API uses

falcon (https://falconframework.org)

web framework and gunicorn

(http://gunicorn.org) WSGI server.

The authentication of the Calipso API is based on LDAP (Lightweight

Directory Access Protocol). It can therefore interface with any

directory servers which implements the LDAP protocol, e.g. OpenLDAP,

Active Directory etc. Calipso app offers and uses the LDAP built-in

container by default to make sure this integration is fully tested,

but it is possible to interface to other existing directories.

HTTP Standards

At present two types of operations are supported: GET (retrieve

data) and POST (create a new data object).

Calipso API module Code

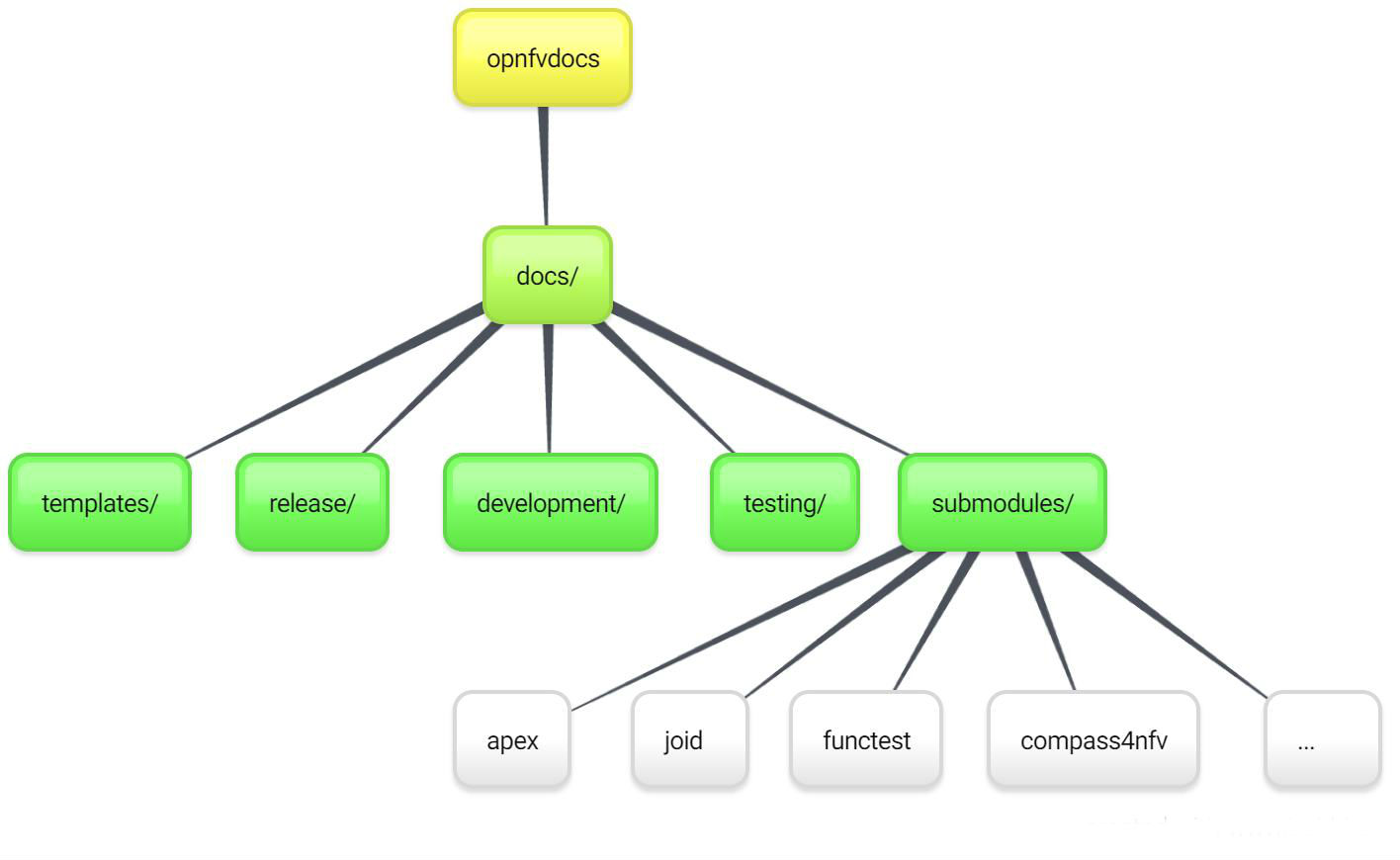

Clipso API code is currently located in opnfv repository.

Run the following command to get the source code:

git

clone **https://git.opnfv.org/calipso/**

The source code of the API is located in the app/api directory

sub-tree.

Starting the Calipso API server

Authentication

Calipso API uses LDAP as the protocol to implement the

authentication, so you can use any LDAP directory server as the

authentication backend, like OpenLDAP and Microsoft

AD. You

can edit the ldap.conf file which is located in app/config directory

to configure LDAP server options (see details in quickstart-guide):

# url for connecting to the LDAP server (customize to your own as

needed):

url ldap_url

# LDAP attribute mapped to user id, must not be a multivalued

attributes:

user_id_attribute CN

# LDAP attribute mapped to user password:

user_pass_attribute userPassword

# LDAP objectclass for user

user_objectclass inetOrgPerson

# Search base for users

user_tree_dn OU=Employees,OU=Example Users,DC=exmaple,DC=com

query_scope one

# Valid options for tls_req_cert are demand, never, and allow

tls_req_cert demand

# CA certificate file path for communicating with LDAP servers.

tls_cacertfile ca_cert_file_path

group_member_attribute member

Calipso currently implements the basic authentication, the client

send the query request with its username and password in the auth

header, if the user can be bound to the LDAP server, authentication

succeeds otherwise fails. Other methods will be supported in future

releases.

Database

Calipso API query for and retrieves data from MongoDB container, the

data in the MongoDB comes from the results of Calipso scanning,

monitoring or the user inputs from the API. All modules of a single

Calipso instance of the application must point to the same MongoDB

used by the scanning and monitoring modules. Installation and

testing of mongoDB is covered in install-guide and quickstart-guide.

Running the API Server

The entry point (initial command) running the Calipso API

application is the server.py script in the app/api directory.

Options for running the API server can be listed using: python3

server.py –help. Here is the current options available:

-m [MONGO_CONFIG], –mongo_config [MONGO_CONFIG]

name of config file with mongo access details

–ldap_config [LDAP_CONFIG]

name of the config file with ldap server config

details

-l [LOGLEVEL], –loglevel [LOGLEVEL] logging level (default:

‘INFO’)

-b [BIND], –bind [BIND]

binding address of the API server (default:

127.0.0.1:8000)

-y [INVENTORY], –inventory [INVENTORY]

name of inventory collection (default:

‘inventory’)

For testing, you can simply run the API server by:

python3 app/api/server.py

This will start a HTTP server listening on http://localhost:8000,

if you want to change the binding address of the server, you can run

it using this command:

python3 server.py –bind ip_address/server_name:port_number

You can also use your own configuration files for LDAP server and

MongoDB, just add –mongo_config and –ldap_config options in your

command:

python3 server.py –mongo_config your_mongo_config_file_path

–ldap_config your_ldap_config_file_path

—inventory option is used to set the collection names the server

uses for the API, as per the quickstart-guide this will default to

/local_dir/calipso_mongo_access.conf and

/local_dir/ldap.conf mounted inside the API container.

Notes: the –inventory argument can only change the collection names

of the inventory, links, link_types, clique_types,

clique_constraints, cliques, constants and scans collections, names

of the monitoring_config_templates, environments_config and

messages collections will remain at the root level across releases.

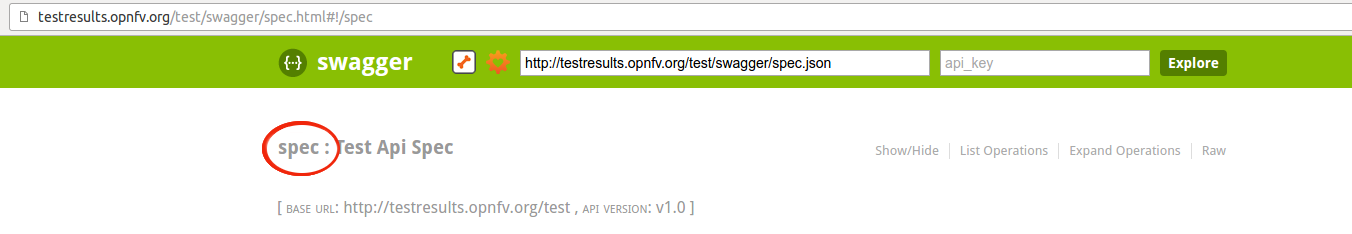

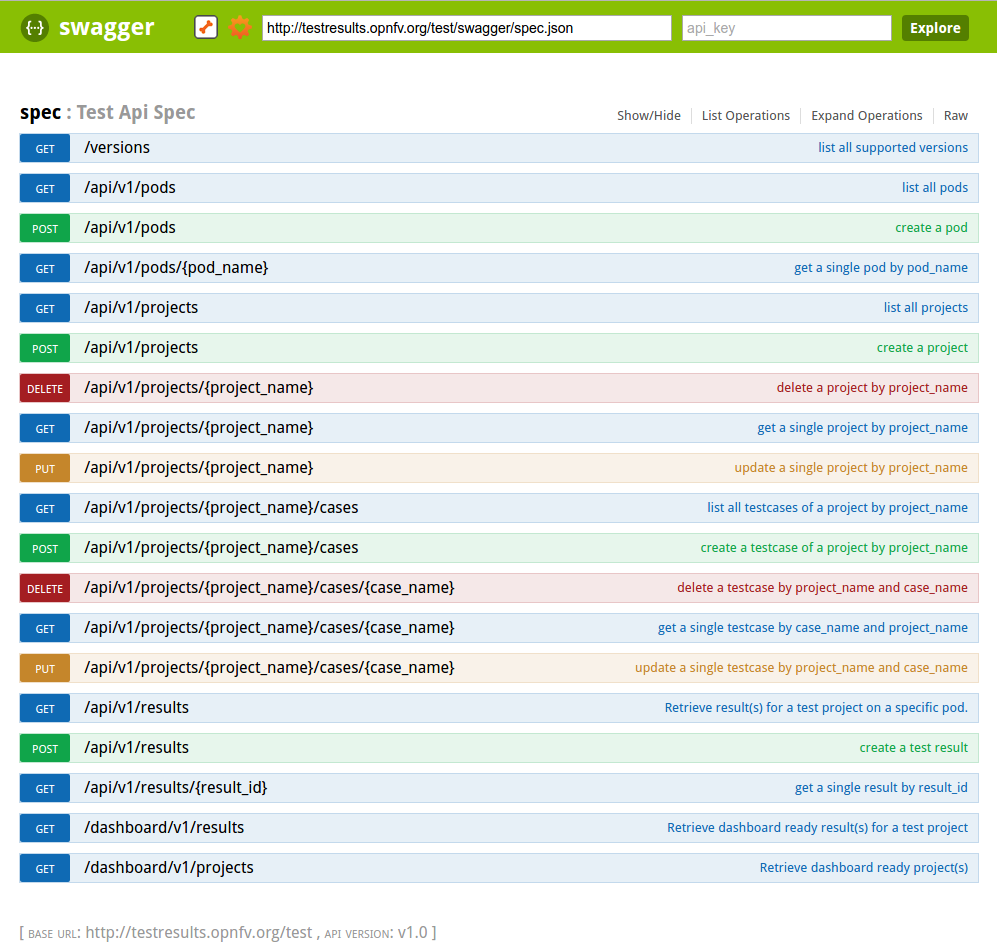

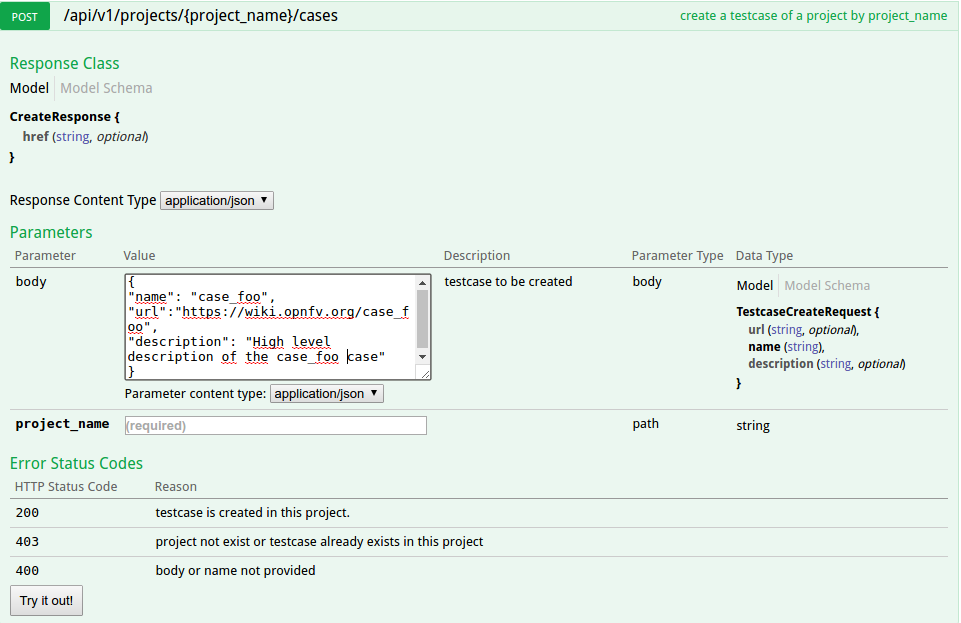

Using the Calipso API server

The following covers the currently available requests and responses on

the Calipso API

Authentication

POST /auth/tokens

Description: get token with password and username or a valid token.

Normal response code: 201

Error response code: badRequest(400), unauthorized(401)

Request

| Name |

In |

Type |

Description |

|---|

| auth(Mandatory) |

body |

object |

An auth object that contains the authentication information |

| methods(Mandatory) |

body |

array |

The authentication methods. For password authentication, specify password, for token authentication, specify token. |

| credentials(Optional) |

body |

object |

Credentials object which contains the username and password, it must be provided when getting the token with user credentials. |

| token(Optional) |

body |

string |

The token of the user, it must be provided when getting the user with an existing valid token. |

Response

| Name |

In |

Type |

Description |

|---|

| token |

body |

string |

Token for the user. |

| issued-at |

body |

string |

The date and time when the token was issued. the date and time format follows *ISO 8610*:

YYYY-MM-DDThh:mm:ss.sss+hhmm

|

| expires_at |

body |

string |

The date and time when the token expires. the date and time format follows *ISO 8610*:

YYYY-MM-DDThh:mm:ss.sss+hhmm

|

| method |

body |

string |

The method which achieves the token. |

**

Examples**

Get token with credentials:

Post *http://korlev-osdna-staging1.cisco.com:8000/auth/tokens*

{

“auth”: {

“methods”: [“credentials”],

“credentials”: {

“username”: “username”,

“password”: “password”

}

}

}

Get token with token

post http://korlev-calipso-staging1.cisco.com:8000/auth/tokens

{

“auth”: {

“methods”: [“token”],

“token”: “17dfa88789aa47f6bb8501865d905f13”

}

}

DELETE /auth/tokens

Description: delete token with a valid token.

Normal response code: 200

Error response code: badRequest(400), unauthorized(401)

Request

| Name |

In |

Type |

Description |

|---|

| X-Auth-Token |

header |

string |

A valid authentication token that is doing to be deleted. |

Response

200 OK will be returned when the delete succeed

Messages

GET /messages

Description: get message details with environment name and message id,

or get a list of messages with filters except id.

Normal response code: 200

Error response code: badRequest(400), unauthorized(401), notFound(404)

Request

| Name |

In |

Type |

Description |

|---|

| env_name(Mandatory) |

query |

string |

Environment name of the messages. e.g. “Mirantis-Liberty-API”. |

| id (Optional) |

query |

string |

ID of the message. |

| source_system (Optional) |

query |

string |

Source system of the message, e.g. “OpenStack”. |

| start_time (Optional) |

query |

string |

Start time of the messages, when this parameter is specified, the messages after that time will be returned, the date and time format follows *ISO 8610: *

YYYY-MM-DDThh:mm:ss.sss+hhmm

The +hhmm value, if included, returns the time zone as an offset from UTC, For example, 2017-01-25T09:45:33.000-0500. If you omit the time zone, the UTC time is assumed.

|

| end_time (Optional) |

query |

string |

End time of the message, when this parameter is specified, the messages before that time will be returned, the date and time format follows *ISO 8610*:

YYYY-MM-DDThh:mm:ss.sss+hhmm

The +hhmm value, if included, returns the time zone as an offset from UTC, For example, 2017-01-25T09:45:33.000-0500. If you omit the time zone, the UTC time is assumed.

|

| level (Optional) |

query |

string |

The severity of the messages, we accept the severities strings described in *RFC 5424*, possible values are “panic”, “alert”, “crit”, “error”, “warn”, “notice”, “info” and “debug”. |

| related_object (Optional) |

query |

string |

ID of the object related to the message. |

| related_object_type (Optional) |

query |

string |

Type of the object related to the message, possible values are “vnic”, “vconnector”, “vedge”, “instance”, “vservice”, “host_pnic”, “network”, “port”, “otep” and “agent”. |

| page (Optional) |

query |

int |

Which page will to be returned, the default is first page, if the page is larger than the maximum page of the query, and it will return an empty result set (Page start from 0). |

| page_size (Optional) |

query |

int |

Size of each page, the default is 1000. |

Response

| Name |

In |

Type |

Description |

|---|

| environment |

body |

string |

Environment name of the message. |

| id |

body |

string |

ID of the message. |

| _id |

body |

string |

MongoDB ObjectId of the message. |

| timestamp |

body |

string |

Timestamp of message. |

| viewed |

body |

boolean |

Indicates whether the message has been viewed. |

| display_context |

body |

string |

The content which will be displayed. |

| message |

body |

object |

Message object. |

| source_system |

body |

string |

Source system of the message, e.g. “OpenStack”. |

| level |

body |

string |

The severity of the message. |

| related_object |

body |

string |

Related object of the message. |

| related_object_type |

body |

string |

Type of the related object. |

| messages |

body |

array |

List of message ids which match the filters. |

Examples

Example Get Messages

Request:

http://korlev-calipso-testing.cisco.com:8000/messages?env_name=Mirantis-Liberty-API&start_time=2017-01-25T14:28:32.400Z&end_time=2017-01-25T14:28:42.400Z

Response:

{

“level”: “info”,

“environment”: “Mirantis-Liberty”,

“id”: “3c64fe31-ca3b-49a3-b5d3-c485d7a452e7”,

“source_system”: “OpenStack”

},

{

“level”: “info”,

“environment”: “Mirantis-Liberty”,

“id”: “c7071ec0-04db-4820-92ff-3ed2b916738f”,

“source_system”: “OpenStack”

},

Example Get Message Details

Request

http://korlev-calipso-testing.cisco.com:8000/messages?env_name=Mirantis-Liberty-API&id=80b5e074-0f1a-4b67-810c-fa9c92d41a98

Response

{

“related_object_type”: “instance”,

“source_system”: “OpenStack”,

“level”: “info”,

“timestamp”: “2017-01-25T14:28:33.057000”,

“_id”: “588926916a283a8bee15cfc6”,

“viewed”: true,

“display_context”: “*”,

“related_object”: “97a1e179-6a42-4c7b-bced-4f64bd9e4b6b”,

“environment”: “Mirantis-Liberty-API”,

“message”: {

“_context_show_deleted”: false,

“_context_user_name”: “admin”,

“_context_project_id”: “a3efb05cd0484bf0b600e45dab09276d”,

“_context_service_catalog”: [

{

“type”: “volume”,

“endpoints”: [

{

“region”: “RegionOne”

}

],

“name”: “cinder”

},

{

“type”: “volumev2”,

“endpoints”: [

{

“region”: “RegionOne”

}

],

“name”: “cinderv2”

}

],

“_context_user_identity”: “a864d9560b3048e9864118555bb9614c

a3efb05cd0484bf0b600e45dab09276d - - -”,

“_context_project_domain”: null,

“_context_is_admin”: true,

“_context_instance_lock_checked”: false,

“_context_timestamp”: “2017-01-25T22:27:08.773313”,

“priority”: “INFO”,

“_context_project_name”: “project-osdna”,

“_context_read_only”: false,

“message_id”: “80b5e074-0f1a-4b67-810c-fa9c92d41a98”,

“_context_user_id”: “a864d9560b3048e9864118555bb9614c”,

“_context_quota_class”: null,

“_context_tenant”: “a3efb05cd0484bf0b600e45dab09276d”,

“_context_remote_address”: “192.168.0.2”,

“_context_request_id”: “req-2955726b-f227-4eac-9826-b675f5345ceb”,

“_context_auth_token”:

“gAAAAABYiSVcHmaq1TWwNc1_QLlKhdUeC1-M6zBebXyoXN4D0vMlxisny9Q61crBzqwSyY_Eqd_yjrL8GvxatWI1WI1uG4VeWU6axbLe_k5FaXS4RVOP83yR6eh5g_qXQtsNapQufZB1paypZm8YGERRvR-vV5Ee76aTSkytVjwOBeipr9D0dXd-wHcRnSNkTD76nFbGKTu_”,

“_context_user_domain”: null,

“payload”: {

“image_meta”: {

“container_format”: “bare”,

“disk_format”: “qcow2”,

“min_ram”: “64”,

“base_image_ref”: “5f048984-37d1-4952-8b8a-9acb0237bad7”,

“min_disk”: “0”

},

“display_name”: “test”,

“terminated_at”: “”,

“access_ip_v6”: null,

“architecture”: null,

“audit_period_beginning”: “2017-01-01T00:00:00.000000”,

“metadata”: {},

“audit_period_ending”: “2017-01-25T22:27:12.888042”,

“instance_type”: “m1.micro”,

“ramdisk_id”: “”,

“availability_zone”: “nova”,

“kernel_id”: “”,

“hostname”: “test”,

“vcpus”: 1,

“bandwidth”: {},

“user_id”: “a864d9560b3048e9864118555bb9614c”,

“state_description”: “block_device_mapping”,

“old_state”: “building”,

“root_gb”: 0,

“instance_flavor_id”: “8784e0b5-7d17-4281-a509-f49d6fd102f9”,

“cell_name”: “”,

“reservation_id”: “r-zt7sh7vy”,

“access_ip_v4”: null,

“deleted_at”: “”,

“tenant_id”: “a3efb05cd0484bf0b600e45dab09276d”,

“disk_gb”: 0,

“instance_id”: “97a1e179-6a42-4c7b-bced-4f64bd9e4b6b”,

“memory_mb”: 64,

“os_type”: null,

“old_task_state”: “block_device_mapping”,

“state”: “building”,

“instance_type_id”: 6,

“launched_at”: “”,

“ephemeral_gb”: 0,

“created_at”: “2017-01-25 22:27:09+00:00”,

“progress”: “”,

“new_task_state”: “block_device_mapping”

},

“_context_read_deleted”: “no”,

“event_type”: “compute.instance.update”,

“_context_roles”: [

“admin”,

“_member_”

],

“_context_user”: “a864d9560b3048e9864118555bb9614c”,

“timestamp”: “2017-01-25 22:27:12.912744”,

“_unique_id”: “d6dff97e6f71401bb8890057f872644f”,

“_context_resource_uuid”: null,

“_context_domain”: null

},

“id”: “80b5e074-0f1a-4b67-810c-fa9c92d41a98”

}

Inventory

GET /inventory** **

Description: get object details with environment name and id of the

object, or get a list of objects with filters except id.

Normal response code: 200

Error response code: badRequest(400), unauthorized(401), notFound(404)

Request

| Name |

In |

Type |

Description |

|---|

| env_name (Mandatory) |

query |

string |

Environment of the objects. e.g. “Mirantis-Liberty-API”. |

| id (Optional) |

query |

string |

ID of the object. e.g. “*node-2.cisco.com*”. |

| parent_id (Optional) |

query |

string |

ID of the parent object. e.g. “nova”. |

| id_path (Optional) |

query |

string |

ID path of the object. e.g. “/Mirantis-Liberty-API/Mirantis-Liberty-API-regions/RegionOne/RegionOne-availability_zones/nova/*node-2.cisco.com*”. |

| parent_path (Optional) |

query |

string |

ID path of the parent object. “/Mirantis-Liberty-API/Mirantis-Liberty-API-regions/RegionOne/RegionOne-availability_zones/nova”. |

| sub_tree (Optional) |

query |

boolean |

If it is true and the parent_path is specified, it will return the whole sub-tree of that parent object which includes the parent itself, If it is false and the parent_path is specified, it will only return the siblings of that parent (just the children of that parent node), the default value of sub_tree is false. |

| page (Optional) |

query |

int |

Which page is to be returned, the default is the first page, if the page is larger than the maximum page of the query, it will return an empty set, (page starts from 0). |

| page_size (Optional) |

query |

int |

Size of each page, the default is 1000. |

Response

| Name |

In |

Type |

Description |

|---|

| environment |

body |

string |

Environment name of the object. |

| id |

body |

string |

ID of the object. |

| _id |

body |

string |

MongoDB ObjectId of the object. |

| type |

body |

string |

Type of the object. |

| parent_type |

body |

string |

Type of the parent object. |

| parent_id |

body |

string |

ID of the parent object. |

| name_path |

body |

string |

Name path of the object. |

| last_scanned |

body |

string |

Time of last scanning. |

| name |

body |

string |

Name of the object. |

| id_path |

body |

string |

ID path of the object. |

| objects |

body |

array |

The list of object IDs that match the filters. |

Examples

Example Get Objects

Request

http://korlev-calipso-testing.cisco.com:8000/inventory?env_name=Mirantis-Liberty-API&parent_path=/Mirantis-Liberty-API/Mirantis-Liberty-API-regions/RegionOne&sub_tree=false

Response

{

“objects”: [

{

“id”: “Mirantis-Liberty-regions”,

“name”: “Regions”,

“name_path”: “/Mirantis-Liberty/Regions”

},

{

“id”: “Mirantis-Liberty-projects”,

“name”: “Projects”,

“name_path”: “/Mirantis-Liberty/Projects”

}

]

}

Examples Get Object Details

Request

http://korlev-calipso-testing.cisco.com:8000/inventory?env_name=Mirantis-Liberty-API&id=node-2.cisco.com

Response

{

‘ip_address’: ‘192.168.0.5’,

‘services’: {

‘nova-compute’: {

‘active’: True,

‘updated_at’: ‘2017-01-20T23:03:57.000000’,

‘available’: True

}

},

‘id_path’:

‘/Mirantis-Liberty-API/Mirantis-Liberty-API-regions/RegionOne/RegionOne-availability_zones/nova/

*node-2.cisco.com*‘,

‘show_in_tree’: True,

‘os_id’: ‘1’,

‘_id’: ‘588297ae6a283a8bee15cc0d’,

‘host_type’: [

‘Compute’

],

‘name_path’: ‘/Mirantis-Liberty-API/Regions/RegionOne/Availability

Zones/nova/

*node-2.cisco.com*‘,

‘parent_type’: ‘availability_zone’,

‘zone’: ‘nova’,

‘parent_id’: ‘nova’,

‘last_scanned’: ‘2017-01-20T15:05:18.501000’,

‘environment’: ‘Mirantis-Liberty-API’,

‘type’: ‘host’

}

Links

GET /links

Description: get link details with environment name and id of the link,

or get a list of links with filters except id

Normal response code: 200

Error response code: badRequest(400), unauthorized(401), notFound(404)

Request

| Name |

In |

Type |

Description |

|---|

| env_name (Mandatory) |

query |

string |

Environment of the links. e.g. “Mirantis-Liberty-API”. |

| id (Optional) |

query |

string |

ID of the link, it must be a string which can be converted to MongoDB ObjectId. |

| host (Optional) |

query |

string |

Host of the link. e.g. “*node-1.cisco.com*”. |

| link_type (Optional) |

query |

string |

Type of the link, some possible values for that are “instance-vnic”, “otep-vconnector”, “otep-host_pnic”, “host_pnic-network”, “vedge-otep”, “vnic-vconnector”, “vconnector-host_pnic”, “vnic-vedge”, “vedge-host_pnic” and “vservice-vnic” . |

| link_name (Optional) |

query |

string |

Name of the link. e.g. “Segment-2”. |

| source_id (Optional) |

query |

string |

ID of the source object of the link. e.g. “qdhcp-4f4bf8b5-ca42-411a-9f64-5b214d1f1c71”. |

| target_id (Optional) |

query |

string |

ID of the target object of the link. “tap708d399a-20”. |

| state (Optional) |

query |

string |

State of the link, “up” or “down”. |

| attributes |

query |

object |

The attributes of the link, e.g. the network attribute of the link is attributes:network=”4f4bf8b5-ca42-411a-9f64-5b214d1f1c71”. |

| page (Optional) |

query |

int |

Which page is to be returned, the default is first page, when the page is larger than the maximum page of the query,

it will return an empty set. (Page starts from 0).

|

| page_size (Optional) |

query |

int |

Size of each page, the default is 1000. |

Response

| Name |

In |

Type |

Description |

|---|

| id |

body |

string |

ID of the link. |

| _id |

body |

string |

MongoDB ObjectId of the link. |

| environment |

body |

string |

Environment of the link. |

| source_id |

body |

string |

ID of the source object of the link. |

| target_id |

body |

string |

ID of the target object of the link. |

| source |

body |

string |

MongoDB ObjectId of the source object. |

| target |

body |

string |

MongoDB ObjectId of the target object. |

| source_label |

body |

string |

Descriptive text for the source object. |

| target_label |

body |

string |

Descriptive text for the target object. |

| link_weight |

body |

string |

Weight of the link. |

| link_type |

body |

string |

Type of the link, some possible values for that are “instance-vnic”, “otep-vconnector”, “otep-host_pnic”, “host_pnic-network”, “vedge-otep”, “vnic-vconnector”, “vconnector-host_pnic”, “vnic-vedge”, “vedge-host_pnic” and “vservice-vnic”. |

| state |

body |

string |

State of the link, “up” or “down”. |

| attributes |

body |

object |

The attributes of the link. |

| links |

body |

array |

List of link IDs which match the filters. |

Examples

Example Get Link Ids

Request

*http://korlev-calipso-testing.cisco.com:8000/links?env_name=Mirantis-Liberty-API&host=node-2.cisco.com*

Response

{

“links”: [

{

“id”: “58ca73ae3a8a836d10ff3b45”,

“link_type”: “host_pnic-network”,

“link_name”: “Segment-103”,

“environment”: “Mirantis-Liberty”

}

]

}

Example Get Link Details

Request

http://korlev-calipso-testing.cisco.com:8000/links?env_name=Mirantis-Liberty-API&id=5882982c6a283a8bee15cc62

Response

{

“target_id”: “6d0250ae-e7df-4b30-aa89-d9fcc22e6371”,

“target”: “58a23ff16a283a8bee15d3e6”,

“link_type”: “vnic-vedge”,

“environment”: “Mirantis-Liberty-API”,

“_id”: “58a240646a283a8bee15d438”,

“source_label”: “fa:16:3e:38:11:c9”,

“state”: “up”,

“link_weight”: 0,

“id”: “58a240646a283a8bee15d438”,

“source”: “58a23fd46a283a8bee15d3c6”,

“target_label”: “10”,

“attributes”: {},

“source_id”: “qr-24364cd7-ab”

}

Cliques

GET /cliques

Description: get clique details with environment name and clique id, or

get a list of cliques with filters except id

Normal response code: 200

Error response code: badRequest(400), unauthorized(401), notFound(404)

Request

| Name |

In |

Type |

Description |

|---|

| env_name (Mandatory) |

query |

string |

Environment of the cliques. e.g. “Mirantis-Liberty-API”. |

| id (Optional) |

query |

string |

ID of the clique, it must be a string that can be converted to Mongo ObjectID. |

| focal_point (Optional) |

query |

string |

MongoDB ObjectId of the focal point object, it must be a string that can be converted to Mongo ObjectID. |

| focal_point_type (Optional) |

query |

string |

Type of the focal point object, some possible values are “vnic”, “vconnector”, “vedge”, “instance”, “vservice”, “host_pnic”, “network”, “port”, “otep” and “agent”. |

| link_type(Optional) |

query |

string |

Type of the link, when this filter is specified, it will return all the cliques which contain the specific type of the link, some possible values for link_type are “instance-vnic”, “otep-vconnector”, “otep-host_pnic”, “host_pnic-network”, “vedge-otep”, “vnic-vconnector”, “vconnector-host_pnic”, “vnic-vedge”, “vedge-host_pnic” and “vservice-vnic”. |

| link_id (Optional) |

query |

string |

MongoDB ObjectId of the link, it must be a string that can be converted to MongoDB ID, when this filter is specified, it will return all the cliques which contain that specific link. |

| page (Optional) |

query |

int |