Pharos Specification

The Pharos Specification provides information on Pharos hardware and network requirements.

Pharos Compliance

The Pharos Specification defines a hardware environment for deployment and testing of the Brahmaputra platform release. The Pharos Project is also responsible for defining lab capabilities, developing management and usage policies and processes, and providing a support plan for reliable access to project and release resources. Community labs are provided as a service by companies and are not controlled by Pharos; however, our objective is to provide easy visibility of all lab capabilities and their usage at all times.

Pharos lab infrastructure has the following objectives:

- Provides secure, scalable, standard and HA environments for feature development

- Supports the full Brahmaputra deployment lifecycle (this requires a bare-metal environment)

- Supports functional and performance testing of the Brahmaputra release

- Provides mechanisms and procedures for secure remote access to Pharos-compliant environments for the OPNFV community

Deploying Brahmaputra in a virtualized environment is possible and will be useful; however, it does not provide a fully featured deployment or a realistic test environment for the Brahmaputra release of OPNFV.

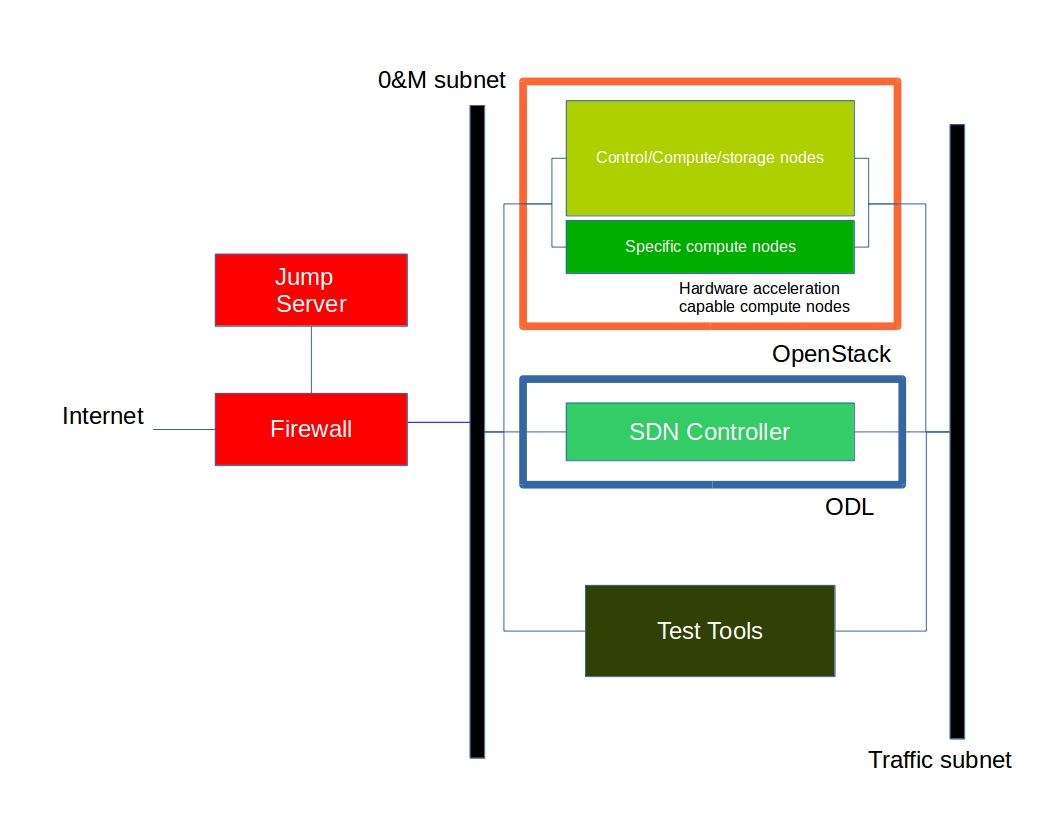

The high level architecture is outlined in the following diagram:

Hardware

A Pharos-compliant OPNFV test-bed provides:

- One CentOS 7 jump server on which the virtualized OpenStack/OPNFV installer runs

- In the Brahmaputra release you may select a variety of deployment toolchains to deploy from the jump server.

- 5 compute / controller nodes (BGS requires 5 nodes)

- A configured network topology allowing for LOM, Admin, Public, Private, and Storage Networks

- Remote access as defined by the Jenkins slave configuration guide: http://artifacts.opnfv.org/brahmaputra.1.0/docs/opnfv-jenkins-slave-connection.brahmaputra.1.0.html
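As a rough illustration, the test-bed composition listed above can be written down as a small check. The `Pod` class, its field names and the `pod_gaps` helper are hypothetical and not part of any Pharos tooling; only the node count, the jump server OS and the network names come from the list above.

```python
from dataclasses import dataclass, field

# Values taken from the hardware list above.
REQUIRED_NODES = 5
REQUIRED_NETWORKS = {"LOM", "Admin", "Public", "Private", "Storage"}


@dataclass
class Pod:
    """Hypothetical description of a lab POD (not a Pharos data format)."""
    jump_server_os: str
    node_count: int
    networks: set = field(default_factory=set)


def pod_gaps(pod: Pod) -> list:
    """Return human-readable gaps; an empty list means none were found."""
    gaps = []
    if pod.jump_server_os != "CentOS 7":
        gaps.append(f"jump server runs {pod.jump_server_os}, expected CentOS 7")
    if pod.node_count < REQUIRED_NODES:
        gaps.append(f"{pod.node_count} compute/controller nodes, need {REQUIRED_NODES}")
    missing = REQUIRED_NETWORKS - pod.networks
    if missing:
        gaps.append("missing networks: " + ", ".join(sorted(missing)))
    return gaps
```

A lab description that returns an empty list matches the composition above; it says nothing about whether the individual servers meet the per-server minimums.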

Servers

CPU:

- Intel Xeon E5-2600v2 Series or newer

- AArch64 (64bit ARM architecture) compatible (ARMv8 or newer)

Firmware:

- BIOS/EFI compatible for x86-family blades

- EFI compatible for AArch64 blades

Local Storage:

The following describes the minimum for the Pharos spec, which is designed to provide enough capacity for a reasonably functional environment. Additional and/or faster disks are nice to have and may produce a better result.

- Disks: 2 x 1TB HDD + 1 x 100GB SSD (or greater capacity)

- The first HDD should be used for OS & additional software/tool installation

- The second HDD is configured for CEPH object storage

- The SSD should be used as the CEPH journal

- Performance testing requires a mix of compute nodes with CEPH (Swift+Cinder) and without CEPH storage

- Virtual ISO boot capabilities or a separate PXE boot server (DHCP/tftp or Cobbler)

Memory:

- 32G RAM Minimum

Power Supply

- Single power supply acceptable (redundant power not required/nice to have)
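The per-server minimums above (local storage and memory) could be checked against an inventory along the following lines. This is only a sketch: the `Disk` and `Server` records are hypothetical, and it assumes 1TB is counted as 1000 GB.

```python
from dataclasses import dataclass

# Minimums from the Servers section above (capacities in GB).
# Assumption: 1TB is treated as 1000 GB.
MIN_HDD_COUNT, MIN_HDD_GB = 2, 1000
MIN_SSD_COUNT, MIN_SSD_GB = 1, 100
MIN_RAM_GB = 32


@dataclass
class Disk:
    kind: str      # "hdd" or "ssd"
    size_gb: int


@dataclass
class Server:
    """Hypothetical inventory record for one node."""
    name: str
    ram_gb: int
    disks: list


def meets_minimum(server: Server) -> bool:
    """True if the server has at least the minimum RAM and local disks."""
    hdds = [d for d in server.disks if d.kind == "hdd" and d.size_gb >= MIN_HDD_GB]
    ssds = [d for d in server.disks if d.kind == "ssd" and d.size_gb >= MIN_SSD_GB]
    return (
        server.ram_gb >= MIN_RAM_GB
        and len(hdds) >= MIN_HDD_COUNT
        and len(ssds) >= MIN_SSD_COUNT
    )
```

The check deliberately ignores CPU family, firmware and power supply, which are harder to express as simple thresholds.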

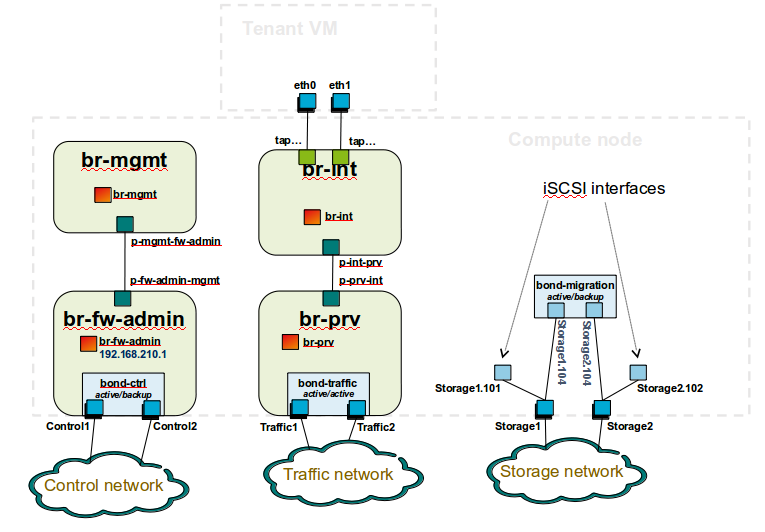

Networking

Network Hardware

- 24 or 48 Port TOR Switch

- NICs - Combination of 1GE and 10GE based on network topology options (per server can be on-board or use PCI-e)

- Connectivity for each data/control network is through a separate NIC. This simplifies switch management; however, it requires more NICs on the server and more switch ports

- BMC (Baseboard Management Controller) for lights-out management network using IPMI (Intelligent Platform Management Interface)

Network Options

- Option I: 4x1G Control, 2x10G Data, 48 Port Switch

- 1 x 1G for lights-out Management

- 1 x 1G for Admin/PXE boot

- 1 x 1G for control-plane connectivity

- 1 x 1G for storage

- 2 x 10G for data network (redundancy, NIC bonding, High bandwidth testing)

- Option II: 1x1G Control, 2x10G Data, 24 Port Switch

- Connectivity to networks is through VLANs on the Control NIC

- Data NIC used for VNF traffic and storage traffic segmented through VLANs

- Option III: 2x1G Control, 2x10G Data, 2x10G Storage, 24 Port Switch

- Data NIC used for VNF traffic

- Storage NIC used for control plane and Storage segmented through VLANs (separate host traffic from VNF)

- 1 x 1G for lights-out management

- 1 x 1G for Admin/PXE boot

- 2 x 10G for control-plane connectivity/storage

- 2 x 10G for data network
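To compare the three options at a glance, the NIC counts in the option headings can be tabulated as data. The structure and function names below are illustrative only; the counts and speeds are copied from the headings above, which group the lights-out and Admin/PXE ports under "control".

```python
# NIC ports per server for each option, as (count, speed in Gbit/s, role).
# Counts and speeds are copied from the option headings above.
NETWORK_OPTIONS = {
    "I": [(4, 1, "control"), (2, 10, "data")],
    "II": [(1, 1, "control"), (2, 10, "data")],
    "III": [(2, 1, "control"), (2, 10, "data"), (2, 10, "storage")],
}


def ports_per_server(option: str) -> int:
    """Total NIC ports one server needs under the given option."""
    return sum(count for count, _speed, _role in NETWORK_OPTIONS[option])


def bandwidth_by_role(option: str) -> dict:
    """Aggregate nominal bandwidth (Gbit/s) per traffic role."""
    totals = {}
    for count, speed, role in NETWORK_OPTIONS[option]:
        totals[role] = totals.get(role, 0) + count * speed
    return totals
```

For example, `ports_per_server("II")` gives 3, which reflects the trade-off described above: fewer NICs and switch ports in exchange for VLAN segmentation on shared interfaces.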

Documented configuration to include:

- Subnet, VLANs (may be constrained by existing lab setups or rules)

- IPs

- Types of network: lights-out, public, private, admin, storage

- There may be special network requirements for performance-related projects

- Default gateways
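One way to keep the documented configuration consistent is to record each network in a structured form and sanity-check it. The sketch below uses Python's standard `ipaddress` module; the `NetworkDoc` record, its fields and the example addresses used with it are hypothetical, not a Pharos format.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class NetworkDoc:
    """Hypothetical record for one documented network."""
    kind: str        # lights-out, public, private, admin or storage
    subnet: str      # CIDR notation, e.g. "10.20.0.0/24"
    vlan: int
    gateway: str
    host_ips: list


def problems(net: NetworkDoc) -> list:
    """Return inconsistencies found in one network record."""
    found = []
    subnet = ipaddress.ip_network(net.subnet)
    # 802.1Q VLAN IDs 0 and 4095 are reserved.
    if not 1 <= net.vlan <= 4094:
        found.append(f"VLAN {net.vlan} is outside 1-4094")
    if ipaddress.ip_address(net.gateway) not in subnet:
        found.append(f"gateway {net.gateway} is not in {subnet}")
    for ip in net.host_ips:
        if ipaddress.ip_address(ip) not in subnet:
            found.append(f"host {ip} is not in {subnet}")
    return found
```

Existing lab rules may constrain subnets and VLANs further, so a check like this catches only internal inconsistencies, not policy violations.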

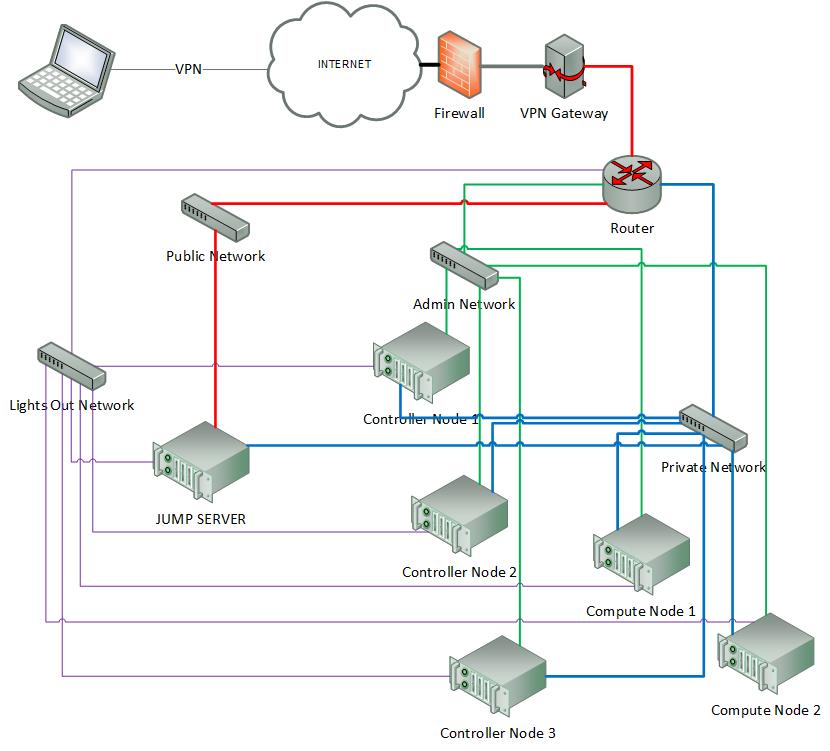

Sample Network Drawings

Download the Visio zip file here: opnfv-example-lab-diagram.vsdx.zip

Remote Management

Remote access is required for …

- Developers to access deploy/test environments (credentials to be issued per POD / user)

- Connection of each environment to Jenkins master hosted by Linux Foundation for automated deployment and test

OpenVPN is generally used for remote access; however, community-hosted labs may vary due to company security rules. For POD access rules and restrictions, refer to the individual lab documentation, as each company may have different access rules and acceptable usage policies.

Basic requirements:

- SSH sessions to be established (initially on the jump server)

- Packages to be installed on a system (tools or applications) by pulling from an external repo.

Firewall rules accommodate:

- SSH sessions

- Jenkins sessions
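A quick way to confirm that firewall rules allow SSH is to attempt a TCP connection to the jump server. The helper below is a minimal sketch: the function name is made up for illustration, and it assumes SSH listens on the default port 22, which an individual lab may change.

```python
import socket


def tcp_port_open(host: str, port: int = 22, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False
```

A successful connection shows only that the port is reachable; it does not verify credentials or that the listener is actually an SSH daemon.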

Lights-out management network requirements:

- Out-of-band management for power on/off/reset and bare-metal provisioning

- Access to server is through a lights-out-management tool and/or a serial console

- Refer to applicable lights-out management information from server manufacturer, such as ...
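Out-of-band power control over IPMI is often scripted with the `ipmitool` command-line utility. The sketch below only builds the command line and does not run it. It assumes `ipmitool` is the tool in use and that the BMC supports the `lanplus` (IPMI v2.0) interface; the function name, host address and user name are placeholders.

```python
import shlex

POWER_ACTIONS = {"on", "off", "reset", "status"}


def ipmi_power_command(bmc_host: str, user: str, action: str) -> list:
    """Build an ipmitool argv for a chassis power action over IPMI-over-LAN.

    The password is not placed on the command line: -E tells ipmitool to
    read it from the IPMI_PASSWORD environment variable.
    """
    if action not in POWER_ACTIONS:
        raise ValueError(f"unsupported power action: {action}")
    return [
        "ipmitool", "-I", "lanplus",
        "-H", bmc_host, "-U", user, "-E",
        "chassis", "power", action,
    ]


# Placeholder BMC address and user; print the command instead of running it.
print(shlex.join(ipmi_power_command("192.0.2.10", "admin", "status")))
```

Returning an argument list rather than a shell string keeps the command safe to pass to `subprocess.run` without shell quoting issues. Vendor-specific lights-out tools may offer equivalent operations through their own interfaces.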

Linux Foundation Lab is a UCS-M hardware environment with controlled access as needed

- Access rules and procedure are maintained on the Wiki

- A list of people with access is maintained on the Wiki

- Send access requests to infra-steering@lists.opnfv.org with the following information ...

- Name:

- Company:

- Approved Project:

- Project role:

- Why is access needed:

- How long is access needed (either a specified time period or define “done”):

- What specific POD/machines will be accessed:

- What support is needed from LF admins and LF community support team:

- Once access is approved please follow instructions for setting up VPN access ... https://wiki.opnfv.org/get_started/lflab_hosting

- The people who require VPN access must have a valid PGP key bearing a valid signature from LF

- When issuing OpenVPN credentials, LF will be sending TLS certificates and 2-factor authentication tokens, encrypted to each recipient’s PGP key