4. Component Level Architecture

4.1. Introduction

This chapter describes in detail the Kubernetes Reference Architecture in terms of the functional capabilities and how they relate to the Reference Model requirements, i.e. how the infrastructure profiles are determined, documented and delivered.

The specifications defined in this chapter are detailed with unique identifiers, which follow the pattern ra2.<section>.<index>, e.g. ra2.ch.001 for the first requirement in the Kubernetes Node section. These specifications will then be used as requirements input for the Kubernetes Reference Implementation and any vendor or community implementations.
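The identifier pattern can be checked mechanically. The following is a minimal sketch, assuming that `<section>` is a short lowercase token and `<index>` is a three-digit number, as in the identifiers that appear in this chapter; the function name `parse_spec_id` is hypothetical.

```python
import re

# Pattern from the text: ra2.<section>.<index>, e.g. ra2.ch.001.
# Assumption: <section> is lowercase alphanumeric and <index> is exactly
# three digits, matching identifiers such as ra2.k8s.001 and ra2.ntw.015.
SPEC_ID = re.compile(r"^ra2\.(?P<section>[a-z0-9]+)\.(?P<index>\d{3})$")


def parse_spec_id(identifier: str) -> tuple[str, int]:
    """Split a specification identifier into (section, index)."""
    match = SPEC_ID.match(identifier)
    if match is None:
        raise ValueError(f"not a valid RA2 specification identifier: {identifier!r}")
    return match.group("section"), int(match.group("index"))


if __name__ == "__main__":
    print(parse_spec_id("ra2.ch.001"))
```

A check like this could be run over a requirements export to catch identifiers damaged by line wrapping, such as a stray space inside the index.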

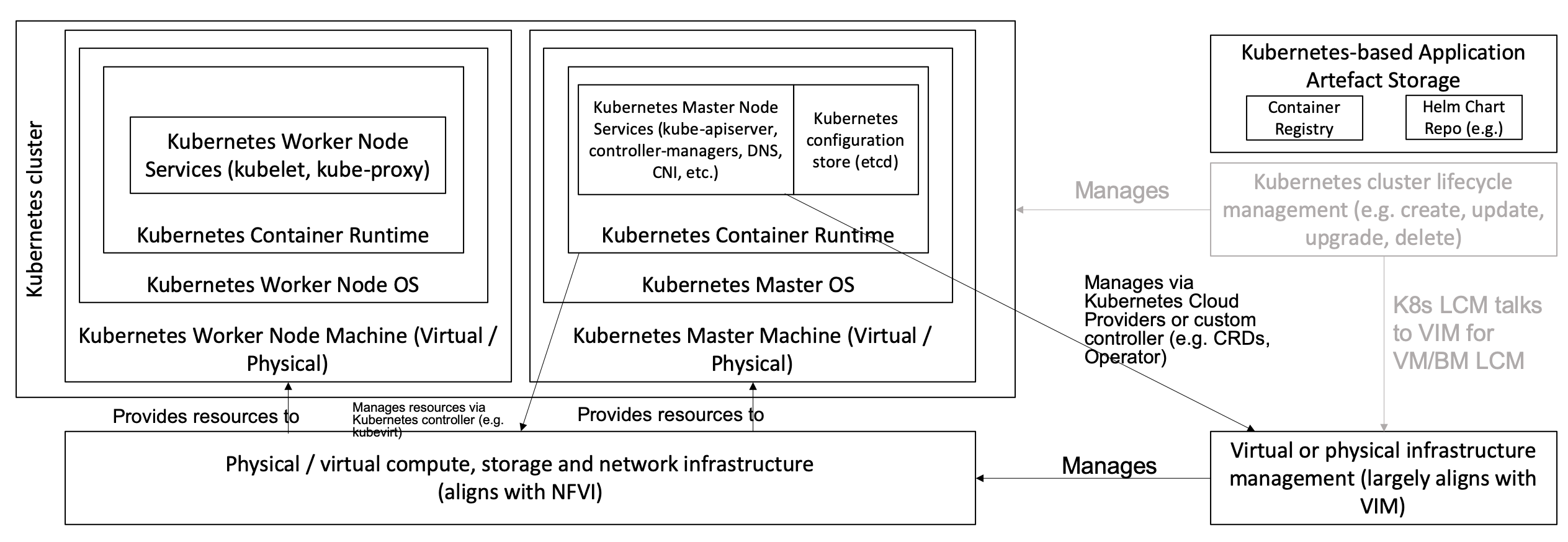

Figure 4-1 shows the architectural components that are described in the subsequent sections of this chapter.

Figure 4-1: Kubernetes Reference Architecture

4.2. Kubernetes Node

This section describes the configuration that will be applied to the physical or virtual machine and an installed Operating System. In order for a Kubernetes Node to be conformant with the Reference Architecture it must be implemented as per the following specifications:

| Ref | Specification | Details | Requirement Trace | Reference Implementation Trace |
|---|---|---|---|---|
| | Huge pages | When hosting workloads matching the High Performance profile, it must be possible to enable Huge pages (2048KiB and 1048576KiB) within the Kubernetes Node OS, exposing schedulable resources | infra.com.cfg.004 | RI2 4.3.1 |
| | SR-IOV capable NICs | When hosting workloads matching the High Performance profile, the physical machines on which the Kubernetes Nodes run must be equipped with NICs that are SR-IOV capable. | e.cap.013 | RI2 3.3 |
| | SR-IOV Virtual Functions | When hosting workloads matching the High Performance profile, SR-IOV virtual functions (VFs) must be configured within the Kubernetes Node OS, as the SR-IOV Device Plugin does not manage the creation of these VFs. | e.cap.013 | RI2 4.3.1 |
| | CPU Simultaneous Multi-Threading (SMT) | SMT must be enabled in the BIOS on the physical machine on which the Kubernetes Node runs. | infra.hw.cpu.cfg.004 | RI2 3.3 |
| | CPU Allocation Ratio - VMs | For Kubernetes nodes running as Virtual Machines, the CPU allocation ratio between vCPU and physical CPU core must be 1:1. | | |
| | CPU Allocation Ratio - Pods | To ensure the CPU allocation ratio between vCPU and physical CPU core is 1:1, the sum of CPU requests and limits by containers in Pod specifications must remain less than the allocatable quantity of CPU resources (i.e. | infra.com.cfg.001 | RI2 3.3 |
| | IPv6DualStack | To support IPv4/IPv6 dual stack networking, the Kubernetes Node OS must support and be allocated routable IPv4 and IPv6 addresses. | | |
| | Physical CPU Quantity | The physical machines on which the Kubernetes Nodes run must be equipped with at least 2 physical sockets, each with at least 20 CPU cores. | infra.hw.cpu.cfg.001, infra.hw.cpu.cfg.002 | RI2 3.3 |
| | Physical Storage | The physical machines on which the Kubernetes Nodes run should be equipped with Solid State Drives (SSDs). | infra.hw.stg.ssd.cfg.002 | RI2 3.3 |
| | Local Filesystem Storage Quantity | The Kubernetes Nodes must be equipped with local filesystem capacity of at least 320GB for unpacking and executing containers. Note, extra should be provisioned to cater for any overhead required by the Operating System and any required OS processes such as the container runtime, Kubernetes agents, etc. | e.cap.003 | RI2 3.3 |
| | Virtual Node CPU Quantity | If using VMs, the Kubernetes Nodes must be equipped with at least 16 vCPUs. Note, extra should be provisioned to cater for any overhead required by the Operating System and any required OS processes such as the container runtime, Kubernetes agents, etc. | e.cap.001 | |
| | Kubernetes Node RAM Quantity | The Kubernetes Nodes must be equipped with at least 32GB of RAM. Note, extra should be provisioned to cater for any overhead required by the Operating System and any required OS processes such as the container runtime, Kubernetes agents, etc. | e.cap.002 | RI2 3.3 |
| | Physical NIC Quantity | The physical machines on which the Kubernetes Nodes run must be equipped with at least four (4) Network Interface Card (NIC) ports. | infra.hw.nic.cfg.001 | RI2 3.3 |
| | Physical NIC Speed - Basic Profile | The speed of NIC ports housed in the physical machines on which the Kubernetes Nodes run for workloads matching the Basic Profile must be at least 10Gbps. | infra.hw.nic.cfg.002 | RI2 3.3 |
| | Physical NIC Speed - High Performance Profile | The speed of NIC ports housed in the physical machines on which the Kubernetes Nodes run for workloads matching the High Performance profile must be at least 25Gbps. | infra.hw.nic.cfg.002 | RI2 3.3 |
| | Physical PCIe slots | The physical machines on which the Kubernetes Nodes run must be equipped with at least eight (8) Gen3.0 PCIe slots, each with at least eight (8) lanes. | | |
| | Immutable infrastructure | Whether physical or virtual machines are used, the Kubernetes Node must not be changed after it is instantiated. New changes to the Kubernetes Node must be implemented as new Node instances. This covers any changes from BIOS through Operating System to running processes and all associated configurations. | req.gen.cnt.02 | RI2 4.3.1 |
| | NFD | Node Feature Discovery must be used to advertise the detailed software and hardware capabilities of each node in the Kubernetes Cluster. | tbd | RI2 4.3.1 |

Table 4-1: Node Specifications
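The numeric minimums in Table 4-1 lend themselves to an automated pre-check. The sketch below is illustrative only: the shape of the `node` record, its key names and the function `node_violations` are hypothetical, and physical and virtual quantities are combined in one record for brevity. The thresholds themselves are copied from the table.

```python
# Minimum quantities copied from Table 4-1. Key names are hypothetical.
MINIMUMS = {
    "ram_gb": 32,              # Kubernetes Node RAM Quantity
    "local_storage_gb": 320,   # Local Filesystem Storage Quantity
    "nic_ports": 4,            # Physical NIC Quantity
}
VM_MIN_VCPUS = 16              # Virtual Node CPU Quantity (VM-based nodes only)
NIC_SPEED_GBPS = {"basic": 10, "high-performance": 25}


def node_violations(node: dict, profile: str = "basic") -> list[str]:
    """List every Table 4-1 minimum that the described node falls below."""
    required = dict(MINIMUMS)
    required["nic_speed_gbps"] = NIC_SPEED_GBPS[profile]
    if node.get("is_vm", False):
        required["vcpus"] = VM_MIN_VCPUS
    return [
        f"{key}: {node.get(key, 0)} < {minimum}"
        for key, minimum in required.items()
        if node.get(key, 0) < minimum
    ]


if __name__ == "__main__":
    candidate = {"is_vm": True, "vcpus": 8, "ram_gb": 64,
                 "local_storage_gb": 500, "nic_ports": 4, "nic_speed_gbps": 10}
    for problem in node_violations(candidate, profile="high-performance"):
        print(problem)
```

Note that the table asks for extra capacity beyond these minimums to cover the Operating System, container runtime and Kubernetes agents, so passing this check is necessary rather than sufficient.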

4.3. Node Operating System

In order for a Host OS to be compliant with this Reference Architecture it must meet the following requirements:

| Ref | Specification | Details | Requirement Trace | Reference Implementation Trace |
|---|---|---|---|---|
| | Linux Distribution | A deb/rpm compatible distribution of Linux (this must be used for the master nodes, and can be used for worker nodes). | tbd | tbd |
| | Linux Kernel Version | A version of the Linux kernel that is compatible with kubeadm. This has been chosen as the baseline because kubeadm is focussed on installing and managing the lifecycle of Kubernetes and nothing else, hence it is easily integrated into higher-level and more complete tooling for the full lifecycle management of the infrastructure, cluster add-ons, etc. | tbd | tbd |
| | Windows Server | Windows Server (this can be used for worker nodes, but be aware of the limitations). | tbd | tbd |
| | Disposable OS | In order to support req.gen.cnt.02 (immutable infrastructure), the Host OS must be disposable, meaning the configuration of the Host OS (and associated infrastructure such as VM or bare metal server) must be consistent, e.g. the system software and configuration of that software must be identical apart from those areas of configuration that must be different such as IP addresses and hostnames. | tbd | tbd |
| | Automated Deployment | This approach to configuration management supports req.lcm.gen.01 (automated deployments) | tbd | tbd |

Table 4-2: Operating System Requirements

Table 4-3 lists the kernel versions that comply with this Reference Architecture specification.

| OS Family | Kernel Version(s) | Notes |
|---|---|---|
| Linux | 3.10+ | |
| Windows | 1809 (10.0.17763) | For worker nodes only |

Table 4-3: Operating System Versions
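The "3.10+" entry for Linux has to be compared numerically, not as text, because the string "3.9" sorts after "3.10". A minimal sketch of such a comparison follows; the function name `linux_kernel_ok` is hypothetical, and the input is assumed to be a kernel release string of the kind returned by `uname -r` or Python's `platform.release()`.

```python
import re

MIN_LINUX_KERNEL = (3, 10)  # from Table 4-3


def linux_kernel_ok(release: str) -> bool:
    """Return True when a kernel release string is at least 3.10."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    # Compare (major, minor) as integers so that 3.9 < 3.10 holds.
    return (int(match.group(1)), int(match.group(2))) >= MIN_LINUX_KERNEL


if __name__ == "__main__":
    import platform
    print(platform.release(), linux_kernel_ok(platform.release()))
```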

4.4. Kubernetes

In order for the Kubernetes components to be conformant with the Reference Architecture they must be implemented as per the following specifications:

| Ref | Specification | Details | Requirement Trace | Reference Implementation Trace |
|---|---|---|---|---|
| ra2.k8s.001 | Kubernetes Conformance | The Kubernetes distribution, product, or installer used in the implementation must be listed in the Kubernetes Distributions and Platforms document and marked (X) as conformant for the Kubernetes version defined in Required versions of most important components. | req.gen.cnt.03 | RI2 4.3.1 |
| ra2.k8s.002 | Highly available etcd | An implementation must consist of either three, five or seven nodes running the etcd service (can be colocated on the master nodes, or can run on separate nodes, but not on worker nodes). | req.gen.rsl.02, req.gen.avl.01 | RI2 4.3.1 |
| ra2.k8s.003 | Highly available control plane | An implementation must consist of at least one master node per availability zone or fault domain to ensure the high availability and resilience of the Kubernetes control plane services. | | |
| ra2.k8s.012 | Control plane services | A master node must run at least the following Kubernetes control plane services: | req.gen.rsl.02, req.gen.avl.01 | RI2 4.3.1 |
| ra2.k8s.004 | Highly available worker nodes | An implementation must consist of at least one worker node per availability zone or fault domain to ensure the high availability and resilience of workloads managed by Kubernetes | req.gen.rsl.01, req.gen.avl.01, req.kcm.gen.02, req.inf.com.02 | |
| ra2.k8s.005 | Kubernetes API Version | In alignment with the Kubernetes version support policy, an implementation must use a Kubernetes version as per the subcomponent versions table in Required versions of most important components. | | |
| ra2.k8s.006 | NUMA Support | When hosting workloads matching the High Performance profile, the | e.cap.007, infra.com.cfg.002, infra.hw.cpu.cfg.003 | |
| ra2.k8s.007 | DevicePlugins Feature Gate | When hosting workloads matching the High Performance profile, the DevicePlugins feature gate must be enabled (note, this is enabled by default in Kubernetes v1.10 or later). | Various, e.g. e.cap.013 | RI2 4.3.1 |
| ra2.k8s.008 | System Resource Reservations | To avoid resource starvation issues on nodes, the implementation of the architecture must reserve compute resources for system daemons and Kubernetes system daemons such as kubelet, container runtime, etc. Use the following kubelet flags: | i.cap.014 | |
| ra2.k8s.009 | CPU Pinning | When hosting workloads matching the High Performance profile, in order to support CPU Pinning, the kubelet must be started with the | infra.com.cfg.003 | |
| ra2.k8s.010 | IPv6DualStack | To support IPv6 and IPv4, the | req.inf.ntw.04 | |
| ra2.k8s.011 | Anuket profile labels | To clearly identify which worker nodes are compliant with the different profiles defined by Anuket the worker nodes must be labelled according to the following pattern: an | | |
| ra2.k8s.012 | Kubernetes APIs | Kubernetes Alpha APIs are recommended only for testing, therefore all Alpha APIs must be disabled. | | |
| ra2.k8s.013 | Kubernetes APIs | Backward compatibility of all supported GA APIs of Kubernetes must be supported. | | |
| ra2.k8s.014 | Security Groups | Kubernetes must support the NetworkPolicy feature. | | |
| ra2.k8s.015 | Publishing Services (ServiceTypes) | Kubernetes must support LoadBalancer Publishing Service (ServiceTypes). | | |
| ra2.k8s.016 | Publishing Services (ServiceTypes) | Kubernetes must support Ingress. | | |
| ra2.k8s.017 | Publishing Services (ServiceTypes) | Kubernetes should support NodePort Publishing Service (ServiceTypes). | req.inf.ntw.17 | |
| ra2.k8s.018 | Publishing Services (ServiceTypes) | Kubernetes should support ExternalName Publishing Service (ServiceTypes). | | |
| ra2.k8s.019 | Kubernetes APIs | Kubernetes Beta APIs must be supported only when a stable GA of the same version doesn’t exist. | req.int.api.04 | |

Table 4-4: Kubernetes Specifications
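The restriction to three, five or seven etcd nodes in ra2.k8s.002 follows from majority quorum: an even-sized cluster tolerates no more failures than the odd-sized cluster one member smaller. The arithmetic can be sketched as follows; the function names are hypothetical, and the quorum rule assumed here is a simple majority of members.

```python
ALLOWED_ETCD_SIZES = (3, 5, 7)  # from ra2.k8s.002


def etcd_quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def etcd_fault_tolerance(members: int) -> int:
    """Number of members that can fail while a majority remains."""
    return members - etcd_quorum(members)


if __name__ == "__main__":
    for size in range(3, 8):
        marker = "allowed" if size in ALLOWED_ETCD_SIZES else "not listed"
        print(size, etcd_quorum(size), etcd_fault_tolerance(size), marker)
```

Running the loop shows that four members tolerate one failure, the same as three, and six tolerate two, the same as five, which is why only the odd sizes are useful.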

4.5. Container runtimes

| Ref | Specification | Details | Requirement Trace | Reference Implementation Trace |
|---|---|---|---|---|
| ra2.crt.001 | Conformance with OCI 1.0 runtime spec | The container runtime must be implemented as per the OCI 1.0 (Open Container Initiative 1.0) specification. | req.gen.ost.01 | RI2 4.3.1 |
| ra2.crt.002 | Kubernetes Container Runtime Interface (CRI) | The Kubernetes container runtime must be implemented as per the Kubernetes Container Runtime Interface (CRI) | req.gen.ost.01 | RI2 4.3.1 |

Table 4-5: Container Runtime Specifications

4.6. Networking solutions

In order for the networking solution(s) to be conformant with the Reference Architecture they must be implemented as per the following specifications:

| Ref | Specification | Details | Requirement Trace | Reference Implementation Trace |
|---|---|---|---|---|
| ra2.ntw.001 | Centralised network administration | The networking solution deployed within the implementation must be administered through the Kubernetes API using native Kubernetes API resources and objects, or Custom Resources. | req.inf.ntw.03 | RI2 4.3.1 |
| ra2.ntw.002 | Default Pod Network - CNI | The networking solution deployed within the implementation must use a CNI-conformant Network Plugin for the Default Pod Network, as the alternative (kubenet) does not support cross-node networking or Network Policies. | req.gen.ost.01, req.inf.ntw.08 | RI2 4.3.1 |
| ra2.ntw.003 | Multiple connection points | The networking solution deployed within the implementation must support the capability to connect at least FIVE connection points to each Pod, which are additional to the default connection point managed by the default Pod network CNI plugin. | e.cap.004 | RI2 4.3.1 |
| ra2.ntw.004 | Multiple connection points presentation | The networking solution deployed within the implementation must ensure that all additional non-default connection points are requested by Pods using standard Kubernetes resource scheduling mechanisms such as annotations or container resource requests and limits. | req.inf.ntw.03 | RI2 4.3.1 |
| ra2.ntw.005 | Multiplexer / meta-plugin | The networking solution deployed within the implementation may use a multiplexer/meta-plugin. | req.inf.ntw.06, req.inf.ntw.07 | RI2 4.3.1 |
| ra2.ntw.006 | Multiplexer / meta-plugin CNI Conformance | If used, the selected multiplexer/meta-plugin must integrate with the Kubernetes control plane via CNI. | req.gen.ost.01 | RI2 4.3.1 |
| ra2.ntw.007 | Multiplexer / meta-plugin CNI Plugins | If used, the selected multiplexer/meta-plugin must support the use of multiple CNI-conformant Network Plugins. | req.gen.ost.01, req.inf.ntw.06 | RI2 4.3.1 |
| ra2.ntw.008 | SR-IOV Device Plugin for High Performance | When hosting workloads that match the High Performance profile and require SR-IOV acceleration, a Device Plugin for SR-IOV must be used to configure the SR-IOV devices and advertise them to the | e.cap.013 | RI2 4.3.1 |
| ra2.ntw.009 | Multiple connection points with multiplexer / meta-plugin | When a multiplexer/meta-plugin is used, the additional non-default connection points must be managed by a CNI-conformant Network Plugin. | req.gen.ost.01 | RI2 4.3.1 |
| ra2.ntw.010 | User plane networking | When hosting workloads matching the High Performance profile, CNI network plugins that support the use of DPDK, VPP, and/or SR-IOV must be deployed as part of the networking solution. | infra.net.acc.cfg.001 | RI2 4.3.1 |
| ra2.ntw.011 | NATless connectivity | When hosting workloads that require source and destination IP addresses to be preserved in the traffic headers, a NATless CNI plugin that exposes the pod IP directly to the external networks (e.g. Calico, MACVLAN or IPVLAN CNI plugins) must be used. | req.inf.ntw.14 | |
| ra2.ntw.012 | Device Plugins | When hosting workloads matching the High Performance profile that require the use of FPGA, SR-IOV or other Acceleration Hardware, a Device Plugin for that FPGA or Acceleration Hardware must be used. | e.cap.016, e.cap.013 | RI2 4.3.1 |
| ra2.ntw.013 | Dual stack CNI | The networking solution deployed within the implementation must use a CNI-conformant Network Plugin that is able to support dual-stack IPv4/IPv6 networking. | req.inf.ntw.04 | |
| ra2.ntw.014 | Security Groups | The networking solution deployed within the implementation must support network policies. | infra.net.cfg.004 | |
| ra2.ntw.015 | IPAM plugin for multiplexer | When a multiplexer/meta-plugin is used, a CNI-conformant IPAM Network Plugin must be installed to allocate IP addresses for secondary network interfaces across all nodes of the cluster. | req.inf.ntw.10 | |

Table 4-6: Networking Solution Specifications
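As an illustration of ra2.ntw.003 and ra2.ntw.004, the sketch below attaches five additional connection points to a Pod manifest through an annotation. It assumes a meta-plugin that follows the Network Plumbing Working Group convention (for example Multus), where the `k8s.v1.cni.cncf.io/networks` annotation carries a comma-separated list of network attachment names. The specification above does not name a particular meta-plugin, so this choice, the function name and the network names are all assumptions.

```python
import copy

# Assumption: the meta-plugin reads this annotation (Network Plumbing
# Working Group convention). Other solutions may use a different key.
NETWORKS_ANNOTATION = "k8s.v1.cni.cncf.io/networks"


def with_additional_networks(pod: dict, networks: list[str]) -> dict:
    """Return a copy of a Pod manifest that requests the given extra networks."""
    result = copy.deepcopy(pod)
    annotations = result.setdefault("metadata", {}).setdefault("annotations", {})
    annotations[NETWORKS_ANNOTATION] = ",".join(networks)
    return result


if __name__ == "__main__":
    base = {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": "cnf-0"}}
    # Hypothetical attachment names; five are used to mirror ra2.ntw.003.
    extra = ["sig-a", "sig-b", "media-a", "media-b", "oam"]
    print(with_additional_networks(base, extra)["metadata"]["annotations"])
```

The default connection point is not listed in the annotation, since it is managed by the default Pod network CNI plugin.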

4.7. Storage components

In order for the storage solutions to be conformant with the Reference Architecture they must be implemented as per the following specifications:

| Ref | Specification | Details | Requirement Trace | Reference Implementation Trace |
|---|---|---|---|---|
| ra2.stg.001 | Ephemeral Storage | An implementation must support ephemeral storage, for the unpacked container images to be stored and executed from, as a directory in the filesystem on the worker node on which the container is running. See the Container runtimes section above for more information on how this meets the requirement for ephemeral storage for containers. | | |
| ra2.stg.002 | Kubernetes Volumes | An implementation may attach additional storage to containers using Kubernetes Volumes. | | |
| ra2.stg.003 | Kubernetes Volumes | An implementation may use Volume Plugins (see | | |
| ra2.stg.004 | Persistent Volumes | An implementation may support Kubernetes Persistent Volumes (PV) to provide persistent storage for Pods. Persistent Volumes exist independent of the lifecycle of containers and/or pods. | req.inf.stg.01 | |
| ra2.stg.005 | Storage Volume Types | An implementation must support the following Volume types: | | |
| ra2.stg.006 | Container Storage Interface (CSI) | An implementation may support the Container Storage Interface (CSI), an Out-of-tree plugin. In order to support CSI, the feature gates | | |
| ra2.stg.007 | | An implementation should use Kubernetes Storage Classes to support automation and the separation of concerns between providers of a service and consumers of the service. | | |

Table 4-7: Storage Solution Specifications
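To illustrate the separation of concerns described in ra2.stg.007, the sketch below builds a PersistentVolumeClaim manifest that names a Storage Class instead of a specific volume. The consumer states only the class and the size, and the provider decides how that class is backed. The helper name, the claim name and the class name `fast-ssd` are hypothetical.

```python
import json


def persistent_volume_claim(name: str, storage_class: str, size: str) -> dict:
    """Build a PersistentVolumeClaim manifest that refers to a Storage Class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,  # chosen by the consumer
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical names; the JSON output can be applied like a YAML manifest.
    print(json.dumps(persistent_volume_claim("cnf-data", "fast-ssd", "10Gi"), indent=2))
```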

A note on object storage:

This Reference Architecture does not include any specifications for object storage, as this is neither a native Kubernetes object, nor something that is required by CSI drivers. Object storage is an application-level requirement that would ordinarily be provided by a highly scalable service offering rather than being something an individual Kubernetes cluster could offer.

Todo: specifications/commentary to support req.inf.stg.04 (SDS) and req.inf.stg.05 (high performance and horizontally scalable storage). Also req.sec.gen.06 (storage resource isolation), req.sec.gen.10 (CIS - if applicable) and req.sec.zon.03 (data encryption at rest).

4.8. Service meshes

Application service meshes are not in scope for the architecture. A service mesh is a dedicated infrastructure layer for handling service-to-service communication, and its use is recommended to secure service-to-service communications within a cluster and to reduce the attack surface. The benefits of the service mesh framework are described in Use Transport Layer Security and Service Mesh. In addition to securing communications, a service mesh extends Kubernetes capabilities regarding observability and reliability.

Network service mesh specifications are handled in section 4.6 Networking solutions.

4.9. Kubernetes Application package manager

In order for the application package managers to be conformant with the Reference Architecture they must be implemented as per the following specifications:

| Ref | Specification | Details | Requirement Trace | Reference Implementation Trace |
|---|---|---|---|---|
| ra2.pkg.001 | API-based package management | A package manager must use the Kubernetes APIs to manage application artifacts. Cluster-side components such as Tiller are not supported. | req.int.api.02 | |
| ra2.pkg.002 | Helm version 3 | All workloads must be packaged using Helm (version 3) charts. | | |

Helm version 3 has been chosen as the Application packaging mechanism to ensure compliance with the ONAP ASD NF descriptor specification and ETSI SOL0001 rel. 4 MCIOP specification.

Table 4-8: Kubernetes Application Package Manager Specifications

4.10. Kubernetes workloads

In order for the Kubernetes workloads to be conformant with the Reference Architecture they must be implemented as per the following specifications:

| Ref | Specification | Details | Requirement Trace | Reference Implementation Trace |
|---|---|---|---|---|
| ra2.app.001 | Root Parameter Group (OCI Spec) | Specifies the container’s root filesystem. | TBD | N/A |
| ra2.app.002 | Mounts Parameter Group (OCI Spec) | Specifies additional mounts beyond root. | TBD | N/A |
| ra2.app.003 | Process Parameter Group (OCI Spec) | Specifies the container process. | TBD | N/A |
| ra2.app.004 | Hostname Parameter Group (OCI Spec) | Specifies the container’s hostname as seen by processes running inside the container. | TBD | N/A |
| ra2.app.005 | User Parameter Group (OCI Spec) | User for the process is a platform-specific structure that allows specific control over which user the process runs as. | TBD | N/A |
| ra2.app.006 | Consumption of additional, non-default connection points | Any additional non-default connection points must be requested through the use of workload annotations or resource requests and limits within the container spec passed to the Kubernetes API Server. | req.int.api.01 | N/A |
| ra2.app.007 | Host Volumes | Workloads should not use | req.kcm.gen.02 | N/A |
| ra2.app.008 | Infrastructure dependency | Workloads must not rely on the availability of the master nodes for the successful execution of their functionality (i.e. loss of the master nodes may affect non-functional behaviours such as healing and scaling, but components that are already running will continue to do so without issue). | TBD | N/A |
| ra2.app.009 | Device plugins | Workload descriptors must use the resources advertised by the device plugins to indicate their need for an FPGA, SR-IOV or other acceleration device. | TBD | N/A |
| ra2.app.010 | Node Feature Discovery (NFD) | Workload descriptors must use the labels advertised by Node Feature Discovery to indicate which node software or hardware features they need. | TBD | N/A |
| ra2.app.011 | Published helm chart | Helm charts of the CNF must be published into a helm registry and must not be used from local copies. | N/A | |
| ra2.app.012 | Valid Helm chart | Helm charts of the CNF must be valid and should pass the helm lint validation. | N/A | |
| ra2.app.013 | Rolling update | Rolling update of the CNF must be possible using Kubernetes deployments. | N/A | |
| ra2.app.014 | Rolling downgrade | Rolling downgrade of the CNF must be possible using Kubernetes deployments. | N/A | |
| ra2.app.015 | CNI compatibility | The CNF must use CNI compatible networking plugins. | N/A | |
| ra2.app.016 | Kubernetes API stability | The CNF must not use any Kubernetes alpha APIs. | N/A | |
| ra2.app.017 | CNF resiliency (node drain) | The CNF must not lose data, must continue to run, and its readiness probe outcome must be Success even when a node drain and rescheduling occur. | N/A | |
| ra2.app.018 | CNF resiliency (network latency) | The CNF must not lose data, must continue to run, and its readiness probe outcome must be Success even when network latency of up to 2000 ms occurs. | N/A | |
| ra2.app.019 | CNF resiliency (pod delete) | The CNF must not lose data, must continue to run, and its readiness probe outcome must be Success even when a pod delete occurs. | N/A | |
| ra2.app.020 | CNF resiliency (pod memory hog) | The CNF must not lose data, must continue to run, and its readiness probe outcome must be Success even when a pod memory hog occurs. | N/A | |
| ra2.app.021 | CNF resiliency (pod I/O stress) | The CNF must not lose data, must continue to run, and its readiness probe outcome must be Success even when pod I/O stress occurs. | N/A | |
| ra2.app.022 | CNF resiliency (pod network corruption) | The CNF must not lose data, must continue to run, and its readiness probe outcome must be Success even when pod network corruption occurs. | N/A | |
| ra2.app.023 | CNF resiliency (pod network duplication) | The CNF must not lose data, must continue to run, and its readiness probe outcome must be Success even when pod network duplication occurs. | N/A | |
| ra2.app.024 | CNF resiliency (pod DNS error) | The CNF must not lose data, must continue to run, and its readiness probe outcome must be Success even when a pod DNS error occurs. | N/A | |
| ra2.app.025 | CNF local storage | The CNF must not use local storage. | N/A | |
| ra2.app.026 | Liveness probe | All Pods of the CNF must have livenessProbe defined. | N/A | |
| ra2.app.027 | Readiness probe | All Pods of the CNF must have readinessProbe defined. | N/A | |
| ra2.app.028 | No access to container daemon sockets | The CNF must not have any of the container daemon sockets (e.g.: /var/run/docker.sock, /var/run/containerd.sock or /var/run/crio.sock) mounted. | N/A | |
| ra2.app.029 | No automatic service account mapping | Non specified service accounts must not be automatically mapped. To prevent this, the automountServiceAccountToken: false flag must be set in all Pods of the CNF. | N/A | |
| ra2.app.030 | No host network access | Host network must not be attached to any of the Pods of the CNF. The hostNetwork attribute of the Pod specifications must be false or not specified. | N/A | |
| ra2.app.031 | Host process namespace separation | Pods of the CNF must not share the host process ID namespace or the host IPC namespace. Pod manifests must not have the hostPID or the hostIPC attribute set to true. | N/A | |
| ra2.app.032 | Resource limits | All containers and namespaces of the CNF must have defined resource limits for at least CPU and memory resources. | N/A | |
| ra2.app.033 | Read only filesystem | All containers of the CNF must have a read only filesystem. The readOnlyRootFilesystem attribute in the securityContext of the containers should be set to true. | N/A | |
| ra2.app.034 | Container image tags | All container images referred to in the Pod manifests must be referenced by a version tag pointing to a concrete version of the image. The latest tag must not be used. | N/A | |
| ra2.app.035 | No hardcoded IP addresses | The CNF must not have any hardcoded IP addresses in its Pod specifications. | N/A | |
| ra2.app.036 | No node ports | Service declarations of the CNF must not contain a nodePort definition. | N/A | |
| ra2.app.037 | Immutable config maps | ConfigMaps used by the CNF must be immutable. | N/A | |
| ra2.app.038 | Horizontal scaling | Increasing and decreasing of the CNF capacity should be implemented using horizontal scaling. If horizontal scaling is supported, automatic scaling must be possible using the Kubernetes Horizontal Pod Autoscaler (HPA) feature. | TBD | N/A |
| ra2.app.039 | CNF image size | The different container images of the CNF should not be bigger than 5GB. | N/A | |
| ra2.app.040 | CNF startup time | Startup time of the Pods of a CNF should not be more than 60s, where startup time is the time between starting the Pod and the readiness probe outcome becoming Success. | N/A | |
| ra2.app.041 | No privileged mode | None of the Pods of the CNF should run in privileged mode. | N/A | |
| ra2.app.042 | No root user | None of the Pods of the CNF should run as a root user. | N/A | |
| ra2.app.043 | No privilege escalation | None of the containers of the CNF should allow privilege escalation. | N/A | |
| ra2.app.044 | Non-root user | All Pods of the CNF should be able to execute with a non-root user having a non-root group. Both runAsUser and runAsGroup attributes should be set to a value greater than 999. | N/A | |
| ra2.app.045 | Labels | Pods of the CNF should define at least the following labels: app.kubernetes.io/name, app.kubernetes.io/version and app.kubernetes.io/part-of | N/A | |

Table 4-9: Kubernetes Workload Specifications
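Several of the workload specifications in Table 4-9 can be checked statically against a Pod manifest. The following is a minimal sketch, not a conformance tool: it covers only a subset of the rows, treats the manifest as a plain dictionary, and the function names are hypothetical. As a design choice of this sketch, a missing `automountServiceAccountToken`, `readOnlyRootFilesystem` or `allowPrivilegeEscalation` setting is reported, because the check requires an explicit value.

```python
import re

ALPHA_API = re.compile(r"v\d+alpha\d+")  # e.g. v1alpha1


def image_tag(image: str) -> str:
    """Return the tag (or digest) of an image reference, or "" when absent."""
    if "@" in image:  # pinned by digest, treated here as a concrete version
        return image.split("@", 1)[1]
    last_segment = image.rsplit("/", 1)[-1]  # skip any registry host:port part
    return last_segment.split(":", 1)[1] if ":" in last_segment else ""


def lint_pod(manifest: dict) -> list[str]:
    """Return the Table 4-9 references that a Pod manifest appears to violate."""
    findings = []
    spec = manifest.get("spec", {})

    if ALPHA_API.search(manifest.get("apiVersion", "")):
        findings.append("ra2.app.016")
    if spec.get("automountServiceAccountToken") is not False:
        findings.append("ra2.app.029")
    if spec.get("hostNetwork", False):
        findings.append("ra2.app.030")
    if spec.get("hostPID", False) or spec.get("hostIPC", False):
        findings.append("ra2.app.031")

    for container in spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        security = container.get("securityContext", {})
        limits = container.get("resources", {}).get("limits", {})
        # Each entry is (reference, True when the container passes the check).
        checks = [
            ("ra2.app.026", "livenessProbe" in container),
            ("ra2.app.027", "readinessProbe" in container),
            ("ra2.app.032", "cpu" in limits and "memory" in limits),
            ("ra2.app.033", security.get("readOnlyRootFilesystem") is True),
            ("ra2.app.034", image_tag(container.get("image", "")) not in ("", "latest")),
            ("ra2.app.041", not security.get("privileged", False)),
            ("ra2.app.043", security.get("allowPrivilegeEscalation") is False),
        ]
        findings += [f"{ref} ({name})" for ref, passed in checks if not passed]
    return findings


if __name__ == "__main__":
    pod = {"apiVersion": "v1", "kind": "Pod",
           "spec": {"hostNetwork": True,
                    "containers": [{"name": "app", "image": "nginx:latest"}]}}
    for finding in lint_pod(pod):
        print(finding)
```

Behavioural rows such as the resiliency, startup time and image size specifications cannot be verified from a manifest alone and need runtime testing.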

4.11. Additional required components

This section should list any additional components needed to provide the services defined in Chapter 3.2 (e.g., Prometheus).